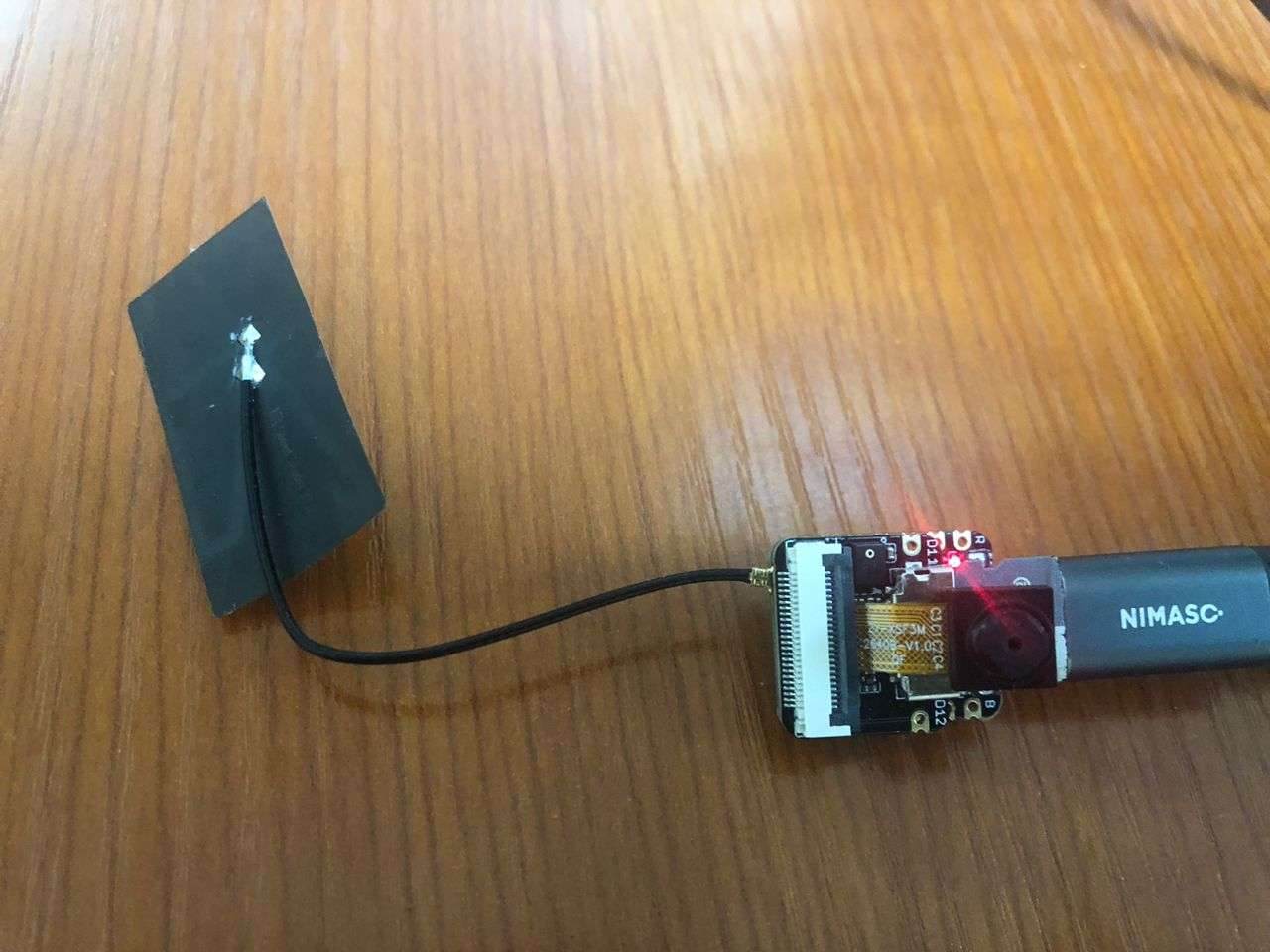

This week I explored how to implement TinyML on the XIAO ESP32S3 Sense camera module. The objective of this exercise is to use the module to detect the lines on a palm.

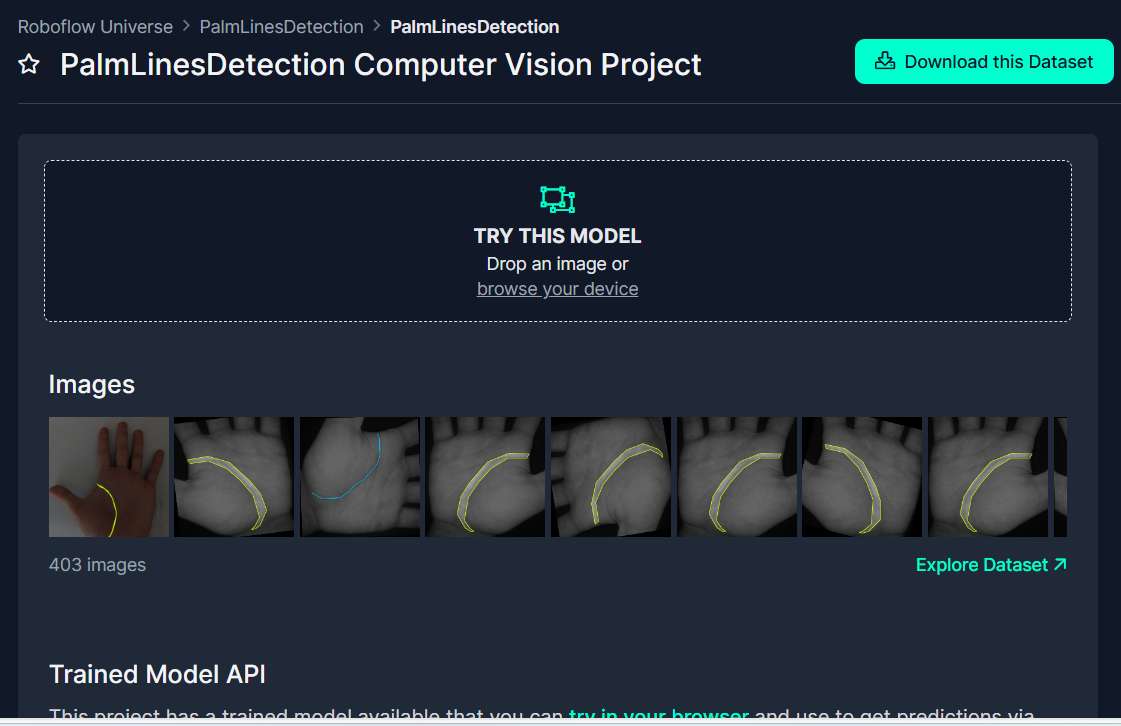

To get started, I browsed different dataset sources such as Kaggle and Roboflow. I then came across this dataset on Roboflow: https://universe.roboflow.com/palmlinesdetection/palmlinesdetection

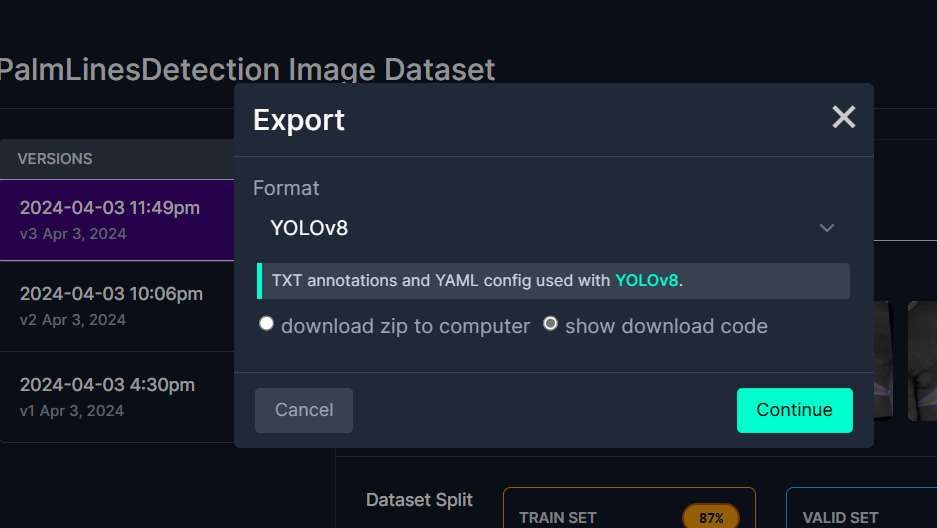

The dataset has 3 classes (line1, line2 and line3), corresponding to the lines on the palm. I downloaded it in YOLO format.
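To make the YOLO format a bit more concrete, here is a minimal sketch of how one label line can be turned into a pixel bounding box. It assumes the usual YOLO detection layout (`class x_center y_center width height`, all normalised to 0..1). The label line and the 192x192 image size are made-up values for illustration, not taken from the dataset.

```python
def yolo_to_pixel_box(label_line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels."""
    parts = label_line.split()
    class_id = int(parts[0])
    # YOLO detection labels store the box centre and size, normalised to 0..1
    cx, cy, w, h = (float(v) for v in parts[1:5])
    x_min = (cx - w / 2) * img_w
    y_min = (cy - h / 2) * img_h
    x_max = (cx + w / 2) * img_w
    y_max = (cy + h / 2) * img_h
    return class_id, x_min, y_min, x_max, y_max


# Hypothetical label line: class 0 with a box centred in the image
print(yolo_to_pixel_box("0 0.5 0.5 0.2 0.4", 192, 192))
```

Which class index corresponds to line1, line2 or line3 depends on the order of the names in the dataset's data.yaml.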

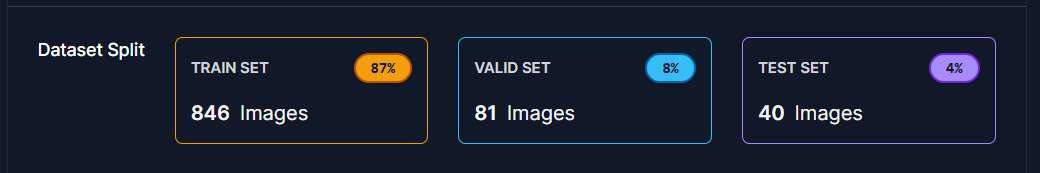

It is split into 3 image sets: training, testing and validation.

The dataset contains the following:

The data.yaml file defines the classes and the locations of the train, test and valid image sets.
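A quick sanity check before training is to count how many images ended up in each split. The sketch below assumes the download unpacks into `train/images`, `valid/images` and `test/images` folders. That layout is an assumption on my part, so adjust the folder names to whatever data.yaml actually points to. The `count_images` helper is hypothetical and uses only the standard library.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(dataset_root, splits=("train", "valid", "test")):
    """Return {split: number of image files} for an assumed <split>/images layout."""
    counts = {}
    for split in splits:
        image_dir = Path(dataset_root) / split / "images"
        if image_dir.is_dir():
            counts[split] = sum(
                1 for p in image_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
            )
        else:
            # Missing folder: report zero rather than fail
            counts[split] = 0
    return counts


if __name__ == "__main__":
    # "." is a placeholder for wherever the dataset was unzipped
    print(count_images("."))
```

If one of the splits reports zero, the paths in data.yaml are worth checking before starting a training run.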

The idea is to train a YOLOv8 model on the dataset and convert it into TensorFlow Lite format. The .tflite file is then uploaded through the SenseCraft platform.

I downloaded and installed YOLO through the Ultralytics package.

Training script:

    from ultralytics import YOLO

    # Load a pretrained model (recommended for training)
    model = YOLO('yolov8n.pt')

    # Train the model
    results = model.train(data='data.yaml', epochs=10, device=0, imgsz=192)

    # Export the model as TFLite
    model.export(format="tflite")

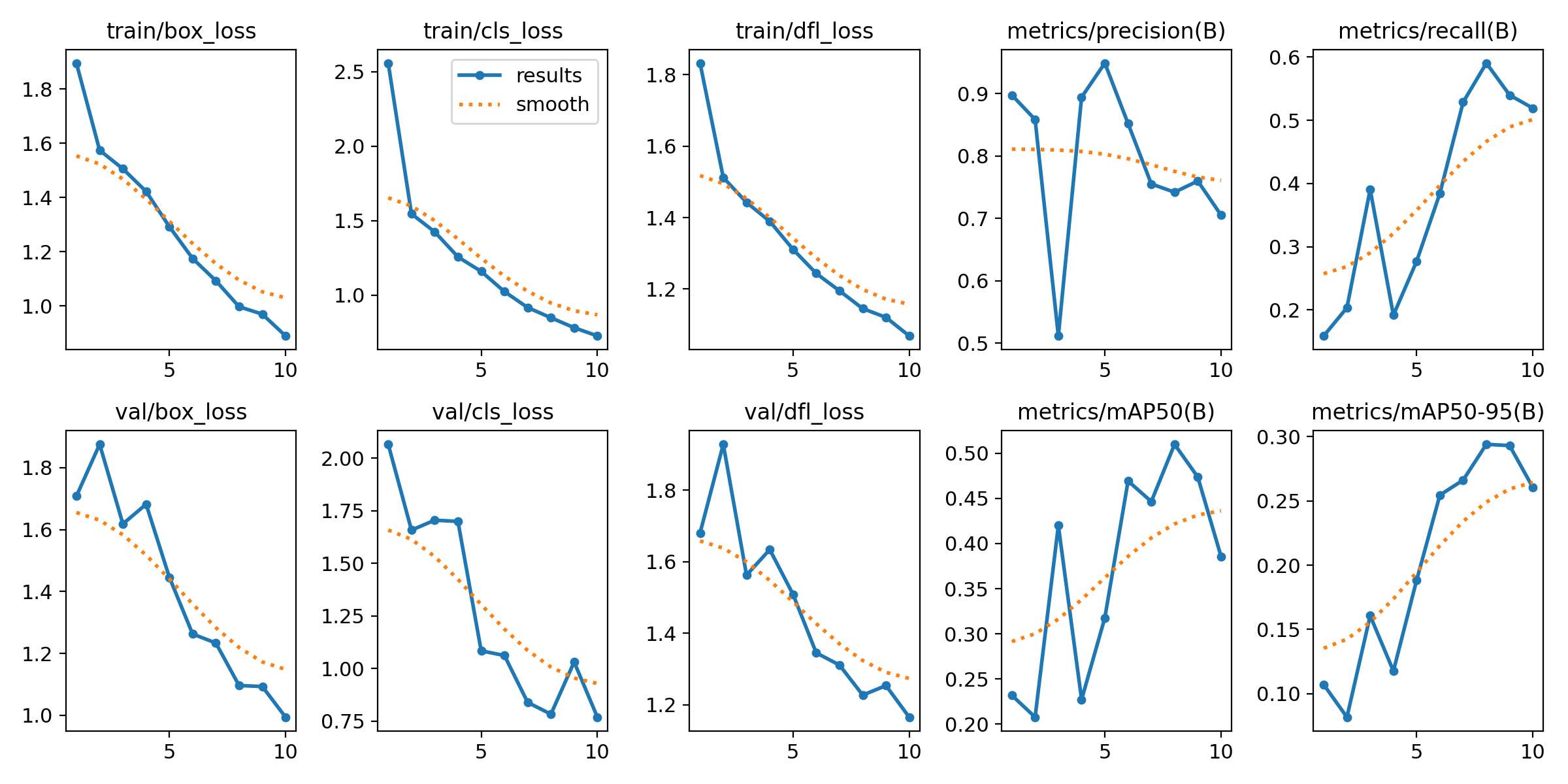

I used a pretrained YOLOv8 model and retrained it on my dataset. I then exported the resulting model as .tflite for uploading to the XIAO ESP32S3 module.

Training parameters:

Video:

Training Result:

I used the OpenCV library to read frames from my webcam. I then passed each frame through the model I trained earlier to detect palm lines.

app.py:

    import cv2
    from ultralytics import YOLO

    # Load the weights produced by the training run
    model = YOLO('./runs/detect/train4/weights/best.pt')

    # 0 selects the default webcam
    video_path = 0
    cap = cv2.VideoCapture(video_path)

    while cap.isOpened():
        success, frame = cap.read()
        if success:
            # Run detection on the frame and draw the predictions
            results = model(frame)
            annotated_frame = results[0].plot()
            cv2.imshow("Cam Feed", annotated_frame)

            # Press "q" to quit
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
        else:
            # Stop if no frame could be read
            break

    cap.release()
    cv2.destroyAllWindows()

Result:

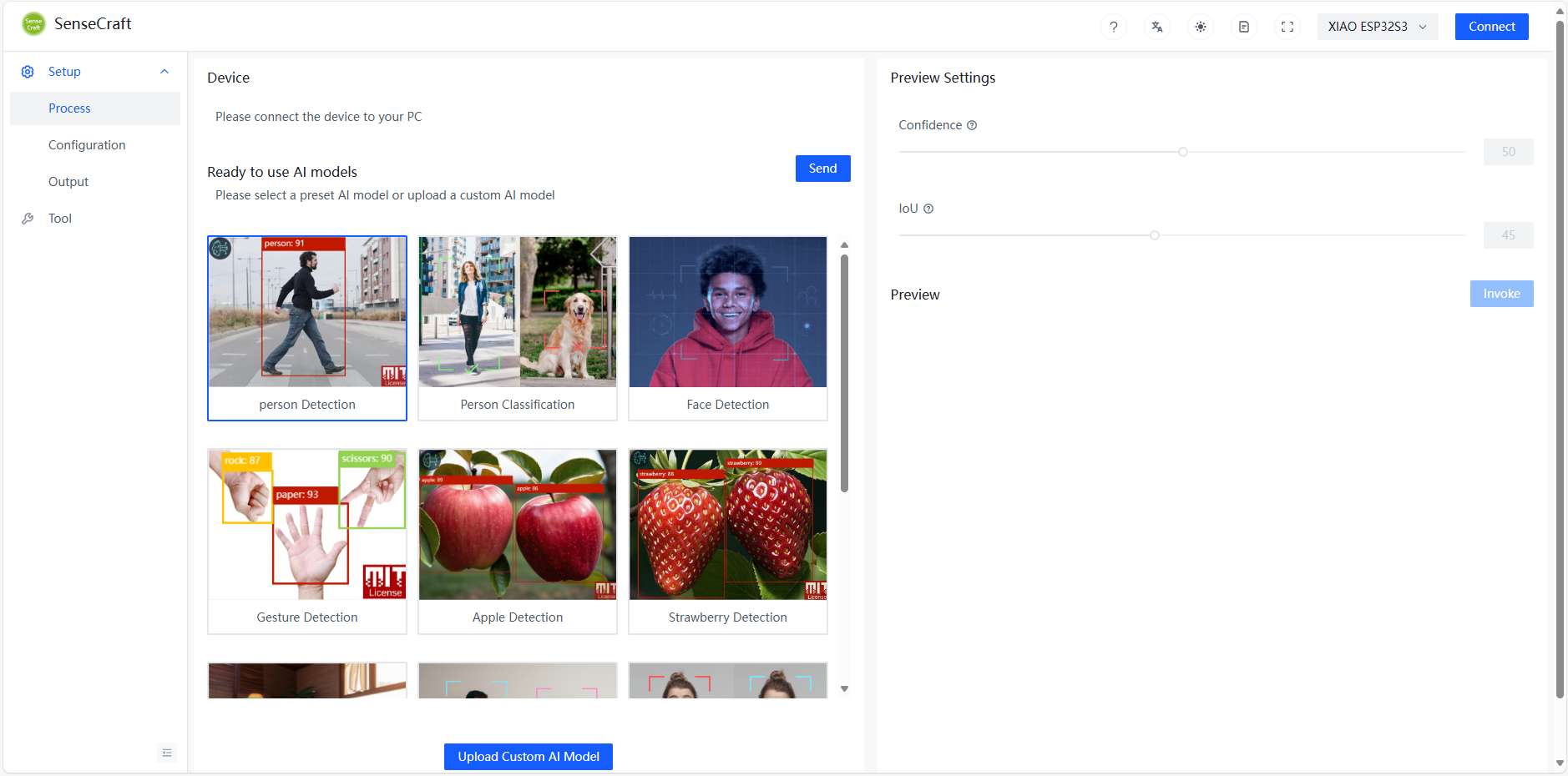

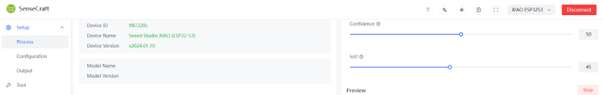
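Besides drawing boxes on the frame, it can be handy to log what was detected as text. The following is a small sketch with a hypothetical helper, `summarize_detections`, that works on plain `(class_id, confidence)` pairs so it can be tried without a camera. The 0.5 threshold and the sample values are arbitrary, and the id-to-name mapping is assumed for illustration.

```python
def summarize_detections(detections, class_names, min_conf=0.5):
    """Return readable strings for detections at or above min_conf.

    detections: iterable of (class_id, confidence) pairs.
    class_names: mapping from class id to class name.
    """
    lines = []
    for class_id, conf in detections:
        if conf >= min_conf:
            lines.append(f"{class_names[class_id]}: {conf:.2f}")
    return lines


# With Ultralytics these pairs could be built inside the webcam loop, e.g.
#   boxes = results[0].boxes
#   detections = zip(boxes.cls.int().tolist(), boxes.conf.tolist())
# with class_names taken from model.names.
names = {0: "line1", 1: "line2", 2: "line3"}  # assumed mapping for illustration
print(summarize_detections([(0, 0.91), (2, 0.32), (1, 0.64)], names))
```

Keeping the helper independent of the model output makes it easy to change the threshold or the output format later.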

To deploy the model to the ESP module, I used the Seeed Studio SenseCraft platform.

After connecting the module to my computer, I clicked the Connect button at the top right of the platform.

Connected:

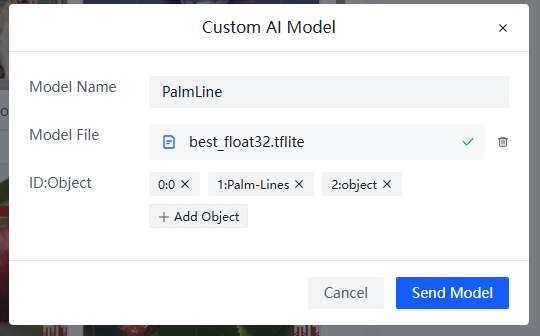

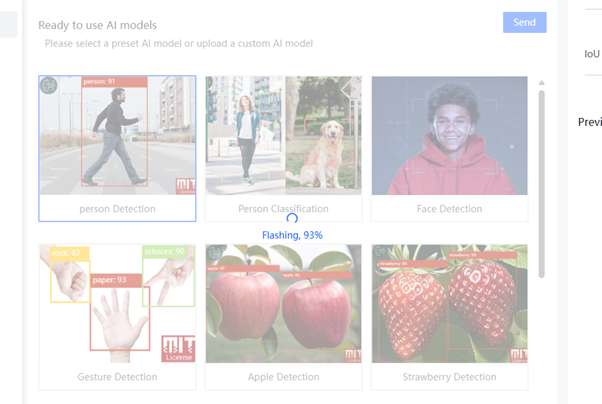

Next, I used the upload custom model button at the end of the page to upload my model.

I named the model PalmLine, selected the model file, added the classes and clicked Send Model.

The model was deployed to the ESP module successfully.

Despite the successful deployment of this model, I was not able to invoke the camera and view the result. This is probably because of the model size or some other issue that I have not figured out yet. Nonetheless, I was able to upload the platform's ready-made person detection model, and it works.
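One simple way to start testing the model-size hypothesis is to compare the exported file's size against a budget. The sketch below is only that: the file name `model.tflite` and the 1 MiB budget are placeholders I picked for illustration, not documented limits of the XIAO ESP32S3 or SenseCraft.

```python
import os


def model_fits(path, budget_bytes):
    """Return (size_in_bytes, fits) for the file at path."""
    size = os.path.getsize(path)
    return size, size <= budget_bytes


if __name__ == "__main__":
    # Both values are placeholders, not documented limits
    model_path = "model.tflite"
    budget = 1 * 1024 * 1024
    if os.path.exists(model_path):
        size, fits = model_fits(model_path, budget)
        print(f"{model_path}: {size} bytes, within budget: {fits}")
    else:
        print(f"{model_path} not found")
```

A file that passes this check can still fail on the device for other reasons, such as unsupported operators or an unexpected input size, so this only rules out one possible cause.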