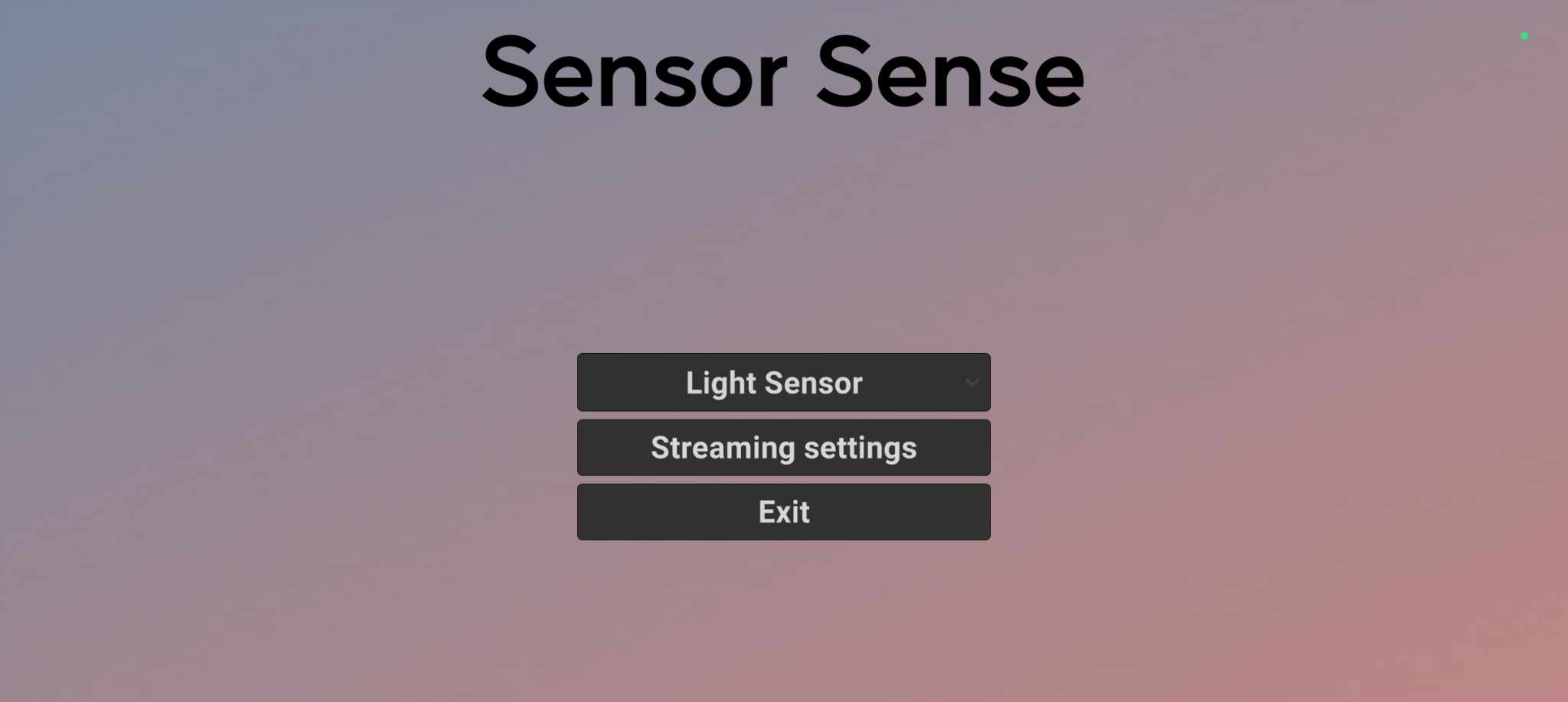

SensorSense¶

SensorSense is an Android application, developed with Unity, that visualizes data acquired from a device’s sensors. It supports real-time data visualization, streaming data over local networks, and saving data in various formats. This report gives an overview of the app’s functionality, its design, and the underlying logic.

You can find the documentation for this project here

Functionality Overview¶

Sensor Integration¶

The app integrates with multiple device sensors, including:

- Gyroscope

- Accelerometer

- Microphone

- GPS

- Light Sensor (using a custom Android Java interface due to Unity’s limitations)

Each sensor is represented as a subclass of the abstract class Sensor.

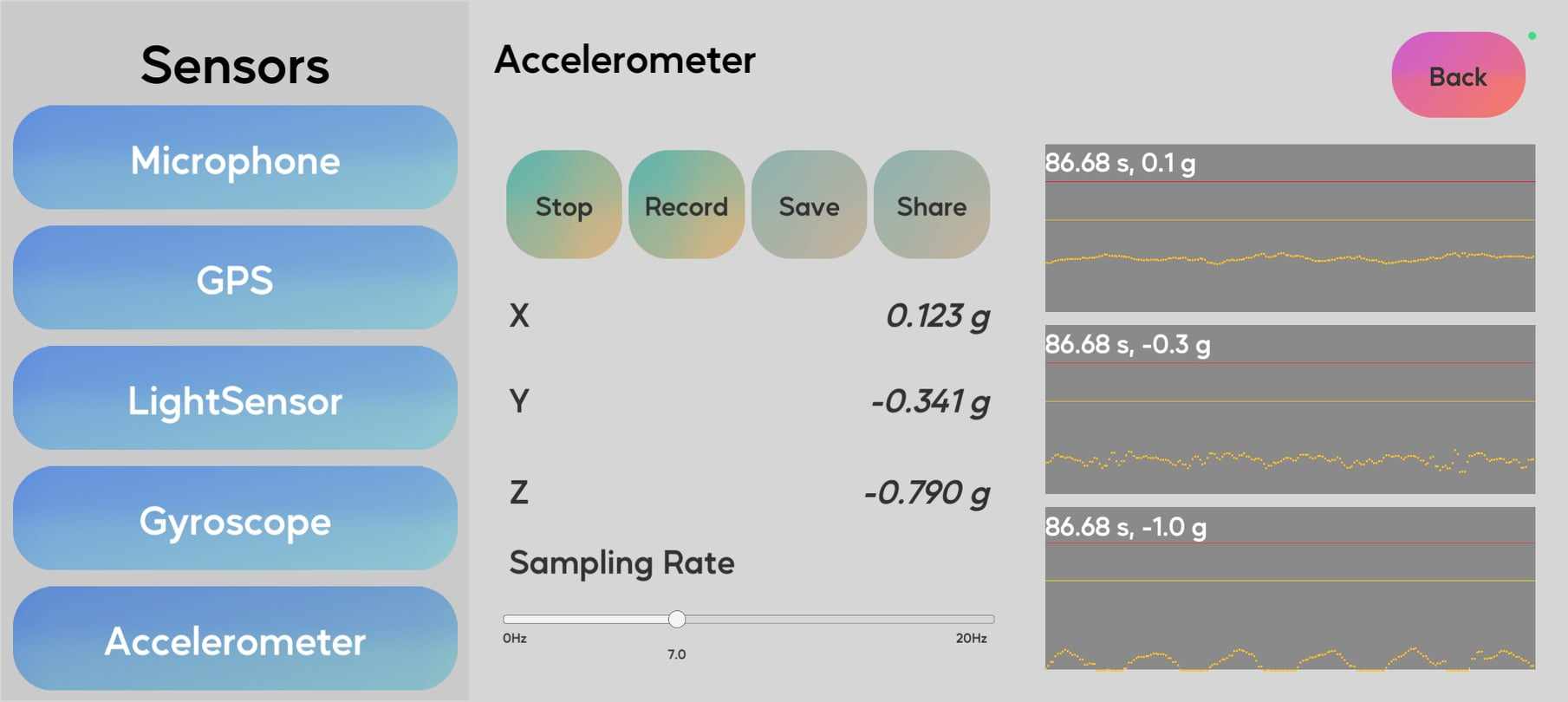

Real-Time Data Visualization¶

The SensorSense app features real-time data visualization using a custom LineGenerator class. This class draws graphs by utilizing Unity’s OnGUI functionality. The graphs visually represent sensor data, such as accelerometer readings, on the screen.
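As a rough illustration of what a line graph of this kind has to compute, the Python sketch below maps a rolling window of samples to pixel coordinates. It is not the app's LineGenerator (which is a Unity script); the function name `to_polyline`, the window size, and the value range are assumptions made for the example.

```python
from collections import deque


def to_polyline(samples, width, height, v_min, v_max):
    """Map samples to (x, y) pixel points.

    y grows downward, as in most GUI coordinate spaces, so the
    largest value ends up at the top of the graph area.
    """
    samples = list(samples)
    if len(samples) < 2:
        return []  # a line needs at least two points
    span = (v_max - v_min) or 1.0  # avoid division by zero
    step = width / (len(samples) - 1)
    points = []
    for i, value in enumerate(samples):
        clamped = min(max(value, v_min), v_max)  # keep the line inside the graph
        y = height * (1.0 - (clamped - v_min) / span)
        points.append((i * step, y))
    return points


# Rolling window: only the newest 4 readings are kept and drawn.
buffer = deque(maxlen=4)
for reading in [0.0, 5.0, 10.0, -10.0, 0.0]:
    buffer.append(reading)

points = to_polyline(buffer, width=300, height=100, v_min=-10.0, v_max=10.0)
```

A renderer would then draw a segment between each pair of consecutive points on every frame.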

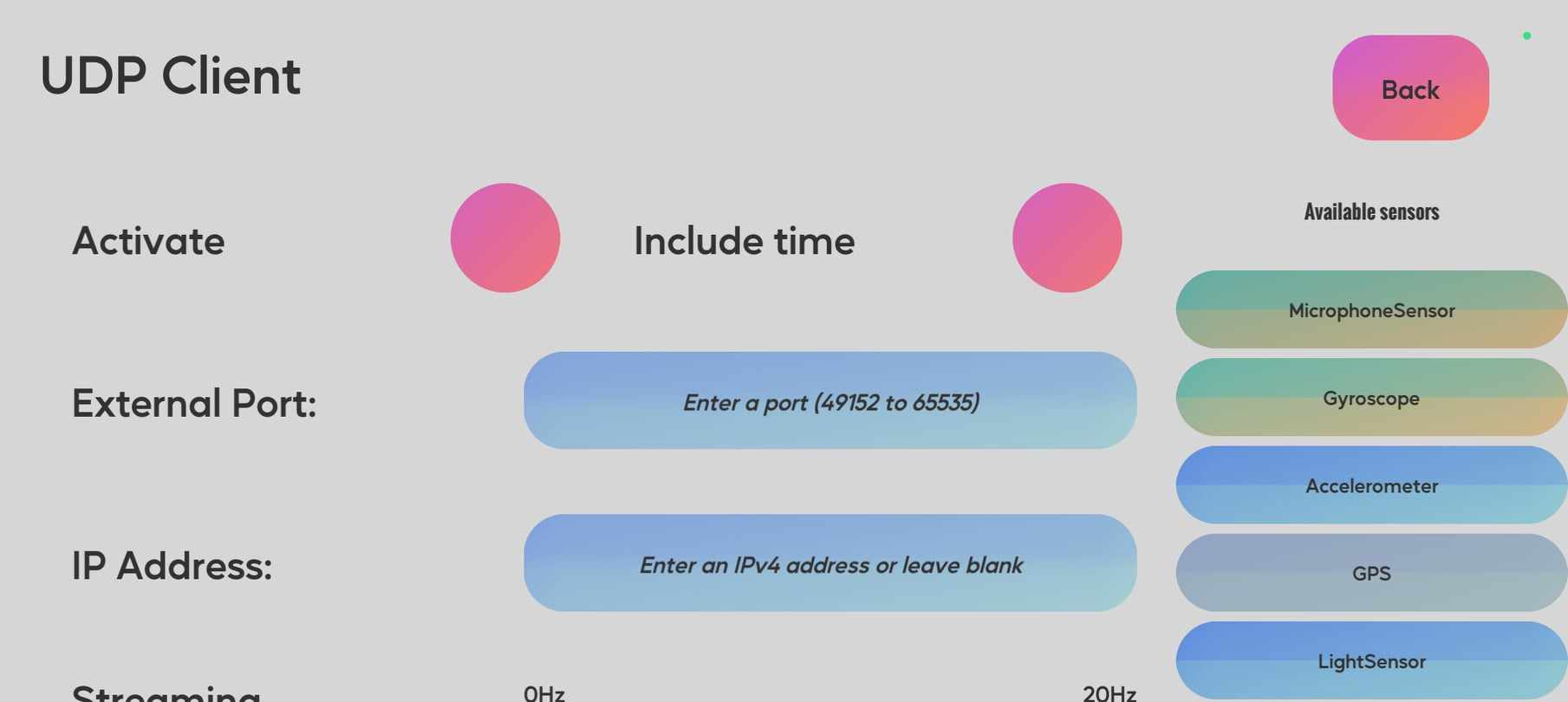

Data Streaming and Saving¶

The SensorSense app streams data over local networks using the UDP protocol, which lets users share sensor data with other devices in real time. The app can also save acquired data to .csv files directly on the Android device, so the data can be stored and analyzed later.
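On the receiving side, a small UDP listener on another device in the same network is enough to pick up the stream. The Python sketch below shows one way to do this. The payload layout (`name,timestamp,v1,v2,...` as UTF-8 text) and the port used in the usage note are assumptions for illustration; the actual packet format of the app is not described here and may differ.

```python
import socket


def parse_packet(payload: bytes):
    """Parse an ASSUMED 'name,timestamp,v1,v2,...' UTF-8 payload."""
    fields = payload.decode("utf-8").strip().split(",")
    name, timestamp = fields[0], float(fields[1])
    values = [float(v) for v in fields[2:]]
    return name, timestamp, values


def receive_samples(port, count, timeout=5.0, host="0.0.0.0"):
    """Collect `count` datagrams from a UDP port and parse each one.

    Raises socket.timeout if no datagram arrives within `timeout` seconds.
    """
    samples = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))  # listen on all local interfaces by default
        sock.settimeout(timeout)
        while len(samples) < count:
            payload, _sender = sock.recvfrom(4096)
            samples.append(parse_packet(payload))
    return samples
```

For example, `receive_samples(port=5005, count=10)` would block until ten packets have arrived on the hypothetical port 5005. Because UDP gives no delivery or ordering guarantees, a receiver that needs ordered data should sort by the timestamp field.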

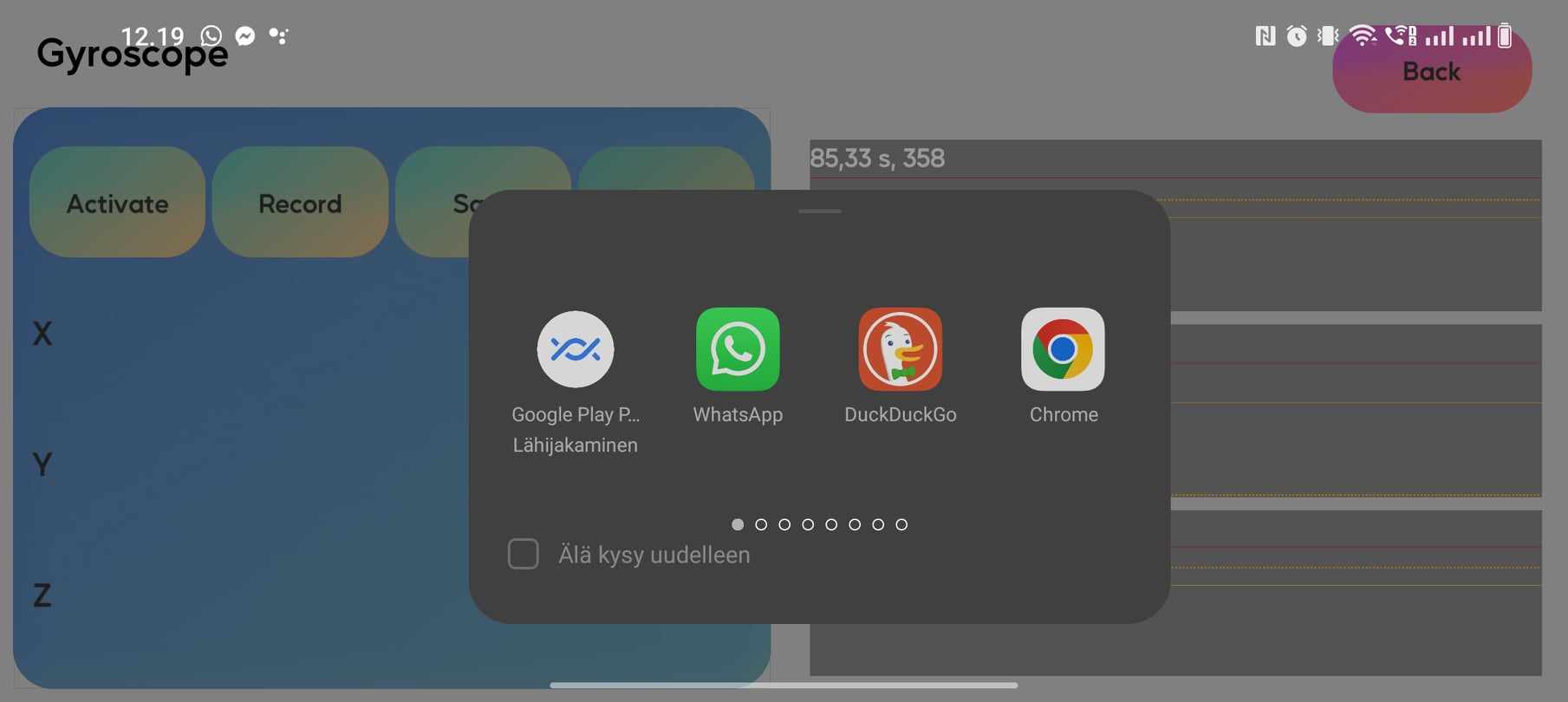

The data can also be shared as text through any messaging app.

Core Logic¶

Sensor Class¶

At the heart of the app lies the Sensor class, an abstract base class that defines the blueprint for all sensor types integrated into the app. The Sensor class sets the groundwork for sensor functionality, including data retrieval, activation, stopping, streaming, and recording. By enforcing a unified structure, the Sensor class promotes code reusability and streamlines sensor integration.
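The app itself is written for Unity, so the following Python sketch is only a structural illustration of the abstract-base-class pattern described above, not the app's code. All method and attribute names (`read`, `activate`, `stop`, `start_recording`, `update`, `records`) and the `FakeAccelerometer` subclass are hypothetical.

```python
from abc import ABC, abstractmethod


class Sensor(ABC):
    """Shared behaviour for every sensor; subclasses only supply read()."""

    def __init__(self, name):
        self.name = name
        self.active = False
        self.recording = False
        self.records = []

    @abstractmethod
    def read(self):
        """Return the latest reading as a tuple of floats."""

    def activate(self):
        self.active = True

    def stop(self):
        self.active = False
        self.recording = False

    def start_recording(self):
        self.recording = True

    def update(self):
        """Poll the sensor once; store the reading if recording is on."""
        if not self.active:
            return None
        reading = self.read()
        if self.recording:
            self.records.append(reading)
        return reading


class FakeAccelerometer(Sensor):
    """Stand-in subclass that always reports gravity along z."""

    def read(self):
        return (0.0, 0.0, 9.81)


sensor = FakeAccelerometer("accelerometer")
sensor.activate()
sensor.start_recording()
latest = sensor.update()
```

Adding a new sensor type then only requires a subclass with its own `read` method; activation, stopping, and recording are inherited unchanged.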

Data Visualization and Functionality Control¶

The SensorScreen script orchestrates the interaction between sensor data and corresponding visualization graphs. Real-time data visualization is accomplished using a dedicated LineGenerator graph for each sensor. This ensures an accurate representation of sensor readings.

In addition, the script governs the overall functionality of sensor screens:

- Graph updates can be toggled on or off, enhancing flexibility in managing data visualization.

- Interface elements, such as button colors and text, dynamically reflect the current state of the app.

Class Dependencies¶

Practical Applications¶

Beyond the technical intricacies and architecture, the SensorSense app’s greatest potential lies in the practical applications derived from the collected sensor data. One illustrative example is the calibration of a device’s orientation using accelerometer data.

Explore the rest of the data processing examples using Python and LabVIEW.

Device Orientation Calibration¶

The accelerometer sensor data can be harnessed to accurately calibrate a device’s orientation in space. By utilizing the gravitational force detected by the accelerometer, it’s possible to determine the device’s alignment with respect to the Earth’s gravitational field. This calibration aids in achieving accurate measurements and creating immersive augmented reality experiences.
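When the device is at rest, the accelerometer measures only gravity, so roll and pitch follow from the direction of that vector. The sketch below uses one common formulation, $\text{roll} = \operatorname{atan2}(a_y, a_z)$ and $\text{pitch} = \operatorname{atan2}(-a_x, \sqrt{a_y^2 + a_z^2})$. The axis convention and the function name `tilt_from_gravity` are assumptions and may not match the app's conventions. Gravity alone carries no heading information, so yaw cannot be obtained this way.

```python
import math


def tilt_from_gravity(ax, ay, az):
    """Return (roll, pitch) in degrees from a resting accelerometer reading.

    Assumes the reading is dominated by gravity and that z points out of
    the screen; other axis conventions need the arguments reordered.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


flat = tilt_from_gravity(0.0, 0.0, 9.81)      # device lying flat, screen up
on_edge = tilt_from_gravity(0.0, 9.81, 0.0)   # device rotated onto its side
```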

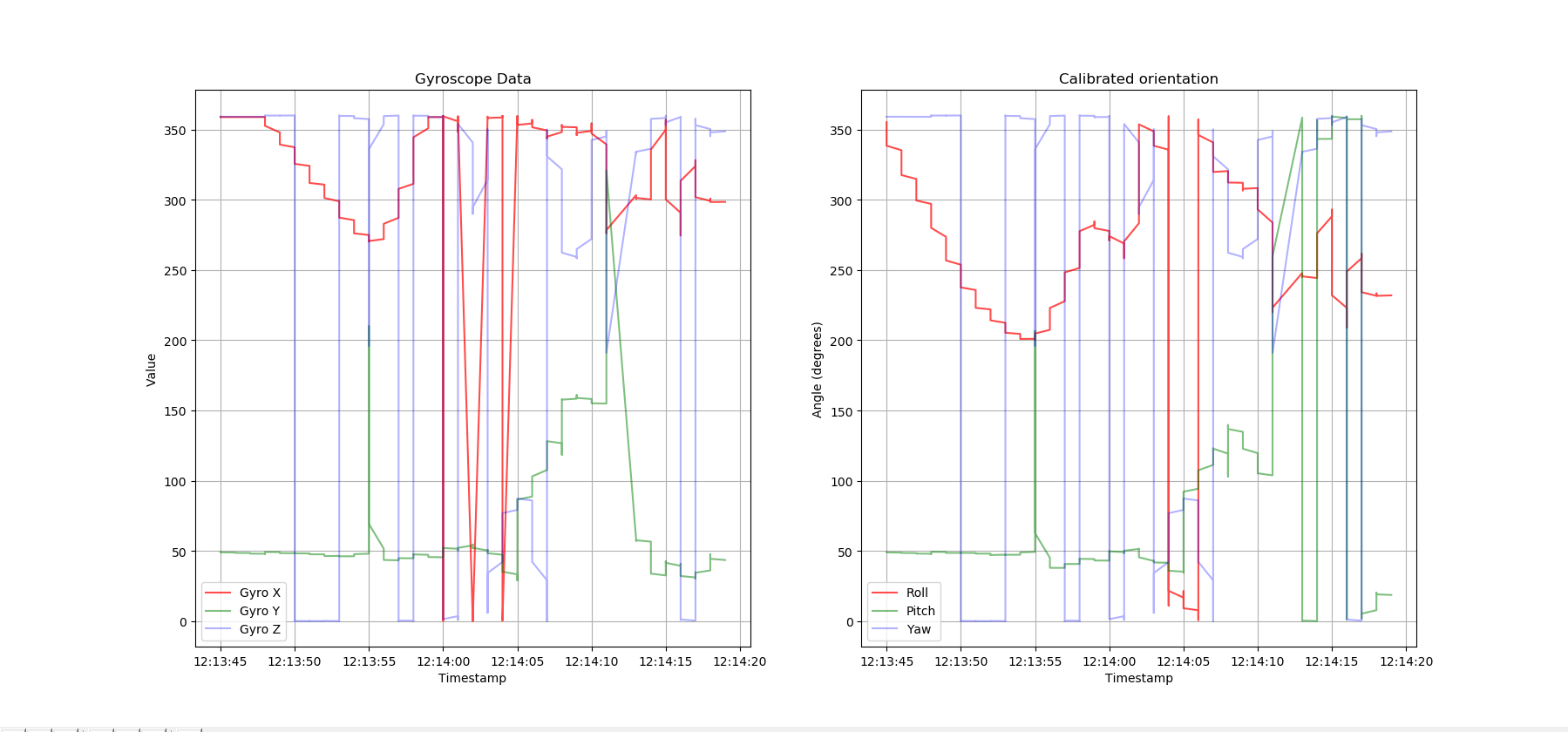

To showcase this, consider a Python script that utilizes accelerometer and gyroscope data to calibrate a device’s orientation. The script employs a complementary filter to fuse the sensor readings and calculate the device’s roll, pitch, and yaw angles. These angles provide insights into the device’s orientation in three-dimensional space.

Python Script: Link to the original script

Here’s a brief description of the script’s functionality:

- The script reads the recorded accelerometer and gyroscope data from a .csv file.

- It fuses the readings with a complementary filter to calculate the roll, pitch, and yaw angles.

- These angles are then used to correct the device’s orientation estimate for use in applications.
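The steps above can be sketched as follows. This is a minimal complementary filter written under stated assumptions, not the original script: the column names (`t`, `ax`, `ay`, `az`, `gx`, `gy`, `gz`), the units (seconds, m/s², rad/s), the weight `alpha`, and the sample rows are all made up for illustration. It treats gyroscope rates as Euler-angle rates, which only holds for small tilts, and it covers roll and pitch only, because yaw integrated from the gyroscope alone drifts without a heading reference.

```python
import csv
import io
import math


def complementary_filter(rows, alpha=0.98):
    """Fuse gyroscope rates with accelerometer tilt.

    Returns one (roll, pitch) pair in radians per input row.
    """
    roll = pitch = 0.0
    prev_t = None
    angles = []
    for row in rows:
        t = float(row["t"])
        ax, ay, az = (float(row[k]) for k in ("ax", "ay", "az"))
        gx, gy = float(row["gx"]), float(row["gy"])
        # Tilt implied by gravity: noisy, but free of long-term drift.
        acc_roll = math.atan2(ay, az)
        acc_pitch = math.atan2(-ax, math.hypot(ay, az))
        if prev_t is None:
            roll, pitch = acc_roll, acc_pitch  # initialise from gravity
        else:
            dt = t - prev_t
            # Integrated gyro: smooth, but drifts; blend the two estimates.
            roll = alpha * (roll + gx * dt) + (1 - alpha) * acc_roll
            pitch = alpha * (pitch + gy * dt) + (1 - alpha) * acc_pitch
        prev_t = t
        angles.append((roll, pitch))
    return angles


# Made-up sample: a device lying flat and not rotating.
SAMPLE_CSV = """t,ax,ay,az,gx,gy,gz
0.00,0.0,0.0,9.81,0.0,0.0,0.0
0.01,0.0,0.0,9.81,0.0,0.0,0.0
0.02,0.0,0.0,9.81,0.0,0.0,0.0
"""

angles = complementary_filter(csv.DictReader(io.StringIO(SAMPLE_CSV)))
```

A larger `alpha` trusts the gyroscope more and smooths out accelerometer noise, while a smaller `alpha` corrects drift faster.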

Practical Result and Visualization¶

The calibration process, based on the complementary filter, matters most in scenarios involving augmented reality, virtual reality, and 3D applications, where an accurate orientation estimate improves user experience, interaction, and immersion.

Here’s an image showcasing the practical result of the device’s orientation calibration using the complementary filter:

Conclusion¶

The SensorSense app embodies a well-designed framework that seamlessly integrates various sensors through the abstract Sensor class. With the interactive SensorScreen script, the app presents user-friendly screens for data visualization and controls. A practical application is demonstrated by a Python script implementing a complementary filter for device orientation calibration. This illustrates the app’s capacity to harness sensor technology for real-world solutions, highlighting its functional and user-centered design.