20. Project development

What tasks have been completed, and what tasks remain?

Completed Tasks:

- Rotational tracking using the MPU-6050 and MPU-9250 with the Mahony motion fusion algorithm

- Captured good magnetometer bias and scale values from figure-8 movements

- Fully functioning UI board that can send button states via I2C

- Fully functioning Master board that can:

    - Send UART messages using the HC-05 Bluetooth module

    - Read the button state from the UI board over I2C

    - Read accelerometer, gyroscope, and magnetometer values from the registers

    - Iterate the fusion algorithm enough times to obtain a converged rotation estimate

- Trained an LSTM network to classify time series of 10 samples and recognize circular motion

- Laser cut acrylic spacers, support, and wand face

- Designed in CAD and 3D printed the wand body, wand head, button spacer, and button cap mold

- Molded and cast button caps with Ecoflex
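As an illustration of the magnetometer bias and scale item in the list above, here is a minimal sketch of one common min/max calibration approach. It is not necessarily the exact method used in this project. The function names and the `(N, 3)` sample layout are assumptions made for the example, and the scale correction only handles per-axis (diagonal) distortion.

```python
import numpy as np

def magnetometer_calibration(samples):
    """Estimate per-axis bias and scale from raw magnetometer samples.

    samples: array-like of shape (N, 3), assumed to be collected while
    moving the sensor through figure-8 motions so every axis sees its
    full range.
    """
    samples = np.asarray(samples, dtype=float)
    axis_max = samples.max(axis=0)
    axis_min = samples.min(axis=0)
    bias = (axis_max + axis_min) / 2.0    # hard-iron offset per axis
    radius = (axis_max - axis_min) / 2.0  # half-range per axis
    scale = radius.mean() / radius        # equalize the three axis ranges
    return bias, scale

def apply_calibration(raw, bias, scale):
    """Apply the bias and scale to one raw reading or an (N, 3) array."""
    return (np.asarray(raw, dtype=float) - bias) * scale
```

With values like these, each new reading would be corrected with `apply_calibration` before it is passed to the fusion algorithm.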

Remaining Tasks:

- Replace the plain OLED motion-detection indicator with a bitmap image.

What’s working? What’s not?

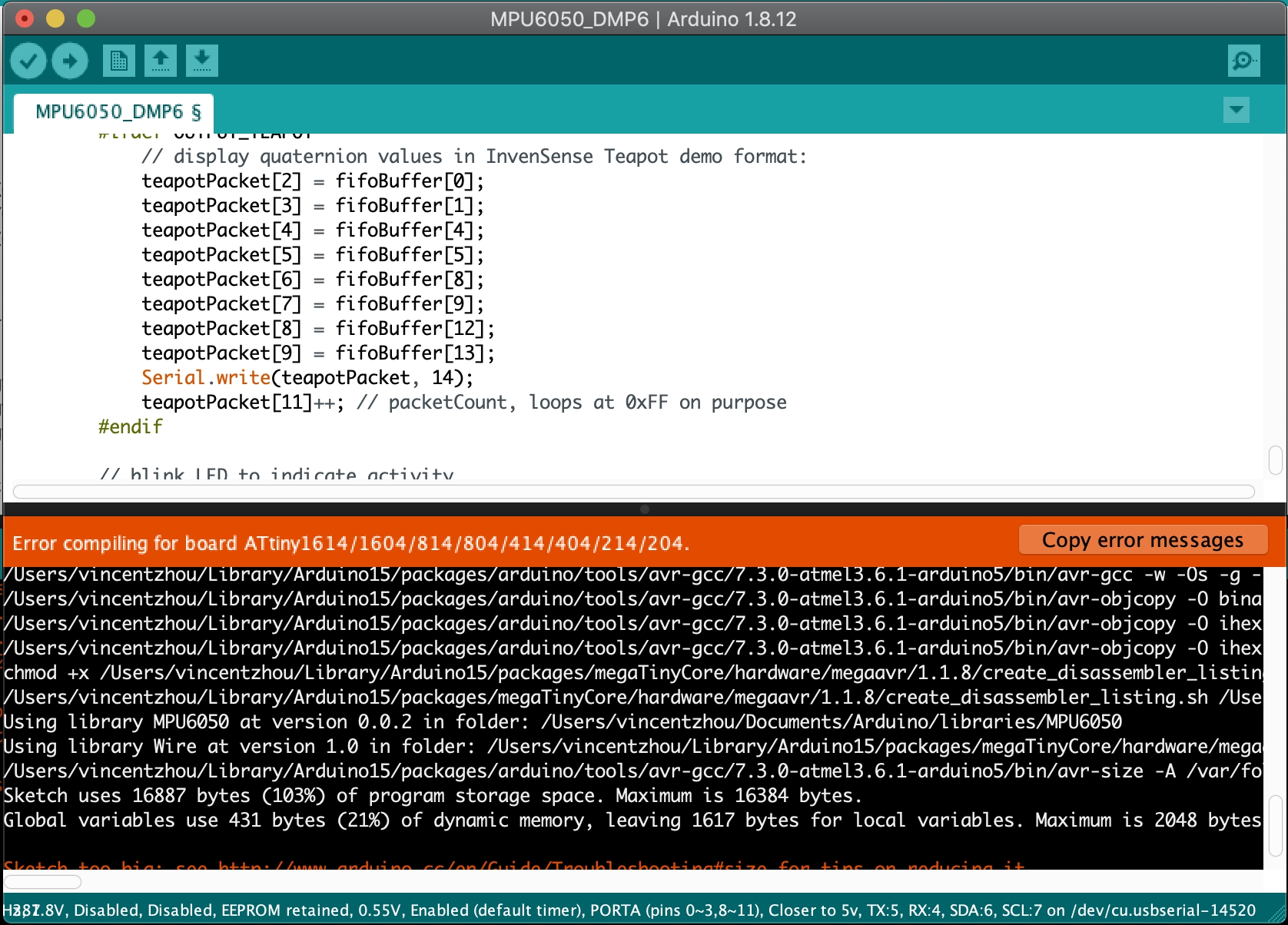

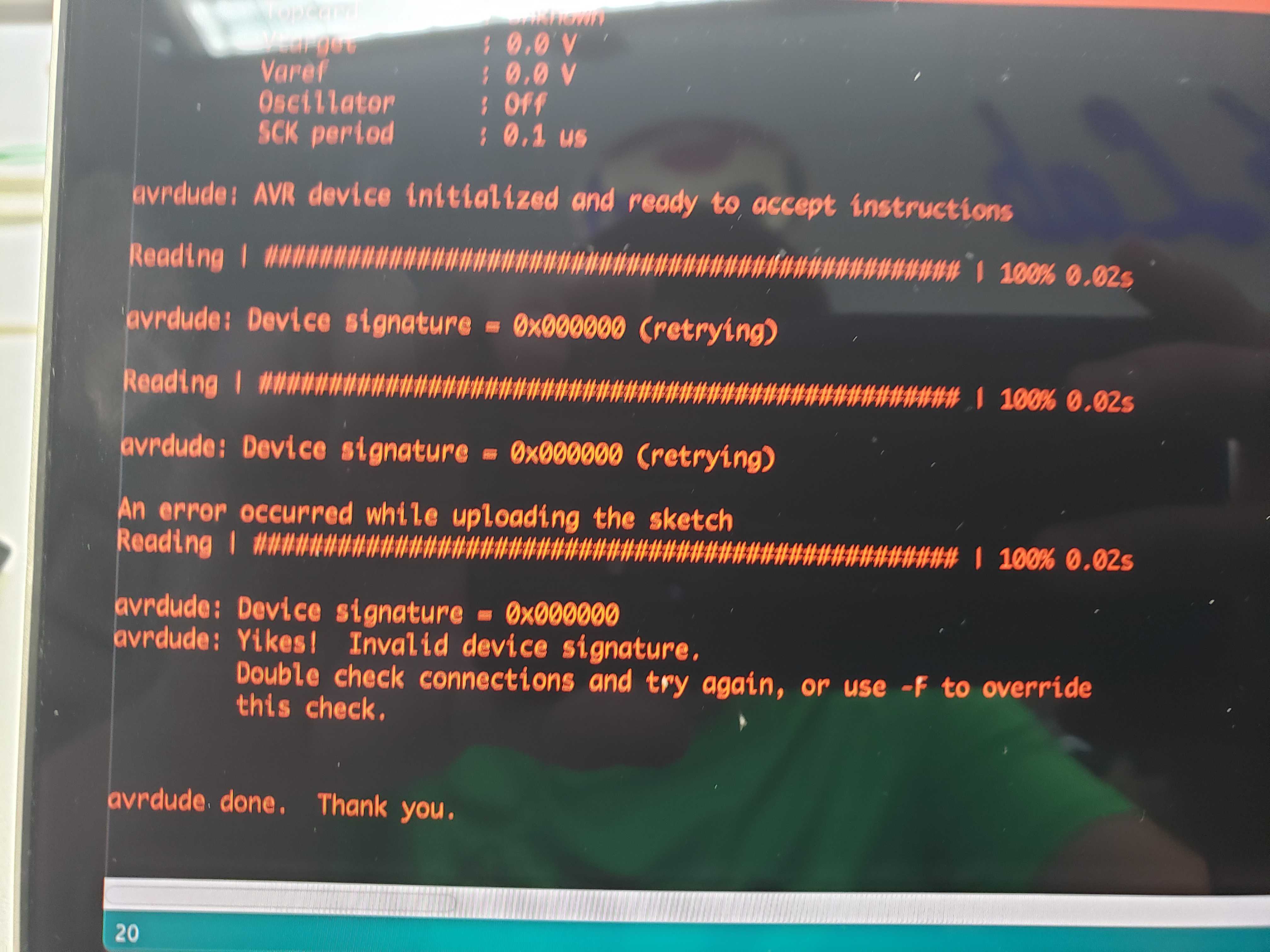

Both the ATTiny1614 board and the ATMega328p board failed to accommodate the project. The ATTiny1614 simply had too little flash memory to fit the large sketch. I could not pinpoint the problem with the ATMega328p board, however: the chip address showed up as 0x00, which suggested that the chip was alive but something else was wrong. I strongly suspect that my EAGLE schematic was wrong and that I should have referenced a Satshakit for the wiring. By that point, though, I had succeeded in flashing the sketch onto the ATTiny3216 board, so I retired the ATMega328p board design.

Thankfully, the motion fusion algorithm runs fast enough that the quaternion converges quite rapidly without any adjustment of the parameters. There is slight jitter in the Processing sketch, but it is bearable.
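To make the convergence behaviour concrete, here is a minimal sketch of one Mahony filter update step using only the gyroscope and accelerometer. It is a simplified illustration, not the firmware running on the wand: the magnetometer correction is omitted, and the function name, the gains `kp` and `ki`, and the `[w, x, y, z]` quaternion layout are assumptions made for the example.

```python
import numpy as np

def mahony_update(q, gyro, accel, dt, kp=1.0, ki=0.0, integral=None):
    """One Mahony update step (gyro + accel only).

    q: orientation quaternion [w, x, y, z]
    gyro: angular rate in rad/s, accel: acceleration in any unit
    Returns the new unit quaternion and the integral error term.
    """
    if integral is None:
        integral = np.zeros(3)
    q = np.asarray(q, dtype=float)
    gyro = np.asarray(gyro, dtype=float)
    accel = np.asarray(accel, dtype=float)
    w, x, y, z = q

    norm = np.linalg.norm(accel)
    if norm > 0:
        a = accel / norm
        # Gravity direction predicted by the current quaternion (body frame)
        v = np.array([
            2.0 * (x * z - w * y),
            2.0 * (w * x + y * z),
            w * w - x * x - y * y + z * z,
        ])
        # The error is the cross product of measured and predicted gravity
        error = np.cross(a, v)
        integral = integral + ki * error * dt
        gyro = gyro + kp * error + integral  # feed the error back into the rate

    gx, gy, gz = gyro
    # Quaternion derivative: 0.5 * q * (0, gyro)
    q_dot = 0.5 * np.array([
        -x * gx - y * gy - z * gz,
        w * gx + y * gz - z * gy,
        w * gy - x * gz + z * gx,
        w * gz + x * gy - y * gx,
    ])
    q = q + q_dot * dt
    return q / np.linalg.norm(q), integral
```

Calling this repeatedly with a stationary sensor pulls the estimated gravity direction toward the measured one. A larger `kp` converges faster but passes more accelerometer noise through to the estimate, which is one possible source of visible jitter.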

At first, my LSTM network completely overfit my training data and could not distinguish circular motion from random motion. After I added more datasets and applied data augmentation, the network was finally able to tell the two apart. These were skills I carried over from my experience in Kaggle machine learning competitions.
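As a rough illustration of what data augmentation can look like for short motion windows, here is a minimal sketch that adds noisy, time-shifted copies of each window. The specific techniques (Gaussian jitter and a circular time shift), the function names, and the `(N, T, F)` array layout are assumptions for the example and are not necessarily the augmentations used in this project.

```python
import numpy as np

def augment_windows(windows, copies=3, noise_std=0.01, max_shift=2, seed=0):
    """Return the original windows plus `copies` augmented versions.

    windows: array of shape (N, T, F), e.g. T = 10 time steps per sample.
    Each augmented copy is circularly shifted in time and has small
    Gaussian noise added. Output shape is (N * (copies + 1), T, F).
    """
    rng = np.random.default_rng(seed)
    windows = np.asarray(windows, dtype=float)
    out = [windows]
    for _ in range(copies):
        shift = int(rng.integers(-max_shift, max_shift + 1))
        shifted = np.roll(windows, shift, axis=1)  # circular shift along time
        noisy = shifted + rng.normal(0.0, noise_std, size=windows.shape)
        out.append(noisy)
    return np.concatenate(out, axis=0)

def augment_labels(labels, copies=3):
    """Repeat the labels so they line up with augment_windows output."""
    return np.tile(np.asarray(labels), copies + 1)
```

A circular shift suits a repeating gesture such as a circle, because the shifted window is still a plausible recording that simply starts at a different point on the loop.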

In addition, the rate of data communication between my computer and both the OLED board and the wand board is less than optimal. For the wand, 10 samples per second was enough to get gestures recognized, but it was tedious to repeat the circular motion for an hour straight to collect 1000 time series samples of length 10. The Processing sketch also showed very rough, laggy motion because of the constrained data transfer rate. On the OLED board, the numbers update painfully slowly because characters are constantly being transmitted over serial.
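A back-of-the-envelope calculation helps show where a serial link like this runs out of headroom. The sketch below computes an upper bound on messages per second for an asynchronous serial connection. The baud rates and message sizes are illustrative assumptions, not measurements from this project, and the function name is made up for the example.

```python
def max_message_rate(baud, payload_bytes, bits_per_byte=10):
    """Upper bound on messages per second over an asynchronous serial link.

    bits_per_byte=10 assumes 8N1 framing: 1 start bit, 8 data bits,
    and 1 stop bit for every byte sent.
    """
    return baud / (bits_per_byte * payload_bytes)

# Hypothetical message sizes for one quaternion sample
TEXT_QUATERNION_BYTES = 40    # four decimal numbers sent as ASCII text
BINARY_QUATERNION_BYTES = 16  # four 32-bit floats sent as raw bytes

for baud in (9600, 115200):
    text_rate = max_message_rate(baud, TEXT_QUATERNION_BYTES)
    binary_rate = max_message_rate(baud, BINARY_QUATERNION_BYTES)
    print(f"{baud} baud: text {text_rate:.0f} msg/s, binary {binary_rate:.0f} msg/s")
```

Under these assumptions, a 40-byte text message at 9600 baud tops out at 24 messages per second, while a 16-byte binary message at 115200 baud allows up to 720. Sending fewer bytes per sample and raising the baud rate are therefore two levers worth trying before looking for a different transport.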

What questions need to be resolved?

One question is whether there is a faster transmission standard for Bluetooth and USB. RS-232 is a major bottleneck for data transmission, whereas virtual reality systems can track controller movement at well above 144 Hz. A less essential but intriguing question is why my ATMega328p board failed. I cross-referenced the wiring of the ATMega328p with posts from users on the Arduino Forum and Stack Overflow, but the board failed anyway.

What will happen when?

Here is the Gantt chart of my Fab Academy project:

What have you learned?

I have gained real insight into the sheer difficulty of tracking rotation accurately with an IMU and classifying the motion. I was under the misconception that the IMU was accurate enough to use raw values and that I could simply feed positive and negative data sets to a machine learning algorithm. However, I was soon thrust into the world of motion fusion, using all three instruments on the IMU to produce a precise and accurate rotation estimate. In addition, I drew on my knowledge of data augmentation to develop new augmentation methods that increase the data available for gesture recognition.