Interface and Application Programming

Have you:

[x] Described your process using words/images/screenshots

[x] Explained the GUI that you made and how you did it

[x] Outlined problems and how you fixed them

[x] Included original code

The Validation Machine - MQTT, Node-Red, P5 Serial, and Teachable Machine

I worked with a group of MDEF students on a project called The Validation Machine. In this project, my group, made up of Bothaina Alamri, Jasmine Boerner-Holman, Guilherme Leao Duque Simoes, and me, contributed to building a machine from salvaged consumer electronics, as well as to its networking and interface design.

I started the project by helping to identify parts from an HP printer that we would use in the project. I was contributing remotely from Boston while most of my team was in Barcelona. Still, I helped locate datasheets for the parts, which informed design decisions for the device.

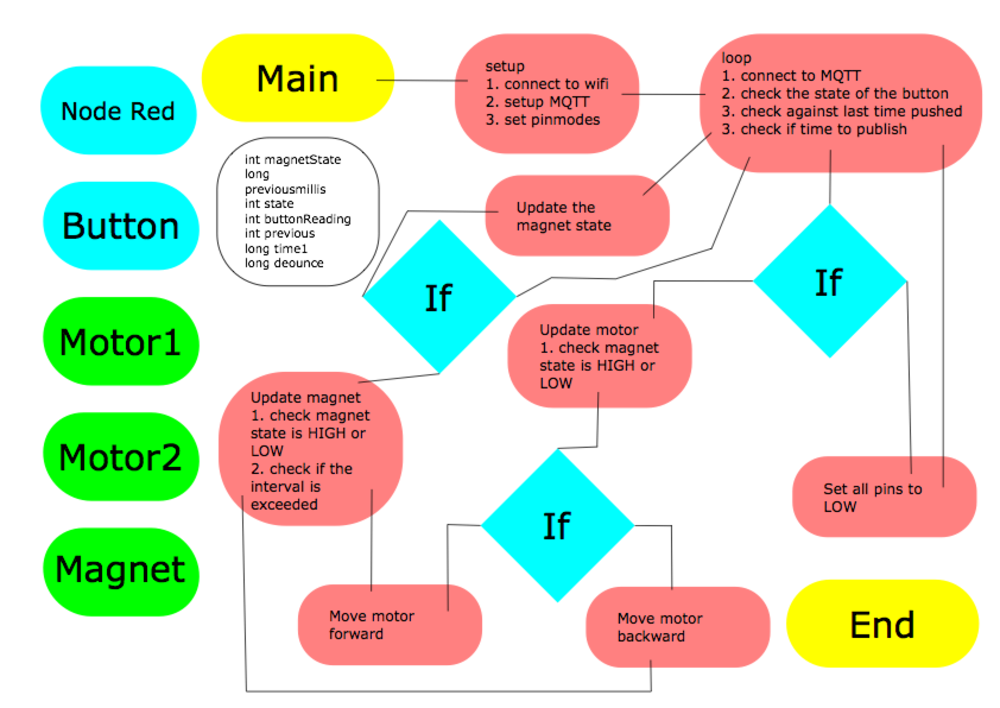

I then roughed out a flowchart to show my group and others how I would code the electronics and how the device would connect to a server. Finally, I planned to use a webpage as a client to control the project remotely.

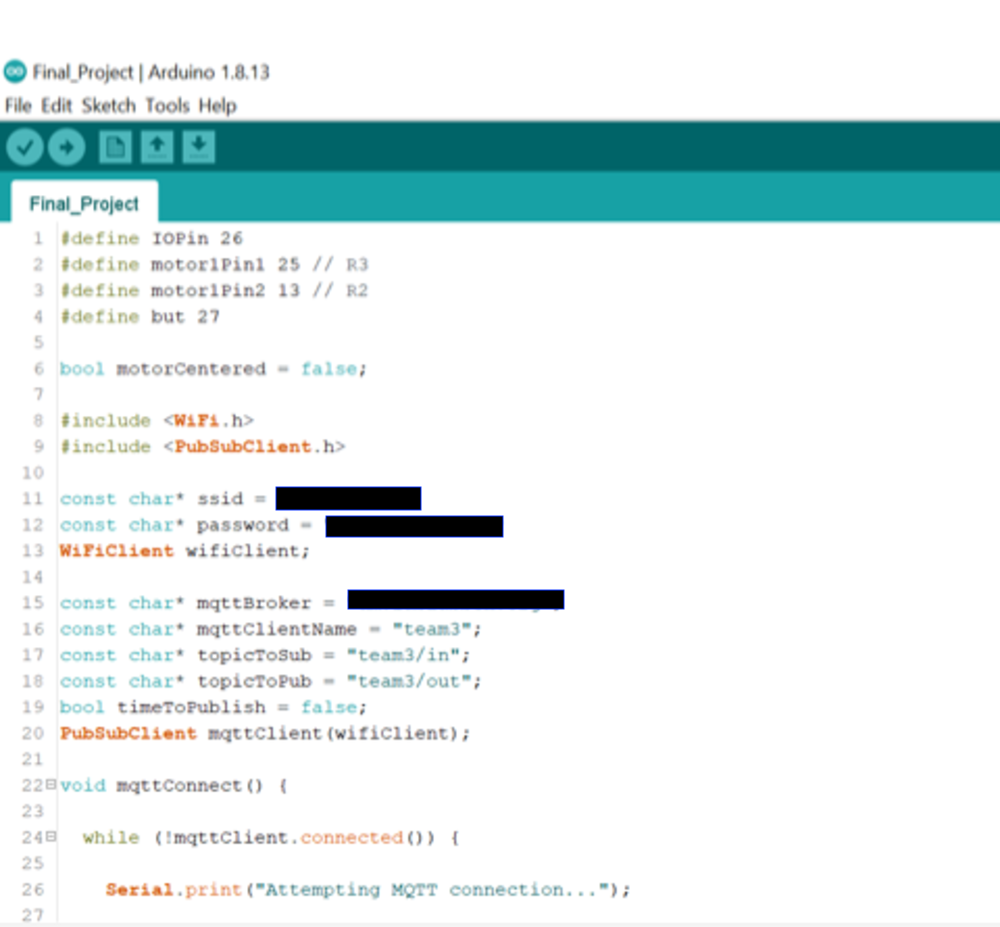

I then coded the actions and connectivity of the device. Two pins control the motor, which drives the forward and backward motion of the machine, and a third pin turns a magnet on and off. The machine can be controlled locally through a button on the device. It also connects over WiFi to an MQTT broker hosted by Fab Lab BCN. When the device connects, it publishes a “hello” message to the broker. It then subscribes and listens for a “wreck” message: a callback function runs for each incoming message and checks whether it is “wreck.”
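The check inside that callback can be sketched in Python. This is an illustration, not the device firmware: it assumes a handler shaped like a paho-mqtt `on_message(client, userdata, msg)` callback, where `msg.payload` arrives as bytes, and `start_wreck` is a hypothetical stand-in for the magnet and motor routine.

```python
from types import SimpleNamespace

def start_wreck() -> None:
    """Hypothetical hook standing in for the magnet/motor routine on the device."""
    print("wreck sequence started")

def is_wreck(payload: bytes) -> bool:
    # MQTT payloads arrive as raw bytes: decode them and trim stray
    # whitespace or newlines before comparing with the expected command.
    return payload.decode("utf-8", errors="replace").strip() == "wreck"

def on_message(client, userdata, msg) -> None:
    # Same (client, userdata, msg) signature paho-mqtt uses for on_message.
    if is_wreck(msg.payload):
        start_wreck()

# Usage with a stand-in message object, so no broker is needed.
# The topic name is hypothetical.
fake_msg = SimpleNamespace(topic="validation/commands", payload=b"wreck")
on_message(None, None, fake_msg)  # prints "wreck sequence started"
```

With paho-mqtt the handler would be attached with `client.on_message = on_message`; comparing the stripped, decoded payload avoids missing a command that was sent with a trailing newline.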

Once the “wreck” message is received, the magnet turns off and releases a ball. The program waits for 100 milliseconds before turning the magnet back on. The motor then moves forward for 200 milliseconds before returning to its original position within 300 milliseconds. Finally, the motor stops for two seconds, which keeps users from button mashing and breaking the animation.
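The timing above can be written as a short sequence. This sketch assumes a two-input motor driver where one pin high drives each direction and both low stops the motor; the pin numbers are hypothetical, and pin writes and delays are passed in as functions so the timing can be checked off-device.

```python
from typing import Callable, List, Tuple

# Hypothetical pin numbers: the post does not say which GPIOs were used.
MOTOR_FWD_PIN = 12
MOTOR_REV_PIN = 13
MAGNET_PIN = 14

def wreck_sequence(write: Callable[[int, int], None],
                   sleep_ms: Callable[[int], None]) -> None:
    """Release the ball, swing forward, return, then hold off new triggers.

    write(pin, level) sets an output; sleep_ms(ms) blocks for ms milliseconds.
    """
    write(MAGNET_PIN, 0)       # magnet off: drop the ball
    sleep_ms(100)
    write(MAGNET_PIN, 1)       # magnet back on
    write(MOTOR_REV_PIN, 0)    # drive forward
    write(MOTOR_FWD_PIN, 1)
    sleep_ms(200)
    write(MOTOR_FWD_PIN, 0)    # drive backward to the start position
    write(MOTOR_REV_PIN, 1)
    sleep_ms(300)
    write(MOTOR_REV_PIN, 0)    # both pins low: motor stopped
    sleep_ms(2000)             # cooldown against button mashing

# Usage: record the calls instead of driving real pins.
log: List[Tuple] = []
wreck_sequence(lambda pin, level: log.append(("write", pin, level)),
               lambda ms: log.append(("wait", ms)))
waits = [step[1] for step in log if step[0] == "wait"]
print(waits, sum(waits))  # [100, 200, 300, 2000] 2600
```

On real hardware `sleep_ms` could be `lambda ms: time.sleep(ms / 1000)`. Because the whole routine blocks for about 2.6 seconds, repeated triggers during that window cannot interrupt the animation.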

I then connected to Node-RED, which is installed on a Raspberry Pi set up for MDEF group projects. Using its visual programming, I set up a button that publishes the “wreck” message to the MQTT broker. I also added a slider to control the speed of the motors. However, it took several iterations to get the physics right for the interaction between the ball and the target, so I decided to leave the speed and timing hardcoded.
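What the Node-RED button ultimately puts on the wire, presumably through an MQTT output node, is a PUBLISH packet. A rough sketch of a QoS 0 PUBLISH following the MQTT 3.1.1 layout; a real client must first send CONNECT, and the short topic in the example is hypothetical.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit means 'more follows'."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte = n % 128
        n //= 128
        if n:
            byte |= 0x80
        out.append(byte)
        if not n:
            return bytes(out)

def build_publish(topic: str, payload: bytes) -> bytes:
    """QoS 0 PUBLISH: fixed header 0x30 (type 3, no flags), topic, then payload.

    QoS 0 packets carry no packet identifier.
    """
    topic_bytes = topic.encode("utf-8")
    variable_header = len(topic_bytes).to_bytes(2, "big") + topic_bytes
    body = variable_header + payload
    return bytes([0x30]) + encode_remaining_length(len(body)) + body

print(build_publish("a", b"wreck").hex())  # 3008000161777265636b
```

The five-byte “wreck” payload plus a two-byte length prefix and a one-byte topic gives a remaining length of 8, so the whole command fits in ten bytes.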

For this iteration of the project, we held a photo shoot to showcase the project for the rest of the class. I captured my screen while connected to the Node-RED server. After I clicked the button, the message was published to the broker and the machine ran its sequence. I was pretty happy, because the interface and code not only let me contribute to a project in Barcelona from Boston, but ultimately let me control the project through the UI.

Taking the project a step further, I decided to add a layer of machine learning. Using Google’s Teachable Machine, I trained a model to classify hand gestures into three separate classes. My group continued working on the Validation Machine and also wanted to add a layer of social impact. The group was motivated by a current trend of communicating experiences of domestic abuse through hand signals.

I further trained the model by adding a variety of images. Locally, the team asked for hand gesture samples from a diverse set of people, considering gender, skin tone, and whether or not they wore nail polish. I also added samples of GAN-generated portraits from “This Person Does Not Exist.” In this way, I added another layer of machine learning by incorporating images generated to resemble real people. Teachable Machine can export a model to a p5.js sketch. From there, I connected to the machine through p5 serial, since there were issues connecting to Node-RED to publish messages.
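The step from classifier output to machine command can be sketched as: take the most confident class, ignore low-confidence frames, and map the remaining labels to bytes sent over serial. The original used p5.js with p5 serial; this Python version, the class names, the 0.8 threshold, and the command bytes are all assumptions for illustration.

```python
from typing import Dict, Optional

# Hypothetical class names and command bytes; the post does not list the
# three Teachable Machine classes or the serial messages that were used.
COMMANDS = {
    "gesture_a": b"W\n",
    "gesture_b": b"S\n",
}

def pick_label(probabilities: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the most likely class, or None if the model is not confident enough."""
    if not probabilities:
        return None
    label, p = max(probabilities.items(), key=lambda item: item[1])
    return label if p >= threshold else None

def command_for(probabilities: Dict[str, float], threshold: float = 0.8) -> Optional[bytes]:
    """Map a confident prediction to the bytes to send, or None to send nothing."""
    label = pick_label(probabilities, threshold)
    return COMMANDS.get(label) if label is not None else None

print(command_for({"gesture_a": 0.92, "gesture_b": 0.05, "background": 0.03}))  # b'W\n'
# With pySerial, the bytes could then be sent with something like
# serial.Serial("/dev/ttyUSB0", 115200).write(cmd)  (port name hypothetical).
```

A threshold keeps ambiguous frames, such as a hand moving between gestures, from triggering the machine; a class with no entry in `COMMANDS` (here a hypothetical "background" class) sends nothing.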

Group Assignment

At Fab Lab BCN, Xavi Dominguez guided us on interface and application development. We spent time with several different platforms and talked about how to connect them to our projects.

We first used a Miro board to identify technologies that we have experience with. We then began to prototype an application with the tools we were familiar with. For the Abundance project, I wanted to think not only about how to display data for users in this iteration of the project, but also about how to track data for a longer-term project that would promote urban farming with aquatic plants through an online platform.

Link to Miro:

https://miro.com/app/board/o9J_lGV-AYI=/

To start working with the tools, we remixed some examples in A-Frame. A-Frame uses JavaScript to render virtual reality scenes in a web browser. I used parts of the Minecraft example to remix the hello-world example, then tweaked the variables to reposition objects and change animations.

Individual assignment

MIT App Inventor

The main goal of this week is to use an application made in MIT App Inventor to connect to a HUZZAH32 over Bluetooth. The HUZZAH32 then sends messages to other boards over asynchronous serial communication, based on button presses in the mobile application.
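When several boards share one serial line, each needs a way to tell whether a message is meant for it. A minimal sketch of one option, assuming a hypothetical line-based format `address:command\n` and a hypothetical button-to-board mapping; the post does not specify the real format, and the actual code would run on the HUZZAH32 and the receiving boards rather than in Python.

```python
from typing import Optional

# Hypothetical mapping from app buttons to (board address, command).
BUTTONS = {
    "button_1": (1, "LED_ON"),
    "button_2": (2, "LED_ON"),
}

def encode_frame(address: int, command: str) -> bytes:
    """Build one ASCII line: decimal address, a colon, the command, a newline."""
    if not 0 <= address <= 255:
        raise ValueError("address must fit in one byte")
    if ":" in command or "\n" in command:
        raise ValueError("command may not contain ':' or a newline")
    return f"{address}:{command}\n".encode("ascii")

def handle_frame(frame: bytes, my_address: int) -> Optional[str]:
    """On a receiving board: return the command if addressed to us, else None."""
    try:
        text = frame.decode("ascii").strip()
        addr_text, command = text.split(":", 1)
        address = int(addr_text)
    except ValueError:  # also covers UnicodeDecodeError
        return None      # malformed frame: ignore it
    return command if address == my_address else None

frame = encode_frame(*BUTTONS["button_2"])
print(frame, handle_frame(frame, 2), handle_frame(frame, 1))
```

Every board reads every line but only acts on its own address, which keeps the sender simple: it writes the same frame to the shared line regardless of which board should respond.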

The process coincides with project development in embedded networking and communication:

Apr 28: networking and communications

I started by following a tutorial I found for making an IoT application that connects to Bluetooth devices. While the app appeared to be built correctly, I was not able to test it on my iPhone or in the Android Emulator on macOS, since the Bluetooth extension only works with Android hardware.

Mongoose OS, Google IoT Core, and Angular

To avoid further platform issues, I resolved to use Node.js to develop a web application that I can host locally. As it happened, the first tutorial I found uses the ESP32 and DHT22. I can likely use it as a base to start development and work through any hardware-related issues once I actually connect my devices.

Link to Tutorial:

https://torbjornzetterlund.com/how-to-setup-google-iot-core-with-bigquery-and-an-iot-sensor/

I started by setting up a local environment for Angular. There are cloud-hosted solutions for this, but I decided it was best to keep working as locally as possible. I updated npm and installed Angular on my system.

Angular – Setting up a local environment:

https://angular.io/guide/setup-local

I ran the CLI command “ng new my-app”, substituting my own application name, and accepted all the defaults. I was first asked whether to add Angular routing, and I then accepted CSS as my stylesheet format. The command created over 20 files, began installing the necessary packages, and initialized a git repository. I wanted to know where that repository was stored: no remote is configured, and the local repository is the new project folder, created as a subfolder of wherever I ran the command. I first launched the server from that subfolder of my root directory, which opened the browser to localhost on port 4200. I closed the browser tab and stopped the process in Terminal with Ctrl+C. I then moved the folder to my Desktop for easy access and restarted the server. Everything worked so far.

I then returned to the IoT tutorial. The next step was to install the Mongoose OS command-line tools. The guide below takes about 12 minutes to complete:

https://mongoose-os.com/docs/mongoose-os/quickstart/setup.md

Before following the guide, I decided it was best to update and upgrade my Homebrew packages. I then added the Mongoose OS tap and installed the mos tool.

This requires a Google account! The next step was to install the Google Cloud command-line tools. I first checked my Python version, then checked my machine's hardware name to select the appropriate version of the bundle. I downloaded the bundle, moved it to my home directory, and ran the install script there. I accepted the defaults, restarted my Terminal shell, and initialized the SDK. I logged in to Google and authenticated the Cloud SDK. The command-line tools then attempted to create a project, and I moved on to the next step before noticing that the tool was still running. On the first attempt, I got an error that my project name already existed, so I added some numbers to it. The next attempt almost completed, but I needed to accept the Terms and Conditions: when I logged in to my Cloud Platform dashboard (https://console.cloud.google.com), a popup asked me to accept them. Once that was done, I attempted to create the project a third time from Terminal, and this was successful. After a couple of browser refreshes, the project appeared online.

The next step is to download the skillshare-IoT-session software from GitHub. I would like to learn more about it, because the repo is hosted by a Greenpeace account; apparently, there is a workshop that goes with it. I cloned it to my Desktop.

After cloning the repo, I returned to the tutorial and selected the project on the Cloud Platform. I had trouble finding the Pub/Sub section in the sidebar, so I used the Cloud Platform search bar to find it. I then added the topic and subscription as suggested. I can return to this step to modify the topic and subscription in later iterations.

The tutorial states that I need to create a registry in IoT Core. This requires enabling billing and answering some survey questions. I was able to get through this step with my personal account, and hopefully the free trial credit will be enough to cover this project. I can now communicate with IoT Core over the MQTT or HTTP protocols.
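Once the registry exists, connecting over MQTT mostly comes down to building the right identifiers. A sketch based on the conventions Google documented for the IoT Core MQTT bridge (broker `mqtt.googleapis.com` on port 8883, the full device path as client ID, and a JWT whose audience is the project ID sent as the password); the project, region, registry, and device names below are hypothetical, and signing the token is left out.

```python
import time
from typing import Dict, Optional, Union

# MQTT bridge endpoint as documented for Cloud IoT Core.
MQTT_HOST = "mqtt.googleapis.com"
MQTT_PORT = 8883

def client_id(project_id: str, region: str, registry_id: str, device_id: str) -> str:
    """Full device path that IoT Core expected as the MQTT client ID."""
    return (f"projects/{project_id}/locations/{region}"
            f"/registries/{registry_id}/devices/{device_id}")

def telemetry_topic(device_id: str) -> str:
    """Topic a device publishes telemetry events (e.g. DHT22 readings) to."""
    return f"/devices/{device_id}/events"

def jwt_claims(project_id: str, lifetime_s: int = 3600,
               now: Optional[int] = None) -> Dict[str, Union[int, str]]:
    """Claims for the JWT sent as the MQTT password; the audience is the project ID.

    Signing (RS256 or ES256 with the device's private key) is not shown here.
    """
    iat = int(time.time()) if now is None else now
    return {"iat": iat, "exp": iat + lifetime_s, "aud": project_id}

# Hypothetical names for illustration only.
print(client_id("my-iot-project-123", "europe-west1", "demo-registry", "esp32-dht22"))
print(telemetry_topic("esp32-dht22"))
```

Because the token expires, a long-running device has to refresh the JWT and reconnect periodically, which is part of the cloud setup overhead described below.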

I spent some time troubleshooting, because I got the error: Local copy in “demo-js” does not exist and fetching is not allowed. I tested the board with the Arduino IDE to check whether there was a problem with the port or the connection. Ultimately, I needed to update mos to the latest version.

At this point, I am up to step 5 of 7 for the mongoose quick start:

https://mongoose-os.com/docs/mongoose-os/quickstart/setup.md

I selected the port and the board in the Mongoose OS tool. I then ran mos build from the tool's browser-based command line and entered the command to register the IoT device. I got an error directing me to set up a service account, and even after that I still got an error. I finally found my solution in the comment below:

https://github.com/kubernetes/kubernetes/issues/30617#issuecomment-239956472

Mongoose OS is a powerful solution for prototyping, but I was still a bit put off by how much setup and how many cloud dependencies were needed just to publish and subscribe to topics on Google IoT Core. Ultimately, I need a solution that runs on a local web server, so I will try another route.

2018 Class Notes

This week is about desktop applications. The assignment is to write an application that interfaces with an input or output device, on the assumption that the data will be read through a serial port.

Hello World began as the shortest program that produces an output. We have been using C to program the microcontroller, and C is compiled. Java compiles to bytecode, which can run on any platform. Android is loosely based on Java, which has been the subject of a sensitive legal dispute. If you like the Arduino IDE, you will like Processing. p5 is JavaScript in the spirit of Processing. For visual, model-based programming, there are LabVIEW, Scratch, and similar environments; App Inventor is also visual data flow. Bash is a standard Unix shell. Python builds to a great extent on APL, and it is well documented. Perl and Ruby are related in spirit as scripting languages; there are tools to compile them, but you can run them through an interpreter. JavaScript started as simple scripting for web pages. Mozilla runs MDN, which is a good resource for documentation, and browsers like Firefox have a whole JavaScript development suite built in. Mods, written by Neil, is also a visual, model-based programming environment.

We have been using the FTDI cable for serial communication. Python has a library (pySerial) for talking to serial ports; import serial gives the program access to it. A browser cannot talk to a serial port, but Node can. python-ftdi and Node ftdi libraries let you talk to individual pins, but that won't be necessary this week. GPIB, VISA, and PyVISA are ways to talk to test equipment. MQTT, XMPP, and IFTTT help you talk to groups of devices. Sockets are how you talk to the Internet: with sockets, you can send messages anywhere on the Internet. For security, browsers cannot open raw sockets; instead, they use WebSockets, which connect to a WebSocket server that can relay messages onward. Node can run a WebSocket server.
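A browser can use WebSockets but not raw sockets because a WebSocket connection starts as an HTTP request that the server must explicitly accept. The core of that opening handshake, as defined in RFC 6455, is small enough to sketch with the Python standard library; a working server (for example one running in Node as a bridge between the serial port and the page) also has to handle message framing, which is not shown.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455, section 1.3.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value a server returns in the handshake.

    The server appends the GUID to the client's Sec-WebSocket-Key, hashes it
    with SHA-1, and base64-encodes the digest.
    """
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")

# The sample key from RFC 6455.
print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```

The browser checks this value before it allows the page to send or receive messages, which is how it confirms that the other end deliberately speaks the WebSocket protocol.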

To store data, you can use flat files. It is perfectly fine to store data in a file and then read it into memory.
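A minimal sketch of the flat-file approach, assuming readings arrive as numbers (for example parsed from serial lines): append each one as a CSV row, then load the whole file into memory when it is needed. The file name and the two-column layout are illustrative choices.

```python
import csv
import os
import tempfile
import time
from typing import List, Optional, Tuple

def append_reading(path: str, value: float, timestamp: Optional[float] = None) -> None:
    """Append one timestamped reading as a CSV row; the file is created if needed."""
    ts = time.time() if timestamp is None else timestamp
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([ts, value])

def load_readings(path: str) -> List[Tuple[float, float]]:
    """Read the whole file back into memory as (timestamp, value) pairs."""
    with open(path, newline="") as f:
        return [(float(ts), float(v)) for ts, v in csv.reader(f)]

path = os.path.join(tempfile.mkdtemp(), "readings.csv")
for t, v in [(0.0, 21.5), (1.0, 21.7)]:
    append_reading(path, v, timestamp=t)
print(load_readings(path))  # [(0.0, 21.5), (1.0, 21.7)]
```

Opening the file in append mode for each reading means a crash loses at most the reading being written, and the file stays readable in any spreadsheet.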

For user interfaces, if we look at the distance interface from input devices week, we see that Python uses Tk, a standard graphics toolkit. wx is another widget library, and Qt is another set of widgets that can be used from Python. HTML forms are an older set of widgets, but they can be called from JavaScript. jQuery is another widget set. Because of the economics and ease of the web, there are many ways to organize multiple JavaScript apps; Meteor is one of many frameworks for JavaScript development.

In terms of graphics, you may want to make visual representations of what is happening. Web standards support multiple graphical elements. Canvas elements in HTML are bitmaps. To talk to web pages directly, you need a WebSocket. The difference between canvas and SVG is that with SVG you create objects on the page that can be manipulated directly, while with canvas you need to redraw frame by frame. Three.js uses WebGL so that JavaScript can talk to the GPU, and the GPU works efficiently with the browser. WebVR is a maturing standard for talking to virtual reality devices, and THREE.VRController is a bridge between Three.js and virtual reality devices. OpenGL is under the hood, but it can also be used from C and Python. For game engines, you can use Unity and Unreal to talk to serial devices.
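The object side of the canvas and SVG contrast can be shown by generating SVG directly: a whole graph of readings is a single `<polyline>` element that the page keeps as an object, so updating it means changing its `points` attribute rather than repainting pixels. A sketch in Python for consistency with the other examples; the size and styling are arbitrary choices.

```python
from typing import Sequence

def readings_to_svg(values: Sequence[float], width: int = 200, height: int = 100) -> str:
    """Render readings as one SVG <polyline>, scaled to fill the drawing area."""
    if len(values) < 2:
        raise ValueError("need at least two readings")
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0             # avoid dividing by zero for flat data
    step = width / (len(values) - 1)    # even horizontal spacing
    points = " ".join(
        # SVG y grows downward, so larger values get smaller y coordinates.
        f"{i * step:.1f},{height - (v - lo) / span * height:.1f}"
        for i, v in enumerate(values)
    )
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
            f'<polyline points="{points}" fill="none" stroke="black"/></svg>')

svg = readings_to_svg([0, 1, 0], width=100, height=50)
print(svg)
```

Saved to a file, the output opens in any browser; a canvas version would instead have to clear and redraw the line on every update.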

For sound, SDL is a standard, and PyGame is a Python binding for SDL. HTML5 is a grab bag of multimedia features; it added media support, such as the Web Audio API.

2018 Class Review

Carolina Portugal did not have any background in programming. Her interface was a basic happy or sad face shown when a button is pressed.

Solomon Embafrash had problems with Python. His interface is detailed in his Final Project documentation.

Rutvij Pathak's main motive was to have an interface for his photogrammetry final project. He was using a commercial Arduino. He started to work in Processing, but ultimately opted for Python.

Alec Mathewson used a Max patch to connect his interface. Max was named for Max Mathews, who worked on digital music and was a mentor to Neil at Bell Labs.

Shefa Jaber built the graphical interface with Processing. In Irbid, they used an Arduino and a breadboard for the electronics while they waited for an order from the Fab Foundation.

Joris Navarro used Xamarin to create a cross-platform mobile app; Xamarin can create native iOS and Android applications. It is also

Jari used Unity to create an interface. He used the Unity Asset Store to build a game level that talks to his Fabduino: when a character in the game world touches a power-up, an LED lights up on the board.

Antonio Garosi used Unity to create a 2D game that talked to a commercial Arduino board and a breadboard.

Marcello used OpenCV to do facial recognition that then controlled a stepper motor.

Hasan used Node, Plotly, and JSONP to create a real-time sine wave graph. He needed to interface it with a microcontroller.