Interface and Application Programming

- Group assignment:

- Compare as many tool options as possible.

- Document your work on the group work page and reflect on your individual page what you learned.

- Individual assignment

Navigation

- A long conversation with ChatGPT

- What didn't work and why

- What worked and how I made it better

Conversation with ChatGPT

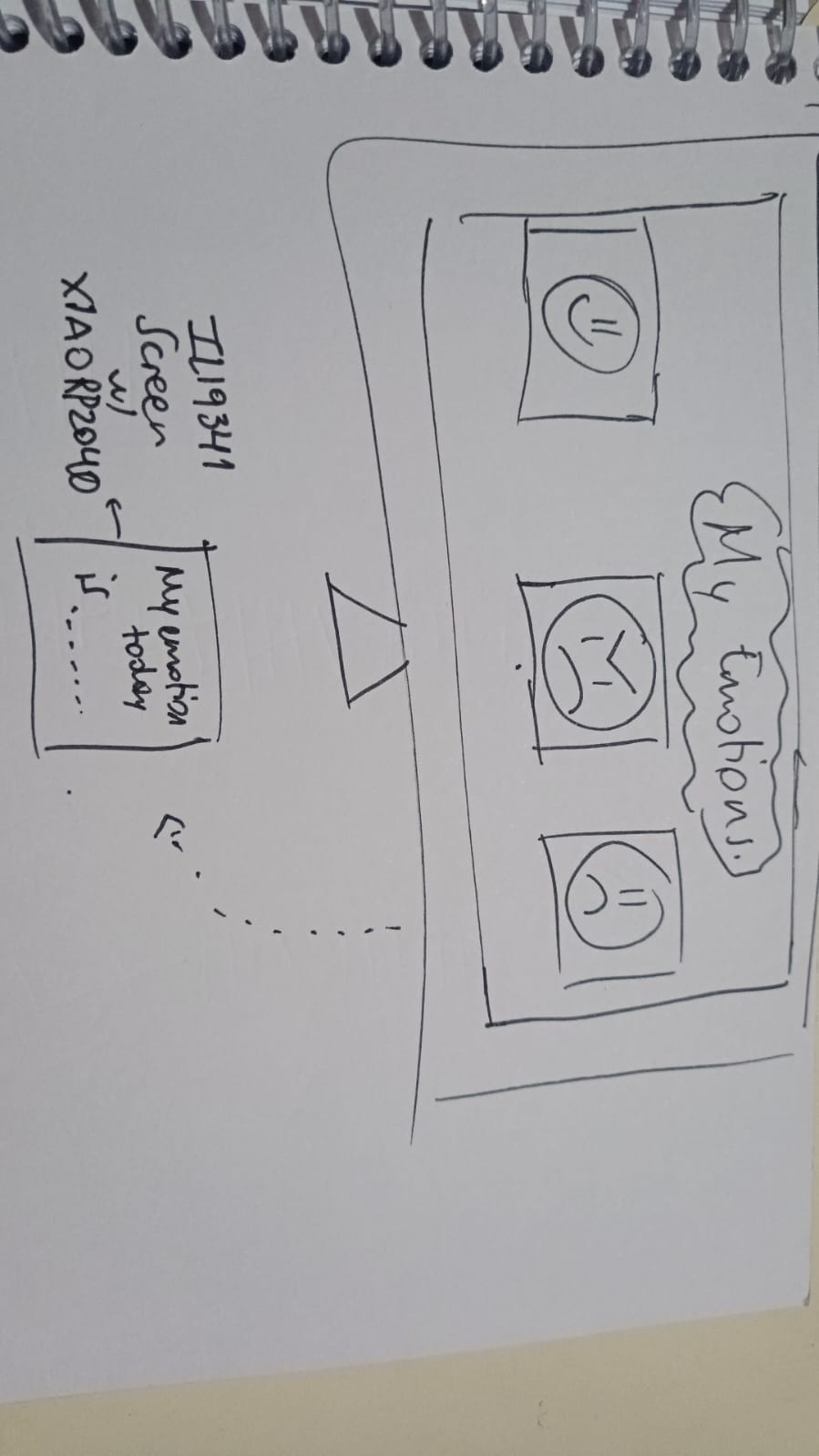

My idea was to make a web page that would connect to the XIAO and let me send commands from the page to the output device.

The main idea was to put buttons on the page, one per emotion: Happy, Angry, Sad. When you press a button on the computer, the signal is sent to the XIAO and the emotion shows up on the output, in this case the ILI9341 screen.

I am not too sure how to create these types of interfaces, so of course I tried to get help from my very loyal friend ChatGPT (you gotta start getting used to sucking up to them and learning how to make the most of them, because they are truly taking over the whole internet, getting scary). It was a pretty long conversation where I asked it to explain how to quickly create the HTML for my app idea, and also how to write the MicroPython code to connect to the XIAO. I will leave the whole conversation as it was.

I will warn you, it gets messy, because my workflow became pretty chaotic when things started to fail one after another. But well, hardware is like this. When it is something you are not completely familiar with, it will throw errors and more errors at you. Thankfully nothing exploded and I got to where I wanted, but not without a big fight along the way.

What didn't work and why

The problem is that the XIAO RP2040 apparently has nothing built in for this kind of communication over the web. It would need a Wi-Fi module, or a second microcontroller to take care of that part. It is a pretty simple board, not prepared for network communication.

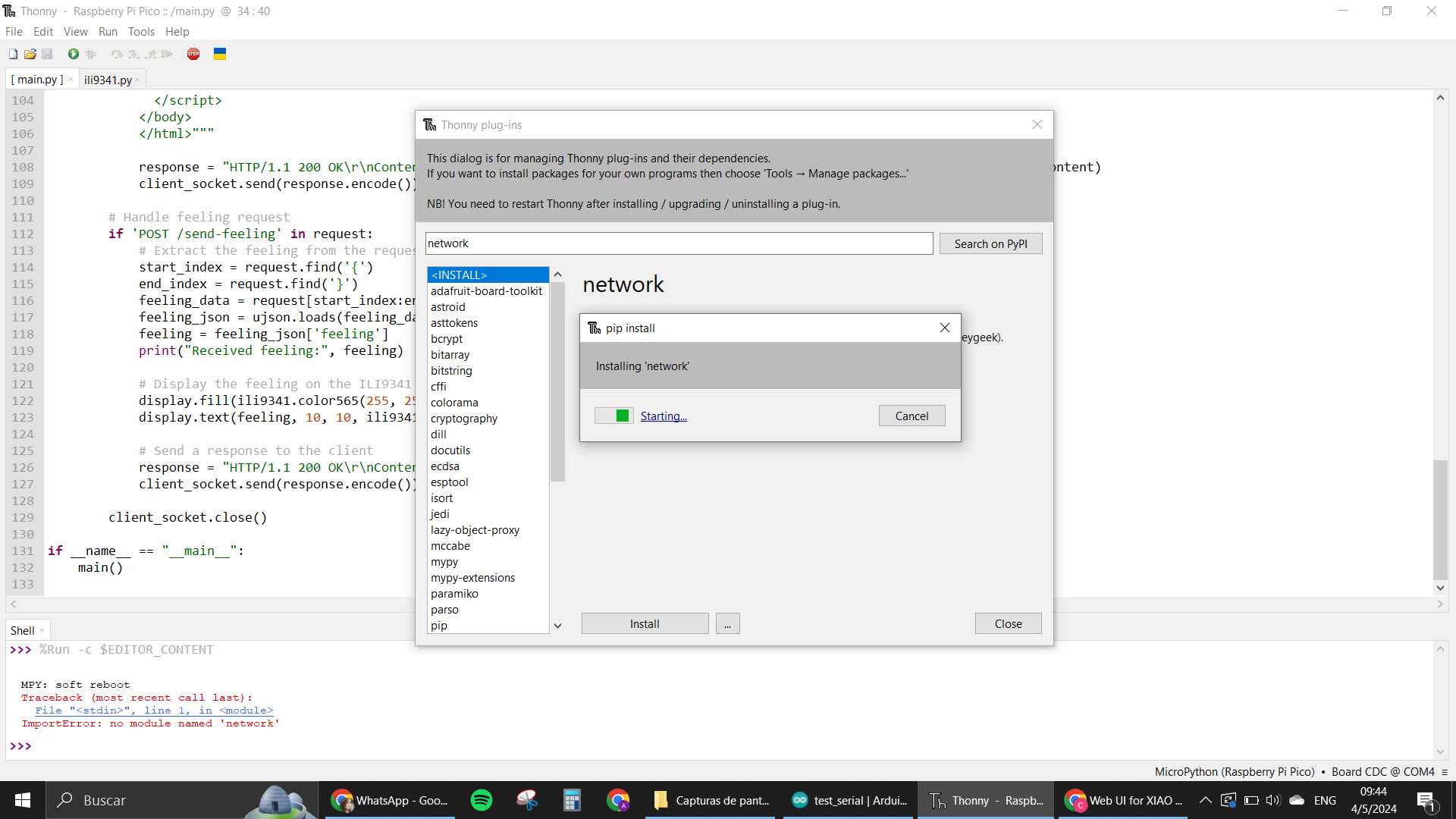

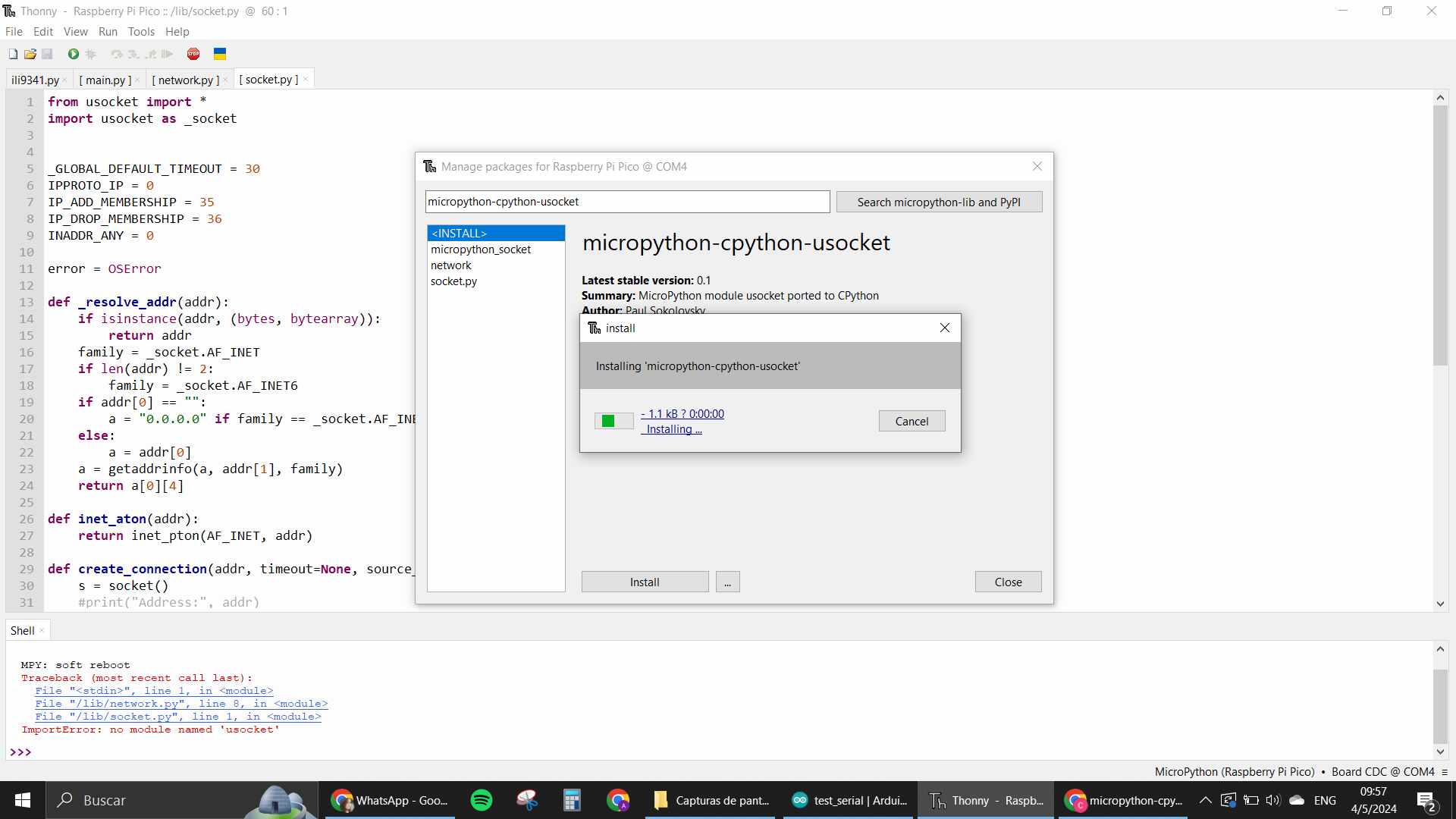

I tried the code that ChatGPT gave me, installed all the libraries, and tested and tested, but it wasn't really doing much.

The code basically needed me to install many libraries on my XIAO to handle the web part. But after installing those dependencies, I still kept getting errors. There was one module called "usocket" that apparently needs to be built into the firmware (the uf2 file), but MicroPython wasn't finding it. I was left pretty confused.

I asked ChatGPT and it confirmed what I said above: the XIAO has nothing on board for working with the internet. That is when I started to understand that the problem was probably my XIAO and the lack of the extra hardware it would need to work the way I wanted.

Also, the whole process in MicroPython is not as smooth, because there isn't much information on how to do this kind of thing for this specific microcontroller, and the firmware we are using to program it is not exactly built for the XIAO: it is the Raspberry Pi Pico version of MicroPython. Most likely there would be fewer bugs if we used the Pico itself, and there are more example applications for it online to go off of.
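A quick way to see whether a module such as "usocket" is really part of the firmware is to try importing it from the REPL. This is only a minimal sketch: `has_module` is a made-up helper name, and the list of module names is just an example to edit.

```python
def has_module(name):
    """Return True if `name` can be imported on this interpreter."""
    try:
        __import__(name)
        return True
    except ImportError:
        return False


# Example module names to probe (an assumption, change them for your firmware).
for name in ("usocket", "socket", "network"):
    print(name, "available" if has_module(name) else "missing")
```

If a module shows up as missing here, installing extra libraries on top will not help, since the module has to come with the firmware build itself.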

Anyway, back to the application: so many problems were coming up that I gave up on the idea of doing it over the web. I just think the ideal board for this kind of application is a microcontroller that already has a Wi-Fi module integrated. Therefore, after failing with the web, I asked for something more local, like an application that would open a window on my computer and connect to the micro via serial.

This seemed way easier, and used a simple Python library named tkinter.

The tkinter program had to run on the computer itself, as a regular Python script, not directly on the XIAO. So I opened Visual Studio Code and wrote the tkinter side there.

I wrote one program in Python for the computer side, which handles the serial connection, and a separate program for the hardware side, still in MicroPython. The hardware was still the XIAO RP2040 and the screen.
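For reference, the computer side of a setup like this can be quite small. The sketch below is not the exact program from the conversation. It assumes pyserial is installed, that the board is on `COM5` at 115200 baud, and that each emotion is sent as one uppercase word followed by a newline. All of those are assumptions to adjust.

```python
PORT = "COM5"      # assumption: replace with your own port
BAUD = 115200      # assumption: must match the baud rate in the firmware
EMOTIONS = ("Happy", "Angry", "Sad")


def encode_command(emotion):
    """Turn a button label into one newline-terminated line of bytes."""
    return (emotion.strip().upper() + "\n").encode("ascii")


def main():
    # Imported here so encode_command can be tried without a display or pyserial.
    import tkinter as tk
    import serial  # pyserial: pip install pyserial

    link = serial.Serial(PORT, BAUD, timeout=1)
    root = tk.Tk()
    root.title("Emotions")

    # One button per emotion, all on the same row.
    for column, emotion in enumerate(EMOTIONS):
        tk.Button(
            root,
            text=emotion,
            width=10,
            # e=emotion freezes the current label for this button
            command=lambda e=emotion: link.write(encode_command(e)),
        ).grid(row=0, column=column, padx=5, pady=5)

    root.mainloop()   # blocks until the window is closed
    link.close()
```

Calling `main()` opens the window. The firmware on the other end then only has to read one line at a time and compare it against the words it knows.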

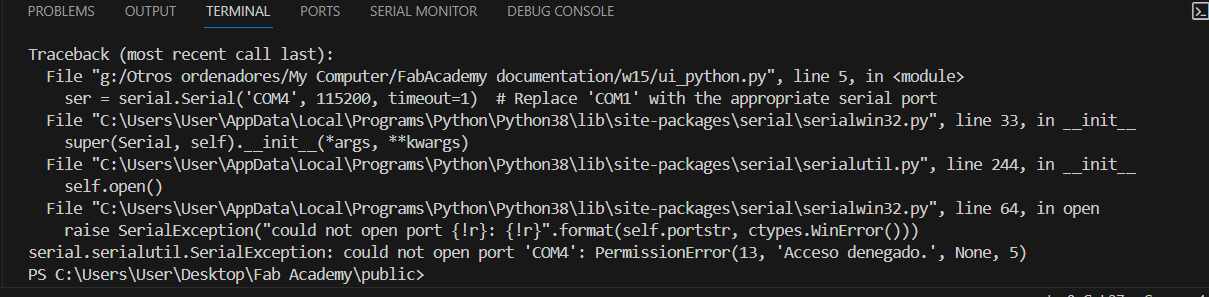

The program was apparently working but wasn't doing anything on the XIAO. There was a constant KeyboardInterrupt that stopped the program altogether, even though I wasn't pressing anything. Another issue was that the port for the communication was busy, which also made it complicated to run the Python UI program.

I kept getting an error that said "access denied" for the COM port. When I closed the Thonny editor and ran the program again, the window popped up and worked as intended. That led me to understand that doing both things at the same time was not going to be possible, because Thonny keeps the port busy all the time (that is how it lets you test your programs directly without even loading them onto the micro). So the only solution I could come up with at the moment was to use another environment.
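A small check like the following makes the "access denied" case easier to spot before the GUI starts. It is a sketch assuming pyserial. `open_port` and its `opener` parameter are made-up names, and the `opener` hook exists only so the function can be tried without a board attached.

```python
def open_port(port, baud=115200, opener=None):
    """Try to open `port`; return (connection, None) or (None, reason)."""
    if opener is None:
        import serial  # pyserial: pip install pyserial
        opener = serial.Serial
    try:
        return opener(port, baud, timeout=1), None
    except OSError as exc:
        # pyserial's SerialException is a subclass of OSError, so a busy
        # port (for example one held open by another editor) lands here.
        return None, "could not open {}: {}".format(port, exc)
```

If the second value comes back with a reason, close whatever else is holding the port (Thonny, a serial monitor) and try again.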

What worked and how I made it better

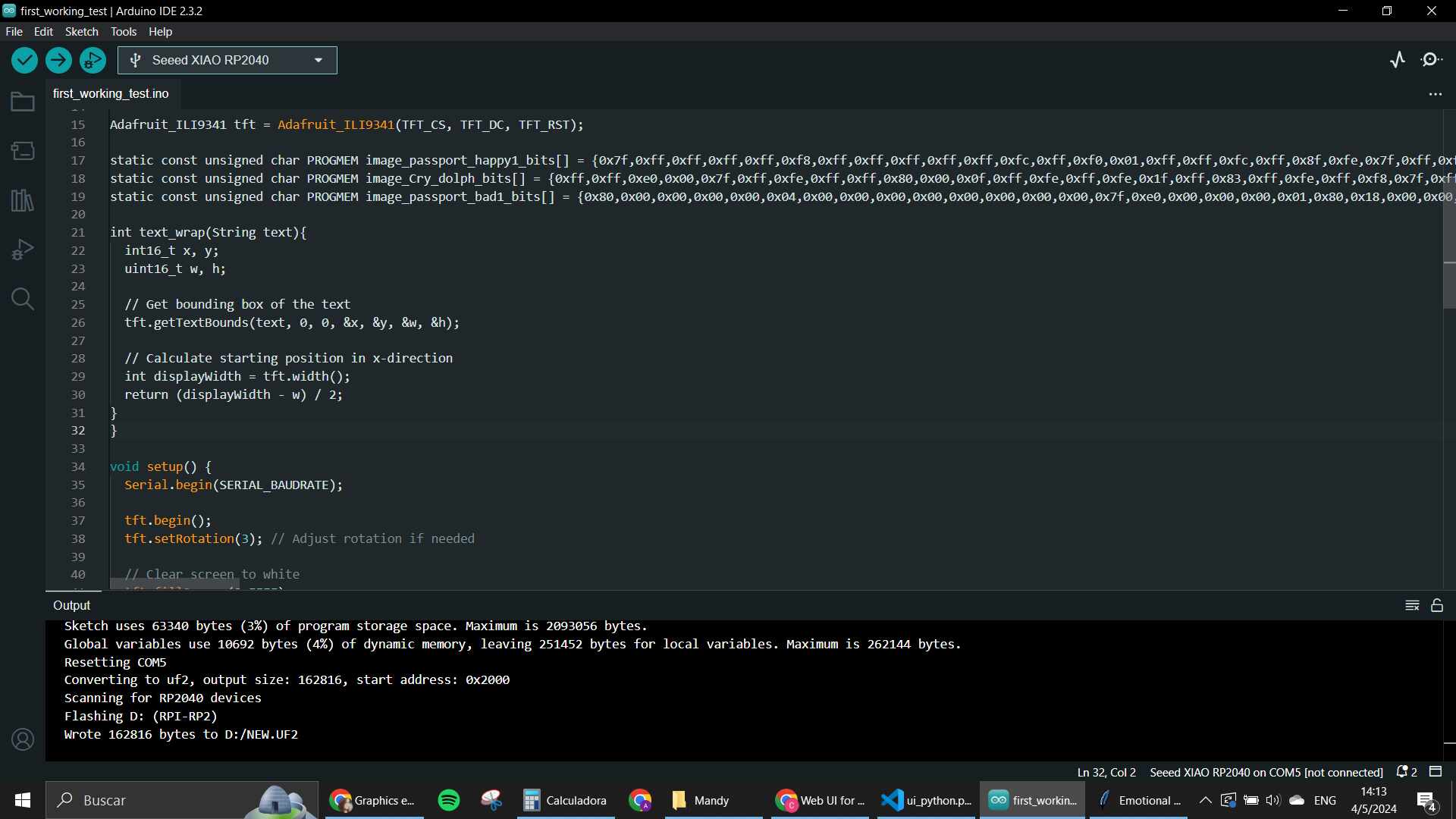

That is when I moved to Arduino. I asked for the same code for the Arduino IDE, to test it and see if something finally worked.

AND IT DID!

The serial communication works so much better in the C++ environment, and I have yet to understand exactly why. But after loading the program and opening the GUI in Python, the program reacted and worked. YAY. FINALLY. Basically, I had to find out which port the XIAO was on and put that in my Python code for the GUI. Then I uploaded the sketch from the Arduino IDE first, since that is the only moment the IDE uses the port, unless I open the Serial Monitor. And since the "serial monitor" for my project was my ILI9341 screen itself, I didn't need to open it. After that, I ran the GUI code and started testing the buttons to see what happened.
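Finding the port can also be done from Python instead of looking it up by hand. This is a sketch assuming pyserial is installed. `find_port` and `list_serial_ports` are made-up helper names, and the keyword to search for depends on how your system describes the board.

```python
def find_port(ports, keyword):
    """Return the first device whose description contains `keyword`."""
    for device, description in ports:
        if keyword.lower() in description.lower():
            return device
    return None


def list_serial_ports():
    """Return (device, description) pairs for every port pyserial can see."""
    from serial.tools import list_ports  # part of pyserial
    return [(p.device, p.description) for p in list_ports.comports()]
```

Printing the result of `list_serial_ports()` with the board unplugged and then plugged in shows which entry belongs to the XIAO.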

I didn't like that the screen wasn't really being cleared after each iteration, and also didn't like the "invalid command" section. So here is where I started customizing the code to my liking.
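The logic behind both changes is simple: clear the screen before drawing a known face, and quietly ignore anything else. The firmware itself was an Arduino sketch, so the following is only a model of that logic, written in Python to match the GUI code. `FakeScreen`, `KNOWN`, and `handle_command` are made-up names, and the command words are an assumption.

```python
class FakeScreen:
    """Stand-in for the real display; it only records what was asked of it."""

    def __init__(self):
        self.calls = []

    def clear(self):
        self.calls.append("clear")

    def draw(self, name):
        self.calls.append("draw " + name)


KNOWN = ("HAPPY", "ANGRY", "SAD")  # assumption: one word per emotion


def handle_command(screen, line):
    """Clear and redraw for a known command; leave the screen alone otherwise."""
    command = line.strip().upper()
    if command not in KNOWN:
        return False      # unknown input is ignored, nothing is printed
    screen.clear()        # wipe the previous face first
    screen.draw(command)
    return True
```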

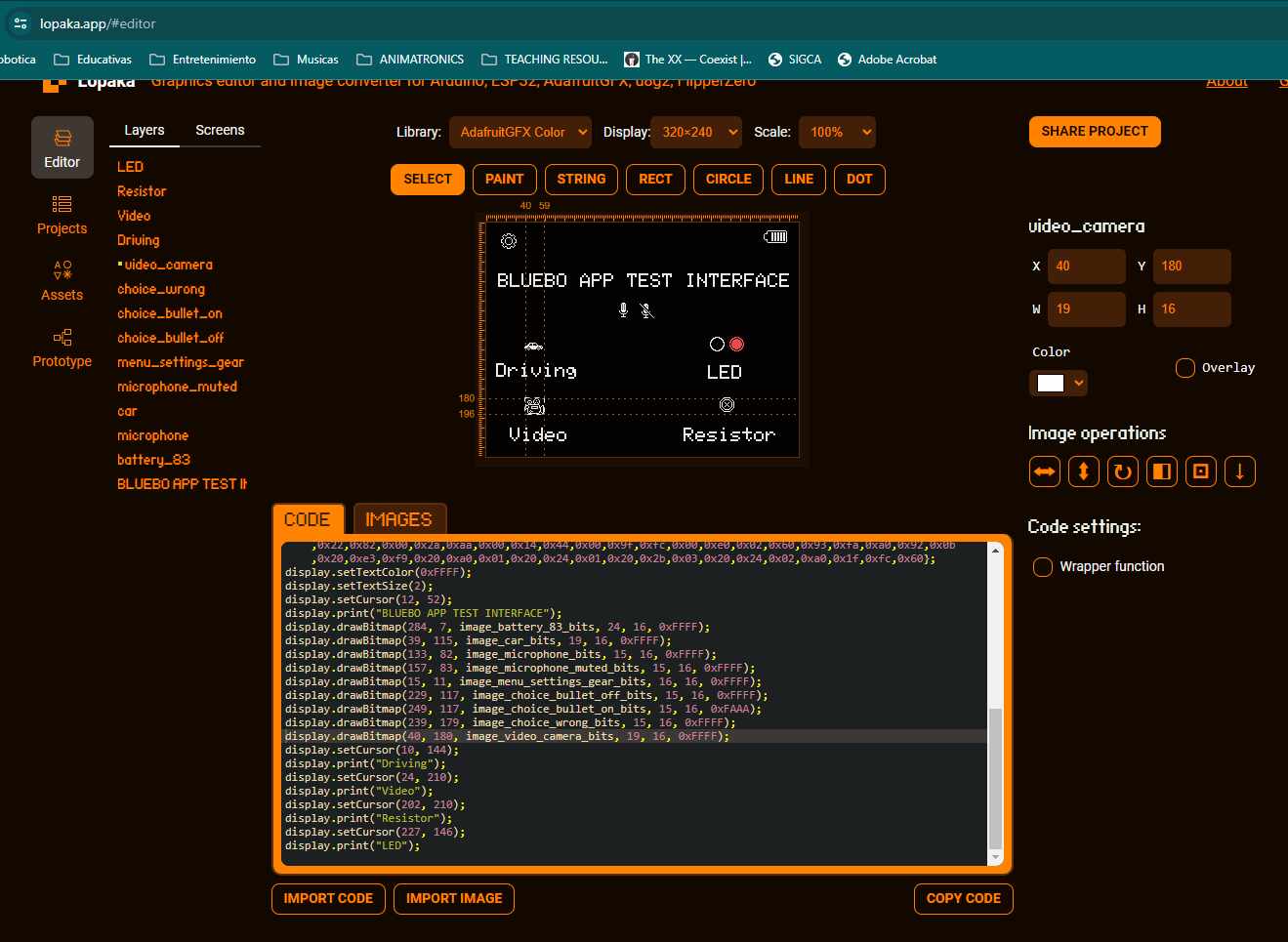

I decided to use the "lopaka" app that another student talked about in the last review we had with Neil. I really liked how easy it makes creating interfaces for OLED screens, and how much there is to customize with pretty common libraries like Adafruit_GFX, which is probably the most used one for OLEDs in general. In this case I used it to test some of the designs available there and see how they would look on my ILI9341 screen.

I feel this app would be great for coding big sections of screens that always look the same, and for reusing them elsewhere too. That is why I wanted to try it out here. I made a kind of mockup of how the one for Bluebo would look; we will see if it gets used.

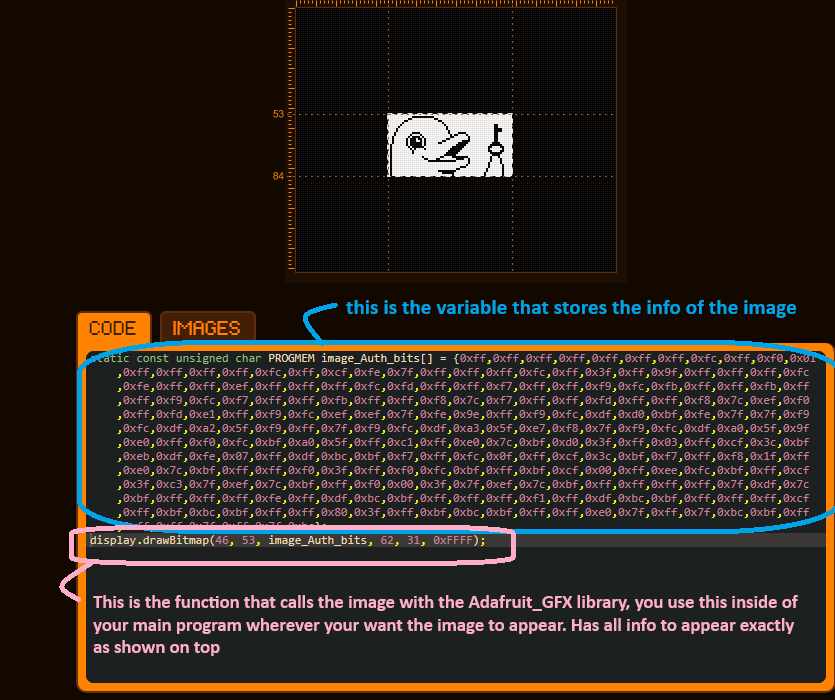

I wanted to use some of the already made images that were available there. Since the emotions I wanted to show were pretty straightforward, I thought I would certainly find something to help me. And lucky me there were these cute dolphin ones that I grabbed to test!

The code for each image comes in 2 parts, since the images are static. The first part is the variable that stores the image as HEX bytes; this goes at the top, where you define all of your variables. The second part is the function call that makes the image show up on the screen. I also changed colors and things like that in the final code that I used on my microcontroller.
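To see what those HEX bytes actually hold, it helps to decode a tiny one by hand. The sketch below assumes the usual 1-bit-per-pixel layout that Adafruit_GFX bitmaps use: each row is padded to whole bytes, and the leftmost pixel is the highest bit. The `SMILE` data is a made-up 8x8 example, not one of the dolphin images.

```python
def bitmap_rows(data, width, height):
    """Decode a 1-bit bitmap into text rows ('#' = pixel on, '.' = off)."""
    bytes_per_row = (width + 7) // 8          # rows are padded to whole bytes
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            byte = data[y * bytes_per_row + x // 8]
            bit = (byte >> (7 - x % 8)) & 1   # leftmost pixel is the top bit
            row += "#" if bit else "."
        rows.append(row)
    return rows


# Made-up 8x8 face, one byte per row.
SMILE = bytes([0x00, 0x24, 0x24, 0x00, 0x42, 0x3C, 0x00, 0x00])

for row in bitmap_rows(SMILE, 8, 8):
    print(row)
```

Running it prints the face as text, which is a handy way to check a bitmap before flashing it to the board.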

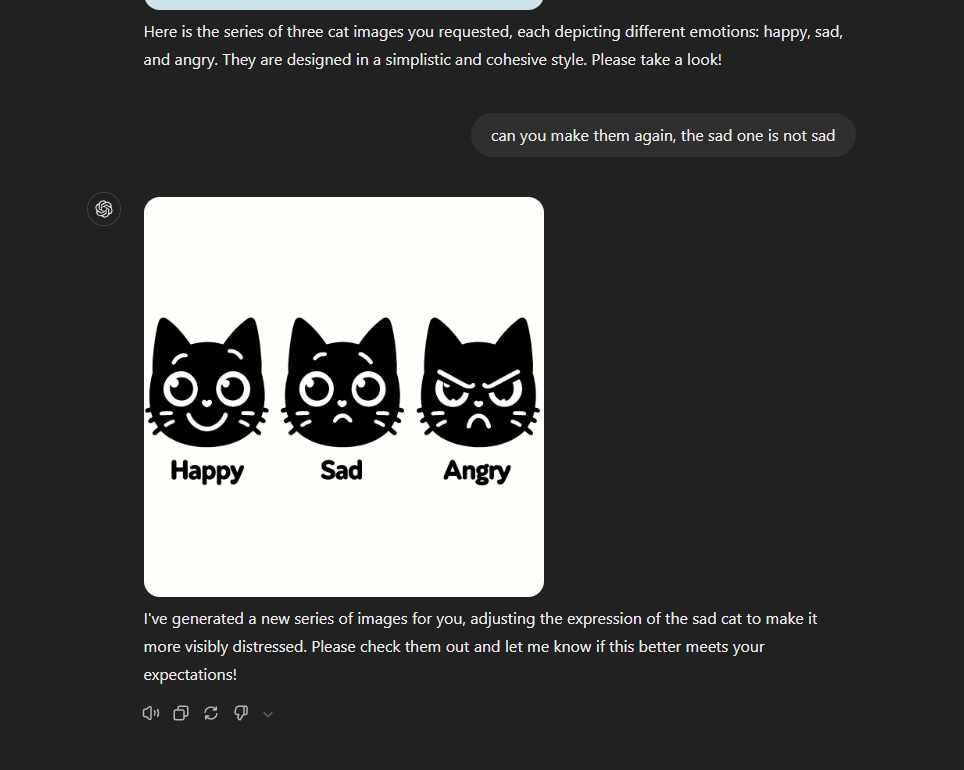

I also generated some cat faces with AI for the interface on the computer. I downloaded them, added them to the Python UI code, tested them, and changed the layout so all of them sit on the same row. All of it working nicely together is down here.