13. Networking and Communications

Group Assignment:

Group Assignment for week 13, Fab Puebla.

First Attempt:

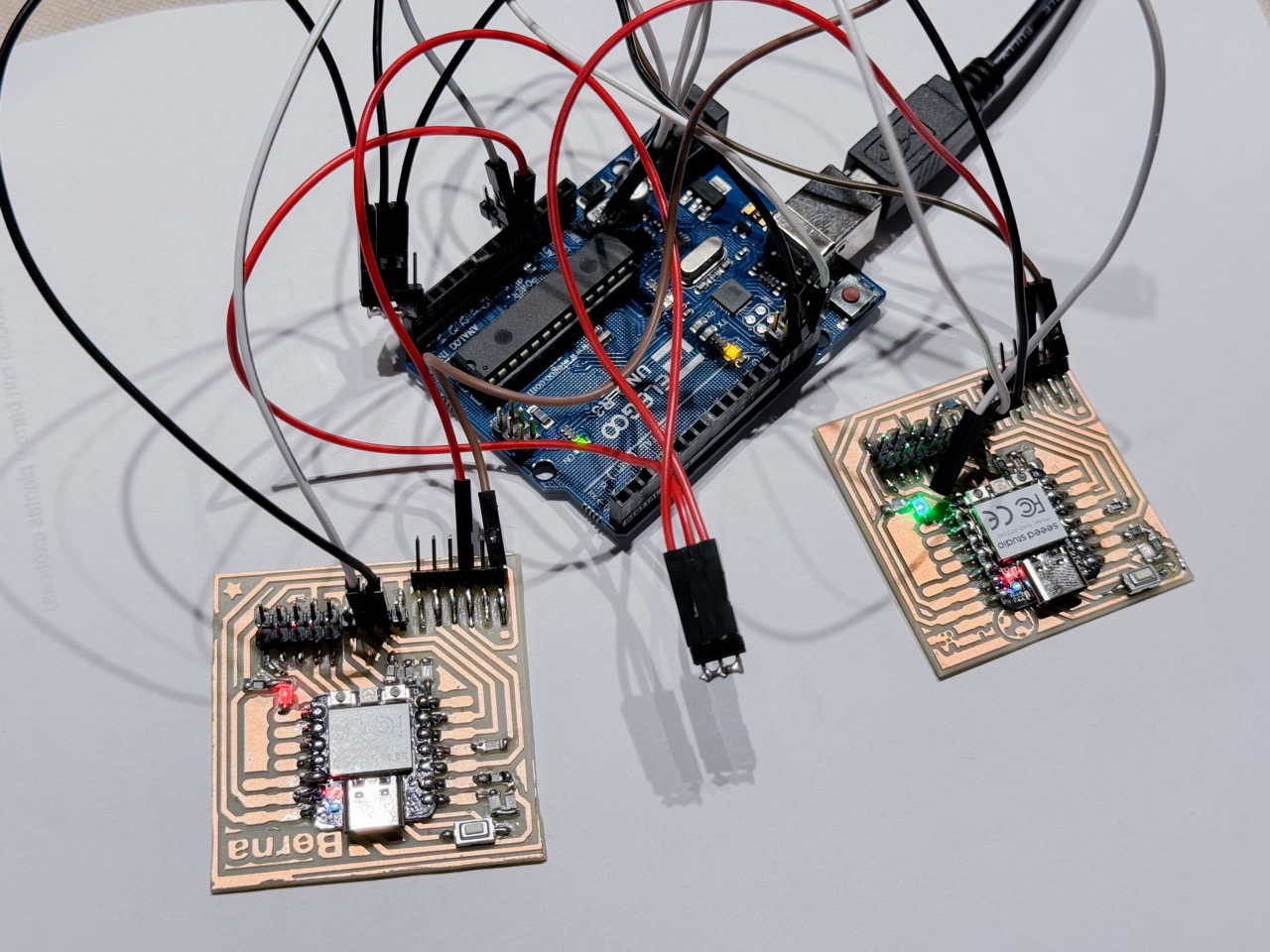

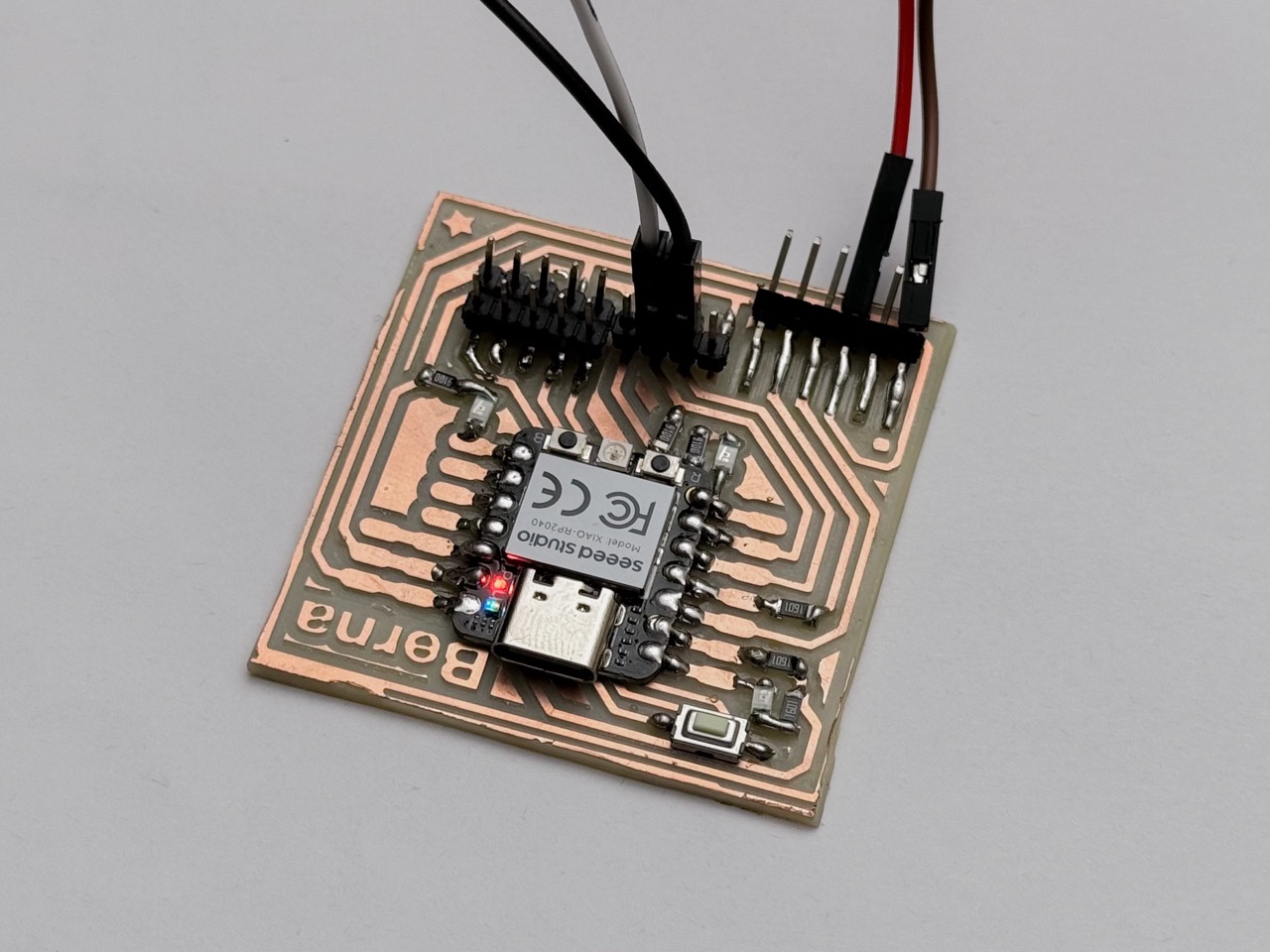

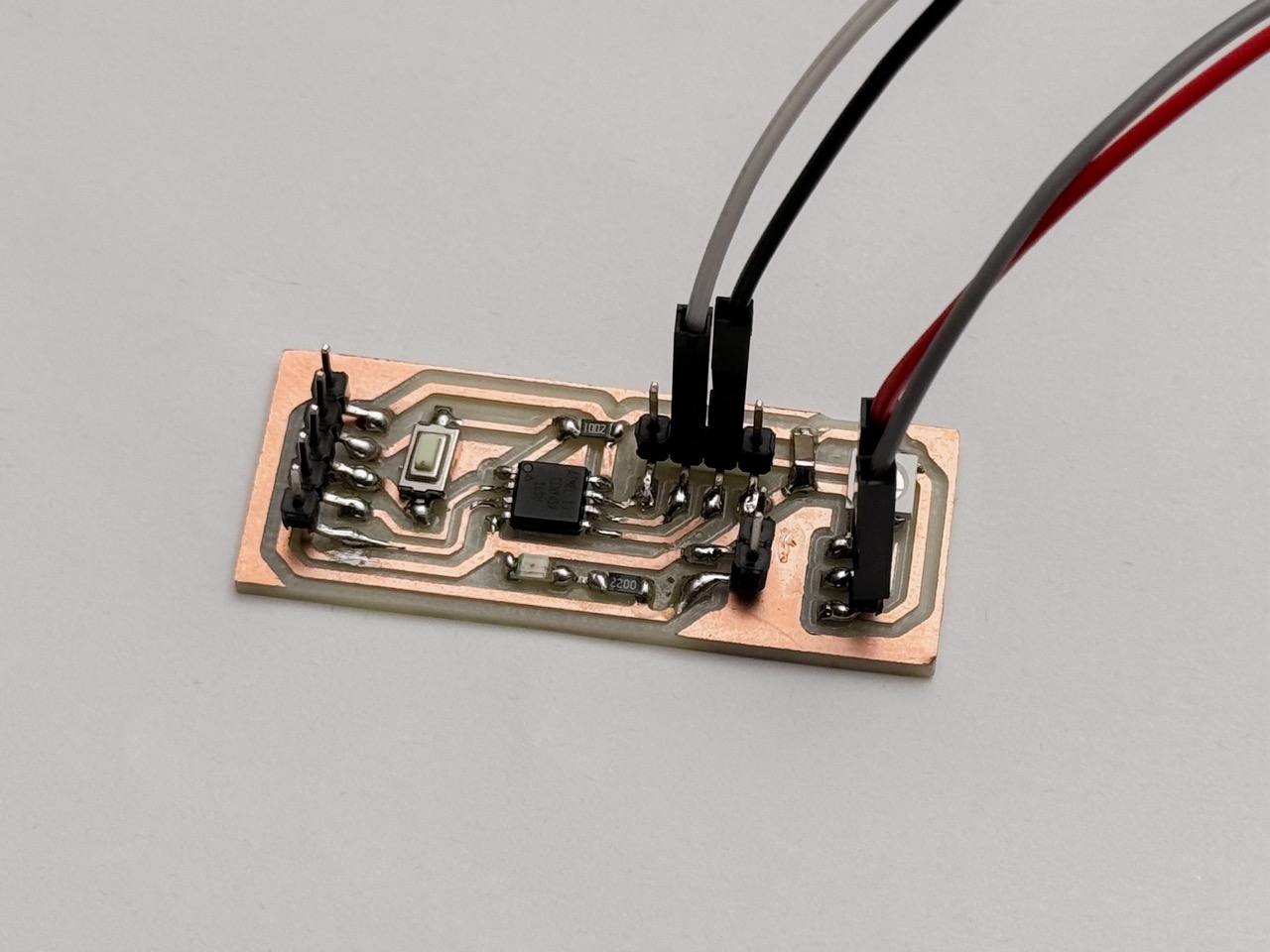

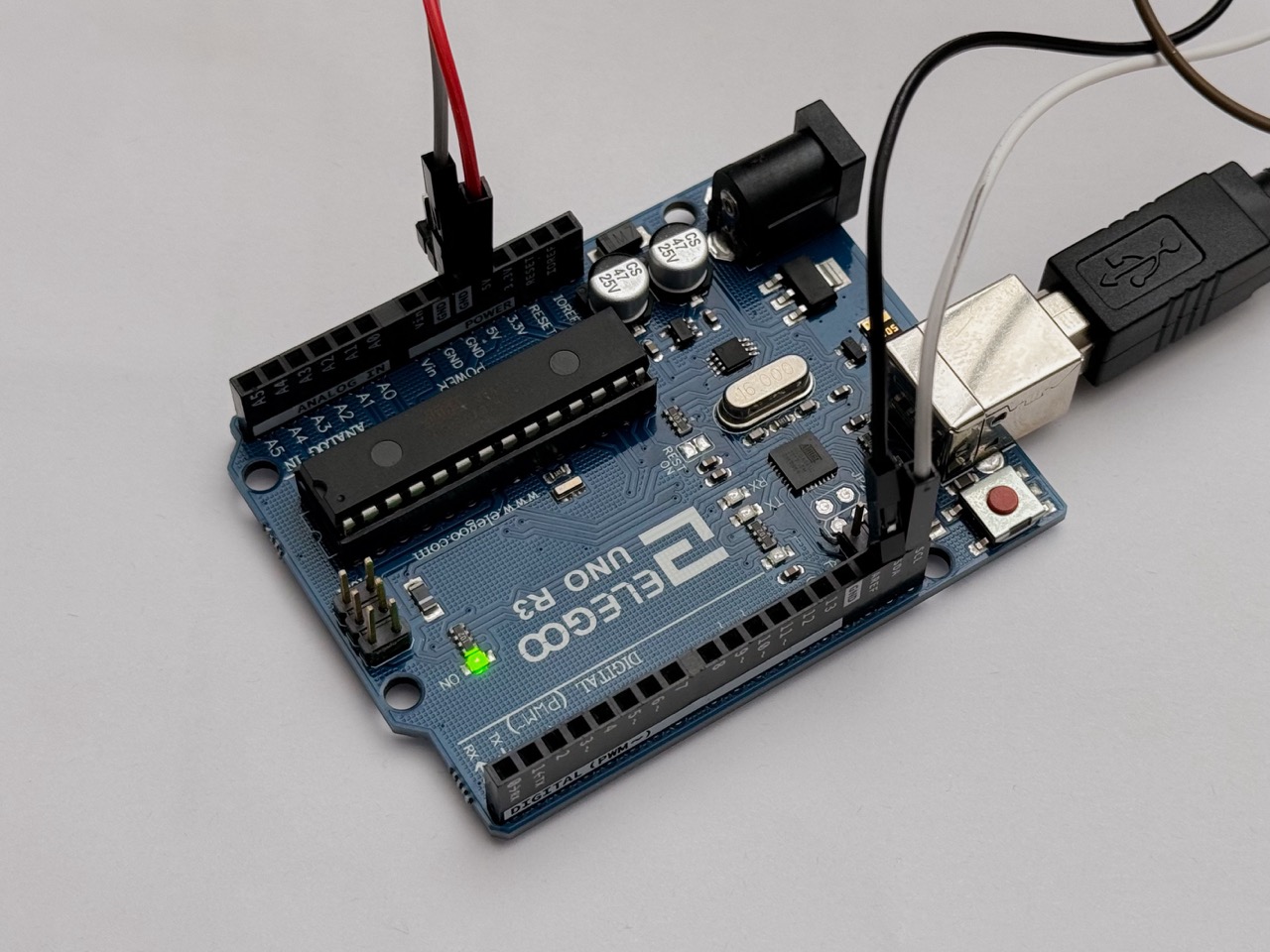

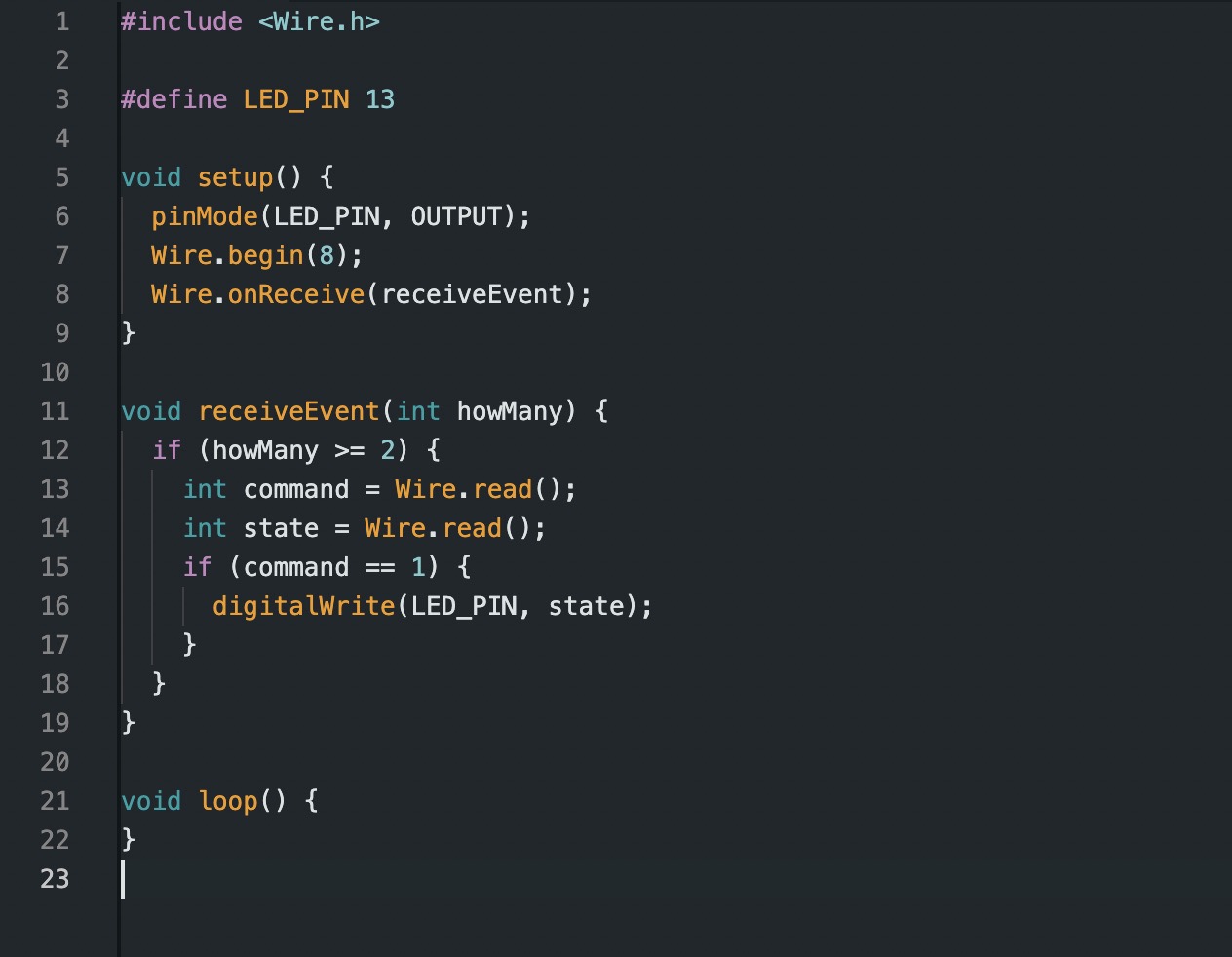

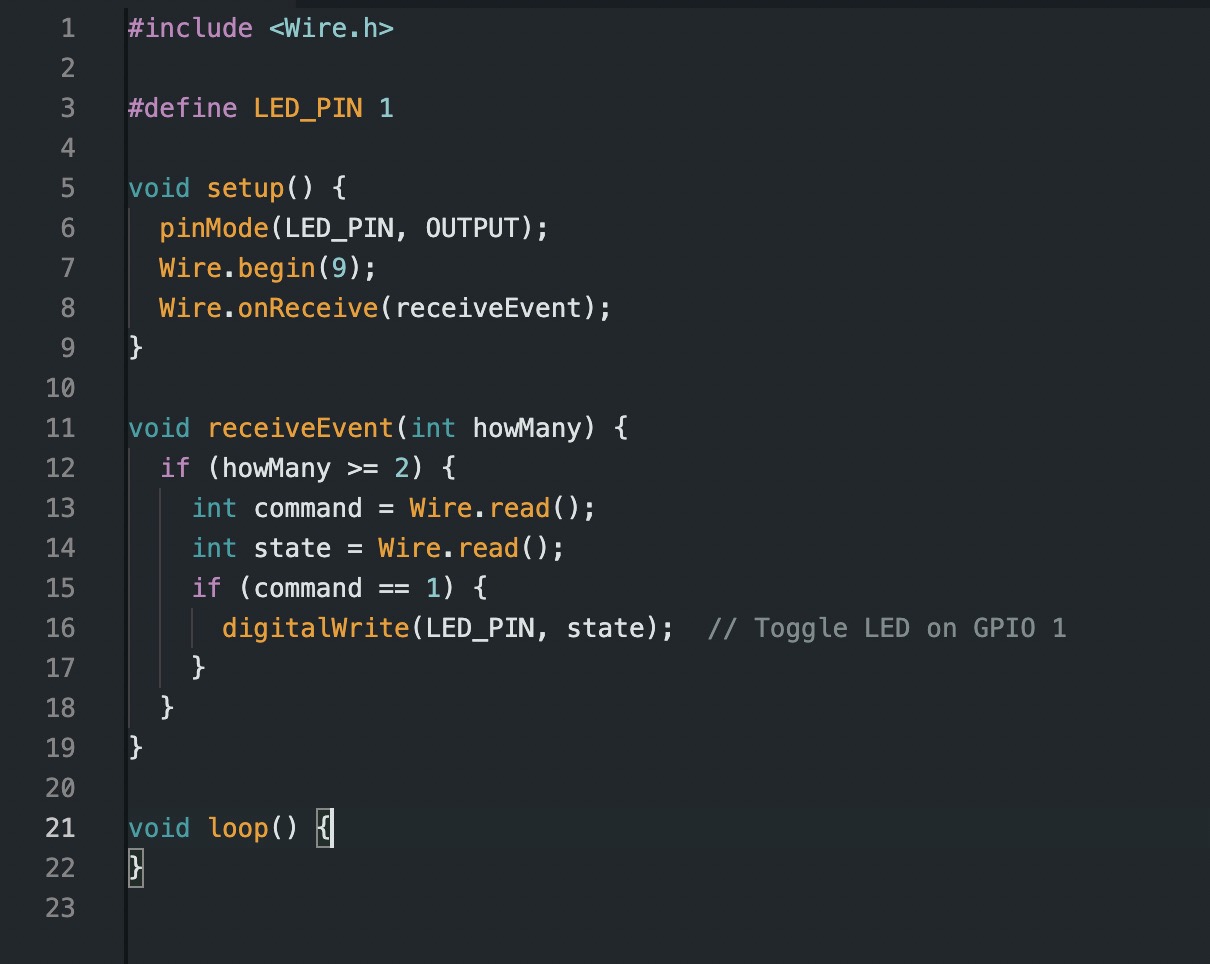

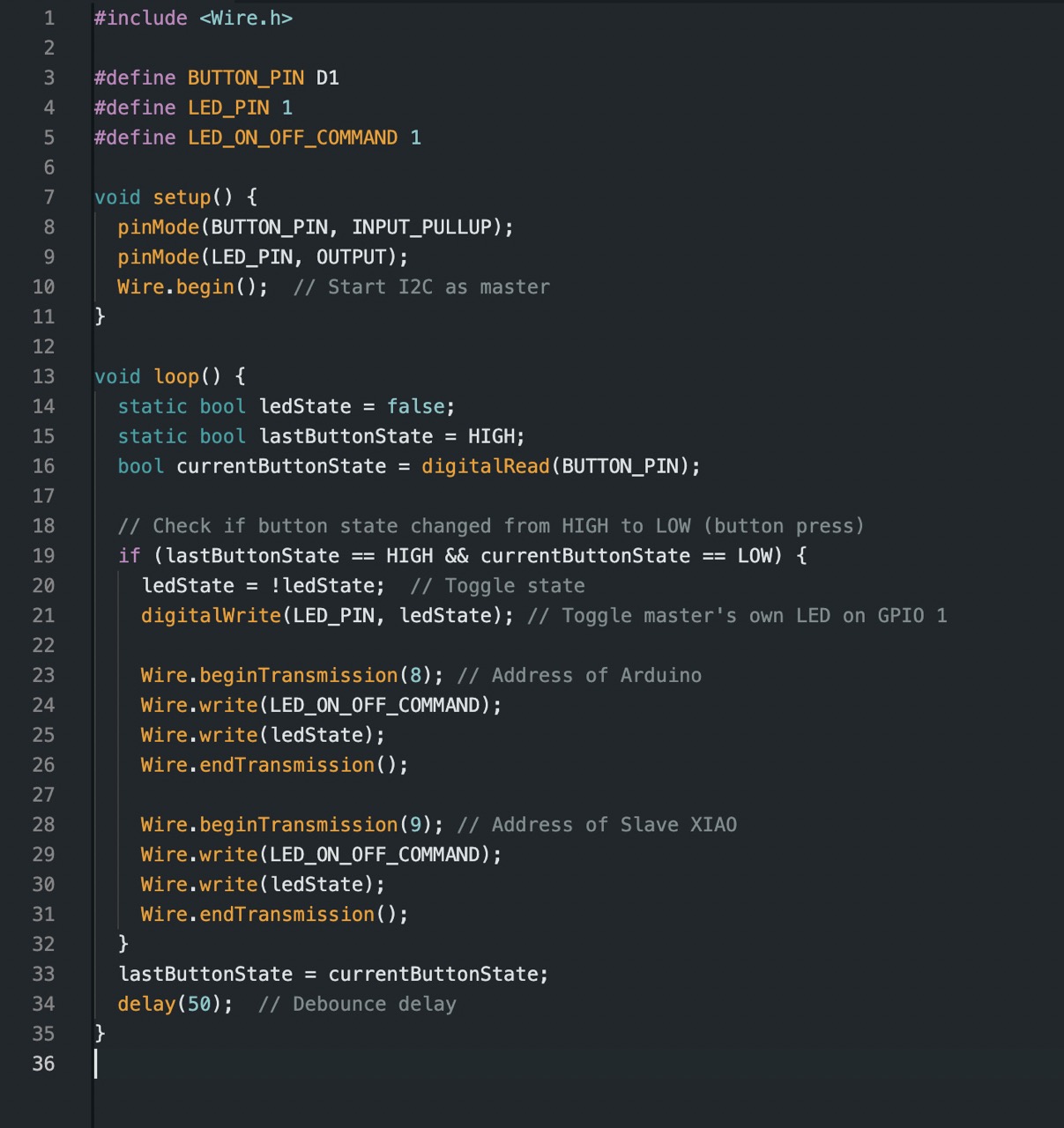

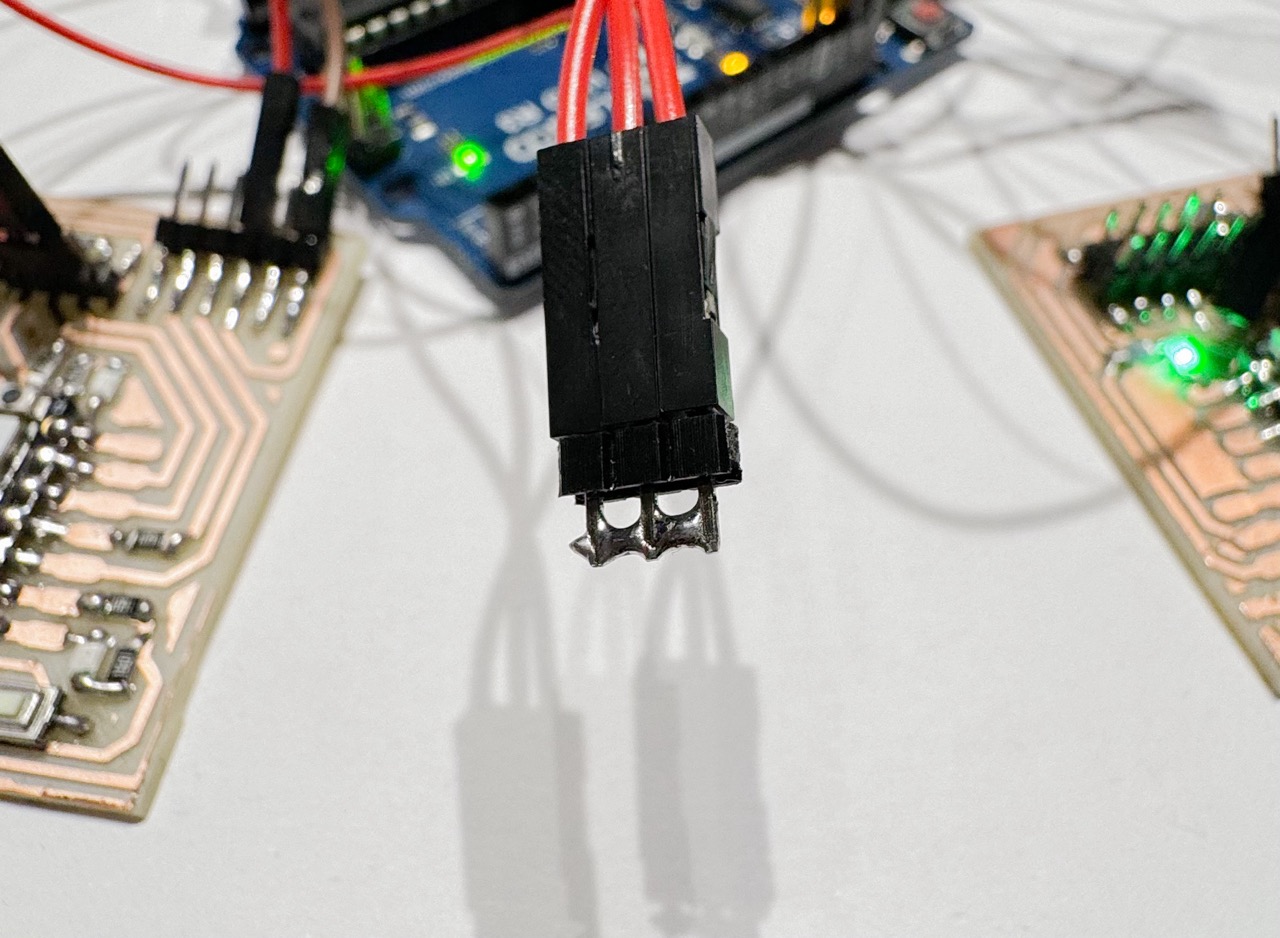

This week I had some difficulties completing my assignment. I initially intended to use a board I designed for an ATtiny45 and two Xiao boards like the ones we made in week 6. Unfortunately, I couldn't manage to connect to the ATtiny this time. It worked just fine in week 7, when I used it to control a NeoPixel, but this time I couldn't connect, and I'm not sure where the problem actually lay. I tried using two Xiaos and an Arduino instead, and that did the trick, so I stuck with that. These are the three boards: one designed specifically for the Xiao, one designed by me for the ATtiny45, and finally a regular Arduino Uno.

We started by learning about the advantages and disadvantages of different protocols:

UART:

UART was the first protocol we looked at, and it seemed very limited. For starters, it can only connect two boards directly, which was a dealbreaker. On top of that, none of my classmates had experience with it, which led me to believe it might be an older protocol, or one that is great for very specific situations but not an especially versatile one.
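To make the point-to-point nature of UART more concrete, here is a minimal sketch in Python of how a single byte travels over the line in the common 8N1 format (one start bit, eight data bits sent least significant bit first, no parity, one stop bit). This is only an illustration and not code that ran on my boards. The function names `uart_frame_8n1`, `uart_decode_8n1` and `frame_time_us` are hypothetical, and 8N1 at 9600 baud is an assumed configuration.

```python
def uart_frame_8n1(byte: int) -> list[int]:
    """Return the 10 line levels for one byte in 8N1 format.

    Assumption: idle line is high (1), so the start bit is 0 and the stop bit is 1.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in the range 0..255")
    data_bits = [(byte >> i) & 1 for i in range(8)]  # least significant bit first
    return [0] + data_bits + [1]  # start bit + data + stop bit


def uart_decode_8n1(bits: list[int]) -> int:
    """Rebuild the byte from a 10-bit frame, rejecting frames with bad start/stop bits."""
    if len(bits) != 10 or bits[0] != 0 or bits[9] != 1:
        raise ValueError("framing error")
    return sum(bit << i for i, bit in enumerate(bits[1:9]))


def frame_time_us(baud: int) -> float:
    """Time in microseconds to send one 10-bit frame at the given baud rate."""
    return 10 * 1_000_000 / baud


if __name__ == "__main__":
    frame = uart_frame_8n1(ord("A"))
    print(frame)
    print(chr(uart_decode_8n1(frame)))
    print(round(frame_time_us(9600), 1), "microseconds per byte at 9600 baud")
```

The frame carries no address, only the data byte itself. That is why a plain UART link works between one sender and one receiver, while a shared bus needs some extra way of saying which board a message is for.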

SPI:


I2C:


Choosing components:

I experimented with several apps for photo scanning and found significant differences among LiDAR, photogrammetry, and NeRFs/Gaussian splatting. I found NeRFs and Gaussian splatting fascinating and look forward to their development over time. Unfortunately, they generate point clouds, which can't directly be used for 3D printing since that requires a mesh. Therefore, I only tested them without considering them for 3D printing.

I then tried both LiDAR and photogrammetry. I believe LiDAR is more precise; however, it has a limitation: it offers lower resolution than photogrammetry for close-up objects. Thus, it is well-suited for scanning large objects like rooms and cars but not ideal for smaller, more detailed objects. I observed the infrared pattern of LiDAR on the iPhone and noted that the dots are quite large and sparse, so I decided to opt for photogrammetry. I aimed to use only free software and was interested in trying AliceVision.org since it's open-source and comprehensive, but it only runs on Windows, which was a limitation for me. Consequently, I ended up using my iPhone and an app called KIRI Engine. It's not entirely free but offers a trial period, and I managed to complete a few scans.

Learning how to use KiCad:

The process of photo scanning was relatively straightforward. However, since the models are processed in the cloud and I was using the free version, obtaining the results took some time. I began by scanning a plant pot outdoors; unfortunately, it scanned the entire garden as well as the plant, resulting in relatively poor resolution for the plant's mesh and a subpar outcome. The next attempt was indoors, using two photography lights aimed at the ceiling to provide very even and soft lighting, which led to a much better result.

I started by printing a piece with a gap that was too small, so the two parts fused together and I broke the piece trying to separate them. For the second version I widened the gap and also made the base a little shorter and thinner, since I was just wasting material and print time. The second one printed faster and had a larger gap, but still not large enough. I printed a third, but now the gap was too large and the piece dislodged mid-print, making that print unusable too. The fourth finally worked, and it worked great: it took little force to separate the two parts, and now it moves smoothly.

Then I got ambitious and tried to print two stacked on top of each other. Unfortunately, I couldn't find a gap that would let the parts separate afterwards without coming loose mid-print, so I ended up with lots of single joints with rough ends. I learned a lot by printing several pieces with slightly different dimensions, and I want to try more prints in the future when I have more time. I hadn't anticipated how much time I would lose to failed prints, or how inefficient it is to print at home while spending most of the day at school. Most of these prints took a couple of hours, and many of them failed. I believe I also have a problem with my filament.

Arduino Neopixel tutorial for beginners

After sanding, this was the final result:

The sanded piece looked more finished and slightly more curved, but nowhere near what I wanted to achieve, so I decided to use my 3D model to make a render of how it was supposed to come out. I used an agglomerate texture to make it look like the real product. I also used the same texture for the surface the table was sitting on, but increased its scale to show it off in the render.

I tried adding a pane of glass to the top in the render but had issues with shadows: the glass absorbed too much of the light and looked like tinted glass. I ditched the idea for now, but I will look into it again since I know I'll need glass in future renders.