Final Project

Week1 - Initial Proposal

"PoTone" (トーン鍋)

What's this?

Just as people cook and eat nabe (鍋, Japanese hot pot) together, players can play rhythm sequences (or other sound patterns) simply by putting things on the surface of the instrument.

Why make it?

Electronic musical devices let a few players (or even none) produce complex sounds. However, many instruments demand intricate handling that is hard for beginners and children. Arrays of buttons, knobs, lines, and pads are great for varied expression, but I would argue they often come from the manufacturer's self-satisfaction. Also, some high-end (expensive) devices and analog synthesizers are too big to carry around. So I want to make an electronic musical device that meets the following goals.

- Play easily: simple interface

- Play with anyone: multiple players

- Play anywhere: portable

Who can use this?

Anyone who wants to play music, including kids!

How does it work?

- For each interval:

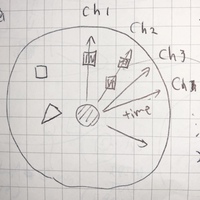

- (Input and Processing) Take a very low-resolution photo that captures the shapes of the objects on top of the device.

- (Conversion) Convert the image pattern into a sound pattern for each track.

- (Play) Repeat the sound pattern.

When the image changes, the sound pattern changes with it. You can tweak the sound sequence by turning the volume knob, turning the tempo knob, and putting objects on the surface.

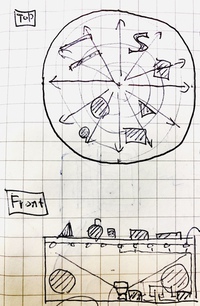

- Like slices of a pizza, each angular sector of the image is assigned to a MIDI channel.

- The radius of the circular image on the tabletop is assigned to the time sequence. The instrument plays the sequence repeatedly at each interval.
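The mapping described above (angle to track, radius to time step) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the final implementation: the function name `image_to_pattern`, the track and step counts, the brightness threshold, and the rule that dark pixels mean "an object is here" are all hypothetical choices.

```python
import math

def image_to_pattern(image, n_tracks=4, n_steps=8, threshold=128):
    """Convert a square grayscale image (list of rows, values 0-255)
    into a step pattern where pattern[track][step] is True when an
    object covers that angular sector (track) and radial ring (step).
    """
    size = len(image)
    center = (size - 1) / 2.0
    max_radius = size / 2.0
    pattern = [[False] * n_steps for _ in range(n_tracks)]
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            # Assumption: bright pixels are the empty surface, dark pixels are objects.
            if value >= threshold:
                continue
            dx, dy = x - center, y - center
            radius = math.hypot(dx, dy)
            if radius >= max_radius:
                continue  # outside the circular playing area
            # Angle in [0, 2*pi); image rows grow downward, so it runs clockwise on screen.
            angle = math.atan2(dy, dx) % (2 * math.pi)
            track = min(int(angle / (2 * math.pi) * n_tracks), n_tracks - 1)
            step = min(int(radius / max_radius * n_steps), n_steps - 1)
            pattern[track][step] = True
    return pattern
```

With an 8x8 image, for example, a dark pixel near the right edge lands in track 0 at the last step, while a dark pixel just below and left of the center lands in track 1 at an early step.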

Input

- camera module

- potentiometers (for volume and tempo)
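As a rough sketch of how the two potentiometer readings could be turned into tempo and volume: the code below assumes a 10-bit analog reading (0-1023, as on an Arduino UNO), a hypothetical tempo range of 60-180 BPM, and four steps per beat. None of these values are fixed by the proposal.

```python
ADC_MAX = 1023  # assumption: 10-bit analog-to-digital converter

def scale(reading, out_min, out_max, adc_max=ADC_MAX):
    """Linearly map a clamped ADC reading onto [out_min, out_max]."""
    reading = max(0, min(adc_max, reading))
    return out_min + (out_max - out_min) * reading / adc_max

def reading_to_bpm(reading, min_bpm=60, max_bpm=180):
    """Tempo knob: the BPM range is a hypothetical choice."""
    return scale(reading, min_bpm, max_bpm)

def reading_to_volume(reading):
    """Volume knob: MIDI data values are 7-bit, so 0-127."""
    return round(scale(reading, 0, 127))

def step_seconds(bpm, steps_per_beat=4):
    """Duration of one sequence step at the given tempo."""
    return 60.0 / bpm / steps_per_beat
```

At 120 BPM with four steps per beat, each step lasts 0.125 seconds.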

Image processing

- Each angular area of the circle is pre-assigned to a "track" of sound.

- From the midpoint to the outer edge of the circle, the image is analyzed and translated into a sound pattern.

Output

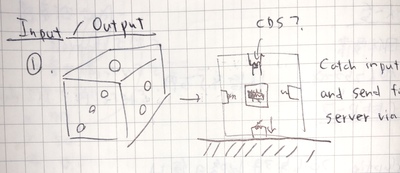

- MIDI out (USB MIDI): connects to an external DAW and sends MIDI signals to it.

- LED lights for tracking the sequence visually

- (Optional?) Audio out. In this case, the device needs an internal sound source; we might be able to use Mozzi, a sound synthesis library for Arduino.
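For the MIDI output, each active cell of the pattern would become a Note On message. The sketch below only builds the raw 3-byte messages defined by the MIDI specification; the helper names, the fixed note number 60, and the rule "track index equals MIDI channel" are assumptions for illustration. Actually sending the bytes over USB MIDI is left out.

```python
NOTE_ON = 0x90   # status byte for Note On, channel in the low 4 bits
NOTE_OFF = 0x80  # status byte for Note Off, channel in the low 4 bits

def note_on(channel, note, velocity=100):
    """Build a Note On message; channel is 0-15, note and velocity are 0-127."""
    return bytes([NOTE_ON | (channel & 0x0F), note & 0x7F, velocity & 0x7F])

def note_off(channel, note):
    """Build a Note Off message with release velocity 0."""
    return bytes([NOTE_OFF | (channel & 0x0F), note & 0x7F, 0])

def messages_for_step(pattern, step, note=60):
    """Return Note On messages for every track that is active at this step.

    Assumption: track index i is sent on MIDI channel i (shown as channel i + 1 in most DAWs).
    """
    return [note_on(track, note)
            for track, steps in enumerate(pattern)
            if steps[step]]
```

Using one channel per track lets the DAW route each "pizza slice" to a different instrument.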

Brainstorming

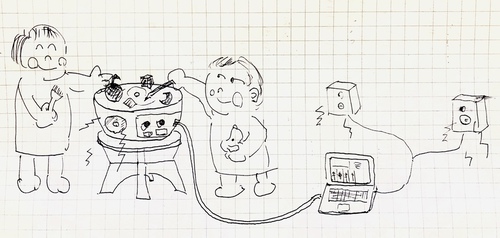

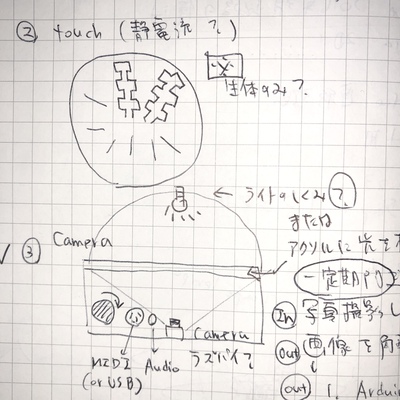

At first, I came up with the idea of embedding a sensor in each object and having it communicate with a server (Image: 1). A CdS cell (light sensor) or an accelerometer on the object side could detect the light level or the orientation of the object. However, this would restrict which objects can be played and increase the complexity of communication. So I moved to a plan in which the instrument itself detects what is on its top surface.

To detect how an object is placed on top of the instrument, one idea is a capacitive step-response sensor (Image: 2). Judging from the multitouch example introduced in a past Fab Academy Input Devices week, it looks very powerful and can catch slight changes in step response.

Although I would like to try this sensor during Input Devices week if possible, capacitive sensing requires something like bioelectric potential or water. So this also restricts the user experience.

That's why I'm going with the idea of using a camera as the input device (Image: 3) and using the processed image as the input information.

References - great ideas and implementations from the past

- Sound generation

- Mozzi is an audio synthesis library for Arduino (it is not MIDI, but it can generate audio from an Arduino pin). I ran some experiments with a breadboard and an Arduino UNO.

- Sonic Pi can be used for command-line-based performances such as live coding.

- Summary of MIDI messages by midi.org

- A list of Web Audio MIDI, browser-based MIDI interfaces

- Implementations of audio devices and products

- This YouTube video explains how to turn an Arduino device into a USB MIDI device by updating the firmware using the "Arcore" library.

- A YouTube video of a DIY Arduino MIDI controller project explains how it works and how to make it.

- James Fletcher (FA2014) made an ATtiny44-based modular sound device.

- Fiore Basile (FA2014) made a tiny MIDI device as the artifact of "Input Devices" week.

- Xiaofei Liu (FA2019) made a disc player that can translate graphics into music!

- Soft MIDI Controller by Deepti Dut (FA2017) explains the coordination via Device - loop MIDI - SerialMIDI - DAW (Ableton).

- Reactable (YouTube video) is an instrument where you place objects to make sounds in a more graphical and complex way.

What's "Nabe(鍋)"?

Hot pot ("Nabe") is a favorite dish of Japanese families, especially in winter.

Nabe is quite straightforward to cook: you put assorted, cut-up ingredients such as fish, meat, vegetables, and processed foods like tofu into a single pot. The pot sits on a portable gas cartridge stove on the table, and everything cooks together over a long time. The taste of nabe varies widely between families and regions. Cooking in a seasoned broth is popular; another style uses plain boiled water (eaten with ponzu, a citrus-flavored soy sauce). Several people eat together from the same pot.