Project Overview

Planning

For my final project I've decided to go with my advisor's idea of building something for the J-003 software engineering classroom, known as "Rafa's Cave" in honor of Rafael Perez, our beloved teacher. This classroom is like a "hub" for all of us software engineering students at Ibero Puebla. Naturally, being a software engineering student, I spend most of my free time in The Cave.

After some thought, I came up with the idea of building a "help desk robot head" that sits on Rafa's desk. This robot will work like a simplified "Alexa" virtual assistant, running voice commands to solve our biggest problem in Rafa's Cave: needing Rafa when he is not there.

The idea is to give this robot a voicemail function: it receives messages from students and plays them back to Rafa when prompted. Other voice commands, like greetings or light control, may be added as time allows.
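
To make the voicemail idea a bit more concrete, here is a minimal Python sketch of the message store, written as a concept only and not as the final firmware. The names `VoiceMessage`, `Mailbox`, `leave_message` and `play_all`, as well as the idea of storing each recording as an audio file, are assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class VoiceMessage:
    sender: str      # hypothetical: name the student gives when recording
    audio_file: str  # hypothetical: path to the recorded clip


class Mailbox:
    """First-in, first-out store for messages left while Rafa is away."""

    def __init__(self):
        self._pending = deque()

    def leave_message(self, sender, audio_file):
        """Queue a new message from a student."""
        self._pending.append(VoiceMessage(sender, audio_file))

    def pending_count(self):
        """Number of messages Rafa has not heard yet."""
        return len(self._pending)

    def play_all(self):
        """Return the messages in the order they were left and empty the mailbox."""
        played = list(self._pending)
        self._pending.clear()
        return played
```

A queue keeps the oldest message first, which matches how Rafa would expect to hear them when he asks for his messages.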

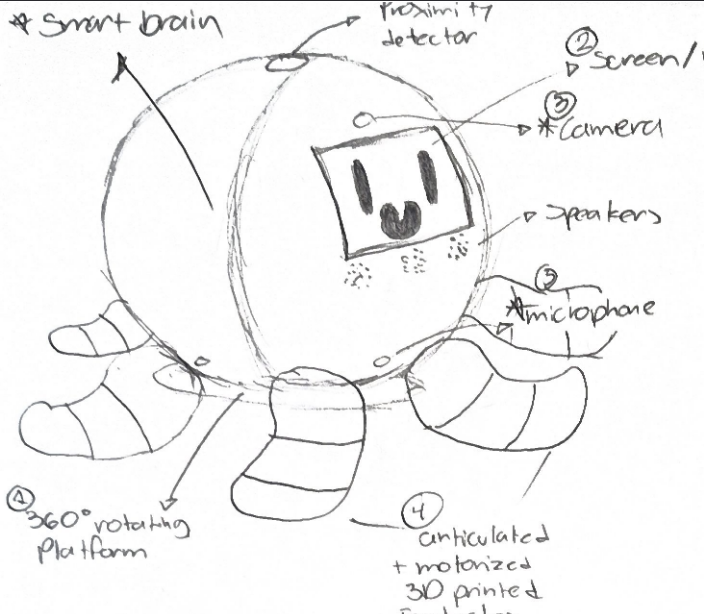

Its Casing

When making the first draft of the project on paper, I was trying to draw a head but ended up with a balloon-like shape. For that reason, I transformed my robot head into a robot octopus. Fun fact: the octopus is the representative animal of the degree. Why? I actually don't know, but it's something everyone knows. So, in a way, this robot octopus will be a "pet" for Rafa's Cave, which is why we will give it some play functionality.

Back on topic: the casing will be globe-shaped. The exact size is yet to be defined, as it depends heavily on the amount of hardware that will go into it, especially the robot's brain(s) and face (more on that later). The casing will need some sort of frame; what type is yet to be decided. If possible, the plan is to give it some sort of "skin" texture. This texture might be fur, to give it a plushy vibe.

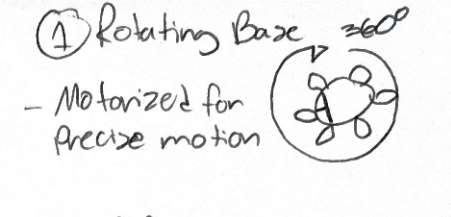

Its Movement

As this robo-octopus will sit on Rafa's desk, I'm planning on giving it a 360° rotating base. This is the main movement mechanism of the robot, and it will allow us to give it interesting functionality (more on that later). In addition, I'm planning on giving the octopus some 3D-printed articulated tentacles. These tentacles will sway on their own as the base moves, and we can add some servomotors at their base to lift them upwards, which will come in handy for giving the robot life (more on that later).

Its Sensors and Vision

The robot will have 3 main input devices for triggering specific actions:

The first one will be microphones, arranged in an array along the base of the casing. The main function of the microphone array is to receive voice commands from students and Rafa, but there is something else planned for these sensors (more on that later).

The second one will be a camera module. This camera module will allow us to do some person tracking.

The third one will be a proximity sensor placed on top of the robot's head, which will help us trigger some play functionality.

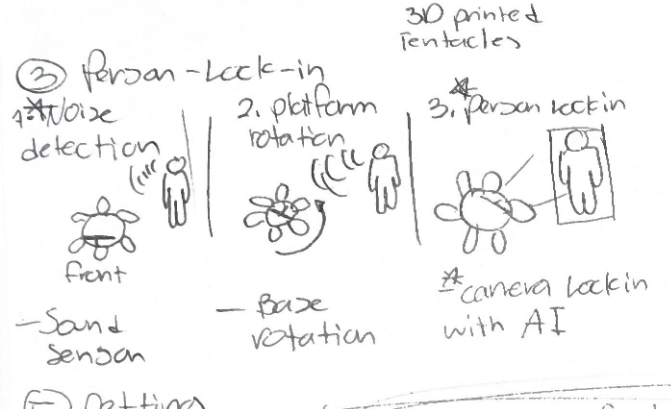

Person Locking

The idea is to use the microphones and camera module (a XIAO ESP32S3 Sense at the time of planning) to trigger a person locking mechanism. This mechanism consists of 3 main steps:

- Noise direction detection: The array of microphones can be used to pinpoint the general direction the noise (in this case the voice command) is coming from.

- Base rotation: This will trigger the base movement mechanism to spin towards the person talking.

- Person locking: Using the XIAO ESP32S3 Sense with a condensed person recognition ML model (specifics on this are yet to be planned), the robot's movement will be locked to the first person detected once the robot has rotated to the general direction the sound came from. It is important for the steps to occur in this exact order, to ensure (as much as possible) that the person being locked onto is the one who triggered the command.
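
The three steps above can be sketched in Python as follows. This is a rough concept under stated assumptions, not the planned implementation: it assumes the microphones are evenly spaced around the base, it estimates direction from loudness alone (a simpler technique than comparing arrival times), and `detect_person` is a placeholder standing in for the camera model. All function names are made up for illustration.

```python
import math


def estimate_bearing(levels):
    """Estimate the sound direction in degrees (0 to 360) from microphone loudness.

    levels[i] is the loudness measured by microphone i. The microphones are
    assumed to be evenly spaced around the base, with microphone 0 at 0 degrees.
    Each reading is treated as a vector pointing at its microphone, and the
    direction of the vector sum is returned.
    """
    n = len(levels)
    x = sum(level * math.cos(2 * math.pi * i / n) for i, level in enumerate(levels))
    y = sum(level * math.sin(2 * math.pi * i / n) for i, level in enumerate(levels))
    return math.degrees(math.atan2(y, x)) % 360


def shortest_turn(current_deg, target_deg):
    """Signed rotation in degrees (from -180 up to 180) that reaches target_deg."""
    return (target_deg - current_deg + 180) % 360 - 180


def lock_on(levels, current_deg, detect_person):
    """Run the three steps in order: listen, rotate, then look for a person.

    detect_person is a placeholder callable for the camera model; it returns
    True when a person is in frame. It is only called after the new heading
    has been computed, mirroring the required order of the steps.
    """
    target = estimate_bearing(levels)            # step 1: noise direction
    turn = shortest_turn(current_deg, target)    # step 2: base rotation
    new_heading = (current_deg + turn) % 360
    locked = detect_person()                     # step 3: person locking
    return new_heading, locked
```

Taking the shortest signed turn means the base never spins the long way around, for example turning 20° instead of 340° when moving from 350° to 10°.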

Why XIAO ESP32S3 Sense?

This camera module may not have the best camera quality, but we don't need quality. The XIAO ESP32S3 Sense is one of the most capable camera modules for its size, which will allow us to integrate it seamlessly into the casing. Plus, the XIAO has been shown to work well with person detection ML models.

Its Life

It is important to give our robo-octopus life-like functionalities. These functionalities will come in the form of reactions to voice commands.

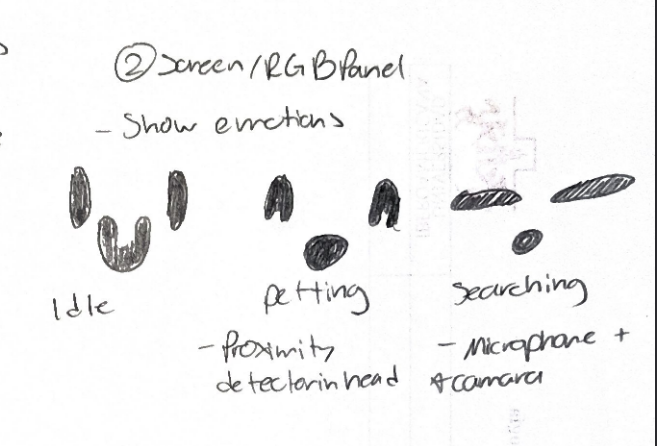

The facial expressions

We are planning on using a screen or RGB panel to give the robot a cute face. This face could be just eyes or both eyes and mouth; this decision will be made in the future. The panel will represent "emotions" linked to certain voice commands. These emotions include:

- Happy face when greeted or petted

- Idle face pre/post command

- Sleeping face triggered after inactivity
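
As a rough illustration of how these faces could be switched, here is a small state machine in Python. It is only a sketch: the event names `"greet"` and `"pet"`, the `Face` class, and the 60-second inactivity timeout are assumptions, since none of these details have been decided yet.

```python
IDLE, HAPPY, SLEEPING = "idle", "happy", "sleeping"
SLEEP_AFTER_S = 60  # assumed inactivity timeout, in seconds


class Face:
    """Tracks which face the screen or RGB panel should show."""

    def __init__(self, now=0.0):
        self.state = IDLE
        self.last_activity = now

    def on_event(self, event, now):
        """Greeting or petting shows the happy face; any other event wakes to idle."""
        self.last_activity = now
        self.state = HAPPY if event in ("greet", "pet") else IDLE

    def finish_reaction(self, now):
        """Go back to the idle face once a command or reaction is over."""
        self.last_activity = now
        self.state = IDLE

    def tick(self, now):
        """Call periodically; switches to the sleeping face after inactivity."""
        if now - self.last_activity >= SLEEP_AFTER_S:
            self.state = SLEEPING
        return self.state
```

Passing the current time in as `now` keeps the sketch independent of any particular clock, so the same logic could be driven by a real-time clock or a loop counter.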

Simulating emotion

Facial expressions are just one half of the emotions. Both the tentacles and the rotating base can be used to further enhance these play features. Take the petting functionality as an example: when a hand is placed on the octopus's head (simulating a pet), the proximity sensor will trigger the "pet" emotion. The "pet" emotion will change the face on the screen/RGB panel to a cheerful face and will trigger the tentacles' servomotors to move up and down in a "dancing" sequence. Once the hand is out of range of the proximity sensor, the emotion will stop.
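
The petting example could look something like the Python sketch below. The distance threshold and the servo angles are placeholder values chosen for illustration, and `pet_reaction` is a hypothetical helper, not an existing library function.

```python
PET_RANGE_CM = 5       # assumed: a hand closer than this counts as a pet
TENTACLE_DOWN_DEG = 0  # assumed servo angles for the "dancing" sequence
TENTACLE_UP_DEG = 45


def pet_reaction(distance_cm, step):
    """Return (face, servo_angle) for one pass of the control loop.

    distance_cm is the proximity sensor reading. step is a loop counter used
    to alternate the tentacles up and down while the hand stays in range.
    """
    if distance_cm < PET_RANGE_CM:
        angle = TENTACLE_UP_DEG if step % 2 == 0 else TENTACLE_DOWN_DEG
        return "happy", angle
    # Hand out of range: the emotion stops and the tentacles rest.
    return "idle", TENTACLE_DOWN_DEG
```

Because the function only looks at the current reading, the emotion stops on the first loop pass after the hand leaves the sensor's range.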

Sounds

The robot must use some sort of voice module to give feedback when receiving a command and to play back the voicemail messages left by students. If possible, sound can also be tied to the emotion triggers to fully give life to our beloved class pet.

Its Brain

Here's the most important part of the project, and sadly the least developed one at the time of writing. The first option would be to use an ESP32 for the "Alexa-like" functionalities. The choice of this controller was inspired by this GitHub repo that teaches how to make a DIY Alexa device with an ESP32 and Wit.AI.

Still, the ESP32 cannot handle both the voice commands and the person recognition functionalities. It cannot even handle the person recognition on its own. So a dual-brain setup is proposed, using the ESP32 to handle voice commands and something else, yet to be determined, for the person detection with the XIAO ESP32S3 Sense.

Another suggested brain is the NVIDIA Jetson Nano, as it is powerful enough to handle person recognition and tracking, the virtual assistant functionalities, and any other motion control needed. If allowed, this would be my brain of choice.

The plan

Most of the skills needed to develop this project must be learned somewhere. If only there was an international "hands-on learning experience where students learn rapid-prototyping by planning and executing a new project each week, resulting in a personal portfolio of technical skills."...

Updates on this project will be made as I progress in my FAB Academy 26 journey. With every new skill gained in any of the weeks, I'll be closer to completing my project. In the end, everything written up to this point is just a basic concept. Experience will make clear whether anything planned here needs change, adjustment or reworking, or whether it's just right.

January 22nd