My Final Project: 3-Axis CNC Pen Plotter

This project is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License (CC BY-NC 4.0). This means you are free to share and adapt the work, as long as you give appropriate credit. Do not use it for commercial purposes. Indicate if changes were made. For any reuse or distribution, you must make the license terms clear to others.

Slide (wip)

Video (wip)

Final Project Overview

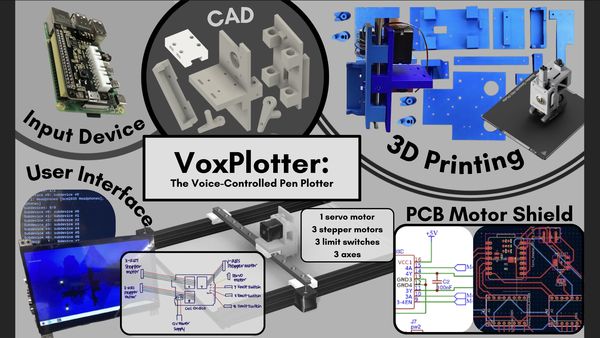

My project, the VoxPlot, is a 3-axis voice-activated pen plotter that executes curated, prewritten G-code drawings based on spoken prompts. A ReSpeaker 2-Mics Pi HAT connected to a Raspberry Pi captures voice input, which is transcribed using speech-to-text software. The transcribed text is processed locally to identify a matching G-code file from a library of pre-generated drawings. These G-code files are created in advance using tools such as Inkscape with G-code extensions, so each drawing can be checked before it is ever plotted. Once selected, the G-code is transmitted over a serial connection to a GRBL-compatible controller, which drives the X, Y, and Z axes of the machine. A touchscreen interface attached to the Raspberry Pi allows for system control, status monitoring, and manual input.

Model

System Diagram

Bill of Materials

Project Schedule

| Week | Dates | Goals/Tasks |
|---|---|---|
| Week 1 | April 28 – May 4 | Get Speech-to-Text + Touchscreen Working - Set up ReSpeaker 2-Mics Pi HAT - Install/test speech-to-text software (Vosk, Google STT, or similar) - Confirm Raspberry Pi can capture voice and convert it to text |
| Week 2 | May 5 – May 11 | Connect Speech-to-AI - Set up OpenAI ChatGPT API access (or local AI model) - Send recognized text to ChatGPT - Get a text response from ChatGPT - Display AI response on Pi (command line or touchscreen) |
| Week 3 | May 12 – May 18 | Machine Construction - Assemble the 3-axis pen plotter - Install motors, belts, lead screws, or rails - Mount the pen holder - Wire up the stepper motors, servo motors, limit switches - Test manual motor movement (basic electronics check) |
| Week 4 | May 19 – May 25 | G-code Generation Basic - Write a simple Python script that takes AI output and generates basic G-code commands (e.g., square, circle) - Test manually entering sample commands and getting G-code - Start defining simple drawing "templates" |
| Week 5 | May 26 – May 31 | Machine Movement - Set up GRBL communication (pySerial or UGS) - Test sending basic G-code to pen plotter (move X, Y, Z manually) - Connect the full chain: voice → AI → G-code → movement (basic test!) - Start troubleshooting any mechanical or wiring issues |
| Week 6 | June 1 – June 7 | Full System Integration + Polish - Full system test: voice → AI → G-code → machine drawing - Finish touchscreen UI (edit/send command) - Final video/photo documentation - Finish website and final project presentation |

This is a sketch of my idea:

Deeper Overview

Input: Audio Capture + Transcription (Whisper)

The user speaks a command such as “Draw a flower” into the onboard microphones on the ReSpeaker 2-Mics Pi HAT. The ReSpeaker captures the audio and passes it to the Raspberry Pi, which runs Whisper (or a similar speech-to-text engine) to transcribe it into text. The transcription is saved or passed directly to the next step.
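The capture-and-transcribe step could be sketched roughly as follows. This assumes the ALSA `arecord` tool and the `openai-whisper` Python package are installed on the Pi; the device name `plughw:1,0`, the file path, the clip length, and the `base` model size are illustrative assumptions rather than the project's confirmed settings.

```python
import subprocess

# Assumed ALSA device for the ReSpeaker HAT; `arecord -l` lists the real card number.
MIC_DEVICE = "plughw:1,0"


def build_record_command(wav_path, seconds=4, device=MIC_DEVICE):
    """Return the arecord command that captures a short clip to wav_path."""
    return [
        "arecord",
        "-D", device,        # capture device
        "-f", "S16_LE",      # 16-bit little-endian samples
        "-r", "16000",       # 16 kHz, the rate Whisper works at internally
        "-c", "2",           # both microphones on the HAT
        "-d", str(seconds),  # stop recording after this many seconds
        wav_path,
    ]


def record_and_transcribe(wav_path="/tmp/prompt.wav", seconds=4):
    """Record a clip, then return Whisper's transcription as plain text."""
    import whisper  # imported lazily so the helper above works without it

    subprocess.run(build_record_command(wav_path, seconds), check=True)
    model = whisper.load_model("base")  # assumed model size
    result = model.transcribe(wav_path)
    return result["text"].strip()
```

Loading the model once at startup instead of on every call would likely be kinder to the Pi, since model loading is slow compared with transcribing a few seconds of audio.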

Processing: Prompt Matching to Prewritten G-code

The transcribed prompt is processed by a Python script that matches the text to a predefined list of supported commands. Each supported prompt corresponds to a curated G-code file that was created in advance using software like Inkscape with G-code extensions. Once matched, the appropriate G-code file is retrieved and prepared for sending.
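A minimal sketch of the matching step, assuming a small keyword-to-file dictionary. The keywords, file paths, and the fuzzy-matching cutoff are hypothetical; the fuzzy fallback (via the standard-library `difflib`) is one possible way to tolerate small transcription errors, not necessarily what the final script does.

```python
import difflib
import re

# Hypothetical library: spoken keyword -> G-code file stored on the Pi.
GCODE_LIBRARY = {
    "flower": "gcode/flower.gcode",
    "star": "gcode/star.gcode",
    "house": "gcode/house.gcode",
}


def match_prompt(transcript, library=GCODE_LIBRARY, cutoff=0.8):
    """Return the G-code path for the first recognized keyword, or None."""
    words = re.findall(r"[a-z]+", transcript.lower())
    # Exact keyword hits take priority.
    for word in words:
        if word in library:
            return library[word]
    # Otherwise accept a near miss such as "flowr" for "flower".
    for word in words:
        close = difflib.get_close_matches(word, list(library), n=1, cutoff=cutoff)
        if close:
            return library[close[0]]
    return None
```

For example, `match_prompt("Draw a flower.")` returns the flower file, while an unsupported request returns `None` so the interface can ask the user to try again.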

Output: G-code Sending to GRBL

This step handles communication with a GRBL-compatible controller over USB serial. The Raspberry Pi uses pySerial to open a serial connection to an Arduino Uno that runs GRBL firmware and is fitted with a CNC shield. A script reads the selected G-code file line by line and sends it over the serial connection. GRBL interprets the motion commands and drives the stepper motors accordingly.
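The line-by-line sending described above can be sketched like this. It uses the simple send-and-wait approach (send one line, wait for GRBL's `ok`), which is an assumption about how the script works. The serial device `/dev/ttyACM0` and the function names are hypothetical; 115200 is GRBL's default baud rate.

```python
import re


def clean_gcode_line(raw):
    """Drop ';' and '(...)' comments plus surrounding whitespace."""
    line = raw.split(";", 1)[0]
    line = re.sub(r"\(.*?\)", "", line)
    return line.strip()


def stream_gcode(lines, port, max_idle_reads=30):
    """Send G-code one line at a time, waiting for GRBL's reply after each.

    `port` is any object with write() and readline(), such as serial.Serial.
    Returns the number of lines sent; raises RuntimeError if GRBL reports
    an error or alarm, and TimeoutError if GRBL stops answering.
    """
    sent = 0
    for raw in lines:
        line = clean_gcode_line(raw)
        if not line:
            continue  # skip blank and comment-only lines
        port.write((line + "\n").encode("ascii"))
        idle = 0
        while True:
            reply = port.readline().decode("ascii", errors="replace").strip()
            if reply == "ok":
                break
            if reply.startswith(("error", "ALARM")):
                raise RuntimeError(f"GRBL rejected {line!r}: {reply}")
            if not reply:
                idle += 1  # readline() timed out with nothing received
                if idle >= max_idle_reads:
                    raise TimeoutError(f"no reply from GRBL after {line!r}")
        sent += 1
    return sent


def send_file(path, device="/dev/ttyACM0", baud=115200):
    """Open the serial port (assumed device name) and stream one file."""
    import time
    import serial  # pySerial, imported lazily so stream_gcode can be tested alone

    with serial.Serial(device, baud, timeout=2) as grbl:
        grbl.write(b"\r\n\r\n")    # wake GRBL
        time.sleep(2)              # give it time to print its startup banner
        grbl.reset_input_buffer()  # discard the banner
        with open(path) as gcode_file:
            return stream_gcode(gcode_file, grbl)
```

Because `stream_gcode` only needs `write()` and `readline()`, it can be exercised with a fake port before the real machine is connected.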

Interface: Touchscreen Control

A local Python UI on the 7” Raspberry Pi touchscreen shows the transcribed prompt. The user can re-record, retry, or start the drawing. The UI may also visualize the selected G-code file before sending. Ideally, it displays plot status (e.g., "Drawing...", "Idle") to monitor machine activity. At minimum, it confirms whether the correct prompt was recognized before proceeding.
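One way to obtain the plot status is to send GRBL the real-time `?` command and parse the status report it returns. The sketch below assumes the GRBL 1.1 report format (fields separated by `|`); the on-screen labels and the function name are illustrative.

```python
# Map GRBL machine states to short labels for the touchscreen.
# The state names come from GRBL 1.1 status reports; the labels are made up here.
STATE_LABELS = {
    "Idle": "Idle",
    "Run": "Drawing...",
    "Hold": "Paused",
    "Home": "Homing...",
    "Alarm": "Alarm: check limit switches",
}


def parse_status(report):
    """Parse a report such as '<Run|MPos:1.000,2.000,0.000|FS:500,0>'.

    Returns (label, position), where position is an (x, y, z) tuple of floats,
    or None if the report carries no MPos or WPos field.
    """
    body = report.strip()
    if not (body.startswith("<") and body.endswith(">")):
        raise ValueError(f"not a status report: {report!r}")
    fields = body[1:-1].split("|")
    state = fields[0].split(":")[0]  # 'Hold:0' -> 'Hold'
    position = None
    for field in fields[1:]:
        key, _, value = field.partition(":")
        if key in ("MPos", "WPos"):
            position = tuple(float(v) for v in value.split(","))
    return STATE_LABELS.get(state, state), position
```

Unknown states fall through unchanged, so the UI still shows something meaningful if GRBL reports a state that is not in the table.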

Touchscreen - Raspberry Pi 4

When I purchased the 7 Inch HDMI Touchscreen LCD Display, it came with instructions on how to connect it to a Raspberry Pi. Even so, because it was my first time working with a Raspberry Pi, I was still confused. When I searched for tutorials online, many of them assumed a base level of knowledge I didn't have.

Figuring out where each of the three connectors went was not the hardest part; installing the driver was. I ended up following this tutorial to power it on, even though the setup it showed was different from mine.

After that, I inserted the microSD card with the driver, powered the Pi on, and reached the landing page.

ReSpeaker 2-Mics Pi HAT - Raspberry Pi 4

The Mic HAT is installed on the GPIO header of the Raspberry Pi. I followed this tutorial for the microphone.

I was originally worried that this way of connecting the microphone would block the rest of the GPIO pins I would need for other aspects of my project, but I learned stacking headers would be a solution to that.

With it attached, I was able to get the Raspberry Pi to recognize my microphone by opening a terminal window on the touchscreen.

CNC Shield - Pen Plotter

GRBL-compatible CNC shield connections with X, Y, and Z axis stepper motors, servo motor, and X, Y, and Z limit switches.

[create a PCB]

Pen Plotter Mechanics

X-axis

Using a 12 inch 40x80mm Aluminum T-Slot Extrusion, I attached a stepper motor pulley system that will allow the motor to control the movement of the x-axis.

This shows how the V-slot wheels are connected so they can move along the aluminum extrusion. It also shows how the limit switch is pressed when the wheels reach the end of the rail.

This is the stepper belt and timing pulley.

This shows the belt end and the idler pulley.

Pen Lift

Below are the 3D-printed parts for the lift mechanism, printed out and assembled. The mechanism was inspired by How To Mechatronics' pen plotter.

The 3D-printed machine parts of the project are based on the DIY Machines tutorials. I downloaded the .stl files.

I had used Fusion360 for engineering class before, but I had never created a 3D model of a project/machine. I consulted ChatGPT with these prompts.

Based on what I learned, I went to Create → Insert Mesh and added all the .stl files I downloaded.

I also changed the material through Modify → Appearance and I changed it to matte black PLA.

Plotter Base

Instead of the adjustable design I had considered earlier, I decided to make the base more decorative. Inspired by Angelina's Pomo Desk, I wanted it to include LED lighting.

Plywood Structure

LED Lighting

After milling out my board, I soldered on resistors and headers:

I then connected it to the XIAO RP2040 by attaching it to the headers, and to the LED strip via jumper wires:

Frosted Acrylic Panels

Originally, I was going to use clear acrylic panels, but in the lab I also found frosted ones, which I felt would give my machine a better ambiance. I found one sheet that seemed large enough for the panels I wanted. To test how it would look, I held the sheet over the LEDs at a similar distance.

I really liked how it looked, so I made some rudimentary designs based on the rectangular holes I wanted my panels to go through. I tested the dimensions using cardboard first, because I did not have enough frosted acrylic to risk a mistake.

Wooden parts

The design I went with included some stacking. I had two large curved rectangles with holes in each corner. There were two supportive squares in the center where the LED strip will wrap around. Then, in each corner, there are quarter-spheres, also with holes in them for where they will be secured with the base and upper wooden plates.

I did some sample tests using cardboard on the laser cutter.

Since it ended up exactly as I imagined, I moved on to milling the pieces out on the ShopBot, and this was the finished cut.

I found knobs in the lab to use as well as a screw with a flat round end. The knob allowed me to screw it to the tightness I wanted.

This was a shot midway through assembling.

This was the final result of the wooden base with all the frosted panels attached:

System Integration

Subsystem Interfaces

Below is a flow chart for reference. This was made using Lucidchart.

Input: Microphone → Speech Recognition (Whisper)

The ReSpeaker 2-Mics Pi HAT connects through the GPIO pins on the Raspberry Pi and is powered through the same GPIO header. It takes in raw audio for transcription by Whisper running on the Pi.

Processing: Whisper → Prompt → Prewritten G-code Selection

The transcribed command is interpreted by a local Python script. The system selects a matching G-code file from a curated library of prewritten commands. These files are handcrafted or created with Inkscape and stored locally on the Pi.
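Since the files are stored locally, the library can be built by scanning a folder instead of hard-coding each entry. This is a rough sketch that assumes a naming convention (one `.gcode` file per drawing, named after its keyword, with underscores for spaces); the folder layout and the function name are hypothetical.

```python
from pathlib import Path


def load_gcode_library(folder):
    """Map each drawing name to its file, e.g. 'smiley face' -> Smiley_Face.gcode.

    The name is taken from the file name: lowercased, extension removed,
    underscores turned into spaces. Files without a .gcode extension are ignored.
    """
    library = {}
    for path in sorted(Path(folder).glob("*.gcode")):
        name = path.stem.lower().replace("_", " ")
        library[name] = path
    return library
```

With this convention, adding a new supported prompt only requires dropping a new file into the folder.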

Output: G-code → GRBL Plotter

The Raspberry Pi communicates with an Arduino Uno over USB. The Uno runs GRBL firmware and is mounted with a CNC shield. The Pi sends G-code over the serial port using pySerial. The CNC shield is powered by a 12V supply, while the Arduino is powered via USB from the Pi. GRBL interprets the incoming G-code and actuates the motors for X, Y, and Z movement.

Interface: Touchscreen UI

The 7” Raspberry Pi touchscreen connects via DSI ribbon cable and is powered with 5V DC. The interface enables the user to confirm the recognized prompt, control plot start/stop, and monitor plot status.

Methods of Packaging

For my Raspberry Pi 4, I will use a case like this one.

For cable management, once I wire the motors and the Raspberry Pi, I will use a braided cable sleeve or something similar to keep the cables neatly secured. The motors will be mounted on the pen plotter with custom 3D-printed parts, the microphone attaches to the Raspberry Pi on its GPIO pins, the touchscreen has its own stand that could be attached to the pen plotter, and the pen is attached to its lifting mechanism with 3D-printed parts as well. Since most of the plotter, apart from purchased components, will be 3D-printed, I will make sure the prints are of high quality. And since the cable management will be custom designed, I will include cutouts so that ports stay accessible.

Question Responses

What does it do?

It is a 3-axis voice-activated pen plotter that executes curated, prewritten G-code drawings based on spoken prompts.

Who’s done what beforehand?

My project is inspired by Jack Hollingsworth's Ouija Board, which uses ChatGPT to generate responses and control stepper motors to physically "move" a planchette. I learned about this through the Ouija board group project from last year’s Fab Academy cycle. Both projects demonstrated how artificial intelligence could be used for real-world motion control through G-code to command stepper motors. I was especially drawn to the voice-activated aspect, which made the interaction feel more natural and autonomous. I wanted to explore that further in a visual way.

What did you design?

I designed the 3D components such as the pen lift mechanism, the carriage that holds the pen, and the motor mounts and attachments for the linear rail. I also designed the cable management system, the housing for the Raspberry Pi and touchscreen, and the base to stabilize my machine. Additionally, I designed a custom PCB to manage motor control and driving tasks.

What sources did you use?

I used some tutorials found online, like this one for the ReSpeaker microphone and this one for the Raspberry Pi, as well as How To Mechatronics' pen plotter for structural inspiration. I also conversed with ChatGPT for troubleshooting and advice at certain stages.

What materials and components were used? Where did they come from? How much did they cost?

This is my bill of materials that lists out all the components I used and answers the above questions.

What parts and systems were made?

3D printed parts will be fabricated in-house and the custom PCB will be designed and milled using lab equipment. The ShopBot is also used to create the wooden base of my machine, to give it more stability.

What processes were used?

- CAD for designing 3D parts and the custom PCB.

- 3D Printing for fabricating custom mechanical components like the pen lift mechanism, motor mounts, and structural brackets.

- CNC Milling to manufacture the custom PCB and the milled base.

- Soldering for assembling the custom PCB and making reliable electrical connections between components.

- Laser and maybe vinyl cutting for aesthetic base structural parts and enclosures.

- Embedded Programming to configure the GRBL firmware on the Arduino and interface with the custom motor driver PCB.

- Python Scripting to build the touchscreen interface, handle audio transcription (Whisper), interact with the ChatGPT API, and send G-code to GRBL.

What questions were answered?

- Where does the custom PCB best fit in the system? Should it act as a signal breakout board, or handle logic beyond what the CNC shield provides?

I initially considered using a custom PCB as a simple signal breakout board, but I ultimately decided to design a PCB that functioned more like a motor driver.

- How reliable is Whisper’s transcription in real-world (noisy) environments and will it be consistent in general?

[N/A]

- How robust is ChatGPT’s G-code generation? What can I do so that it doesn’t output invalid toolpaths?

I learned from Neil, when he reviewed my project, that it is not robust enough for what I wanted to do.

- Should Inkscape be used as an intermediary step between AI and G-code output? Would it be better to have a secondary option where ChatGPT generates an SVG that’s then processed into G-code using Inkscape?

Adding onto the previous question, yes, it would be good as an intermediary. That would be a level above pre-written G-code as it is more automated and AI-intensive.

- What is the best way to display and preview G-code on the Raspberry Pi touchscreen? How would I visualize it?

[N/A]

- What fail-safes or feedback systems are needed (limit switches or emergency stop)?

As of now, I have added limit switches. I think I will connect an emergency stop alongside them if given the time.
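Alongside the physical switches, GRBL accepts single-byte real-time commands that a touchscreen "Pause" or "Stop" button could send. The sketch below uses GRBL's feed hold (`!`), cycle resume (`~`), and soft reset (Ctrl-X, byte `0x18`); the function names are hypothetical, and `port` is assumed to be an open pySerial connection or anything else with a `write()` method. A software stop like this complements a hardware emergency stop that cuts motor power; it does not replace one.

```python
FEED_HOLD = b"!"      # decelerate to a controlled stop, keeping position
CYCLE_RESUME = b"~"   # continue after a feed hold
SOFT_RESET = b"\x18"  # Ctrl-X: halt and reset GRBL


def pause_plot(port):
    """Pause the drawing; motion slows to a stop and can be resumed."""
    port.write(FEED_HOLD)


def resume_plot(port):
    """Resume a drawing that was paused with pause_plot()."""
    port.write(CYCLE_RESUME)


def abort_plot(port):
    """Cancel the drawing outright.

    A reset during motion can leave GRBL in an alarm state with position
    lost, so the machine may need to be re-homed afterwards.
    """
    port.write(SOFT_RESET)
```

Real-time commands are picked up as soon as GRBL receives them, without a trailing newline, which is why each function writes a single byte.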

What worked? What didn’t?

My original idea of having AI generate G-code directly was not really feasible. Mr. Nelson suggested that I could have AI generate an image and then run that image through G-code generation software. After the meeting, Mr. Dubick also told me I could pre-code certain images that the machine is known to draw properly. Then, I'd just voice the command "Draw me a ___" with one of the pre-developed images. This would make it more reliable.

How was it evaluated?

This project was evaluated based on the accuracy of the voice recognition, mechanical performance, user interface usability, system integration/stability/quality, safety, and overall user experience.

What are the implications?

I think this project bridges a gap between human intention and machine action. It opens up possibilities for more intuitive human-machine collaboration, especially as AI technology advances. Driving a machine by voice alone could make digital fabrication more accessible, although my project is only an early version of what voice-to-machine output could become.

Additionally, the modular structure of the system (voice input, Raspberry Pi processing, motor control) makes it extensible beyond drawing. With modifications, it could evolve into a general-purpose voice-driven fabrication platform for laser cutting, CNC milling, or even robotic interaction.