WILD CARD WEEK: ARTIFICIAL INTELLIGENCE IN EMOTION RECOGNITION

This week, we had the freedom to choose a topic that was not covered in the assignments and implement it. A branch of programming that interests me is artificial intelligence. The ability to build a model and train it so that it can carry out a task automatically and with increasing precision is truly impressive, and there are many applications in this field.

While studying biomedical engineering, I have realized that there are many applications of artificial intelligence in this discipline, such as monitoring the biomechanics of a person's gait or classifying tumors as malignant or benign. In this case, I want to write a Python program to train an emotion recognition model. I consider recognizing three emotions to be sufficient: Happy, Neutral, and Scared.

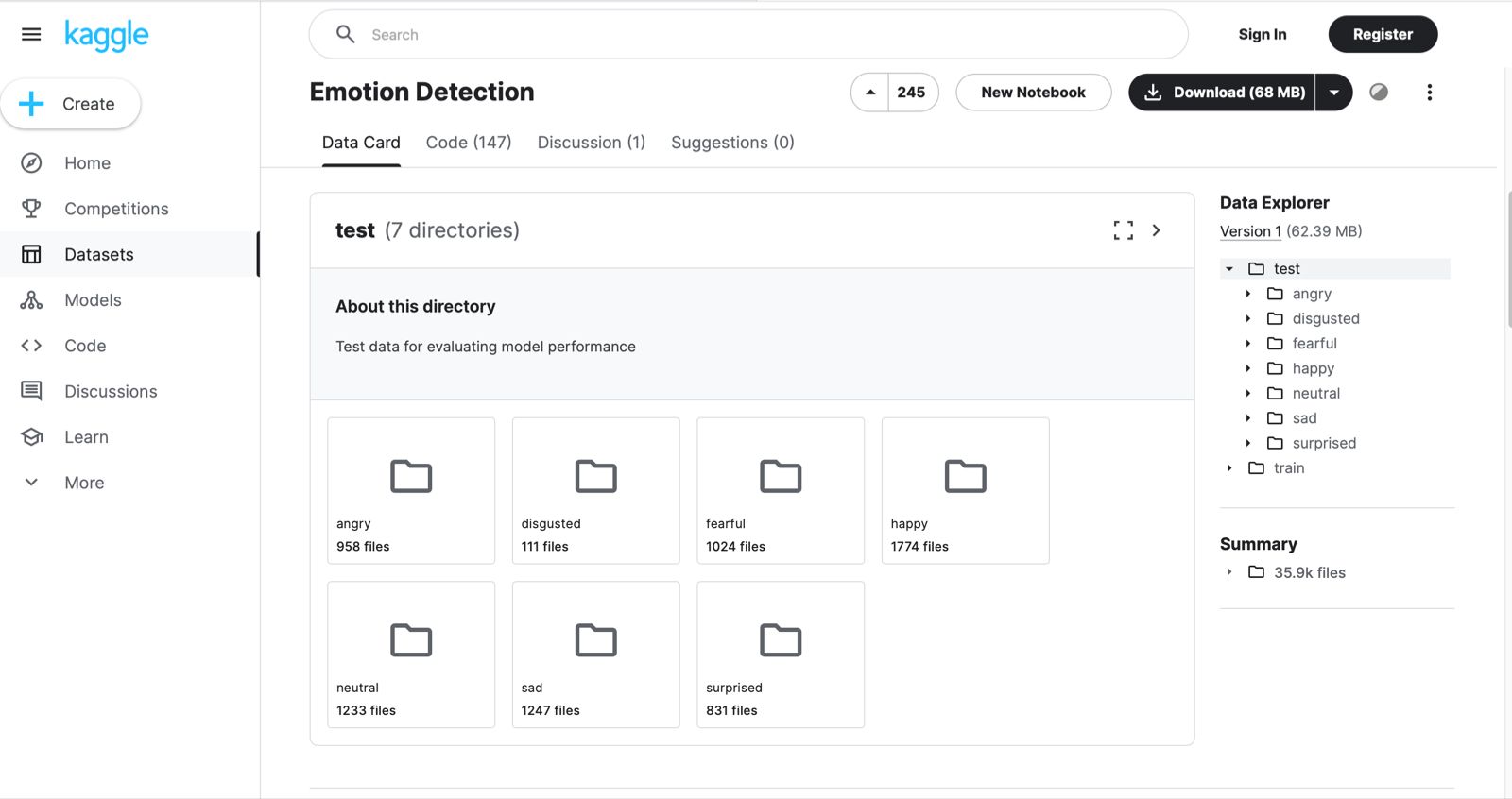

Data Set

To train our neural network model, we need a dataset of face images. In this case, we downloaded it from the website kaggle.com.

We added the dataset to our project folder, divided into two parts: Train and Test. The Train part is used to fit the model and the Test part to evaluate it, as described in the stages below.

Programming

Training Model

This AI model is designed to recognize emotions in images of human faces. In this case, it focuses on three specific emotions: fear, neutral, and happy. The idea is that the model can analyze an image of a face and determine which of these three emotions is present.

How does it work?

The functioning of the model can be divided into several stages:

Data Loading:

First, we load a dataset of images representing the emotions of fear, neutral, and happy. These images are organized into folders according to the emotion they represent and are used for both training and evaluating the model. Each image is labeled with the corresponding emotion.
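The folder-per-emotion layout described above could be read along these lines. This is only a minimal sketch: the class folder names (`fear`, `happy`, `neutral`), the accepted file extensions, and the helper name `list_labeled_images` are assumptions for illustration, not the names used in the actual dataset or program.

```python
from pathlib import Path

# Assumed class folder names; the real dataset may name them differently.
CLASSES = ["fear", "happy", "neutral"]
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_labeled_images(root, classes=CLASSES):
    """Return (path, label_index) pairs for a folder-per-class directory tree."""
    samples = []
    for label_index, class_name in enumerate(classes):
        class_dir = Path(root) / class_name
        if not class_dir.is_dir():
            continue  # skip classes that are missing from this split
        for path in sorted(class_dir.iterdir()):
            if path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label_index))
    return samples
```

Calling `list_labeled_images("Train")` and `list_labeled_images("Test")` would then give one labeled list per split, with the label taken from the folder each image sits in.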

Image Preprocessing:

The images are resized to a uniform size of 48x48 pixels and normalized so that the pixel values are in the range [0, 1]. This facilitates the processing and learning of the model.
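As a rough illustration of this step, the sketch below resizes an image to 48x48 and scales its pixels to [0, 1] using only NumPy. It assumes a single-channel (grayscale) 8-bit image and uses a simple nearest-neighbour resize as a stand-in; the actual program may rely on an image library with a different interpolation method.

```python
import numpy as np

TARGET_SIZE = 48  # side length stated in the text


def preprocess(image, size=TARGET_SIZE):
    """Resize a 2-D uint8 grayscale image to size x size and scale it to [0, 1]."""
    height, width = image.shape
    # Nearest-neighbour resize: pick one source row/column per target pixel.
    rows = np.arange(size) * height // size
    cols = np.arange(size) * width // size
    resized = image[rows[:, None], cols]
    # Dividing by 255 maps the 8-bit range 0..255 onto 0.0..1.0.
    return resized.astype(np.float32) / 255.0
```

Keeping every input the same shape and in the same numeric range means the network sees consistent data regardless of the original image resolution.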

Model Architecture:

The model is built using a convolutional neural network (CNN), which is especially effective for image processing. The architecture includes several convolutional and pooling layers to extract relevant features from the images, followed by dense layers to perform the final classification.
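A network of this kind might look like the following sketch. It assumes TensorFlow/Keras, grayscale 48x48 inputs, and layer sizes chosen purely for illustration (two convolution/pooling stages, a 128-unit dense layer); the function name `build_emotion_cnn` is hypothetical, and the actual model may use a different library, depth, or filter counts.

```python
import tensorflow as tf


def build_emotion_cnn(input_shape=(48, 48, 1), num_classes=3):
    """Small CNN: conv/pool feature extractor followed by a dense classifier."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        # Convolution + pooling stages extract local features and shrink the image.
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        # Dense layers turn the extracted features into class probabilities.
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # expects integer class labels
        metrics=["accuracy"],
    )
    return model
```

The final softmax layer has one output per emotion, so each prediction is a vector of three probabilities that sum to 1.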

Model Training:

Once the architecture is defined, the model is trained using the training images. During training, the model adjusts its internal parameters to minimize the error in predicting emotions.
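To make "adjusting internal parameters to minimize the error" concrete, here is a small sketch of gradient descent. It deliberately swaps the CNN for a much simpler linear softmax classifier trained on random toy data, so every name and number in it is an illustrative assumption; only the principle (repeatedly nudging the weights in the direction that lowers the loss) carries over.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, subtracting the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true class."""
    return float(-np.log(probs[np.arange(len(labels)), labels] + 1e-12).mean())


def train_step(weights, features, labels, learning_rate=0.1):
    """One gradient-descent update of a linear softmax classifier."""
    probs = softmax(features @ weights)
    one_hot = np.eye(weights.shape[1])[labels]
    gradient = features.T @ (probs - one_hot) / len(labels)
    return weights - learning_rate * gradient


rng = np.random.default_rng(0)
features = rng.normal(size=(60, 8))    # toy stand-in for image features
labels = rng.integers(0, 3, size=60)   # toy labels for three classes
weights = np.zeros((8, 3))

loss_before = cross_entropy(softmax(features @ weights), labels)
for _ in range(17):                    # 17 passes over the toy data
    weights = train_step(weights, features, labels)
loss_after = cross_entropy(softmax(features @ weights), labels)

print(loss_before, loss_after)         # the second value should be smaller
```

A real CNN has far more parameters and uses backpropagation to compute the gradients, but each training step follows the same pattern: predict, measure the error, update.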

Model Evaluation:

After training, the model is evaluated with the test images to determine its accuracy in classifying emotions.

Model Saving:

Finally, the trained model is saved for future use. This allows the model to be loaded and used to make predictions on new images without the need to retrain it.

Let's Run It

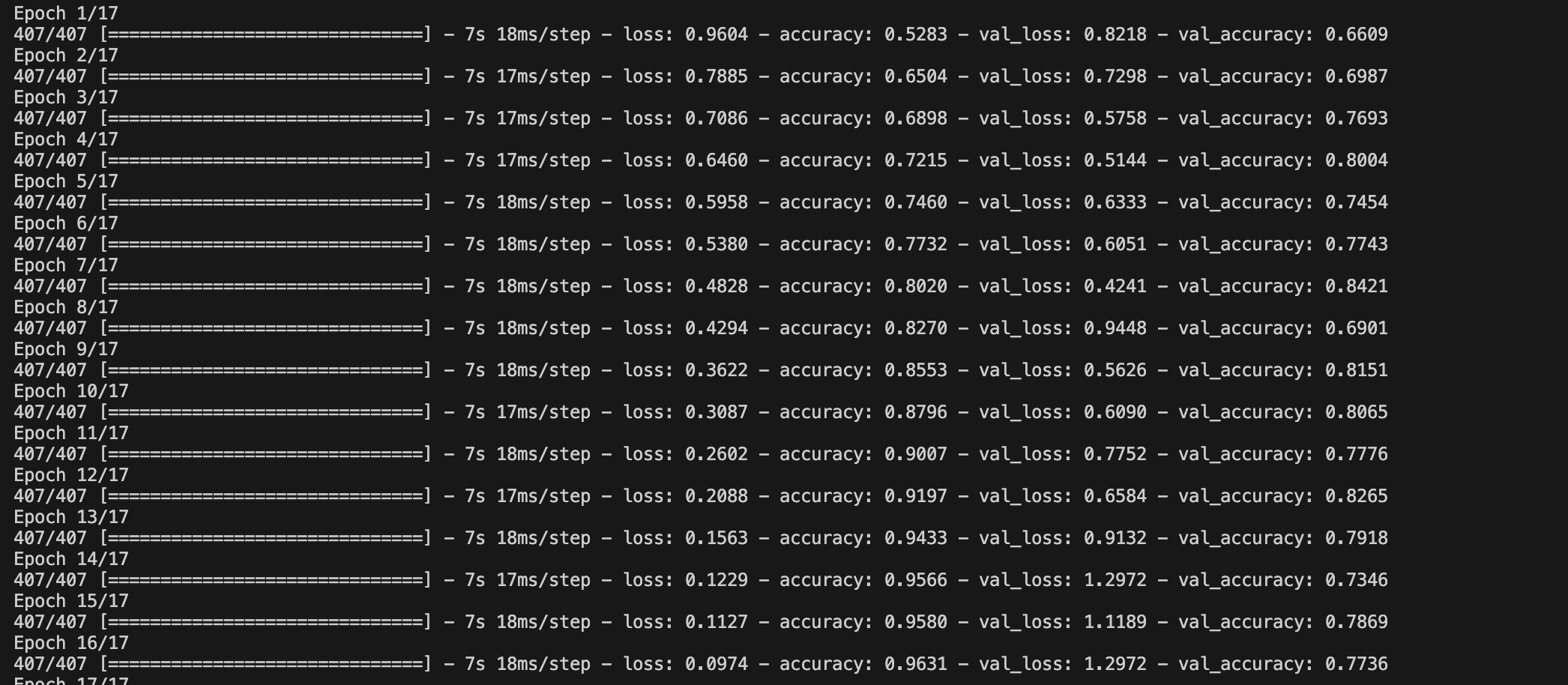

Once we run the code, training begins and runs for the 17 epochs we selected.

During training:

There are two important values at this point when running the program: loss and accuracy.

Loss: During training, the loss reached approximately 0.0803. This metric measures how far the model's predictions deviate from the true labels, so a lower loss means the predictions are closer to the labels.

Accuracy: The accuracy during training was around 0.9709, meaning the model correctly classified 97.09% of the images in the training set. This indicates how well the model is learning the distinctive features of emotions in the images.
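The two metrics can be computed by hand on a tiny example. The sketch below assumes the loss is categorical cross-entropy (a common choice for classification, but not confirmed by the text) and uses made-up probabilities for four images; the class order `fear, happy, neutral` is also an assumption.

```python
import numpy as np


def accuracy(probs, labels):
    """Fraction of samples whose highest-probability class is the true class."""
    return float((probs.argmax(axis=1) == labels).mean())


def cross_entropy_loss(probs, labels):
    """Mean negative log-probability of the true class."""
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(-np.log(true_class_probs).mean())


# Hypothetical model outputs for four images; columns: fear, happy, neutral.
probs = np.array([
    [0.90, 0.05, 0.05],
    [0.10, 0.80, 0.10],
    [0.20, 0.20, 0.60],
    [0.30, 0.10, 0.60],  # true class is fear, so this prediction is wrong
])
labels = np.array([0, 1, 2, 0])

print(accuracy(probs, labels))            # 0.75
print(cross_entropy_loss(probs, labels))
```

Accuracy only counts whether the top prediction is right, while the loss also penalizes low confidence in the correct class, which is why the two values do not always move together.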

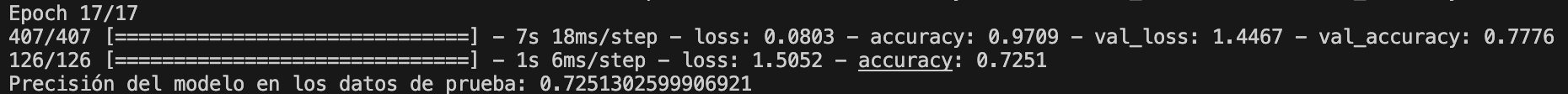

Training Results

Once training is complete, it is crucial to evaluate the model on independent test data to understand how well it generalizes to previously unseen images. During this evaluation, I calculate the same two metrics, loss and accuracy. Loss indicates how close the model's predictions are to the actual labels, while accuracy is the fraction of test images that are classified correctly. These results are critical for judging the effectiveness and reliability of the model in real-world scenarios, beyond the controlled training and validation environment.

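Test Loss: When evaluating the model with the independent test dataset, the loss was 1.5052. This metric indicates how well the model performs on new data that it did not see during training or validation. It is much higher than the training loss of about 0.0803, which suggests that the model has partly overfitted to the training images.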

Test Accuracy: The model's accuracy on the test data was 0.7251, meaning it correctly classified 72.51% of the images in the test set. This metric is crucial as it demonstrates the model's ability to generalize to new data outside of the training and validation sets.

Recognition Program

After saving my emotion detection model, I wrote a second program that uses it to identify and classify emotions in real time from the camera's video stream. I set up face detection using MediaPipe and load the previously trained emotion model. As the program captures video frames, it detects the faces present and crops each face from the frame. Then, it uses the model to classify the emotion of each face and draws both the detected emotion and a bounding box around each face on the video display. This real-time detection and classification makes it possible to evaluate the model's effectiveness in practical and dynamic situations.
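Two small pieces of such a loop can be sketched without a camera: cropping a detected face and turning the model's output into a label. The sketch assumes the face detector reports each box relative to the frame size (values between 0 and 1 for `xmin`, `ymin`, `width`, `height`, as I understand MediaPipe's face detection to do) and that the model's outputs are ordered `fear, happy, neutral`; the helper names are hypothetical.

```python
import numpy as np

EMOTIONS = ["fear", "happy", "neutral"]  # assumed order of the model's outputs


def crop_relative_box(frame, xmin, ymin, width, height):
    """Crop a box given in relative [0, 1] coordinates, clamped to the frame."""
    frame_h, frame_w = frame.shape[:2]
    x0 = max(int(xmin * frame_w), 0)
    y0 = max(int(ymin * frame_h), 0)
    x1 = min(int((xmin + width) * frame_w), frame_w)
    y1 = min(int((ymin + height) * frame_h), frame_h)
    return frame[y0:y1, x0:x1]


def label_from_probs(probs, emotions=EMOTIONS):
    """Map a vector of class probabilities to the most likely emotion name."""
    return emotions[int(np.argmax(probs))]
```

Clamping matters because a face near the edge of the frame can produce a box that extends past the image, which would otherwise give an empty or wrongly shaped crop. The cropped face would then go through the same 48x48 resizing and normalization used during training before being passed to the model.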

Let's try it

Developing and implementing this artificial intelligence model to recognize emotions in human faces has been a very interesting and educational experience for me. From loading and preprocessing the data, to building a convolutional neural network, to training and evaluating the model, I have created a tool that can classify emotions such as fear, neutrality, and happiness in real time.

However, during the evaluation with test data, I realized that the model has an accuracy of 72.51%. While this is not bad, I noticed that it sometimes confuses the feeling of fear with neutrality, which happens quite often. This suggests that there is room for improvement, especially in better differentiating between these two emotions. This experience has shown me the importance of continuing to refine the model and perhaps considering more distinctive features or better balancing the dataset to improve its accuracy and robustness in real-world situations.
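One way to quantify a confusion such as fear versus neutral is a confusion matrix, which counts how often each true emotion is predicted as each class. The sketch below is a minimal version with made-up labels; the numbers are for illustration only and are not results from this model, and the class order is an assumption.

```python
import numpy as np

EMOTIONS = ["fear", "happy", "neutral"]  # assumed class order


def confusion_matrix(true_labels, predicted_labels, num_classes=len(EMOTIONS)):
    """Count predictions: rows are true classes, columns are predicted classes."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[true, predicted] += 1
    return matrix


# Hypothetical labels for illustration only, not results from this model.
true_labels = [0, 0, 0, 1, 1, 2, 2, 2]
predicted_labels = [0, 2, 2, 1, 1, 2, 2, 0]
matrix = confusion_matrix(true_labels, predicted_labels)
print(matrix)
```

In this toy example the entry in row `fear`, column `neutral` is 2, meaning two fear images were labeled neutral. Running the same count on the real test set would show whether the errors are concentrated in that one pair of emotions or spread across all classes.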