Wildcard Week: PLA Origami (Because Why Be Sensible?)

Overview

This week I did what every responsible adult does when given access to expensive machines: I misused them creatively. The plan? Make PLA Origami — yes, folding a rigid plastic plate by deliberately scorching it with a laser. Quiet down, safety committee; it’s Wildcard Week.

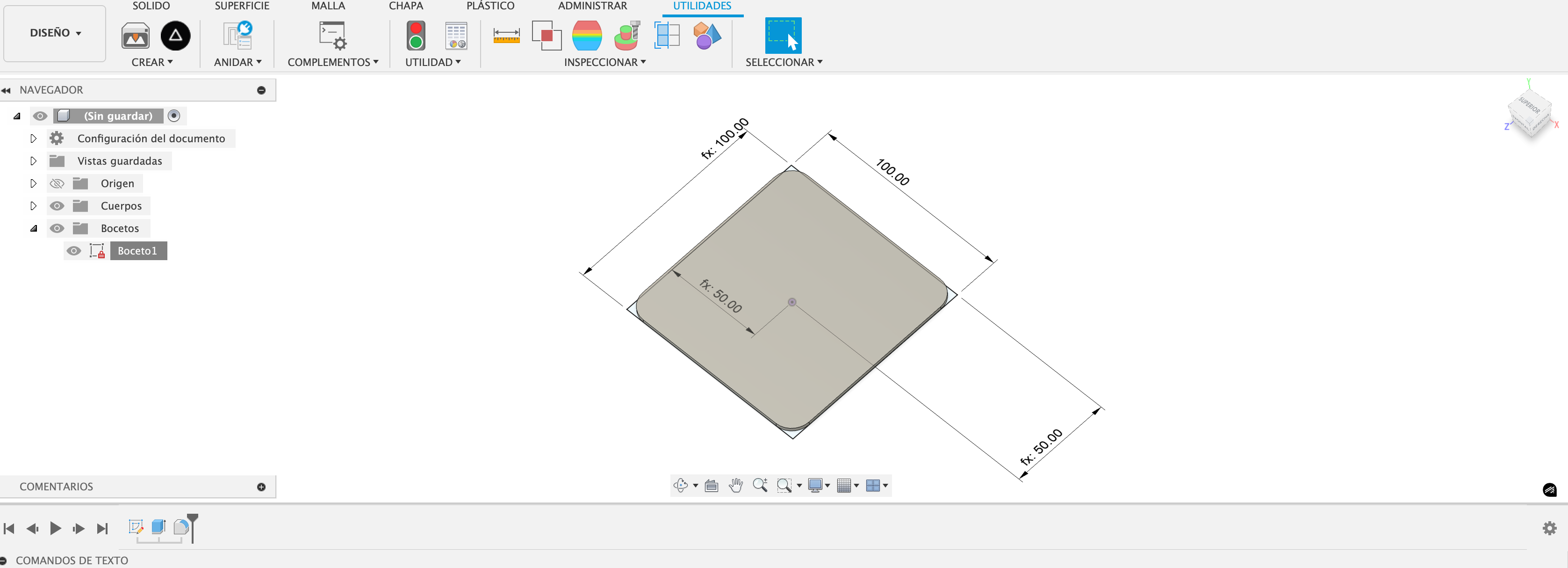

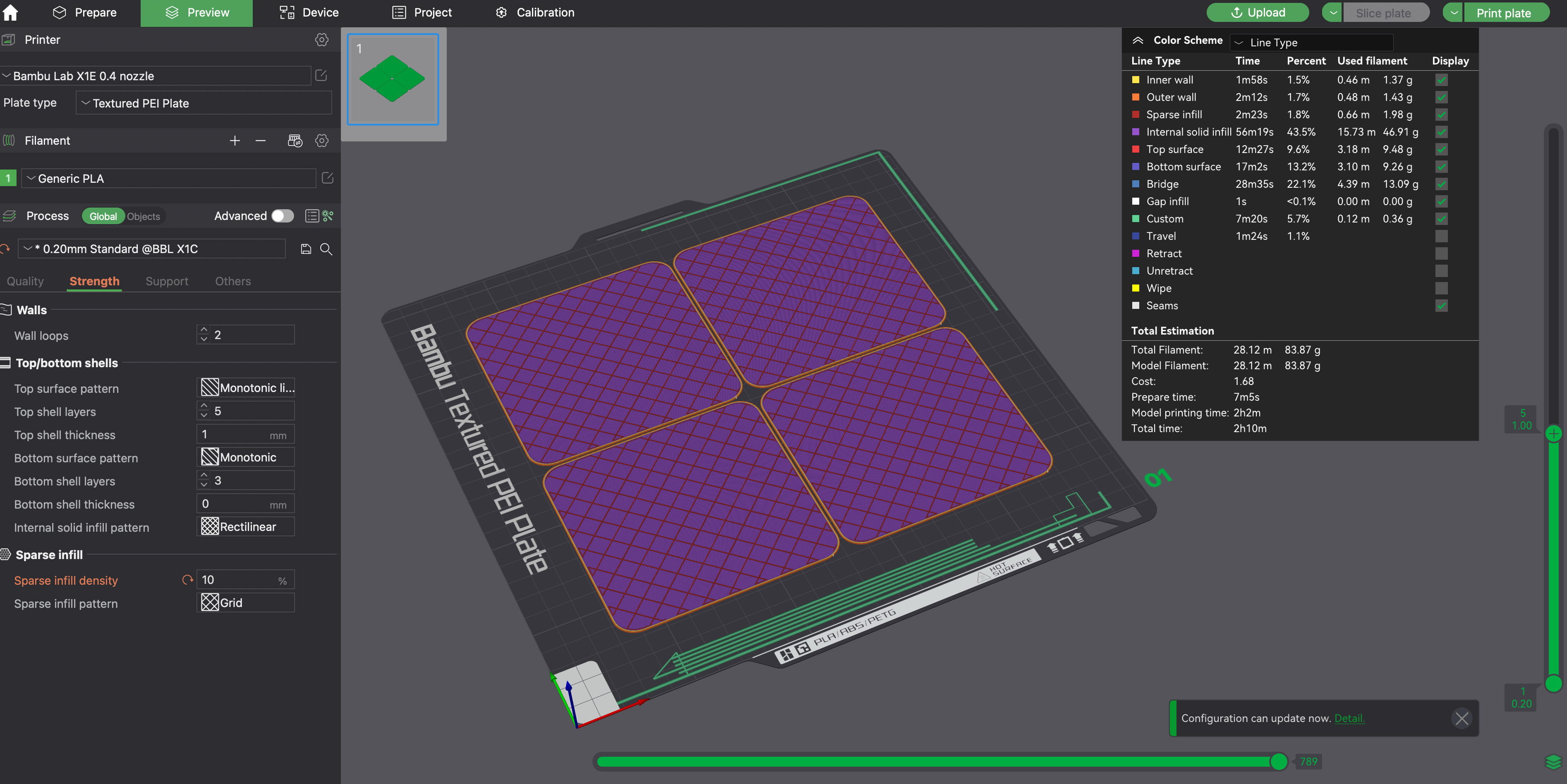

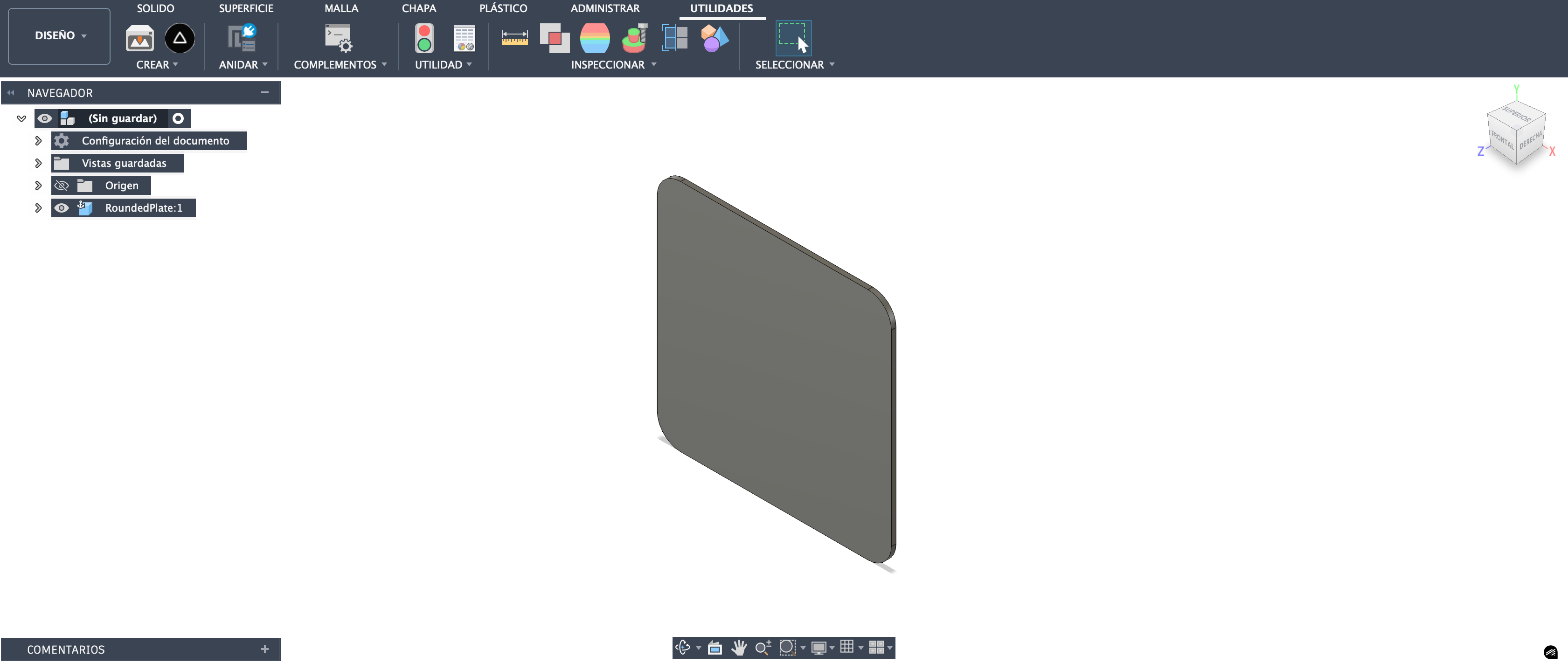

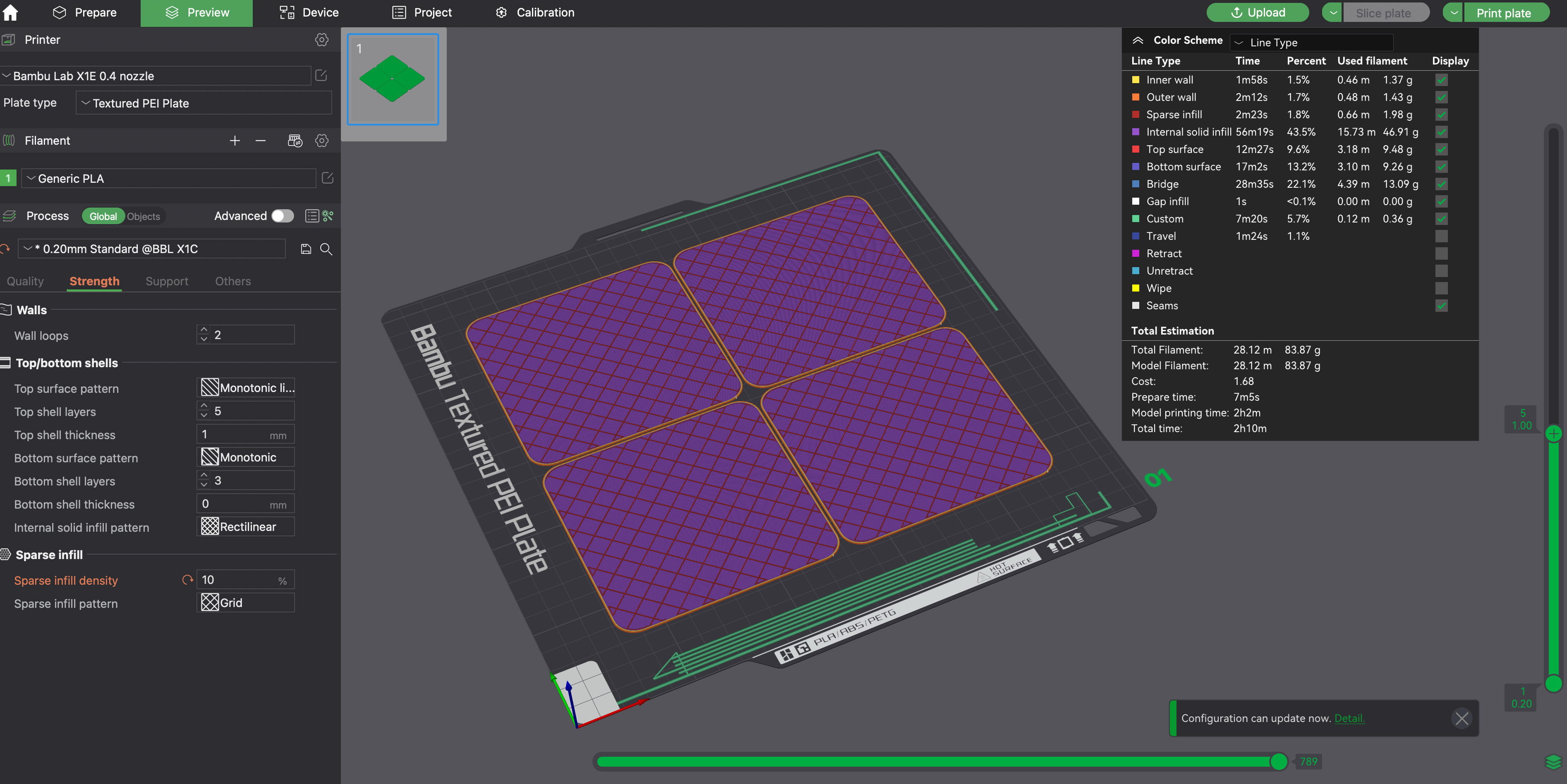

Step one was writing a tiny Python script for Autodesk Fusion that spits out a 100 mm × 100 mm × 2 mm plate with rounded corners. Export STL, toss into Bambu Studio, clone like a greedy raccoon, slice, and print four yellow beauties. Two hours later I had perfectly boring plates destined for profoundly irresponsible use.

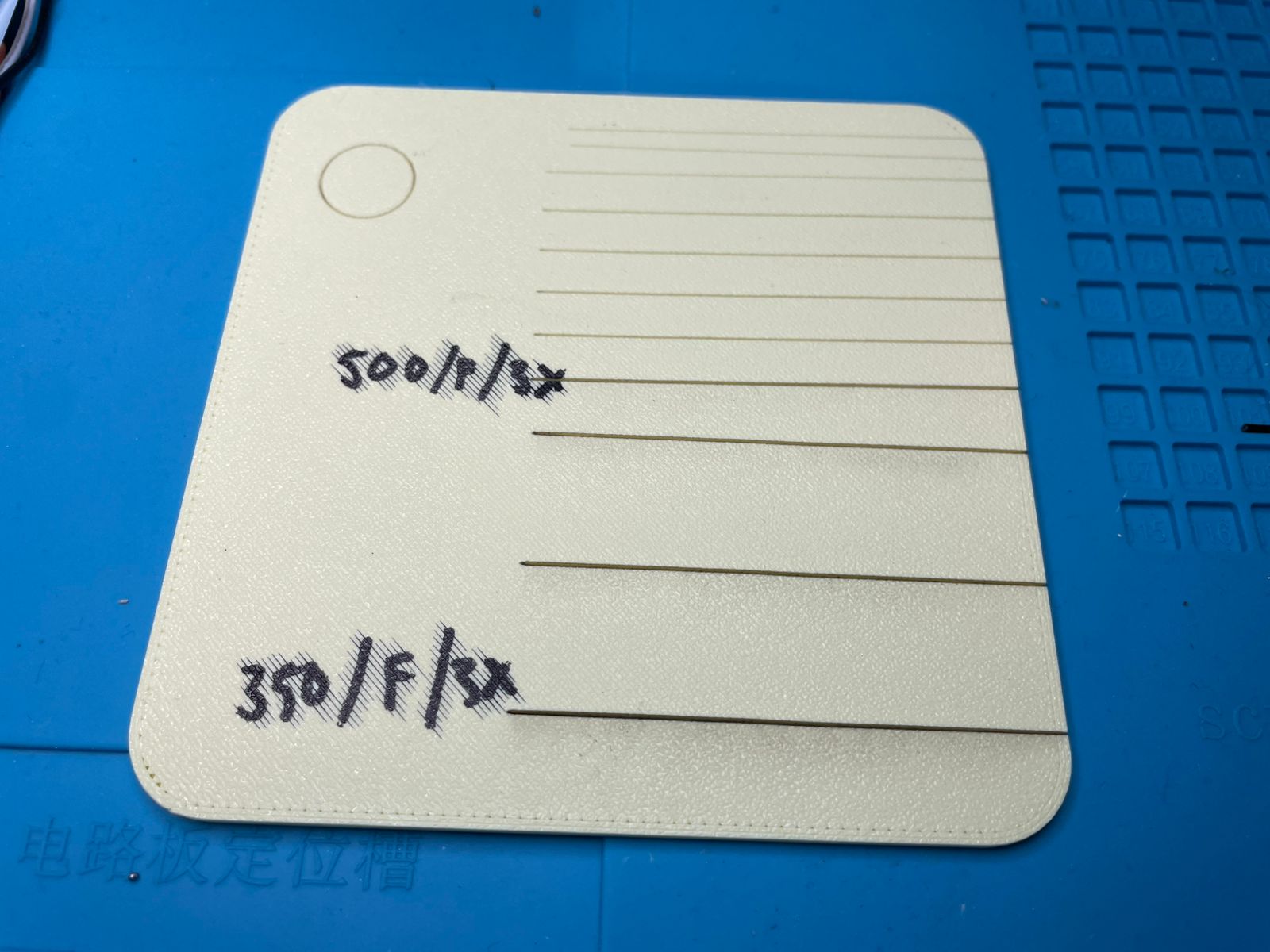

Next came the Glowforge. I know: PLA isn’t exactly a laser’s best friend. And yet, here we are. I dialed in two settings:

- Cut: 350 speed, full power, 3 passes.

- Score-for-bend: 500 speed, full power, 3 passes.

The scoring doesn’t cut through — it just wounds the PLA enough to make it fold where I want. Ruthless, but effective.

Checklist

Why This Isn’t in Any Other Week

This is about abusing processes on purpose: 3D print a rigid sheet, then laser-score it to fold. It’s not machining, not composites, not straightforward 3D printing, and definitely not a “recommended materials” lecture. It’s a mash-up that only belongs in Wildcard Week.

Process: From Fusion to Folded Nonsense

1) Fusion Scripted Plate (because clicking is for cowards)

A Python script in Fusion 360 generates a 100×100×2 mm plate with rounded corners. Export STL, print, and feel like a wizard. No drama — just geometry doing as it’s told.

import adsk.core, adsk.fusion, adsk.cam, traceback

def run(context):

ui = None

try:

app = adsk.core.Application.get()

ui = app.userInterface

design = app.activeProduct

if not isinstance(design, adsk.fusion.Design):

ui.messageBox("Switch to the Design workspace first.")

return

rootComp = design.rootComponent

occs = rootComp.occurrences

newOcc = occs.addNewComponent(adsk.core.Matrix3D.create())

comp = newOcc.component

# --- 1. Create sketch ---

sketch = comp.sketches.add(comp.xYConstructionPlane)

lines = sketch.sketchCurves.sketchLines

# Fusion uses cm internally → 100 mm = 10 cm, 10 mm fillet = 1 cm

half_size = 5.0 # 10 cm / 2

corner_r = 1.0 # 1 cm = 10 mm

# Draw a rectangle with filleted corners manually

p1 = adsk.core.Point3D.create(-half_size + corner_r, -half_size, 0)

p2 = adsk.core.Point3D.create( half_size - corner_r, -half_size, 0)

p3 = adsk.core.Point3D.create( half_size, -half_size + corner_r, 0)

p4 = adsk.core.Point3D.create( half_size, half_size - corner_r, 0)

p5 = adsk.core.Point3D.create( half_size - corner_r, half_size, 0)

p6 = adsk.core.Point3D.create(-half_size + corner_r, half_size, 0)

p7 = adsk.core.Point3D.create(-half_size, half_size - corner_r, 0)

p8 = adsk.core.Point3D.create(-half_size, -half_size + corner_r, 0)

# Straight segments between tangent points

lines.addByTwoPoints(p1, p2)

lines.addByTwoPoints(p3, p4)

lines.addByTwoPoints(p5, p6)

lines.addByTwoPoints(p7, p8)

# Arcs for rounded corners

arcs = sketch.sketchCurves.sketchArcs

arcs.addByCenterStartSweep(adsk.core.Point3D.create( half_size - corner_r, -half_size + corner_r, 0), p2, 1.5708)

arcs.addByCenterStartSweep(adsk.core.Point3D.create( half_size - corner_r, half_size - corner_r, 0), p4, 1.5708)

arcs.addByCenterStartSweep(adsk.core.Point3D.create(-half_size + corner_r, half_size - corner_r, 0), p6, 1.5708)

arcs.addByCenterStartSweep(adsk.core.Point3D.create(-half_size + corner_r, -half_size + corner_r, 0), p8, 1.5708)

# --- 2. Extrude 2 mm (0.2 cm) ---

prof = sketch.profiles.item(0)

extrudes = comp.features.extrudeFeatures

extInput = extrudes.createInput(prof, adsk.fusion.FeatureOperations.NewBodyFeatureOperation)

extInput.setDistanceExtent(False, adsk.core.ValueInput.createByReal(0.2))

ext = extrudes.add(extInput)

body = ext.bodies.item(0)

body.name = "Rounded_100x100x2mm"

comp.name = "RoundedPlate"

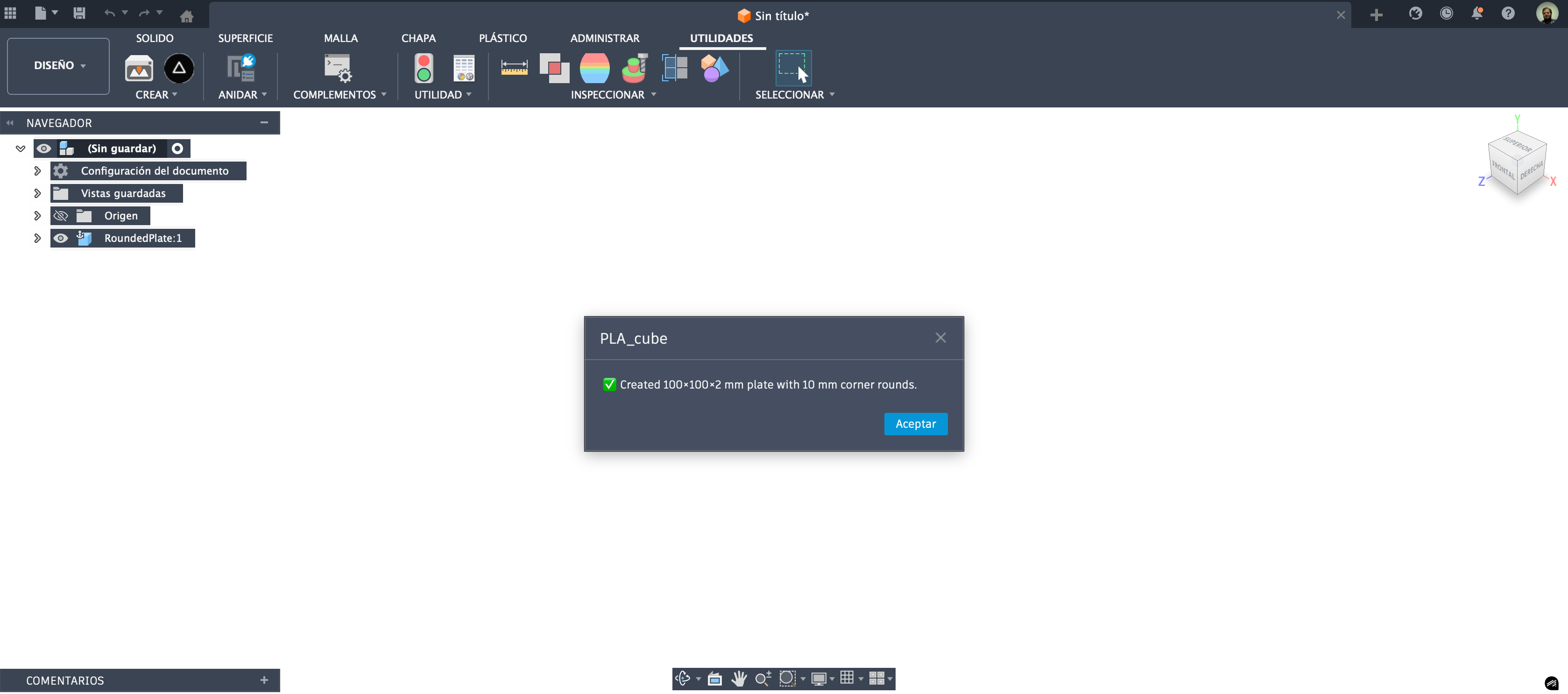

ui.messageBox("Created 100×100×2 mm plate with 10 mm corner rounds.")

except:

if ui:

ui.messageBox("Failed:\n{}".format(traceback.format_exc()))

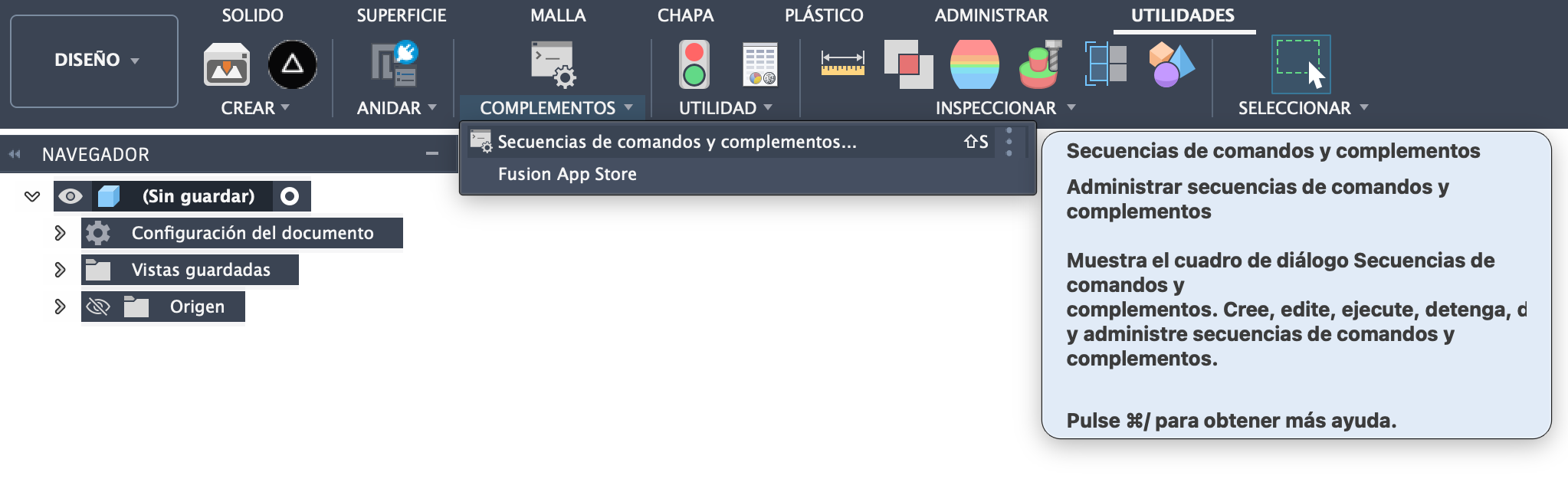

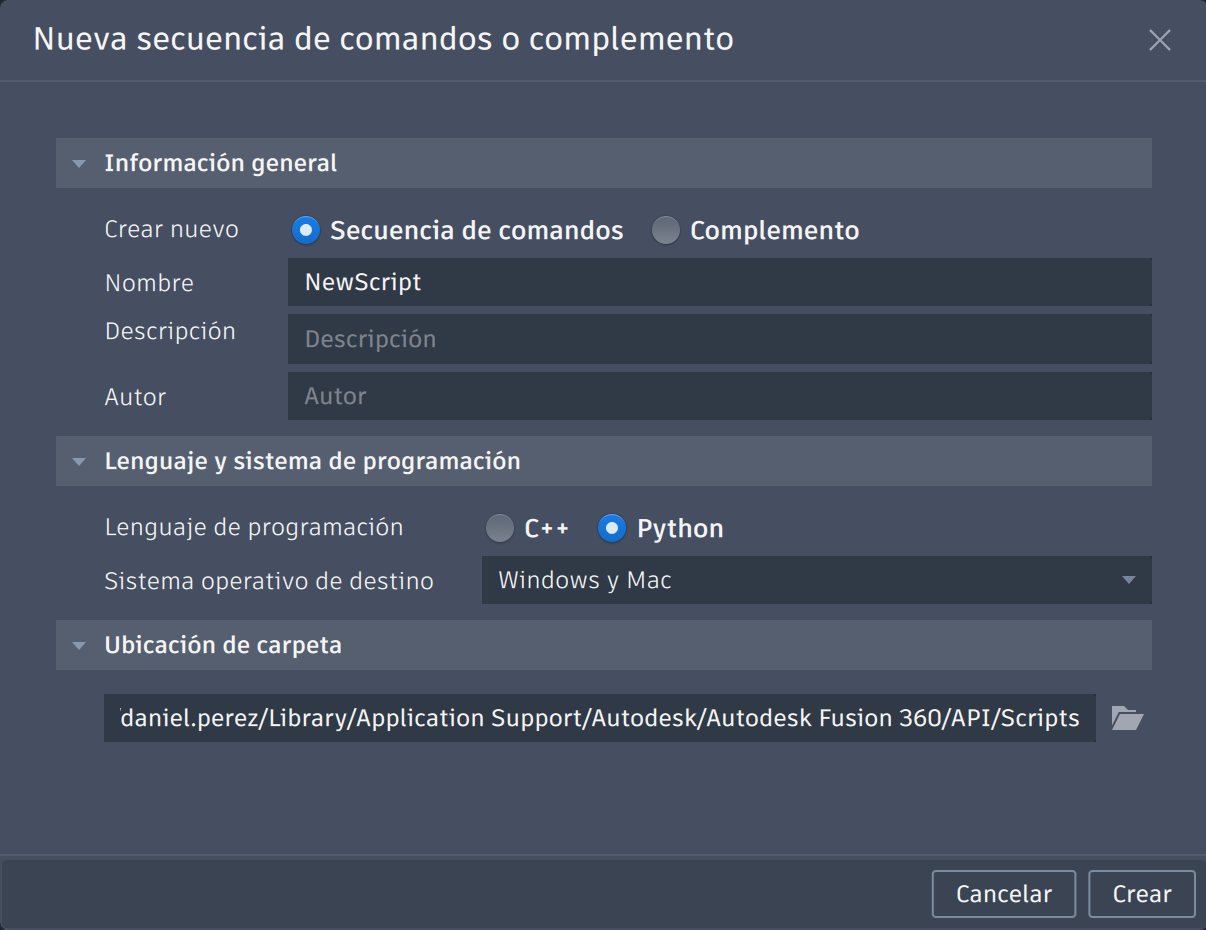
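The corner coordinates in the script are easy to get wrong by a sign, so here is a minimal sketch of the same arithmetic outside Fusion, where it can be checked with plain Python. The helper names `mm_to_cm` and `rounded_rect_points` are hypothetical (they are not in the script above); the only assumption carried over is that Fusion's API works in centimetres.

```python
from typing import List, Tuple

Point = Tuple[float, float]

MM_PER_CM = 10.0


def mm_to_cm(mm: float) -> float:
    """Convert millimetres to centimetres (the unit the Fusion API expects)."""
    return mm / MM_PER_CM


def rounded_rect_points(size_mm: float, radius_mm: float) -> Tuple[List[Point], List[Point]]:
    """Return (tangent_points, arc_centers) in cm for a centred rounded square.

    tangent_points are p1..p8 in the same counter-clockwise order as the script;
    arc_centers are the four corner-arc centres in the order the arcs are drawn.
    """
    if radius_mm < 0 or 2 * radius_mm > size_mm:
        raise ValueError("corner radius must be between 0 and half the plate size")
    h = mm_to_cm(size_mm) / 2   # half side length in cm
    r = mm_to_cm(radius_mm)     # corner radius in cm
    tangent_points = [
        (-h + r, -h), (h - r, -h),   # bottom edge
        (h, -h + r), (h, h - r),     # right edge
        (h - r, h), (-h + r, h),     # top edge
        (-h, h - r), (-h, -h + r),   # left edge
    ]
    arc_centers = [
        (h - r, -h + r),   # bottom-right
        (h - r, h - r),    # top-right
        (-h + r, h - r),   # top-left
        (-h + r, -h + r),  # bottom-left
    ]
    return tangent_points, arc_centers


points, centers = rounded_rect_points(size_mm=100, radius_mm=10)
print(points[0], points[2], centers[0])
```

Changing `size_mm` or `radius_mm` gives the numbers to paste into `half_size` and `corner_r` for a different plate.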

How to Upload and Run the Python Code in Fusion 360

- Go to: UTILITIES → ADD-INS → Scripts and Add-ins.

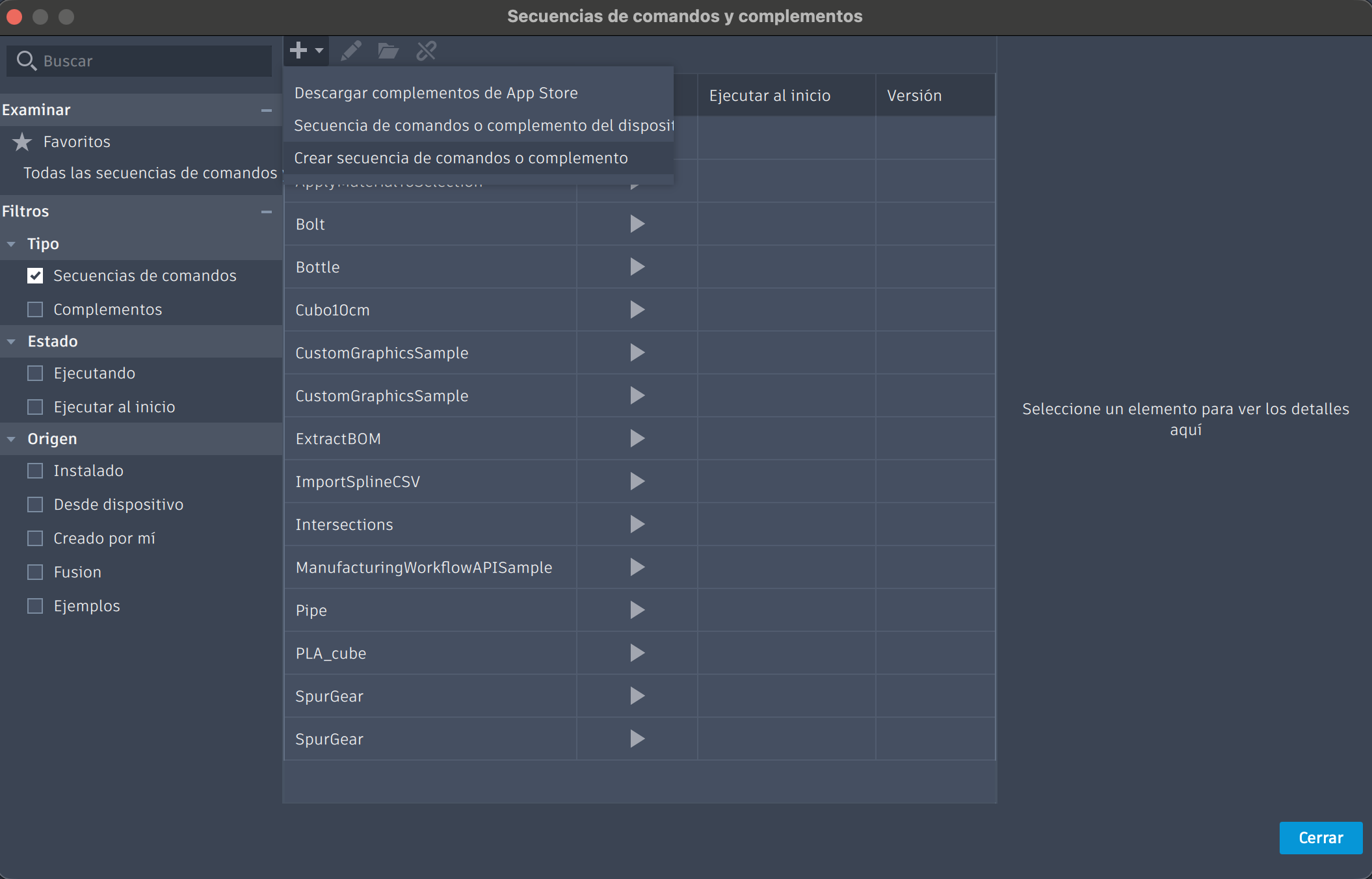

Yes, the interface is probably in Spanish. Blame Autodesk, not me.

- Click the + icon → choose Create new Script or Add-in.

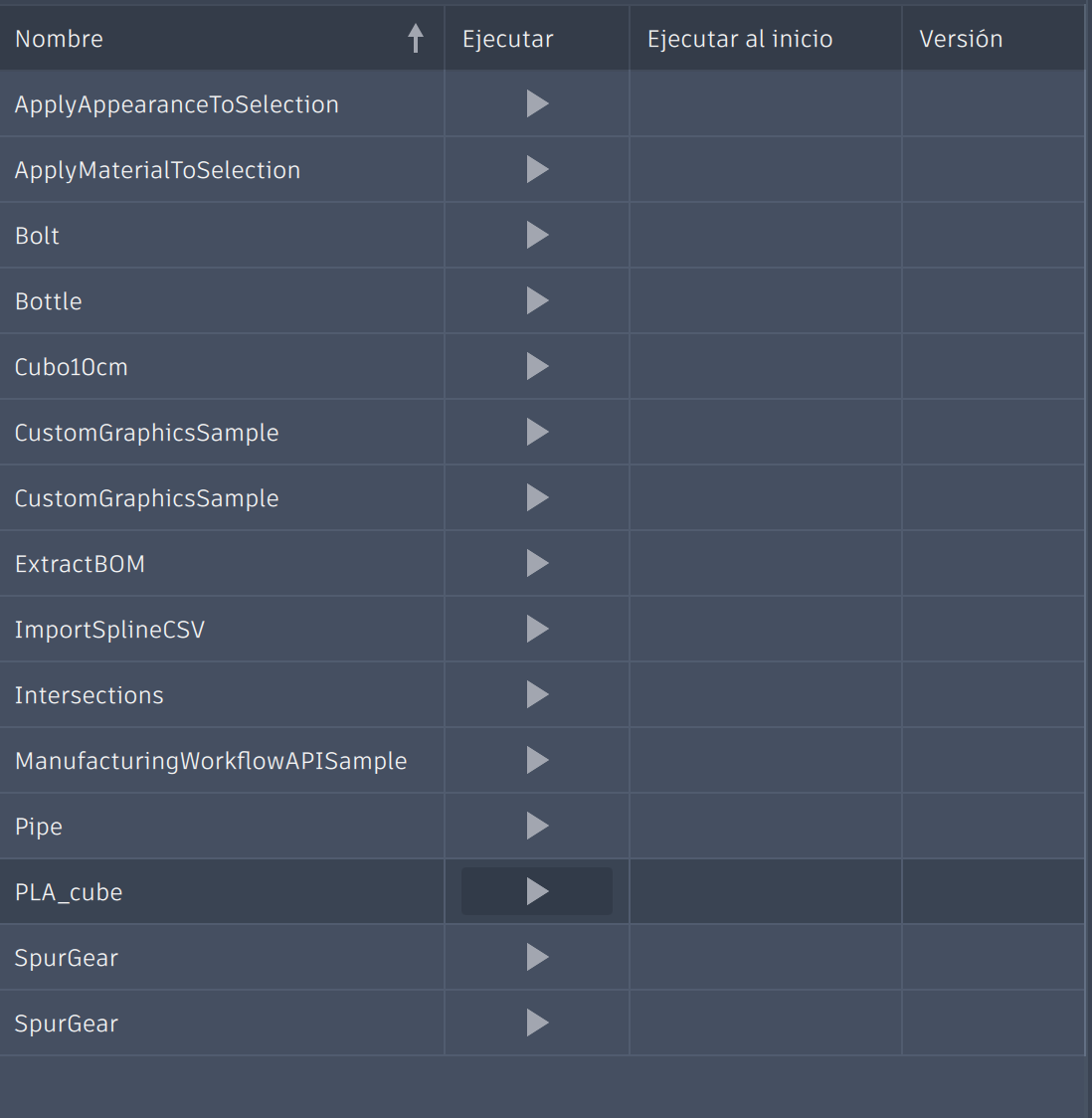

Name it something inspiring, like PLA_cube, and select Python as the language.

- Tell Fusion where your file is by clicking the folder icon.

- Go back to the Scripts and Add-ins window, find your new script in the list, select it, and click Run. If everything works, Fusion rewards you with a lovely confirmation message.

- Save or export the body as STL — ready to be sliced and abused in the Glowforge later.

That’s it. You now have a parametric, Python-born plate, and an inflated sense of power. Enjoy responsibly.
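If even the export click feels like too much, the STL step can probably be scripted too. This is a hedged sketch, not what I actually ran: it assumes the `design` and `body` objects from the script above, uses Fusion's `ExportManager` (`createSTLExportOptions` and `execute`), and the function name and file path are placeholders.

```python
def export_body_as_stl(design, body, stl_path):
    """Export one body to an STL file through Fusion's ExportManager.

    design   -- the active adsk.fusion.Design
    body     -- the adsk.fusion.BRepBody to export (the plate)
    stl_path -- destination file path (placeholder; choose your own)
    Returns True if Fusion reports that the export succeeded.
    """
    export_mgr = design.exportManager
    # Default STL options are used here; refinement settings are left untouched
    options = export_mgr.createSTLExportOptions(body, stl_path)
    return export_mgr.execute(options)
```

Inside `run()`, a call such as `export_body_as_stl(design, body, "plate.stl")` would go right after the body is named. The path is hypothetical, so point it somewhere Fusion is allowed to write.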

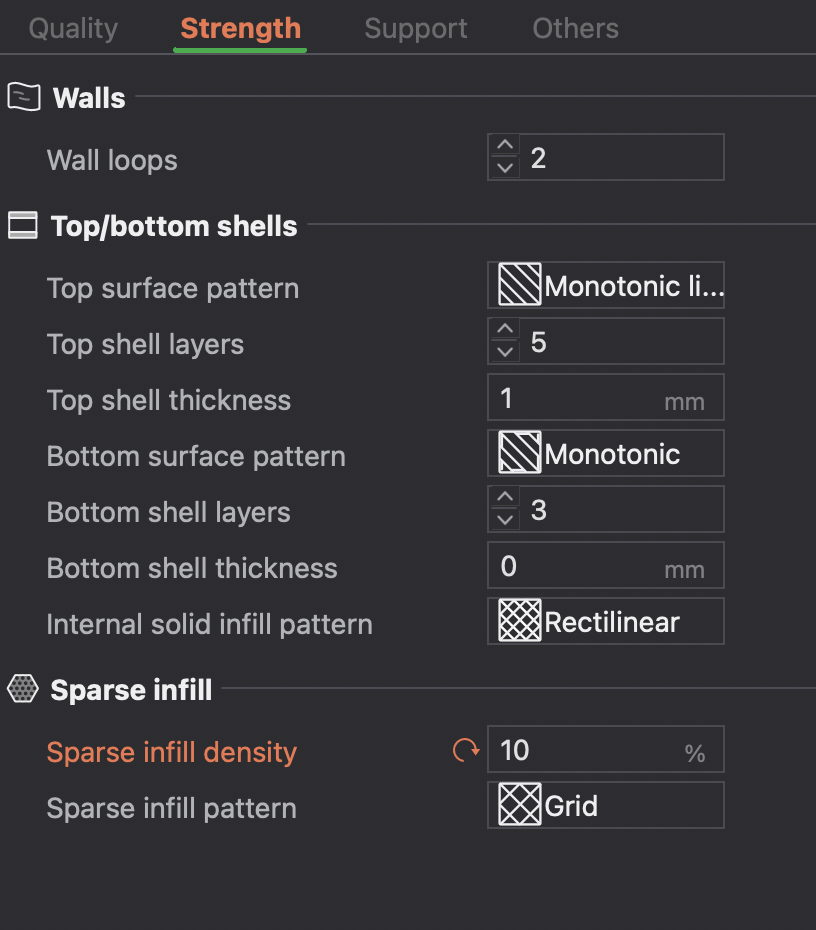

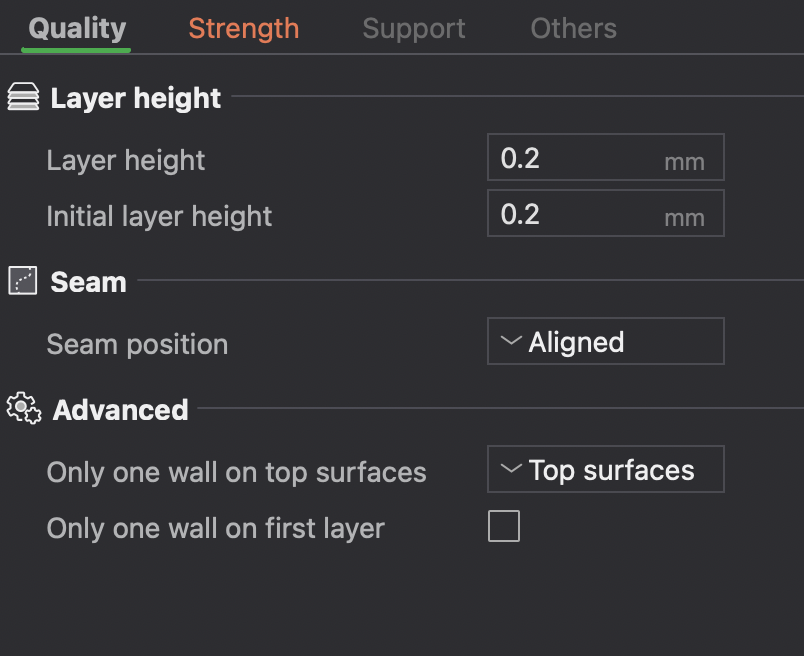

2) Slice and Print in Bambu Studio

Import STL, duplicate 4×, PLA yellow, 0.2 mm layer height, infill who cares (it’s a plate), and print. Two hours later: four rigid canvases ready for laser therapy.

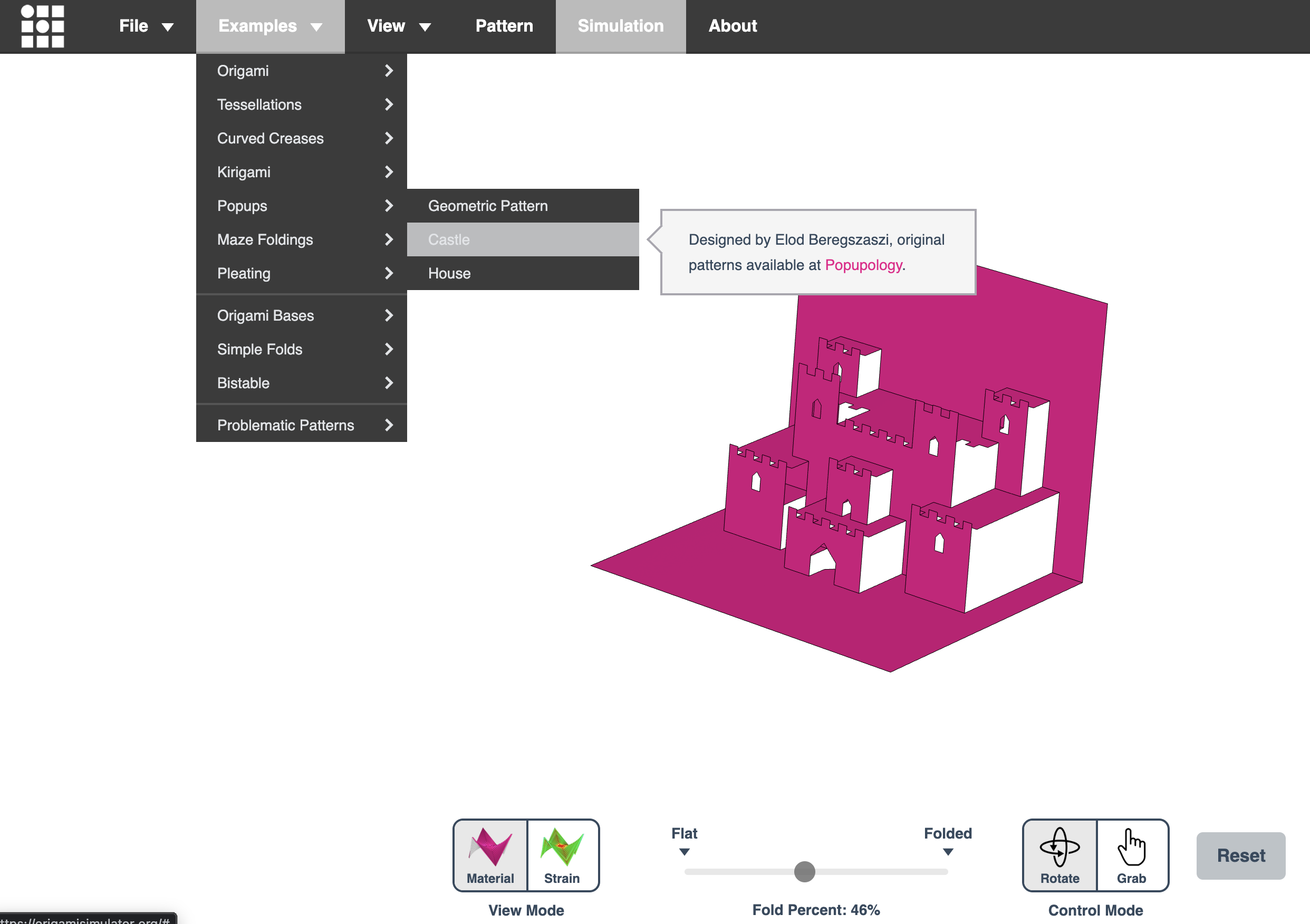

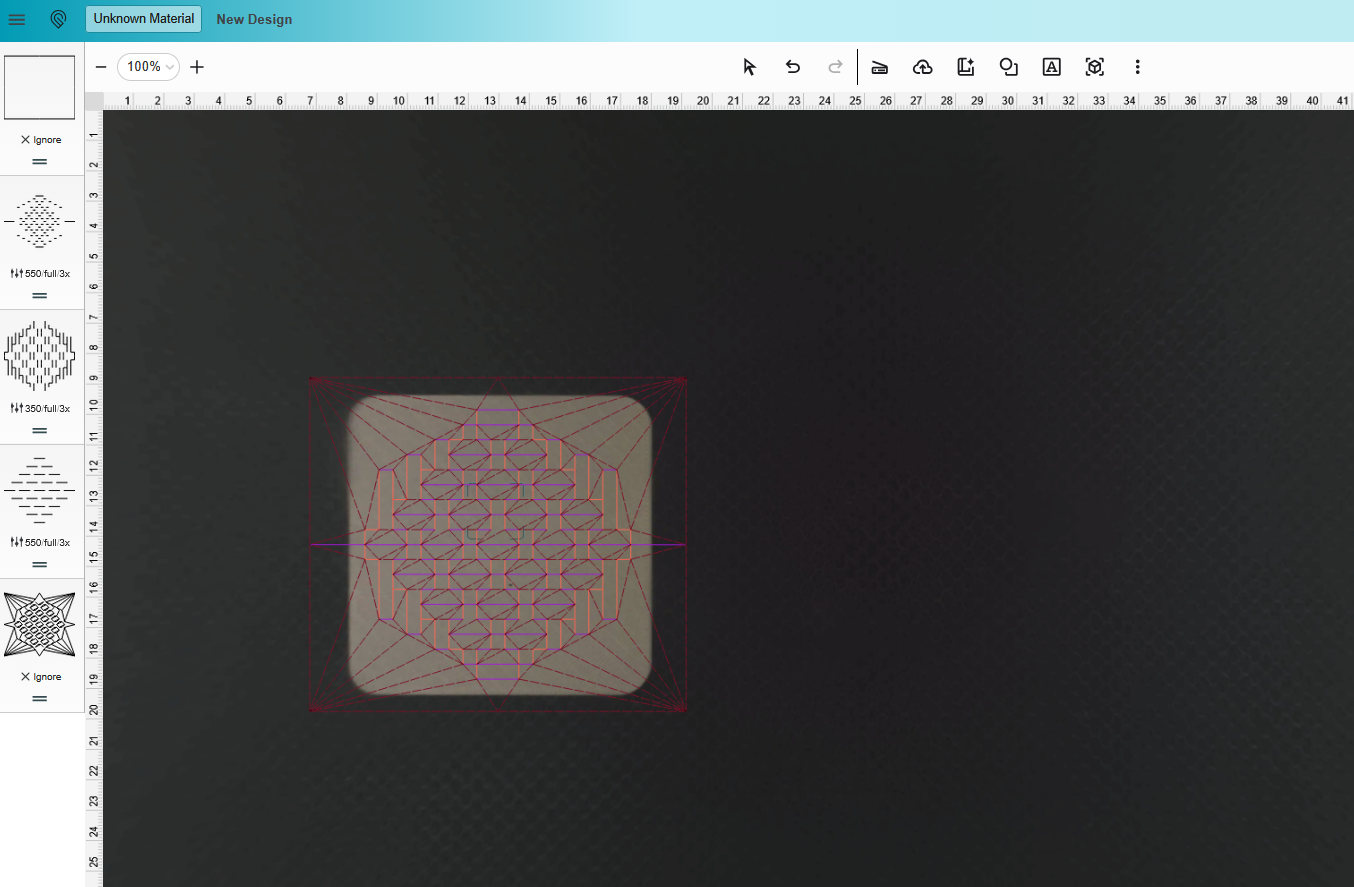

3) Origami Patterns from the Web

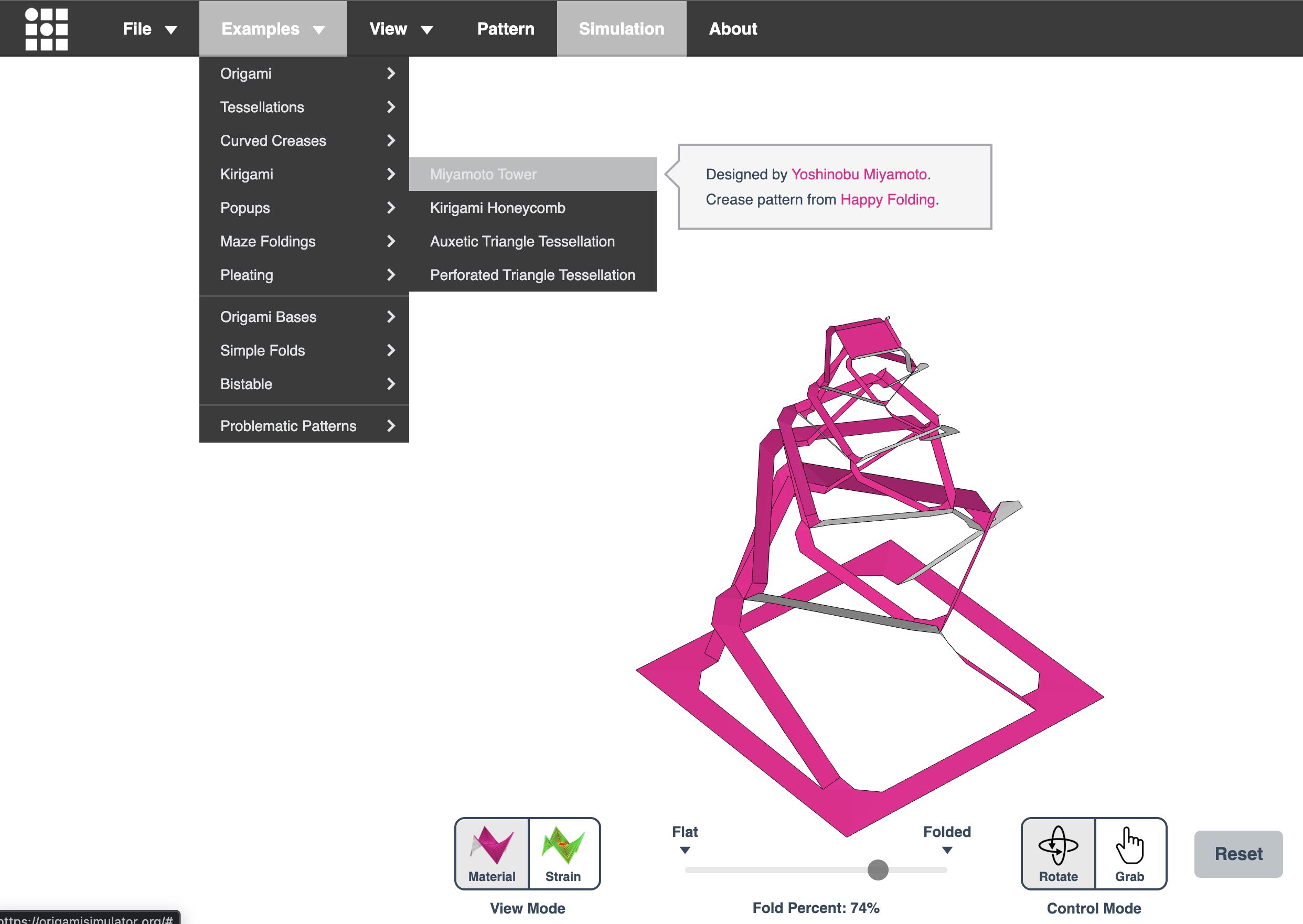

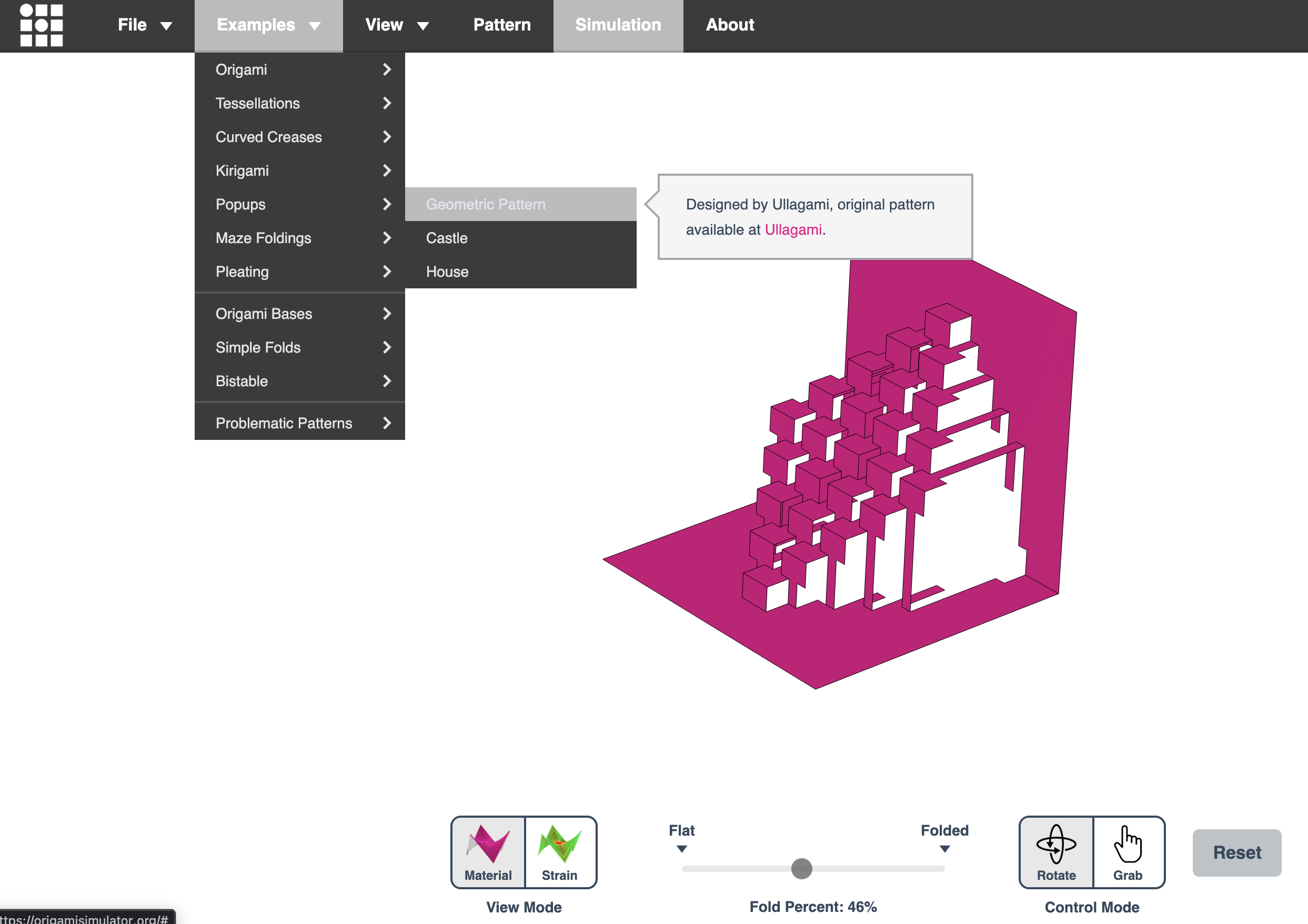

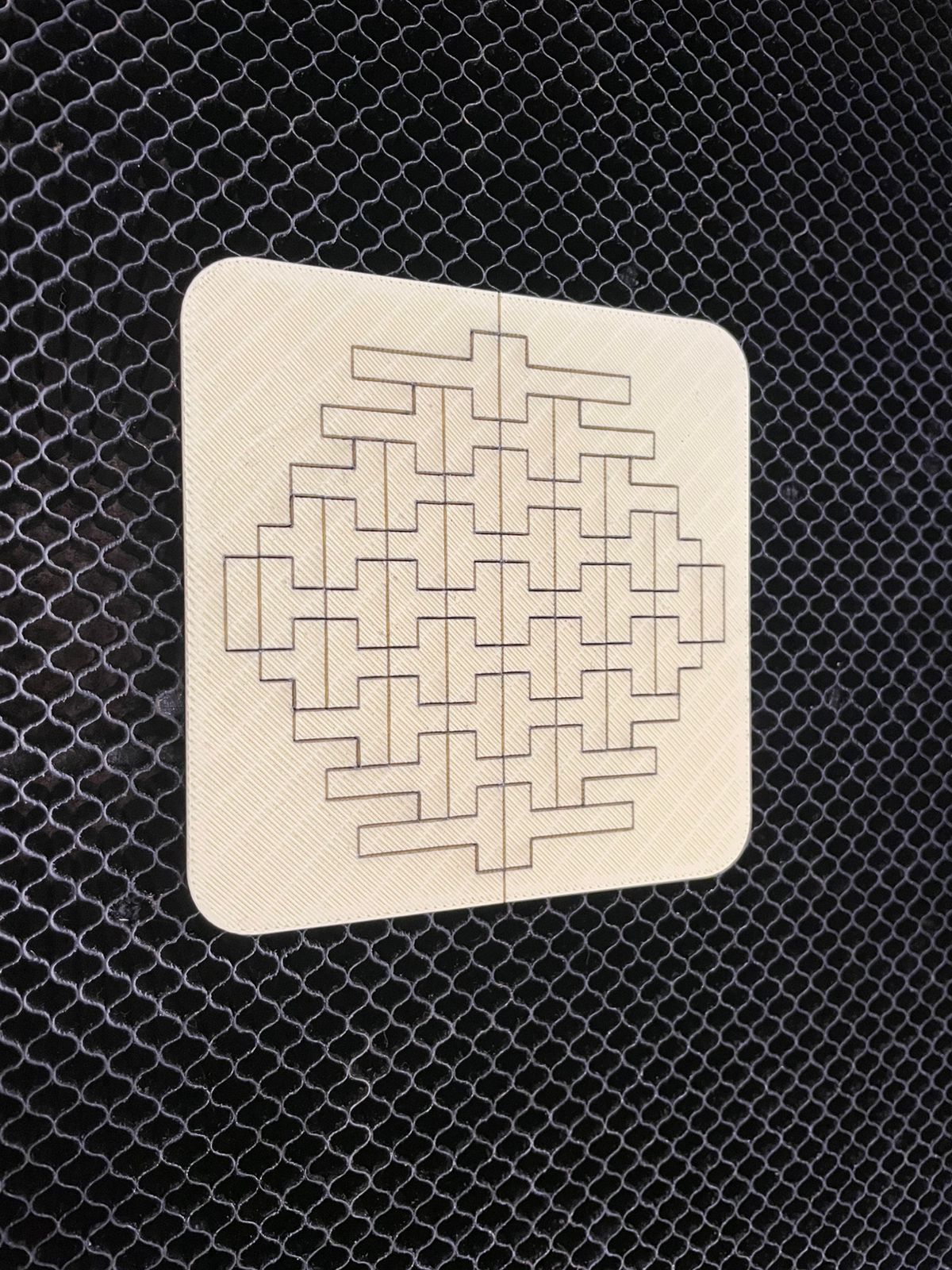

I grabbed SVGs from the delightful Origami Simulator. Because if you’re going to break rules, at least outsource the math.

- Popup Castle

- Geometric Pattern (the troublemaker)

- Kirigami Miyamoto Tower

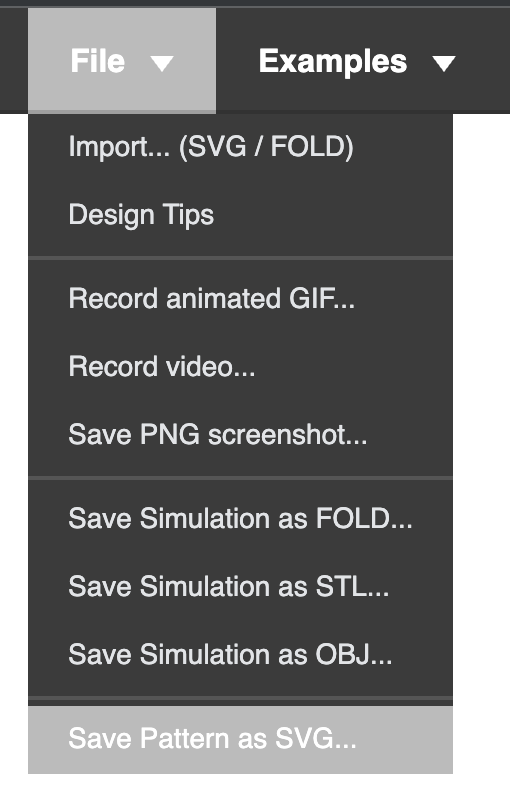

Choose your design and import the SVG files from the File menu.
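Before the laser gets involved, it helps to know which lines in the SVG are outlines and which are folds. The sketch below counts elements per stroke colour and maps them to an operation. The colour table is an assumption based on the usual crease-pattern convention (black boundary, green cut, red mountain, blue valley); I have not checked it against these particular files, so adjust `STROKE_TO_OPERATION` to whatever your SVG actually contains.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Assumed colour convention; edit to match your own SVG
STROKE_TO_OPERATION = {
    "#000000": "cut",    # outer boundary
    "#00ff00": "cut",    # kirigami cut
    "#ff0000": "score",  # mountain fold
    "#0000ff": "score",  # valley fold
}


def normalize_color(value):
    """Lower-case a hex colour and expand #rgb shorthand to #rrggbb."""
    value = value.strip().lower()
    if len(value) == 4 and value.startswith("#"):
        value = "#" + "".join(ch * 2 for ch in value[1:])
    return value


def stroke_of(element):
    """Return the element's stroke colour from `stroke` or `style`, or None."""
    stroke = element.get("stroke")
    if stroke is None:
        for declaration in element.get("style", "").split(";"):
            name, _, val = declaration.partition(":")
            if name.strip() == "stroke":
                stroke = val
    return normalize_color(stroke) if stroke else None


def count_operations(svg_text):
    """Count stroked elements per laser operation ('cut', 'score', 'unassigned')."""
    counts = Counter()
    for element in ET.fromstring(svg_text).iter():
        stroke = stroke_of(element)
        if stroke is not None:
            counts[STROKE_TO_OPERATION.get(stroke, "unassigned")] += 1
    return counts


sample = (
    '<svg xmlns="http://www.w3.org/2000/svg">'
    '<line stroke="#000" x1="0" y1="0" x2="10" y2="0"/>'
    '<line stroke="#FF0000" x1="0" y1="5" x2="10" y2="5"/>'
    '<line style="stroke: #0000ff; fill:none" x1="5" y1="0" x2="5" y2="10"/>'
    '</svg>'
)
print(dict(count_operations(sample)))
```

Anything that lands in `unassigned` is a line that needs a manual decision before it goes anywhere near the laser.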

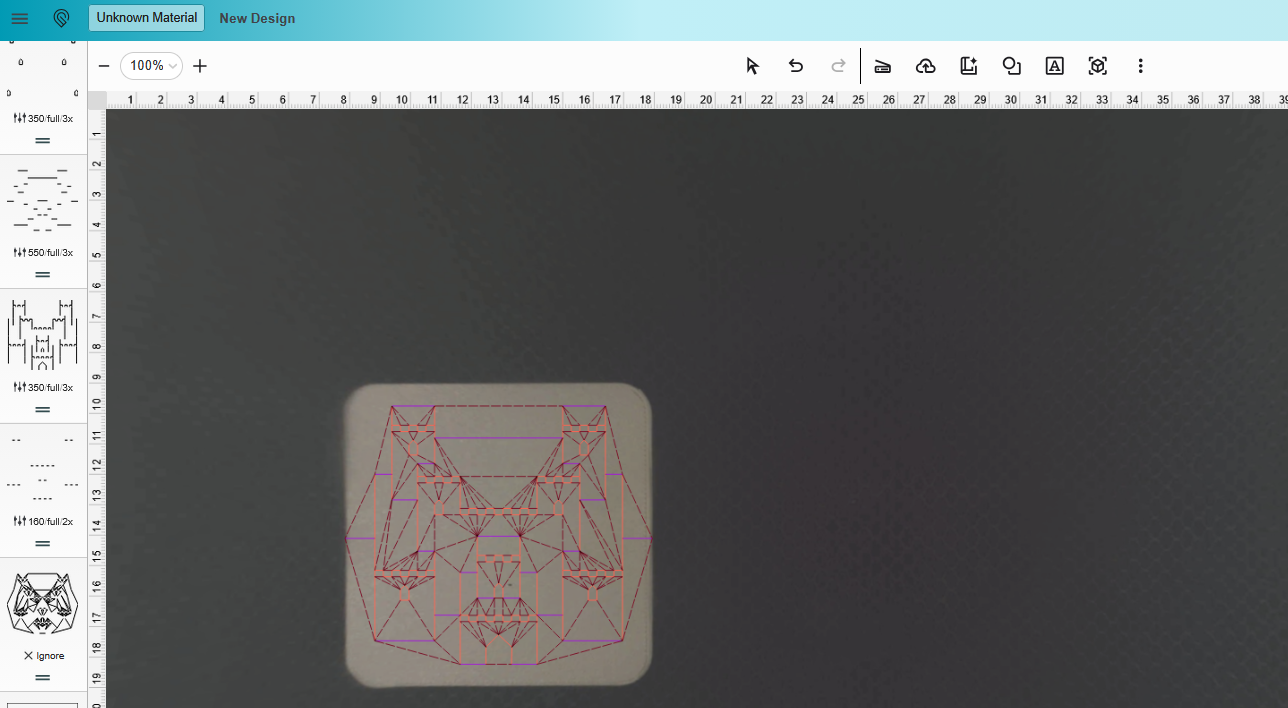

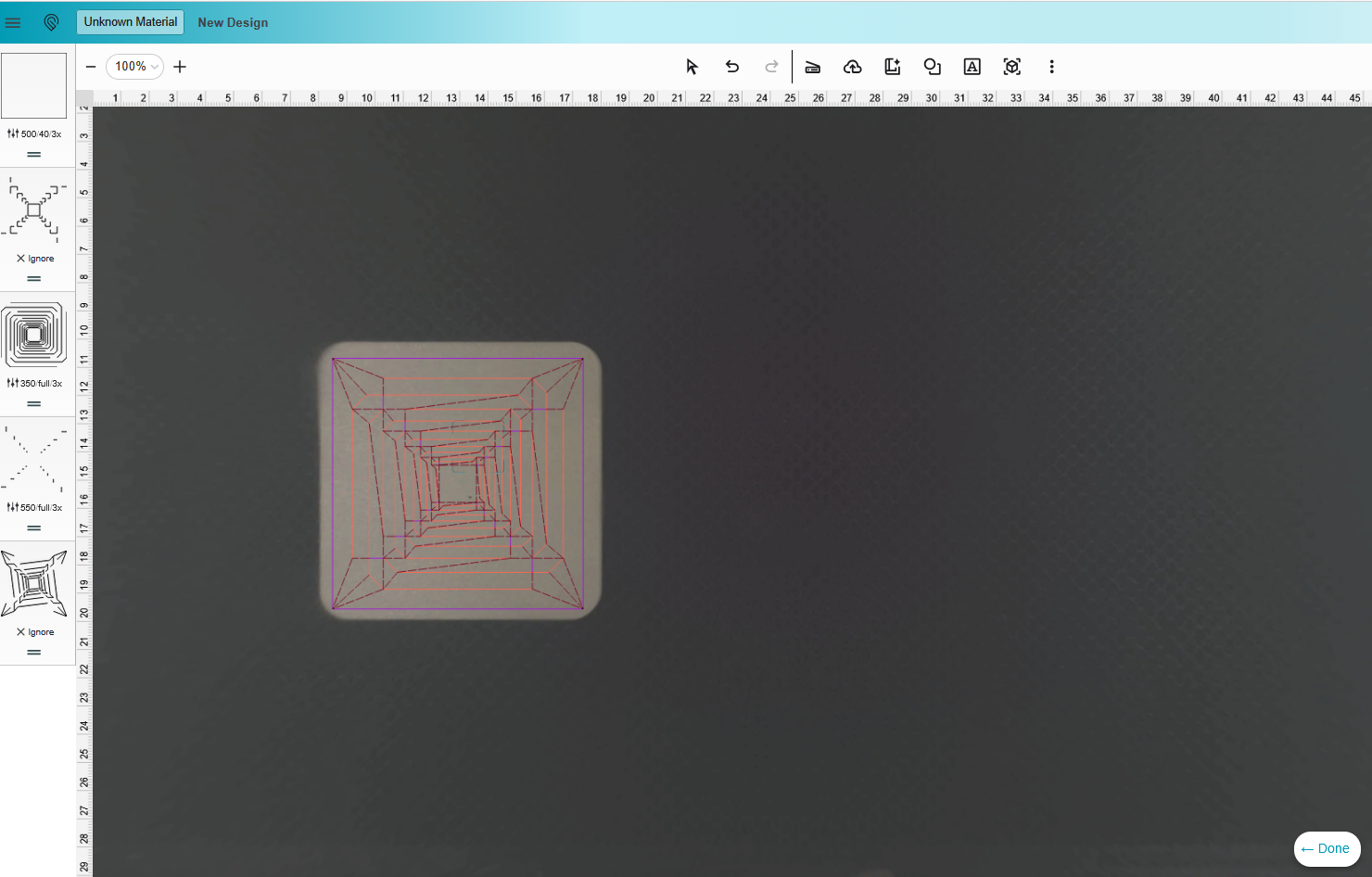

4) Glowforge: Scale, Assign, Blast

Import the SVGs into the Glowforge app, scale them to the plate, and assign operations: cut for outlines and score for fold lines. Then run the tuned settings:

- Cut: 350 speed, full power, 3 passes

- Score-for-bend: 500 speed, full power, 3 passes

Yes, I tested. No, I didn’t enjoy babysitting. But now it folds where I say it folds.
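The "scale to the plate" step is just a ratio, so it can be worked out before opening the app. A minimal sketch, assuming a uniform scale and a 5 mm margin around the pattern; the margin is an illustrative guess, not a value I measured, and `fit_scale` is a made-up helper name.

```python
def fit_scale(pattern_w, pattern_h, plate_mm=100.0, margin_mm=5.0):
    """Return the uniform scale factor that fits a pattern inside the plate.

    pattern_w, pattern_h -- pattern size in its own units (for example SVG px)
    plate_mm             -- side length of the square plate in mm
    margin_mm            -- clearance kept on every side (assumed value)
    The result is in mm per pattern unit.
    """
    usable = plate_mm - 2 * margin_mm
    if usable <= 0 or pattern_w <= 0 or pattern_h <= 0:
        raise ValueError("plate, margin and pattern sizes must leave usable area")
    # The tighter of the two directions limits the scale
    return min(usable / pattern_w, usable / pattern_h)


scale = fit_scale(pattern_w=180, pattern_h=90)
print(scale, 180 * scale, 90 * scale)
```

With a 180 × 90 pattern this gives a factor of 0.5, so the pattern ends up 90 mm × 45 mm on the 100 mm plate. A margin at least as large as the corner radius keeps lines off the rounded corners.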

Results: The Good, The Cool, and The Ugly

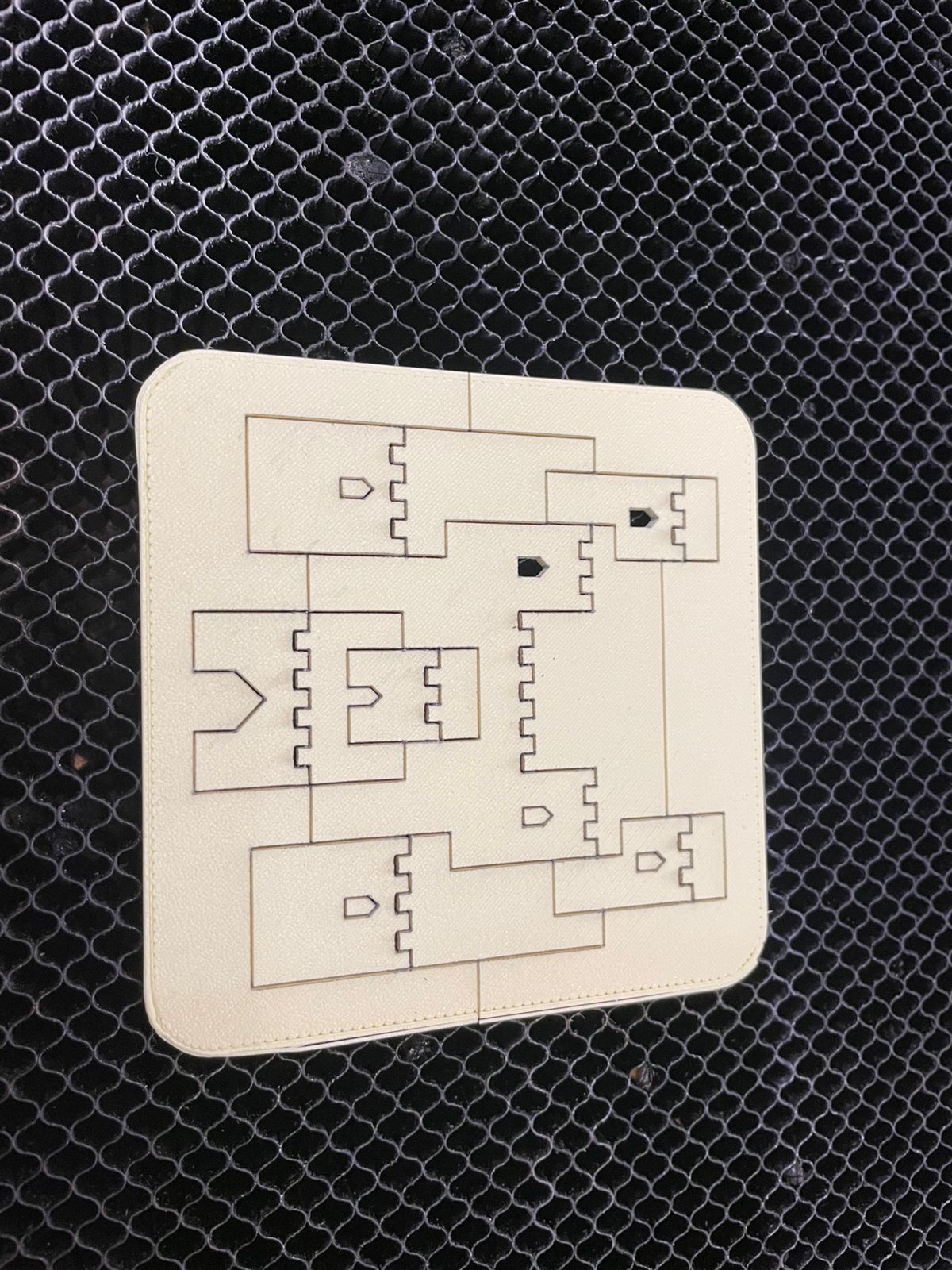

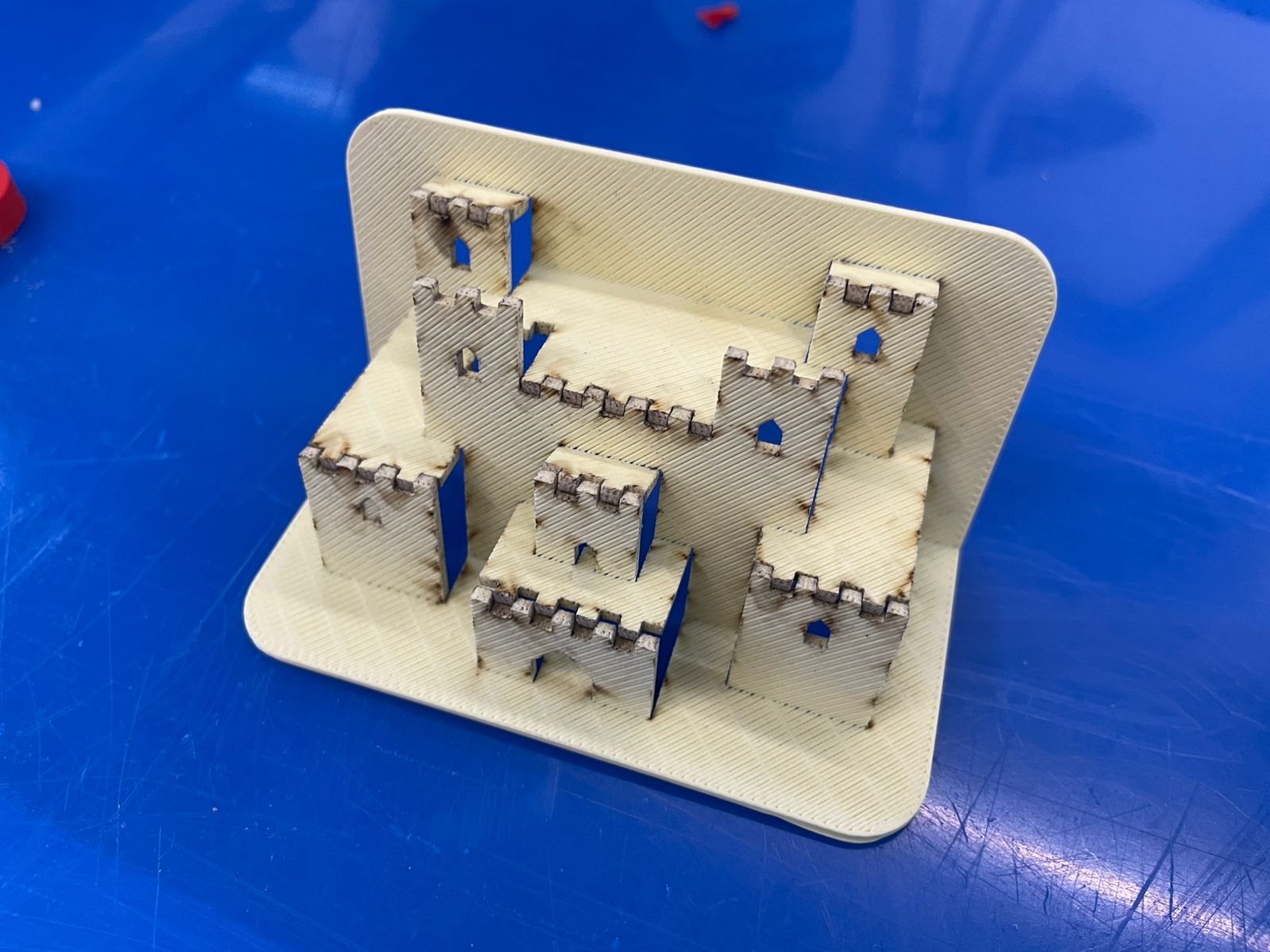

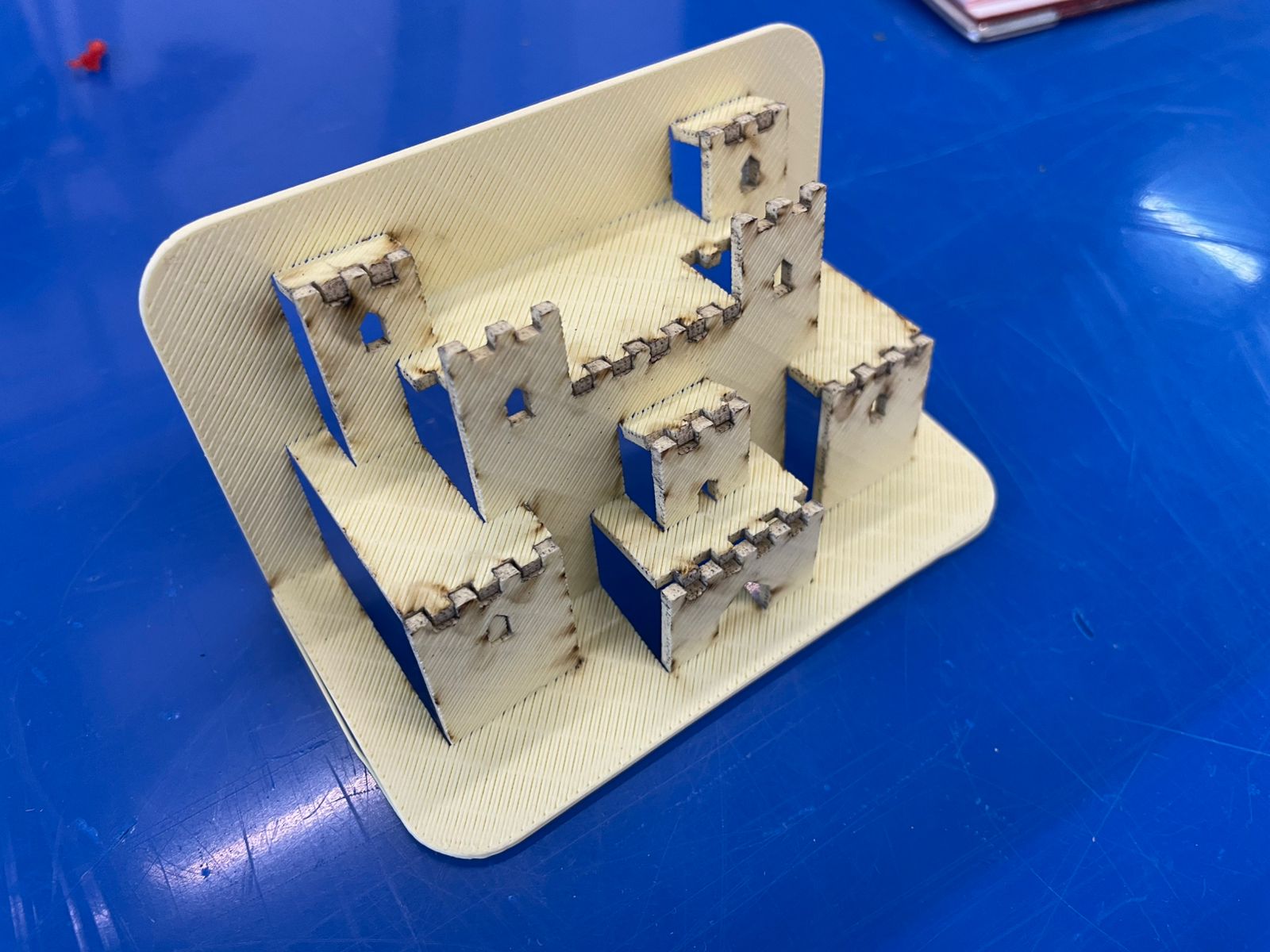

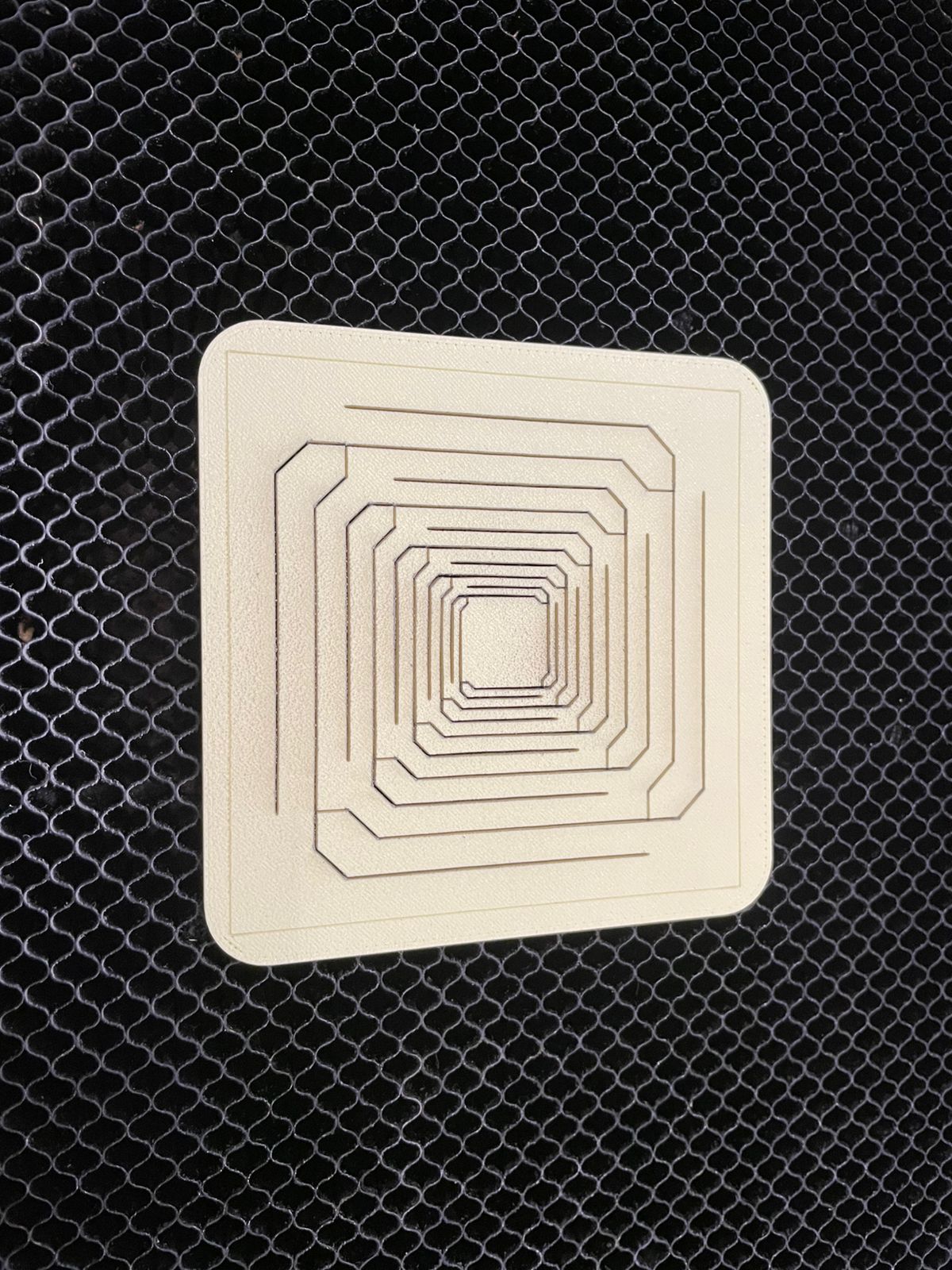

Popup Castle — surprisingly obedient

Folded cleanly, assembled fast, and popped like a budget theatre set. Would recommend.

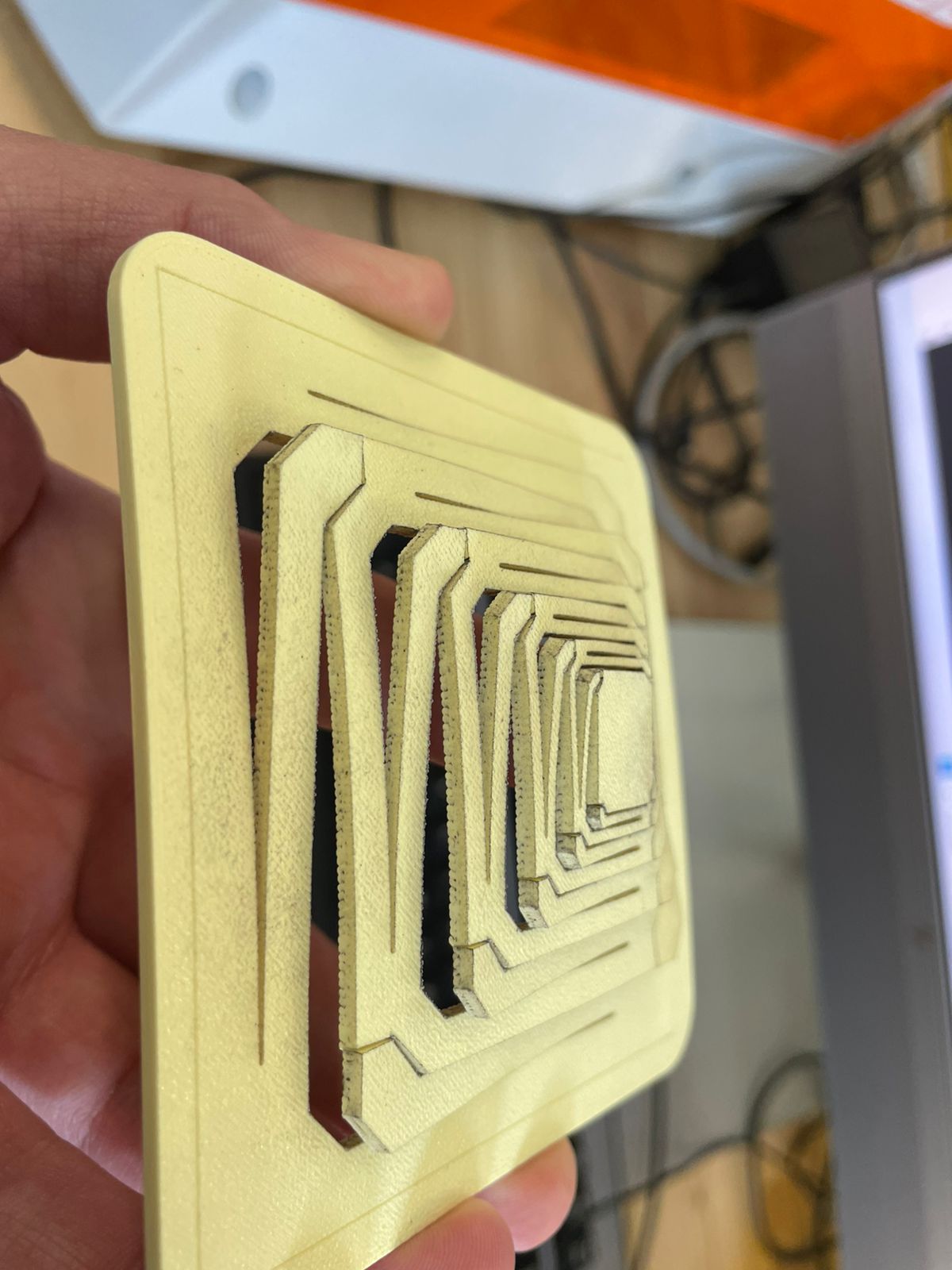

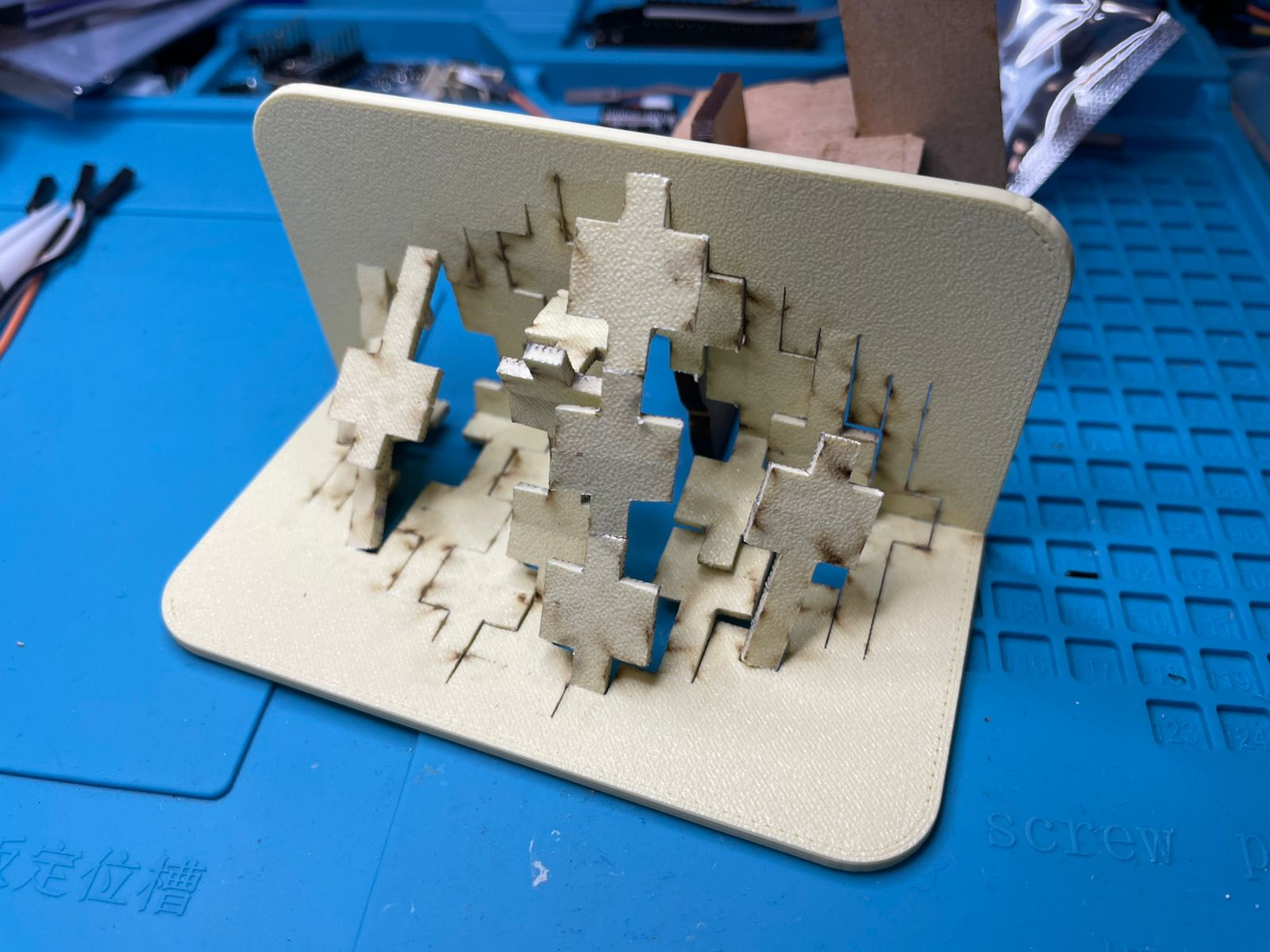

Kirigami Miyamoto Tower — bouncy and dramatic

Elastic and springy in a satisfying way. PLA actually did something artsy. We take those wins.

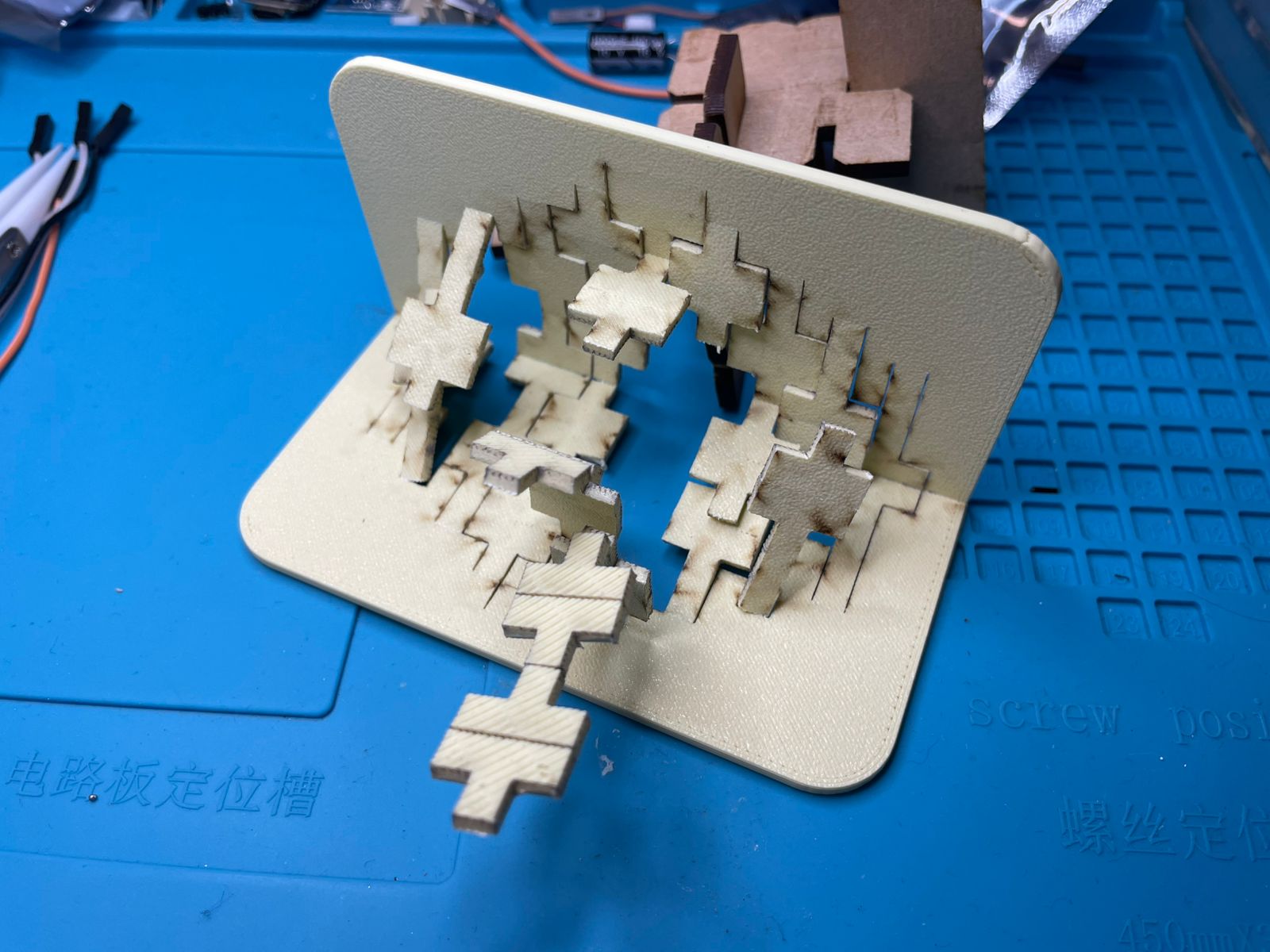

Geometric Pop-Up — expectations vs reality

Looked clever on screen, behaved like a grumpy hinge farm in real life. Not everything wants to be art.

Problems and Fixes

Fillets in Fusion throwing tantrums

Fusion's kernel got dramatic when filleting chained edges at sketch endpoints. Solution: apply fillets to stable edges post-extrude and don’t over-chain. If it still complains, split edges and fillet in multiple ops. (The script above dodges the fight entirely by drawing the corner arcs straight into the sketch.) Yes, it’s petty. No, I won’t apologize.

PLA Laser Behavior

Scoring must be deep enough to bend but not cut through. After iterations: 500 / full / 3 passes was the sweet spot. Your sheet, brand, and pigment will vary.
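Since the sweet spot depends on the sheet, a small test grid saves guesswork on a new filament. The sketch below only enumerates candidate settings at full power; the speed and pass values are hypothetical starting points, not results, and the "gentlest first" ordering is a rough heuristic (fewer passes and higher speed should mean less energy in the plastic).

```python
from itertools import product


def score_test_grid(speeds, passes):
    """Return (speed, passes) pairs ordered roughly from gentlest to harshest.

    Fewer passes come first; within the same pass count, faster speeds come first.
    """
    combos = product(sorted(set(passes)), sorted(set(speeds), reverse=True))
    return [(speed, n) for n, speed in combos]


# Hypothetical candidates bracketing the 500 speed / 3 passes setting
grid = score_test_grid(speeds=[400, 500, 600], passes=[1, 2, 3])
for speed, n in grid:
    print(f"speed {speed}, full power, {n} pass(es)")
```

Run the lines on a scrap plate in that order and stop at the first one that folds cleanly without cutting through.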

Disclaimer That Will Not Save Me

PLA isn’t the poster child for laser cutting. Ventilation on high, lid closed, brain on. I used scoring for bends and cutting for outlines. Don’t inhale victories or fumes.