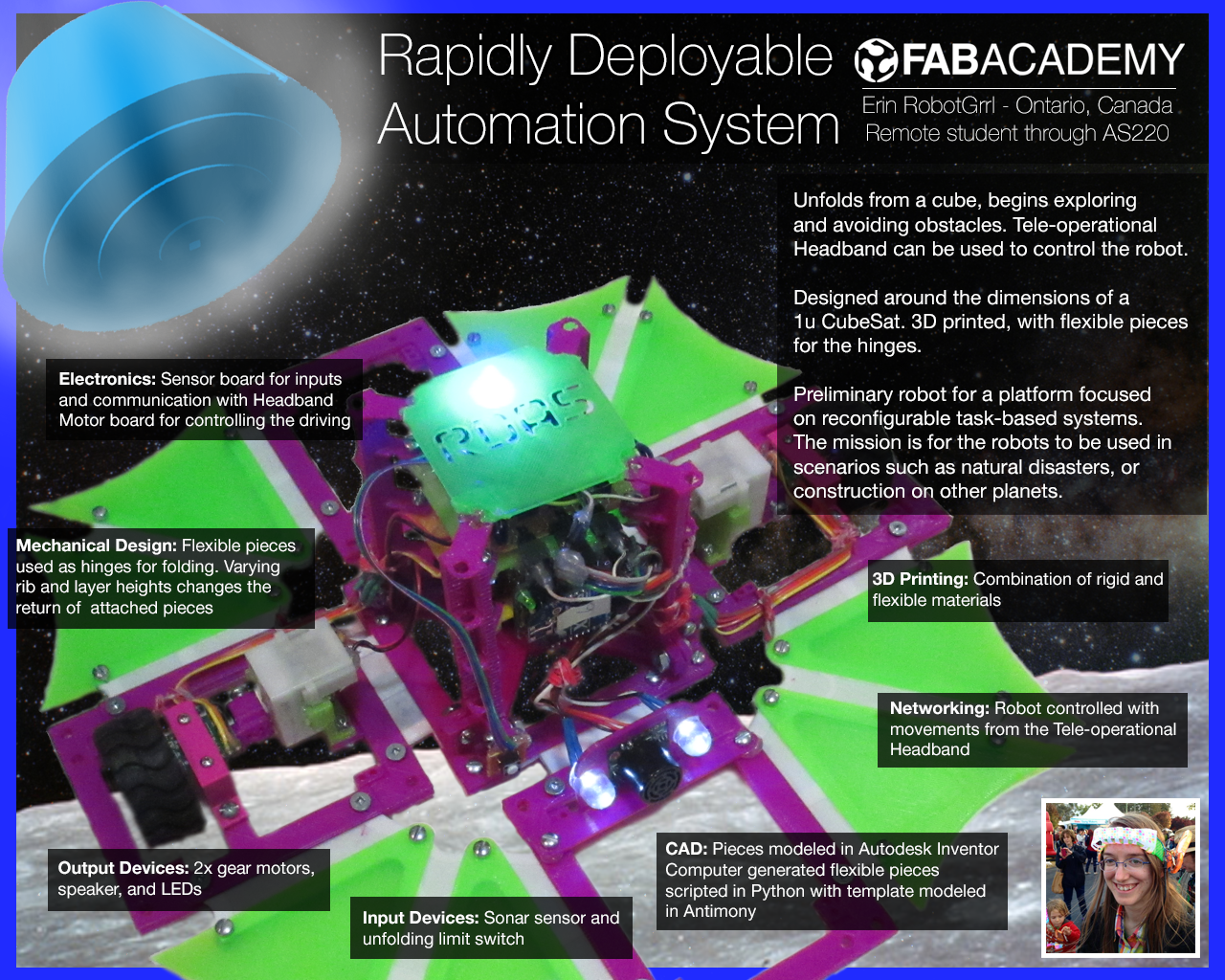

Rapidly Deployable Automation System

Overview

Clips of current robot functionality:

For my final project, the problem we are trying to tackle is how to rapidly deploy a system of automated movement. Instead of the objects around us being stationary, what if we could enable them to move around and interact with us? We could use robot modules that link together, combining their individual movements into larger, multi-step motions.

The moonshot of this idea is to eventually use it in natural disaster settings as part of humanitarian relief efforts. Imagine unpacking a backpack full of these robot modules and assembling them to perform a task like speeding up food distribution along a line, or sorting specific medical supplies so they go to one area. Maybe even something as crazy as digging out channels so that standing water flows away from temporary shelter locations.

Technical Description

First, we will build two of the robot modules at a smaller scale (10 cm). Each robot module will be attached to a hexagonal base, which will allow the modules to be joined together in lattice formations.
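One way to keep track of modules joined in a hexagonal lattice is to give each one axial coordinates (q, r). The Python sketch below is a hypothetical addressing scheme, assuming the hexagonal base just described; `NEIGHBOR_OFFSETS`, `neighbor`, `attach` and `hex_distance` are illustrative names, not part of the current design.

```python
# Hypothetical addressing scheme (an assumption, not the current design):
# each hexagonal module sits at axial coordinates (q, r) in a hex lattice.

# Neighbour offsets in axial coordinates, one per hexagon side (0-5).
# Side i of one module mates with side (i + 3) % 6 of its neighbour.
NEIGHBOR_OFFSETS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def neighbor(pos, side):
    """Return the lattice position adjacent to `pos` across `side` (0-5)."""
    dq, dr = NEIGHBOR_OFFSETS[side]
    return (pos[0] + dq, pos[1] + dr)


def attach(lattice, pos, side, module_id):
    """Place `module_id` next to the module at `pos`, on the given side.

    `lattice` maps (q, r) positions to module ids. Raises ValueError if the
    target slot is already occupied.
    """
    target = neighbor(pos, side)
    if target in lattice:
        raise ValueError(f"position {target} is already occupied")
    lattice[target] = module_id
    return target


def hex_distance(a, b):
    """Number of module-to-module hops between two lattice positions."""
    dq, dr = a[0] - b[0], a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2


# Example: start with Robot 1 at the origin and attach Robot 2 on side 0.
lattice = {(0, 0): "robot1"}
robot2_pos = attach(lattice, (0, 0), 0, "robot2")
print(robot2_pos, hex_distance((0, 0), robot2_pos))  # (1, 0) 1
```

Axial coordinates reduce neighbour lookup to a fixed table of six offsets, which lines up with the six mating faces of a hexagonal base.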

The connection between modules will carry electrical signals for sending and receiving data, plus a shared ground. Each robot will be powered individually, since each one has a different purpose and will have different power requirements.
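Since the connection carries data between neighbouring modules, the bytes need some framing so a receiver can tell where a message starts and whether it arrived intact. Here is a minimal Python sketch of one possible frame format, assuming a simple byte-oriented serial link; the start byte, field layout and checksum are hypothetical choices, not a decided protocol.

```python
# Hypothetical frame layout for the inter-module data line (an assumption):
#   START (0x7E) | SRC | DST | LEN | PAYLOAD (LEN bytes) | CHECKSUM
# CHECKSUM is the sum of the SRC, DST, LEN and PAYLOAD bytes modulo 256.

START_BYTE = 0x7E


def checksum(data: bytes) -> int:
    """Simple additive checksum over the frame body."""
    return sum(data) % 256


def encode_frame(src: int, dst: int, payload: bytes) -> bytes:
    """Wrap `payload` in a frame addressed from module `src` to module `dst`."""
    if len(payload) > 255:
        raise ValueError("payload too long for a one-byte length field")
    body = bytes([src, dst, len(payload)]) + payload
    return bytes([START_BYTE]) + body + bytes([checksum(body)])


def decode_frame(frame: bytes):
    """Return (src, dst, payload), or raise ValueError if the frame is malformed."""
    if len(frame) < 5 or frame[0] != START_BYTE:
        raise ValueError("missing start byte or frame too short")
    src, dst, length = frame[1], frame[2], frame[3]
    if len(frame) != 5 + length:
        raise ValueError("length field does not match frame size")
    body = frame[1:4 + length]
    if checksum(body) != frame[4 + length]:
        raise ValueError("checksum mismatch")
    return src, dst, frame[4:4 + length]


frame = encode_frame(src=1, dst=2, payload=b"go")
print(decode_frame(frame))  # (1, 2, b'go')
```

A real link would also need byte stuffing (or a similar escape scheme) if 0x7E can appear inside the payload, so a receiver can resynchronize after noise.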

Overall communication with the modules will go through an interface board, controlled either with buttons or with gestures.

A web app will show details about the system's behaviours, as well as each module's actions and sensor readings.
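For the web app, each module could periodically report a small status record. This Python sketch assumes a JSON format with hypothetical fields (`module_id`, `position`, `action`, `sensors`); the real fields would depend on what each robot actually does and senses.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModuleStatus:
    """Snapshot one module might report to the web app (hypothetical fields)."""
    module_id: str
    position: tuple  # lattice coordinates of the module
    action: str  # what the module is currently doing
    sensors: dict = field(default_factory=dict)  # sensor name -> latest reading


def system_snapshot(statuses):
    """Bundle per-module statuses into one JSON document for the web app."""
    return json.dumps({"modules": [asdict(s) for s in statuses]}, sort_keys=True)


snapshot = system_snapshot([
    ModuleStatus("robot1", (0, 0), "idle"),
    ModuleStatus("robot2", (1, 0), "driving", {"distance_cm": 12.5}),
])
print(snapshot)
```

Sending one combined snapshot keeps the web app simple: it only has to parse a single document to redraw both the overall system view and each module's details.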

Prior Art

Coming soon

Development Milestones

- Mechanical: octagon base design

- Robot 1

- Robot 2

- Robot controller (sensors and motors)

- Interface board (either buttons or gestures)

- Communication between robots and interface

- API

- Basic interface

- Tie it all together

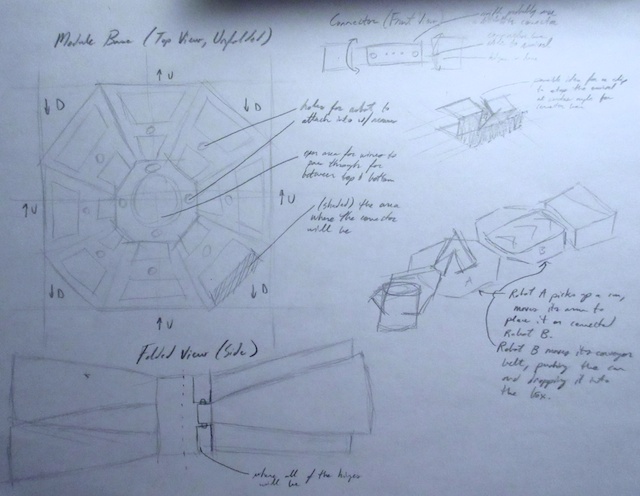

Preliminary Sketches

Shows the octagon base of the robot

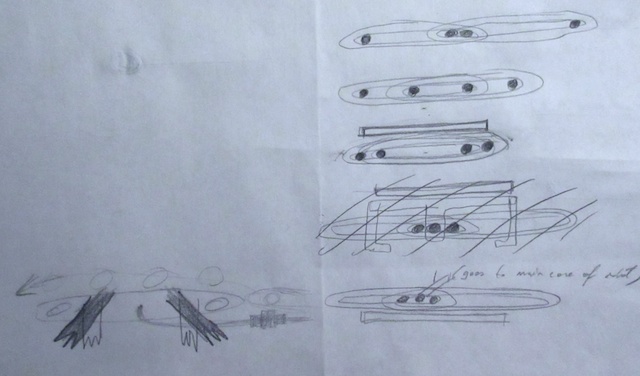

This is more of a scribble than a sketch. It’s supposed to be an expandable drive train for one of the robots. Kind of hard to draw.

Cardboard model of how the octagon folds up

Notes

There are some areas of concern with this idea that have not been entirely fleshed out yet. For example, how will the system know what to do? If there are 10^9 modules, how does the overall behaviour the user wants from the system get divided among clusters of modules, and then among the individual modules in each cluster?
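One possible, deliberately simple answer to the cluster question is a two-level split: group modules into clusters by capability, then deal each cluster's share of the work out to its members. The Python sketch below assumes a central coordinator that knows every module's capability; `form_clusters`, `assign` and the work-unit model are hypothetical. A central plan like this would not scale to 10^9 modules, where the split would more likely have to happen locally between neighbouring modules.

```python
from collections import defaultdict


def form_clusters(modules):
    """Group modules by capability. `modules` maps module id -> capability."""
    clusters = defaultdict(list)
    for module_id, capability in sorted(modules.items()):
        clusters[capability].append(module_id)
    return dict(clusters)


def assign(task, modules):
    """Split a high-level task into per-module work.

    `task` maps a required capability to a number of work units
    (e.g. {"sort": 6, "carry": 4}). Units are dealt out round-robin
    to the modules in the matching cluster.
    """
    clusters = form_clusters(modules)
    plan = defaultdict(int)
    for capability, units in task.items():
        members = clusters.get(capability)
        if not members:
            raise ValueError(f"no module can perform {capability!r}")
        for i in range(units):
            plan[members[i % len(members)]] += 1
    return dict(plan)


modules = {"m1": "sort", "m2": "sort", "m3": "carry"}
print(assign({"sort": 5, "carry": 2}, modules))  # {'m1': 3, 'm2': 2, 'm3': 2}
```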

And there are even more questions: how will the system heal itself if one module breaks? How can sensors be added to the system to make its behaviour dynamic?
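A common starting point for self-healing is failure detection with heartbeats: every module periodically announces that it is alive, and any module that goes quiet for too long is treated as broken. A minimal Python sketch, assuming modules can send heartbeat messages to some monitor; the `HeartbeatMonitor` class, the 2-second timeout and the injectable clock are illustrative choices.

```python
import time


class HeartbeatMonitor:
    """Flag modules that have not been heard from within `timeout` seconds."""

    def __init__(self, timeout=2.0, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock  # injectable so tests can use a fake clock
        self.last_seen = {}  # module id -> time of last heartbeat

    def beat(self, module_id):
        """Record a heartbeat message from `module_id`."""
        self.last_seen[module_id] = self.clock()

    def failed(self):
        """Return ids of modules whose last heartbeat is older than the timeout."""
        now = self.clock()
        return sorted(m for m, t in self.last_seen.items() if now - t > self.timeout)


now = [0.0]  # fake clock so the example is deterministic
monitor = HeartbeatMonitor(timeout=2.0, clock=lambda: now[0])
monitor.beat("robot1")
monitor.beat("robot2")
now[0] = 1.5
monitor.beat("robot2")
now[0] = 3.0
print(monitor.failed())  # ['robot1']
```

Detection is only the first half of healing: once a module is flagged, its work would still need to be handed to the remaining modules in its cluster.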