Draft ideas for the final project.

Two of my ideas grew out of earlier projects I carried out at the Institute for Advanced Architecture of Catalonia. My motivation is to take them one step further and lift them from research projects toward potentially becoming tangible products.

Idea 1. Augmented reality device

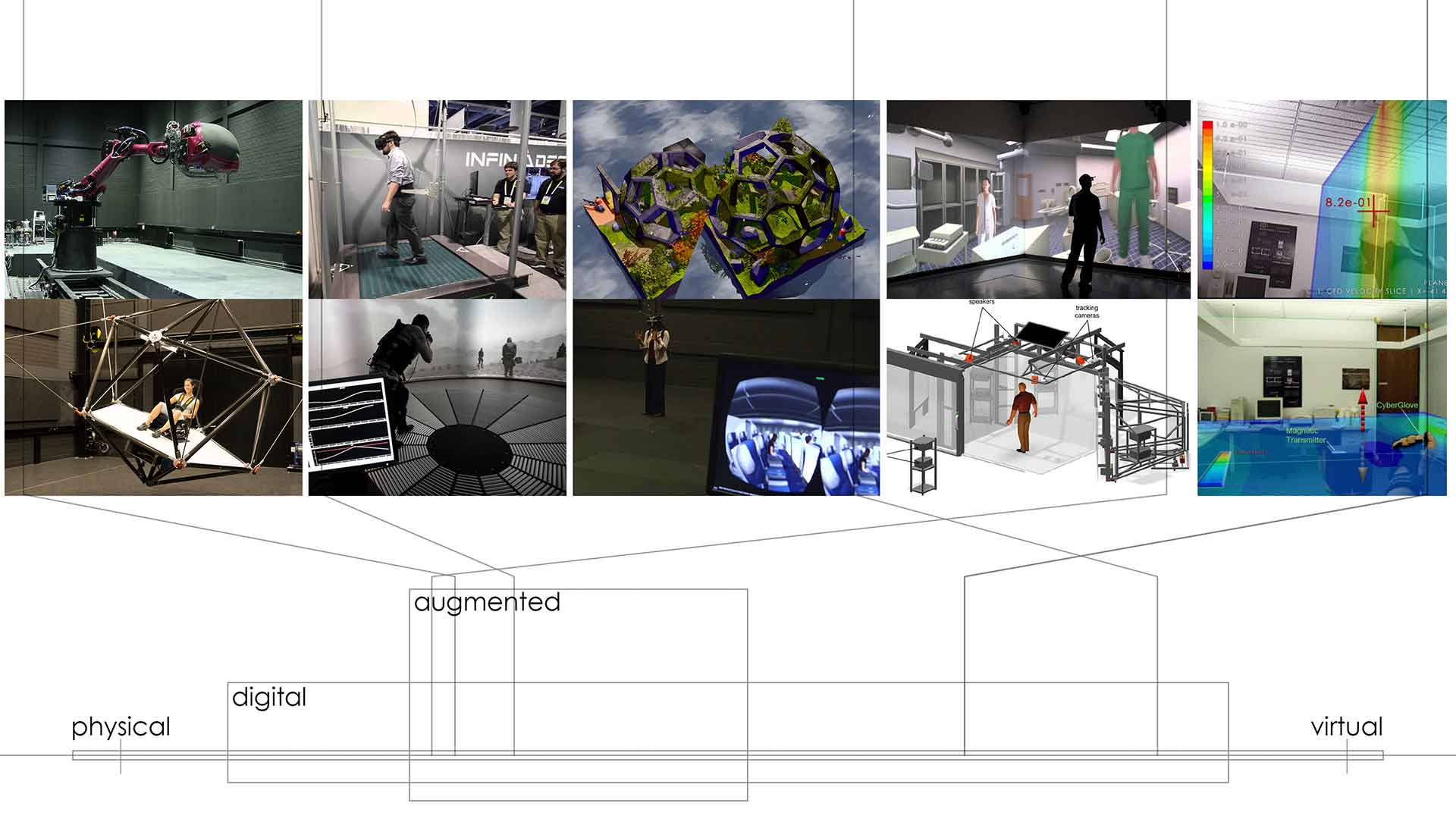

– idea 1: AR device: Mixed Reality spectrum by Paul Milgram –

My thesis project focused on creating a mixed reality platform for interior spaces, to increase the saturation of the virtual layers of a space. Mixed reality extends our notion of space and time into a digitally integrated one. It spans a spectrum with many variations between two extremes, the purely physical and the purely virtual. A purely physical reality would contain no additional meaning and be unaffected by humans. A purely virtual reality, equally unattainable, would be a system where no sensations come from physical reality (as in The Matrix). Augmented reality is a blend of the two: digital information is added on top of the physical world without trying to replace it.

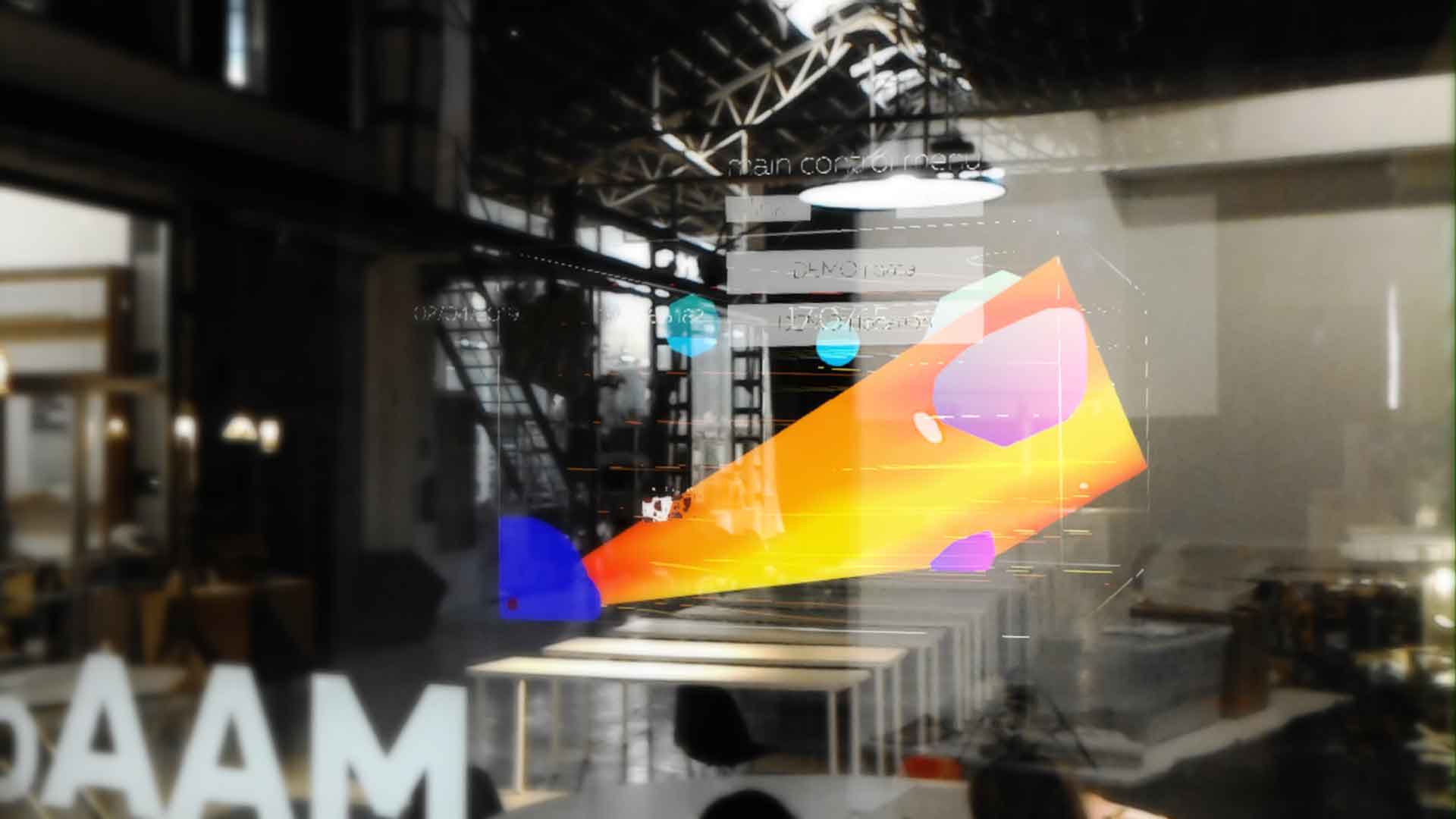

– idea 1: AR device: previous work: Intraspace: interior spatial data interface –

As a proposal for a project I would love to try creating a mixed reality environment myself. The project would consist of:

- a semi-transparent device with some spatial awareness and the capacity to interact with the user and the surrounding environment

- a constellation of sensors measuring environmental properties such as temperature and wireless signal strength, with network communication capability

Depending on technical feasibility, I would then shift the focus to either side of the environment: the device itself, or the cluster of sensors and their integration.

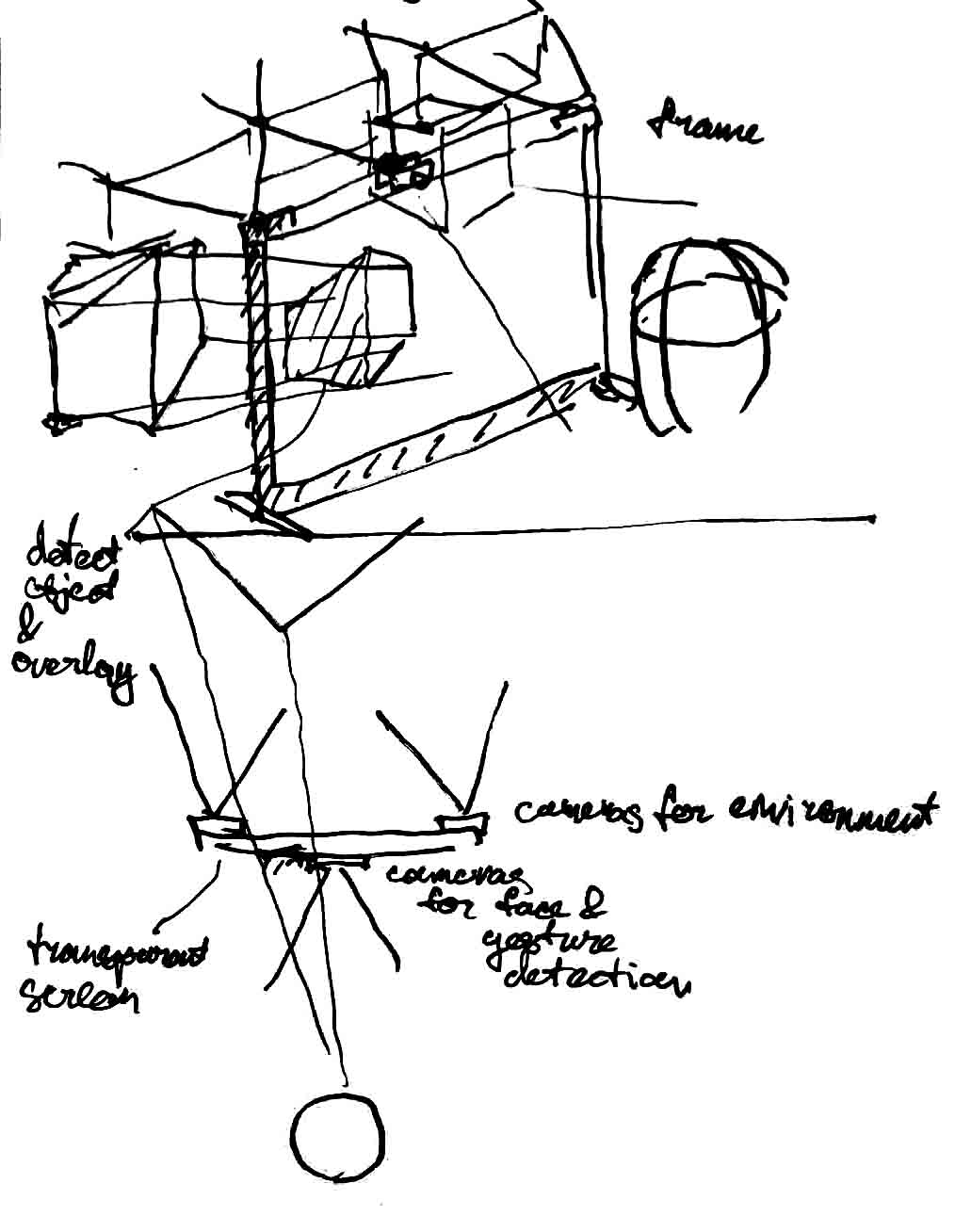

For the device itself I imagine two possible branches of development, one of which would be selected later:

- (modify and) fabricate a headset based on an open-source design;

- develop a standalone screen with two cameras: one tracking the user's position and possibly gaze direction, and a second to enable spatial awareness.

– idea 1: AR device: sketch –

References:

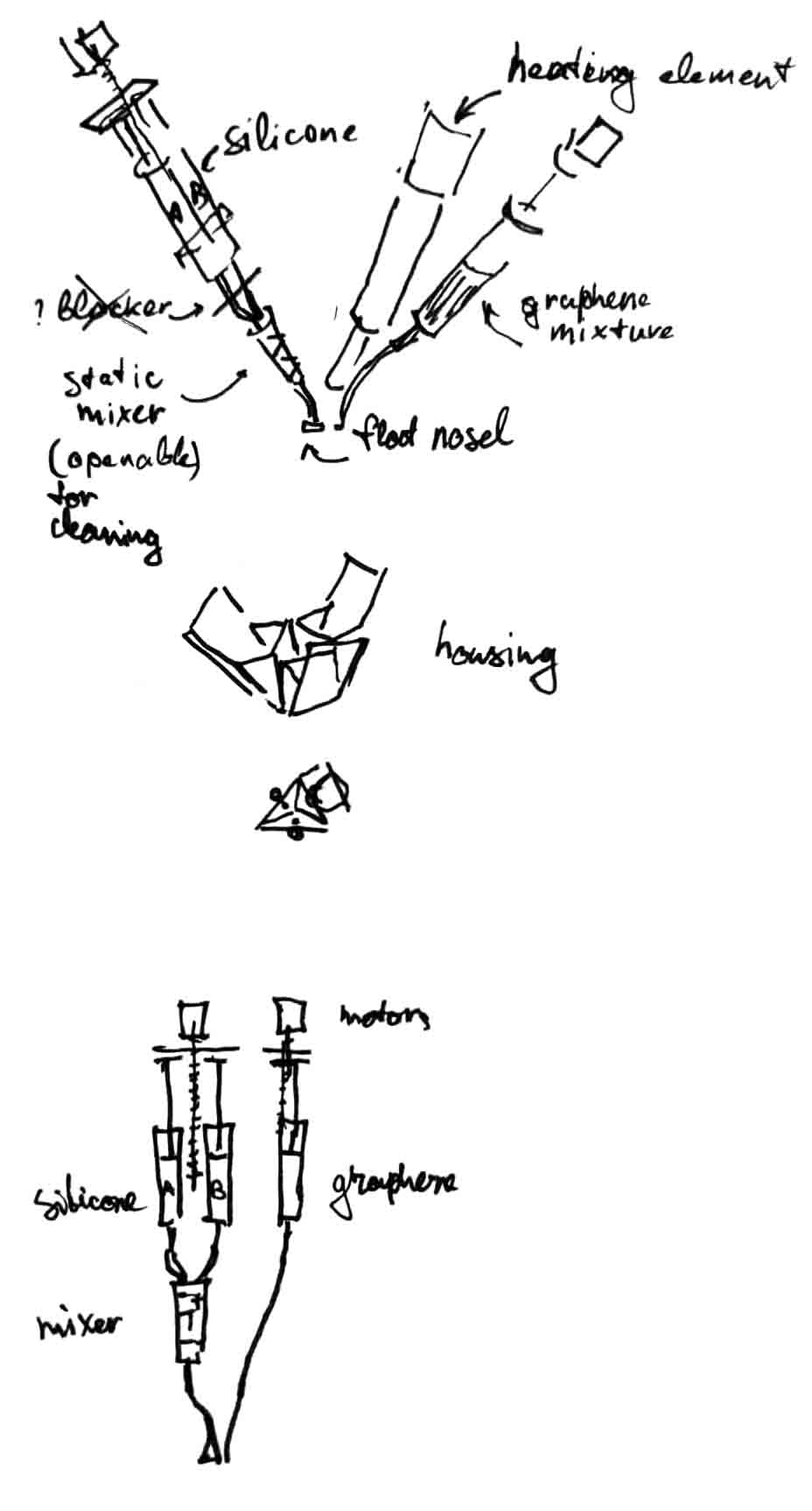

Idea 2. Multitool extruder for graphene membrane

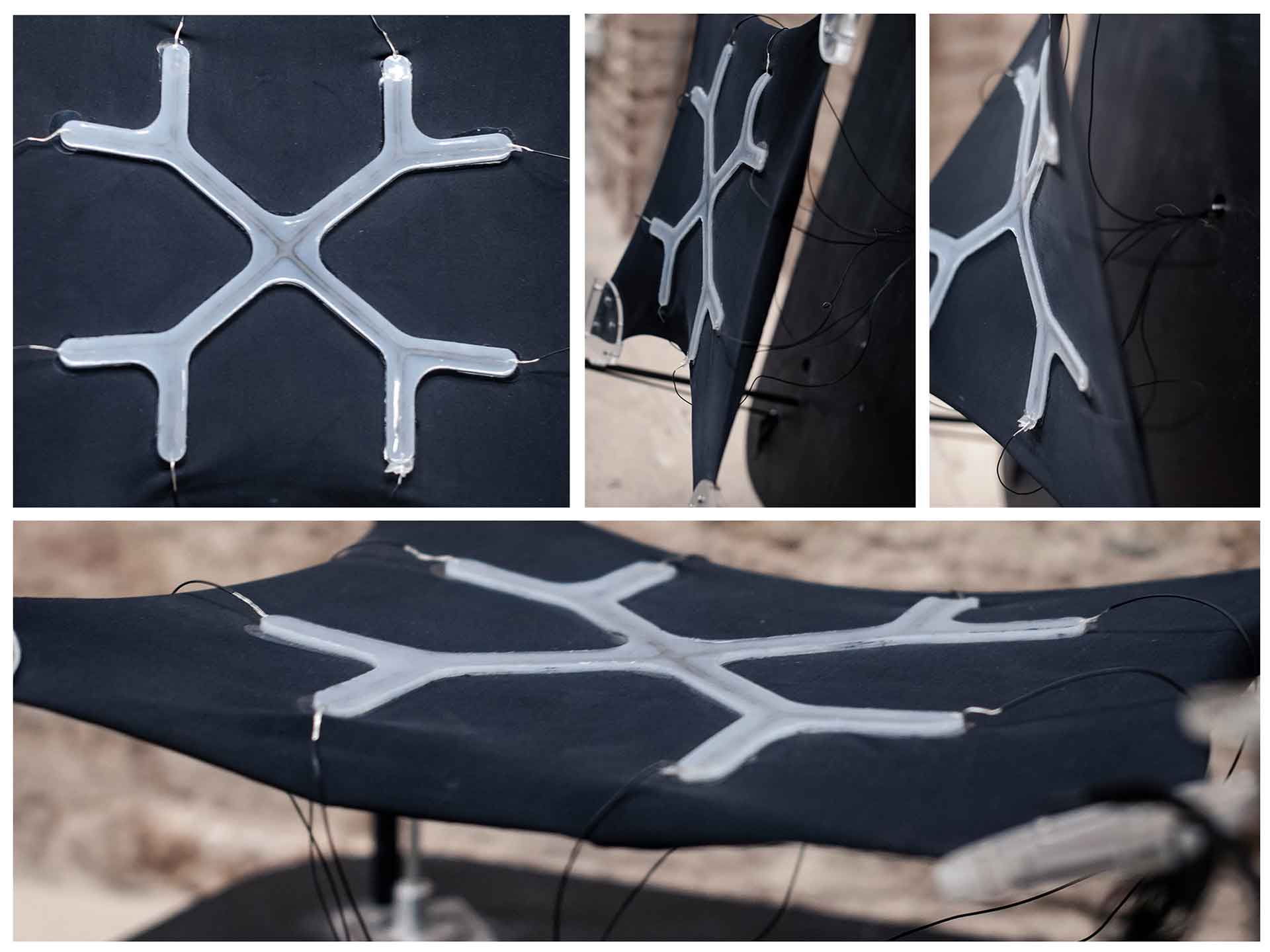

– idea 2: printer head: previous work: Alive - smart membrane –

The project was developed here at IAAC, in the Digital Matter Studio. It is a flexible and stretchable membrane system capable of detecting pre-trained deformations. As a test we successfully developed a prototype of about 30 by 40 cm that detects single-point pressure and its extent. The membrane is multilayered: it consists of a base material (fabric) and a conductive pattern layer (graphene nanoparticles) encapsulated in silicone.

To train the membrane to detect a certain type of deformation, we designed the pattern parametrically to both minimize material use and maximize the sensitivity of the material. However, producing these highly customized patterns required single-use molds.

The focus of that project was to establish the feasibility of the concept. The next step would be robotically fabricated custom liquid patterns.

For this reason I want to develop a tool for the robot that mixes the two components of silicone, deposits the mix at variable speed, and deposits an ink of graphene mixed with water, in four passes.

– idea 2: printer head: sketch –

Idea 3. Unity Controller Panel

Many of the projects I work on center around Unity, a development platform and game engine. Its power is the ability to mix external assets, ranging from images to 3D models, with scripting in C#. A problem that often comes up is that integrating controllers or physical inputs requires a complicated setup and depends heavily on the target platform the project is deployed to. Moreover, input devices usually come with complex Software Development Kits. Especially in testing phases this is overkill.

The idea of the project is to package simple inputs and outputs into a self-contained device that is synchronized with the main development environment. It would be connected wirelessly to enable more fluid interaction with the system. An analog of the device, in a sense, is the controller of a robotic arm, where one can review and select the G-code to be executed: one configures the device and the job at hand.

– idea 3: controller: sketch –

Project Selection

To select the project that best matches the course of FabAcademy, I decided to break down the possible tasks according to the schedule and thus see with which project I would learn the most and best apply the skills.

Comparing the ideas, the device of idea 3 has the most direct application for me at the moment, across multiple projects. Evaluated in all its aspects, the idea would be as follows.

The device connects to a Unity deployment over WiFi, probably using UDP. It would have certain physical inputs, to be defined iteratively: starting with a vinyl-cut touchpad, then adding buttons, potentially a camera, etc. These inputs are meant to be bound to values in the project, providing simple physical controls for digital projects.
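To make the input side a bit more concrete, here is a minimal sketch in Python of how one control reading could travel as a UDP datagram. Everything specific in it is an assumption for illustration: the JSON message layout, the field names `name` and `value`, the control name `touchpad_x`, and the helper names are hypothetical. The receiver stands in for the Unity side and runs on localhost only to show the round trip.

```python
import json
import socket
from typing import Tuple


def encode_input(name: str, value: float) -> bytes:
    """Pack one control reading into a small JSON datagram (assumed format)."""
    return json.dumps({"name": name, "value": value}).encode("utf-8")


def decode_input(datagram: bytes) -> Tuple[str, float]:
    """Unpack a datagram produced by encode_input."""
    message = json.loads(datagram.decode("utf-8"))
    return message["name"], float(message["value"])


def send_over_loopback(name: str, value: float) -> Tuple[str, float]:
    """Send one reading over UDP on localhost and return what the receiver decoded."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2.0)         # UDP gives no delivery guarantee
        sender.sendto(encode_input(name, value), receiver.getsockname())
        datagram, _address = receiver.recvfrom(1024)
        return decode_input(datagram)
    finally:
        sender.close()
        receiver.close()


if __name__ == "__main__":
    print(send_over_loopback("touchpad_x", 0.42))
```

UDP keeps the device side simple because there is no connection to manage, at the cost of possibly losing a datagram. For continuously updated control values that is usually acceptable, since the next reading replaces the lost one.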

Additionally, the device would have an output system for communication in the other direction, letting you see information from the project. This would also be handy for debugging. I would define this aspect iteratively as well, starting with an LCD screen and then adding status LEDs.
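As a rough illustration of the output side, the sketch below (in Python) shows how a status text coming from the project could be prepared for a small character display and mapped to a status LED. The 16x2 display size, the status names, the LED colors, and the function names are all assumptions made for this example; the actual hardware is not yet defined.

```python
def format_for_lcd(text, columns=16, rows=2):
    """Split text into `rows` lines of exactly `columns` characters.

    Short lines are padded with spaces; text beyond the display is dropped.
    """
    lines = []
    for row in range(rows):
        chunk = text[row * columns:(row + 1) * columns]
        lines.append(chunk.ljust(columns))
    return lines


# Hypothetical mapping from a project status to an LED color.
LED_FOR_STATUS = {"ok": "green", "warning": "yellow", "error": "red"}


def led_for(status):
    """Return the LED color for a status, or "off" for an unknown status."""
    return LED_FOR_STATUS.get(status, "off")


if __name__ == "__main__":
    for line in format_for_lcd("Scene loaded, waiting for input"):
        print("|" + line + "|")
    print(led_for("ok"))
```

Padding every line to the full width means each update overwrites whatever the display showed before, so no separate clear step is needed.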

The body of the device can use 3D printing for complex sides, laser cutting for simple faces, and potentially casting for closing elements on the sides.

Given all this, the project seems like a good opportunity to use all the technologies and ideas learned in FabAcademy. The selected project is going to be based on idea 3.