04 - Embedded Programming for Microcontrollers

Group Assignment: Toolchains and Workflows for Embedded Programming of Microcontrollers

In this week’s group assignment we looked at how to program a variety of microcontrollers, from the USB-compatible ESP32 and RP2040 to the SAMD11 and ATTINYs, which need a separate programmer to flash the microcontroller. The group assignment can be found at the following link: https://academany.fabcloud.io/fabacademy/2025/labs/barcelona/students/group-assignments/week4.html

Key takeaways from the week:

- The choice of microcontroller determines the setup and the code.

- There is a cost/benefit trade-off once production volumes become significant. The ESP32 and XIAO are much more expensive and can be overkill when the microcontroller only has to do one particular job.

- MicroPython can be used on Raspberry Pi and ESP32 boards. Rust can also be used but is very unforgiving, and C/C++ tends to be the most widely used across microcontrollers. Arduino IDE 2.3.4 is a great tool for uploading programs to microcontrollers.

- Datasheets are your best friend!

Different types of microcontrollers:

USB compatible out of the box:

- XIAO

- RP2040

- ESP32-S3

- Barduino: 80 mb. Barduino 1 was developed for Fab Academy. Barduino 2 was developed around COVID with more capability, as the students couldn’t come into class. v2.4 used a SAMD for programming. After several iterations, v3.0 uses an ESP32-S3 with USB capabilities to allow easy programming for IAAC students across multiple disciplines.

Microcontrollers that need to be flashed using a programmer:

- SAMD11

- ATTINY

| | XIAO/RP2040 | ESP32S3 | SAMD | ATTINY |
|---|---|---|---|---|
| Voltage (V) | 3.3 | 3.3 | 1.8-3.3 | 1.8-5 |
| Versions | | | 11, 21 | 412, 1614, 3216 |

The specific workflows for some of these MCUs are given in the group assignment.

Individual assignment: Make the Barduino sing

As mentioned previously, the Barduino was created to allow easy access to embedded programming. The documentation for the Barduino 4.0.2 can be found here: https://fablabbcn-projects.gitlab.io/electronics/barduino-docs/

The documentation is important because it tells the programmer the specific purpose and numbering of each pin. These are some of the outputs available on the board, each using a different pin:

- LED: Connected to GPIO48.

- Buzzer: Connected to GPIO46.

- Neopixel: Connected to GPIO38.

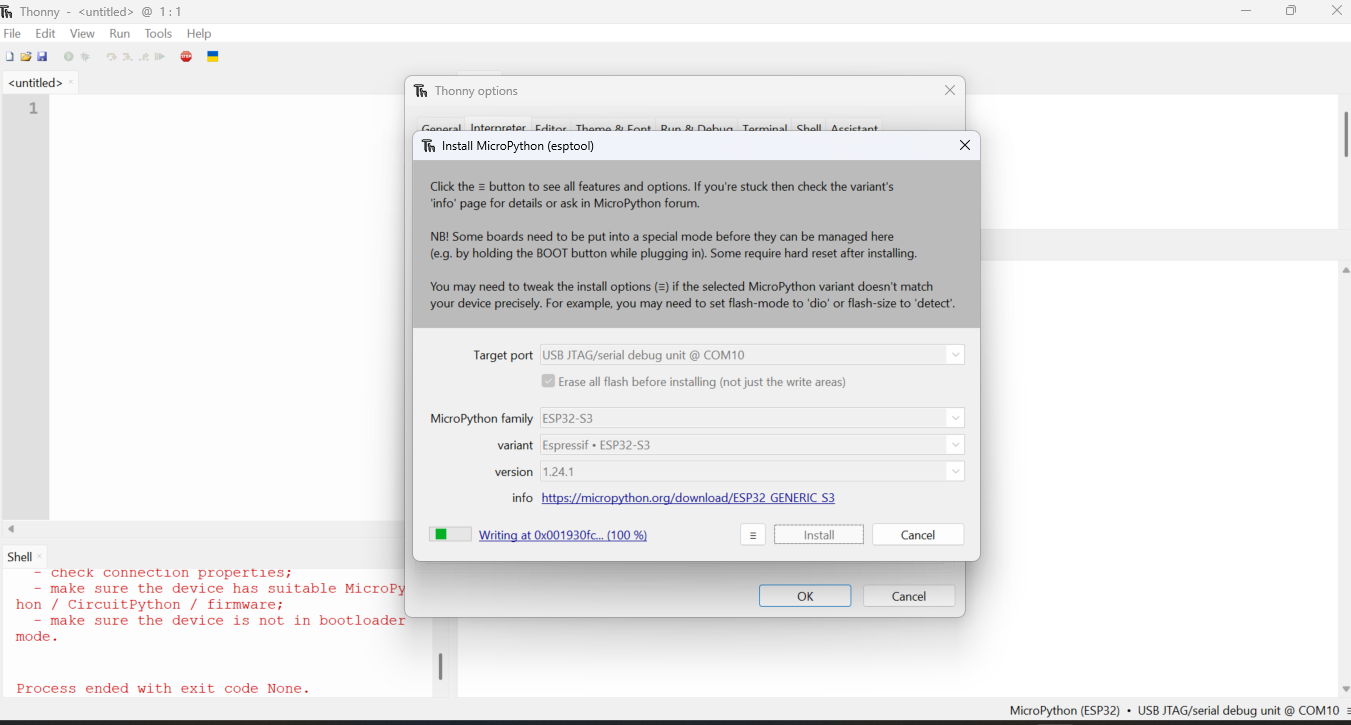

Installing MicroPython on the Barduino with Thonny

Select: Tools -> Options -> Interpreter -> Port

Select the target port where the Barduino is connected.

Click on the “Install or update MicroPython (esptool)(UF2)” hyperlink in the bottom corner of the Tools/Options/Interpreter window.

Select the following options and install:

| Option | Value |
|---|---|
| Target port | COM10 (or whichever port the Barduino is connected to) |
| MicroPython family | ESP32-S3 |
| Variant | Espressif ESP32-S3 |
| Version | 1.24.1 |

For the assignment I’m going to record a little melody on my phone and convert it into notes to play on the Barduino.

I recorded an incredibly simple and rough melody with my voice on my phone. It was saved in m4a format, so I first converted it to an mp3 file using https://www.zamzar.com/. Next I compressed the file using https://www.freeconvert.com/mp3-compressor. Here is my lovely vocal file:

Next up I installed the librosa Python library, which allows the user to convert mp3 audio to MIDI notes.

```
pip install librosa
```

I started off with the example in the librosa tutorial and asked CursorAI to modify it using the gpt-4o-mini model:

Which is the best way to extract sound from my mp3 file to use in the C++ beat_bard ?

```python
import librosa
import numpy as np

# 1. Load the audio file
filename = './tune.mp3'  # Update this line with the correct path to your audio file

# 2. Load the audio as a waveform `y`
# Store the sampling rate as `sr`
y, sr = librosa.load(filename)

# 3. Run the default beat tracker
tempo, beat_frames = librosa.beat.beat_track(y=y, sr=sr)

# Ensure tempo is a single float value
if isinstance(tempo, np.ndarray):
    tempo = tempo[0]  # Get the first element if it's an array

print('Estimated tempo: {:.2f} beats per minute'.format(tempo))

# 4. Convert the frame indices of beat events into timestamps
beat_times = librosa.frames_to_time(beat_frames, sr=sr)

# 5. Extract pitches from the audio
pitches, magnitudes = librosa.piptrack(y=y, sr=sr)

# 6. Convert pitches to MIDI notes
midi_notes = []
for t in range(pitches.shape[1]):
    index = magnitudes[:, t].argmax()  # Get the index of the max magnitude
    pitch = pitches[index, t]
    if pitch > 0:  # Only consider positive pitches
        midi_note = int(librosa.hz_to_midi(pitch))
        midi_notes.append(midi_note)

print('Extracted MIDI notes:', midi_notes)

# 7. Calculate note durations based on beat times
note_durations = []
for i in range(1, len(beat_times)):
    duration = (beat_times[i] - beat_times[i - 1]) * 1000  # Convert to milliseconds
    note_durations.append(int(duration))

print('Extracted Note Durations:', note_durations)

# 8. Save the extracted data to a file
with open('extracted_notes_librosa.txt', 'w') as f:
    f.write('MIDI Notes:\n')
    f.write(', '.join(map(str, midi_notes)) + '\n')
    f.write('Note Durations (ms):\n')
    f.write(', '.join(map(str, note_durations)) + '\n')

print('Data saved to extracted_notes_librosa.txt')
```

Explanation of the Code

- Loading the Audio: The audio file is loaded using librosa.load().

- Beat Tracking: The librosa.beat.beat_track() function detects the beats in the audio.

- Pitch Extraction: The librosa.piptrack() function extracts the pitches from the audio.

- MIDI Conversion: The extracted pitches are converted to MIDI notes.

- Duration Calculation: The durations of the notes are calculated based on the timestamps of the detected beats.

- Saving Results: The MIDI notes and their durations are saved to extracted_notes_librosa.txt.
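To make the MIDI conversion step concrete, here is a small sketch of the arithmetic. It assumes the standard relation n = 69 + 12·log2(f / 440); the helper `hz_to_midi_float` and the 439 Hz test value are hypothetical, written for illustration, and are not librosa's own code. It also shows that wrapping the result in `int()`, as the script does, truncates rather than rounds, so a slightly flat sung note can land one semitone low.

```python
import math


def hz_to_midi_float(frequency_hz):
    """Fractional MIDI note number for a frequency in Hz (A4 = 440 Hz = note 69)."""
    return 69 + 12 * math.log2(frequency_hz / 440.0)


# A slightly flat A4, as a sung note might be (illustrative value only)
flat_a4 = 439.0
fractional = hz_to_midi_float(flat_a4)

truncated = int(fractional)   # same effect as int(librosa.hz_to_midi(pitch))
rounded = round(fractional)   # nearest semitone instead

print(f"{flat_a4} Hz -> {fractional:.2f}, int: {truncated}, round: {rounded}")
```

If the extracted melody sounds flat in places, swapping `int()` for `round()` in the script might be worth trying.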

Running the Code

1. Run the Script: Execute the script to extract the MIDI notes and note durations, and save them to extracted_notes_librosa.txt.

2. Check the Output File: Open extracted_notes_librosa.txt to verify that the data has been saved correctly.

Updating Your C++ Code

Once you have the extracted notes and durations, you can update your beat_bard C++ code with the new values. Make sure to convert the MIDI notes to frequencies using the formula:

$$\text{Frequency} = 440 \times 2^{(n - 69)/12}$$
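As a quick check of this formula, the sketch below evaluates it for a few notes, where n is the MIDI note number and the result is in Hz. The function name `midi_to_frequency` is chosen here for illustration.

```python
def midi_to_frequency(midi_note):
    """Frequency in Hz of a MIDI note number, with A4 (note 69) at 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


# Note 69 is A4, 81 is one octave up, 66 is three semitones below A4
for note in (69, 81, 66):
    print(note, round(midi_to_frequency(note), 2))
```

Every 12 semitones doubles the frequency, which is why note 81 comes out at twice the frequency of note 69.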

This produced a large number of MIDI notes and only a small number of note durations. The two didn’t match up, so I asked for a further improvement:

why are there so many midi notes and only 9 note durations. it doesnt match up to the mp3

The mismatch between the number of MIDI notes and the number of note durations is likely due to how the notes were extracted and how the durations were calculated. In music, especially in a piece with varying rhythms, it's common for multiple notes to share the same duration or for some notes to be held longer than others, which can lead to fewer unique durations than notes.

Understanding the Extraction Process

1. MIDI Notes Extraction: The MIDI notes are extracted based on the pitches detected in the audio. If the audio has many notes played in quick succession, the extraction process may capture all of them, resulting in a long list of MIDI notes.

2. Beat Tracking and Duration Calculation: The note durations are calculated based on the detected beats. If the beat tracking does not align perfectly with the notes (for example, if there are many notes played between beats), you may end up with fewer durations than notes.
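A rough back-of-the-envelope calculation shows how large the mismatch can be. The sketch below assumes librosa's default sampling rate (22050 Hz) and hop length (512 samples); the 5-second clip length and 120 BPM tempo are hypothetical values picked for illustration, not measurements from the recording.

```python
# Assumed values: librosa defaults plus an illustrative clip length and tempo
sample_rate = 22050    # librosa.load default sampling rate (Hz)
hop_length = 512       # default hop between analysis frames (samples)
clip_seconds = 5.0     # hypothetical recording length
tempo_bpm = 120.0      # hypothetical detected tempo

# piptrack returns one column per analysis frame, so the first script
# can emit up to one MIDI note per frame
n_frames = 1 + int(clip_seconds * sample_rate) // hop_length

# beat_track returns roughly one beat every 60 / tempo seconds, and the
# durations are the gaps between consecutive beats
n_beats = int(clip_seconds * tempo_bpm / 60)
n_beat_intervals = n_beats - 1

print(f"pitch frames: {n_frames}, beat intervals: {n_beat_intervals}")
```

Under these assumptions there are a couple of hundred pitch frames but only a handful of beat intervals, which is the same kind of imbalance the script produced.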

Steps to Align Notes and Durations

To ensure that the MIDI notes and their durations match up correctly, you can take the following steps:

1. Recalculate Note Durations: Instead of relying solely on beat tracking, you can calculate the duration of each note based on the time between pitch detections. This will give you a more accurate representation of how long each note is held.

2. Use a More Detailed Pitch Tracking Method: You can use a method that tracks pitch changes more closely, allowing you to capture the start and end times of each note.

Example Code to Improve Duration Calculation

Here’s an updated version of the librosa_beat.py script that calculates note durations based on pitch changes:

```python
import librosa
import numpy as np

# Load the audio file
filename = './tune.mp3'  # Update this line with the correct path to your audio file
y, sr = librosa.load(filename)

# Extract pitches from the audio
pitches, magnitudes = librosa.piptrack(y=y, sr=sr)

# Initialize lists for MIDI notes and their durations
midi_notes = []
note_durations = []

# Iterate through the pitch frames
for t in range(pitches.shape[1]):
    index = magnitudes[:, t].argmax()  # Get the index of the max magnitude
    pitch = pitches[index, t]
    if pitch > 0:  # Only consider positive pitches
        midi_note = int(librosa.hz_to_midi(pitch))
        midi_notes.append(midi_note)

        # Calculate duration based on the next pitch frame
        if t < pitches.shape[1] - 1:
            next_index = magnitudes[:, t + 1].argmax()
            next_pitch = pitches[next_index, t + 1]
            if next_pitch > 0:
                duration = (1 / sr) * (t + 1 - t) * 1000  # Convert to milliseconds
                note_durations.append(int(duration))

# Print results
print('Extracted MIDI notes:', midi_notes)
print('Extracted Note Durations:', note_durations)

# Save the extracted data to a file
with open('extracted_notes_librosa.txt', 'w') as f:
    f.write('MIDI Notes:\n')
    f.write(', '.join(map(str, midi_notes)) + '\n')
    f.write('Note Durations (ms):\n')
    f.write(', '.join(map(str, note_durations)) + '\n')

print('Data saved to extracted_notes_librosa.txt')
```

it's giving durations as 0. Is there a better way to extract the sound data for the c++ barduino

If the durations are being calculated as zero, it indicates that the method used to determine the duration of each note is not capturing the timing correctly. This can happen if the pitch detection is not aligned with the actual note events in the audio.
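The zeros can also be traced to the arithmetic in the script itself. The sketch below reproduces the `(1 / sr) * (t + 1 - t) * 1000` expression with librosa's default sampling rate and compares it with the length of one analysis frame; the hop length of 512 samples is librosa's default and is assumed here, since the script does not set it.

```python
sample_rate = 22050   # librosa.load default sampling rate (Hz)
hop_length = 512      # librosa's default hop between frames (samples), assumed

# What the script computes: the period of a single *sample*, in milliseconds
per_sample_ms = (1 / sample_rate) * 1 * 1000

# What one step between consecutive *frames* actually lasts
per_frame_ms = hop_length / sample_rate * 1000

# int() truncates the sub-millisecond value to 0
print(int(per_sample_ms), round(per_frame_ms, 1))
```

Even with the frame length corrected, each entry would be one frame long rather than one note long, so a note-level method is still needed.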

Alternative Approach to Extract Sound Data

Instead of relying solely on pitch tracking, you can use a combination of beat tracking and onset detection to get a more accurate representation of the notes and their durations. Here’s how you can do it:

1. Use Onset Detection: This will help you identify when a note starts.

2. Calculate Durations Based on Onsets: By detecting the onset of each note, you can calculate how long each note is held until the next onset.
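The duration step on its own is simple enough to sketch without librosa. The function name `durations_from_onsets`, the default of 500 ms for the last note, and the onset times below are all illustrative assumptions, not values from the recording.

```python
def durations_from_onsets(onset_times_s, last_note_ms=500):
    """Milliseconds from each onset to the next; the final note gets a default."""
    durations = []
    for current, following in zip(onset_times_s, onset_times_s[1:]):
        durations.append(int((following - current) * 1000))
    if onset_times_s:
        # There is no later onset to measure against, so fall back to a default
        durations.append(last_note_ms)
    return durations


# Hypothetical onset times in seconds
example_onsets = [0.0, 0.25, 0.75, 1.0]
print(durations_from_onsets(example_onsets))
```

This yields exactly one duration per onset, which keeps the notes and durations lists the same length.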

Updated Code Using Onset Detection

Here’s an updated version of the librosa_beat.py script that uses onset detection to extract notes and their durations:

```python
import librosa
import numpy as np

# Load the audio file
filename = './tune.mp3'  # Update this line with the correct path to your audio file
y, sr = librosa.load(filename)

# Use onset detection to find note starts
onset_frames = librosa.onset.onset_detect(y=y, sr=sr, units='frames')
onset_times = librosa.frames_to_time(onset_frames, sr=sr)

# Extract pitches from the audio
pitches, magnitudes = librosa.piptrack(y=y, sr=sr)

# Initialize lists for MIDI notes and their durations
midi_notes = []
note_durations = []

# Iterate through the onset frames to get MIDI notes and their durations
for i in range(len(onset_frames)):
    frame = onset_frames[i]
    index = magnitudes[:, frame].argmax()  # Get the index of the max magnitude
    pitch = pitches[index, frame]
    if pitch > 0:  # Only consider positive pitches
        midi_note = int(librosa.hz_to_midi(pitch))
        midi_notes.append(midi_note)

        # Calculate duration based on the next onset
        if i < len(onset_times) - 1:
            duration = (onset_times[i + 1] - onset_times[i]) * 1000  # Convert to milliseconds
            note_durations.append(int(duration))
        else:
            # If it's the last note, you can set a default duration or calculate it differently
            note_durations.append(500)  # Default duration for the last note

# Print results
print('Extracted MIDI notes:', midi_notes)
print('Extracted Note Durations:', note_durations)

# Save the extracted data to a file
with open('extracted_notes_librosa.txt', 'w') as f:
    f.write('MIDI Notes:\n')
    f.write(', '.join(map(str, midi_notes)) + '\n')
    f.write('Note Durations (ms):\n')
    f.write(', '.join(map(str, note_durations)) + '\n')

print('Data saved to extracted_notes_librosa.txt')
```

This results in a nice simple collection of notes and note durations:

MIDI Notes:

66, 67, 66, 69, 67, 61, 65, 66, 69, 68, 68, 67, 67, 68, 71

Note Durations (ms):

255, 301, 232, 417, 325, 441, 208, 325, 208, 464, 743, 232, 301, 696, 500
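To sanity-check the result against what was sung, it can help to read the MIDI numbers as note names. This is a minimal sketch using the common convention that MIDI note 60 is C4; the helper `midi_to_name` is written for illustration and is not part of librosa.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_name(midi_note):
    """Note name with octave, using the convention that MIDI 60 is C4."""
    octave = midi_note // 12 - 1
    return f"{NOTE_NAMES[midi_note % 12]}{octave}"


# The MIDI notes extracted above
midi_notes = [66, 67, 66, 69, 67, 61, 65, 66, 69, 68, 68, 67, 67, 68, 71]
print(" ".join(midi_to_name(n) for n in midi_notes))
```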

I also tried to use the aubio library, but I ran into problems with the numpy package and the Python.h file, most likely because I am using a more recent version of Python (3.10). I had already wasted hours trying to fix a similar problem, so I gave up after 30 minutes and stuck with the librosa setup.

Once I had the MIDI notes and durations, I added them to a C++ file that I uploaded to the Barduino using the Arduino IDE.

I defined the pin numbers in a C++ file, librosa_beat_bard, in the Arduino IDE, added the MIDI notes and note durations, and asked CursorAI to complete the C++ code so that the LED and buzzer blink and sing along to the notes.

To update your librosa_beat_bard C++ code with the extracted MIDI notes and their durations from extracted_notes_librosa.txt, you will need to follow these steps:

1. Read the Extracted Data: Copy the MIDI notes and their corresponding durations from the extracted_notes_librosa.txt file.

2. Update the C++ Code: Replace the existing melody and noteDurations arrays in your librosa_beat_bard code with the new values.
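Copying the values by hand works for a short tune, but the two steps could also be scripted. The sketch below parses text in the same layout as extracted_notes_librosa.txt and prints C++ array declarations ready to paste; the helper names, the `midiNotes` array name and the shortened sample text are assumptions made for illustration.

```python
def parse_notes_file(text):
    """Return (midi_notes, durations_ms) from the two-section text layout."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    notes_line = lines[lines.index("MIDI Notes:") + 1]
    durations_line = lines[lines.index("Note Durations (ms):") + 1]
    notes = [int(value) for value in notes_line.split(",")]
    durations = [int(value) for value in durations_line.split(",")]
    return notes, durations


def to_cpp_array(name, values):
    """Format a list of ints as a C++ int array declaration."""
    body = ", ".join(str(value) for value in values)
    return "int " + name + "[] = {" + body + "};"


# Shortened sample of the file contents, for illustration only
sample = "MIDI Notes:\n66, 67, 66\nNote Durations (ms):\n255, 301, 232\n"
notes, durations = parse_notes_file(sample)

# Each note needs a matching duration, otherwise playback reads past the array
assert len(notes) == len(durations)

print(to_cpp_array("midiNotes", notes))
print(to_cpp_array("noteDurations", durations))
```

To use it on the real file, the sample string could be replaced with the contents read from extracted_notes_librosa.txt.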

Extracted Data from extracted_notes_librosa.txt

Based on the content you provided, here are the extracted MIDI notes and their durations:

MIDI Notes:

66, 67, 66, 69, 67, 61, 65, 66, 69, 68, 68, 67, 67, 68, 71

Note Durations (ms):

255, 301, 232, 417, 325, 441, 208, 325, 208, 464, 743, 232, 301, 696, 500

```cpp
// Define pin numbers
const int ledPin = 48;     // LED connected to GPIO48
const int buzzerPin = 46;  // Buzzer connected to GPIO46

// Function to convert MIDI note to frequency
float midiToFrequency(int midiNote) {
  return 440.0 * pow(2.0, (midiNote - 69) / 12.0);
}

// Define the melody notes (Hz) - converted from MIDI notes
float melody[] = {
  midiToFrequency(66), midiToFrequency(67), midiToFrequency(66), midiToFrequency(69),
  midiToFrequency(67), midiToFrequency(61), midiToFrequency(65), midiToFrequency(66),
  midiToFrequency(69), midiToFrequency(68), midiToFrequency(68), midiToFrequency(67),
  midiToFrequency(67), midiToFrequency(68), midiToFrequency(71)
};

// Define note durations (milliseconds) - extracted from your file
int noteDurations[] = {
  255, 301, 232, 417, 325, 441, 208, 325, 208, 464, 743, 232, 301, 696, 500
};

void playMelody() {
  for (int i = 0; i < sizeof(melody) / sizeof(melody[0]); i++) {
    tone(buzzerPin, melody[i], noteDurations[i]);  // Play note
    digitalWrite(ledPin, HIGH);                    // LED on
    delay(noteDurations[i]);                       // Wait for the note duration
    digitalWrite(ledPin, LOW);                     // LED off
    noTone(buzzerPin);                             // Stop the note
    delay(50);                                     // Short gap between notes
  }
}

void setup() {
  pinMode(ledPin, OUTPUT);
  pinMode(buzzerPin, OUTPUT);
}

void loop() {
  playMelody();  // Play melody
  delay(1000);   // Pause before repeating
}
```

Next I compiled and uploaded the code from the Arduino IDE directly to the board, and here is the end result:

It hasn’t quite replicated the sound of the initial recording, but it’s making some tune.