Proposal

Touching the untouchable (interactive zone)

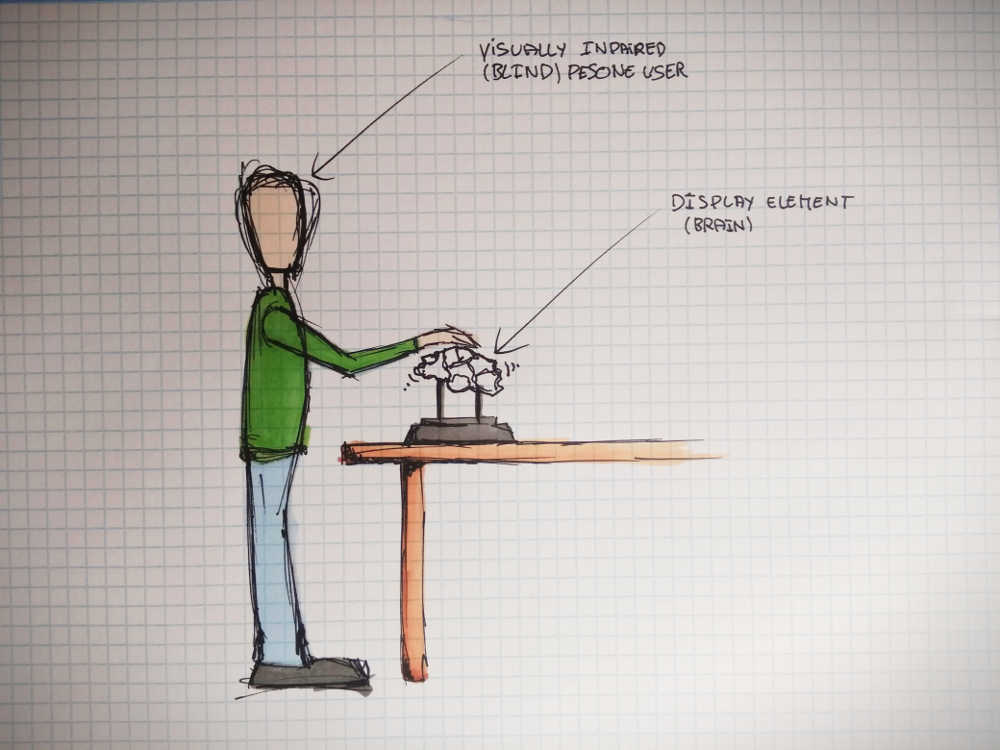

“Hoy toca neurociencia” is an interactive exhibition project designed for the visually impaired community. Although visually impaired visitors are its principal target users, the exhibition is inclusive and should be accessible to everyone. The project teaches how the inside of the human body is built and, more specifically in this case, how the human brain functions.

This assignment will finalise the development done so far and focuses on one element of the exhibition. The aim is to further develop a representation of the brain that provides a unique experience for people who cannot see. Each individual part of the model will vibrate and light up (lighting to be confirmed, depending on the time left) while the user explores the model by touch. In addition, an informative audio track will describe the part that is vibrating.

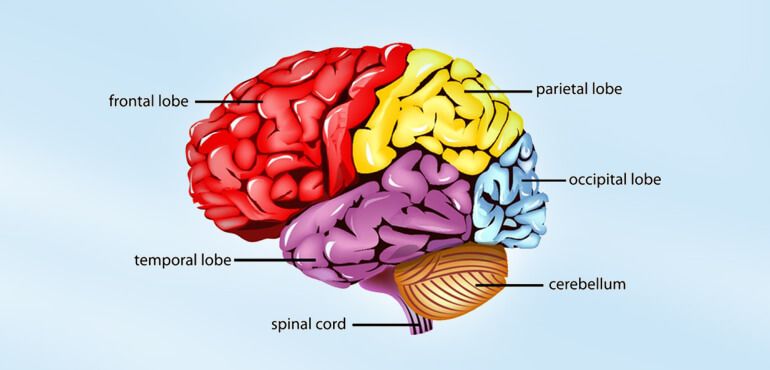

The cerebrum (cortex) is made up of the four largest parts of the human brain:

- Frontal Lobe

- Parietal Lobe

- Occipital Lobe

- Temporal Lobe

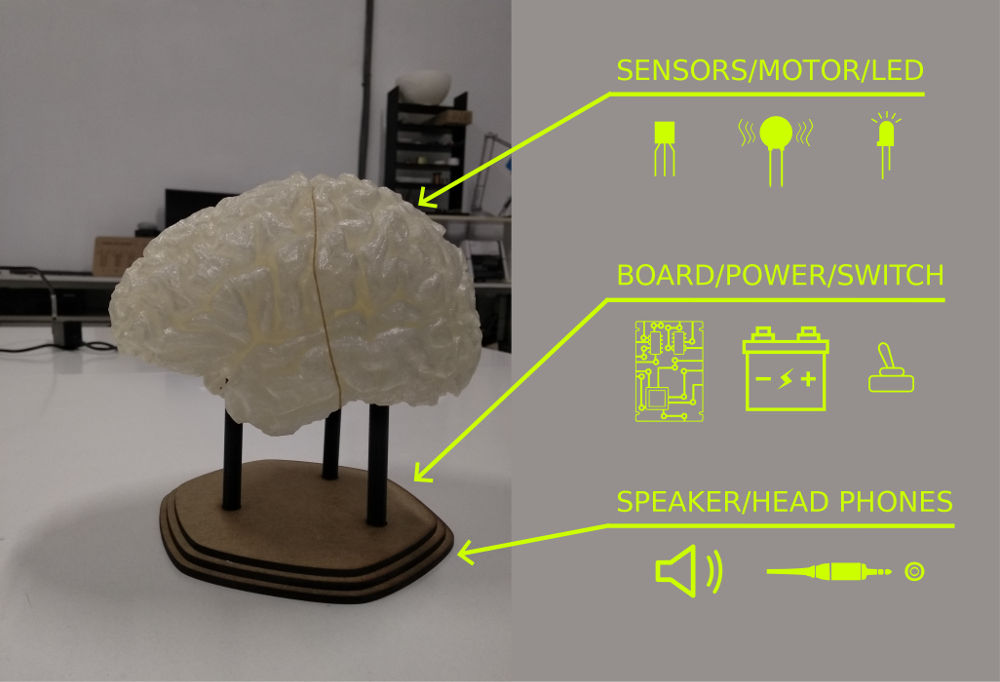

So far, this first prototype is made of 3D-printed PETG (brain), PLA (legs) and laser-cut MDF (base). I am hoping to replace the part of the brain that will be touched with some type of silicone and to make the base heavier, perhaps by casting it in concrete/cement or resin (it could also be milled from wood).

I am thinking of using different materials to produce the brain. I am hoping to make a mold from a 3D-printed model (SLA) using alginate (alga cast/body cast, first layer) reinforced with plaster (plaster rolls reinforced with textile). There should be a rigid center part (FDM) where I hope to put some of the electronics; it will also serve as an access point through which to insert the motors once the silicone has set. I am not yet sure how to make the base, maybe from wood or concrete to make it heavy. It also needs to include the controls...

The user can read the instructions and activate a routine. Each motor and LED (?) will follow that routine along with the audio. While listening to the information, the user can touch the representation model (brain) and feel where the different vibrations are located. The vibrating area changes to point out the different regions of the brain.
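
As a rough sketch of how such a routine could be sequenced, the Python below builds a timeline with one step per lobe and reports which motor (and, optionally, which LED) should be on at a given moment of the audio track. Everything in it is an assumption made for illustration: the names `Step`, `build_routine`, `active_lobe` and `outputs_at` are hypothetical, the 20-second duration and 2-second pause are placeholders rather than measured audio lengths, and no real hardware is driven here.

```python
from dataclasses import dataclass

# The four lobes listed in this proposal; assumed: one vibration motor
# (and optionally one LED) per lobe.
LOBES = ["Frontal Lobe", "Parietal Lobe", "Occipital Lobe", "Temporal Lobe"]


@dataclass
class Step:
    lobe: str          # region that vibrates during this step
    start_s: float     # start time within the audio track, in seconds
    duration_s: float  # how long the region vibrates, in seconds


def build_routine(lobes, seconds_per_lobe=20.0, gap_s=2.0):
    """Build a sequential timeline: one step per lobe, with a pause between steps.

    The default timings are placeholders, not values from the real audio track.
    """
    steps = []
    t = 0.0
    for lobe in lobes:
        steps.append(Step(lobe, t, seconds_per_lobe))
        t += seconds_per_lobe + gap_s
    return steps


def active_lobe(routine, t):
    """Return the lobe that should vibrate at time t, or None during a pause."""
    for step in routine:
        if step.start_s <= t < step.start_s + step.duration_s:
            return step.lobe
    return None


def outputs_at(routine, t, use_leds=False):
    """Return the on/off state of every motor (and LED) at time t."""
    current = active_lobe(routine, t)
    return {
        step.lobe: {
            "motor": step.lobe == current,
            "led": use_leds and step.lobe == current,
        }
        for step in routine
    }


if __name__ == "__main__":
    routine = build_routine(LOBES)
    for t in (0, 21, 22, 45, 70):
        print(t, active_lobe(routine, t))
```

Keeping the timeline as plain data, separate from whatever finally switches the motors, would make it easy to adjust the timings once the audio track is recorded, and to leave the LEDs off if there is no time to add them.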