
Week12: Interface and application programming

Table of contents

- Assignment

- My Work

- Group Assignment

- Idea of Image Processing

- Experiment preparation

- Point of coding idea

- Experiment outcomes

- Step 1 : Stream gray-color

- Step 2 : Make mosaic pattern

- Step 3 : Make gray mosaic pattern

- Step 4 : Stream with gray mosaic pattern

- Step 5 : Stream gray mosaic pattern with receiving value

- Step 6 : Step 5 + change mosaic rate, switch gray/color

- Files

- Lessons Learned

- References

Assignment

- individual assignment

- write an application that interfaces a user with an input &/or output device that you made

- group assignment

- compare as many tool options as possible

My Work

Group Assignment

compare as many tool options as possible (link)

Idea of Image Processing

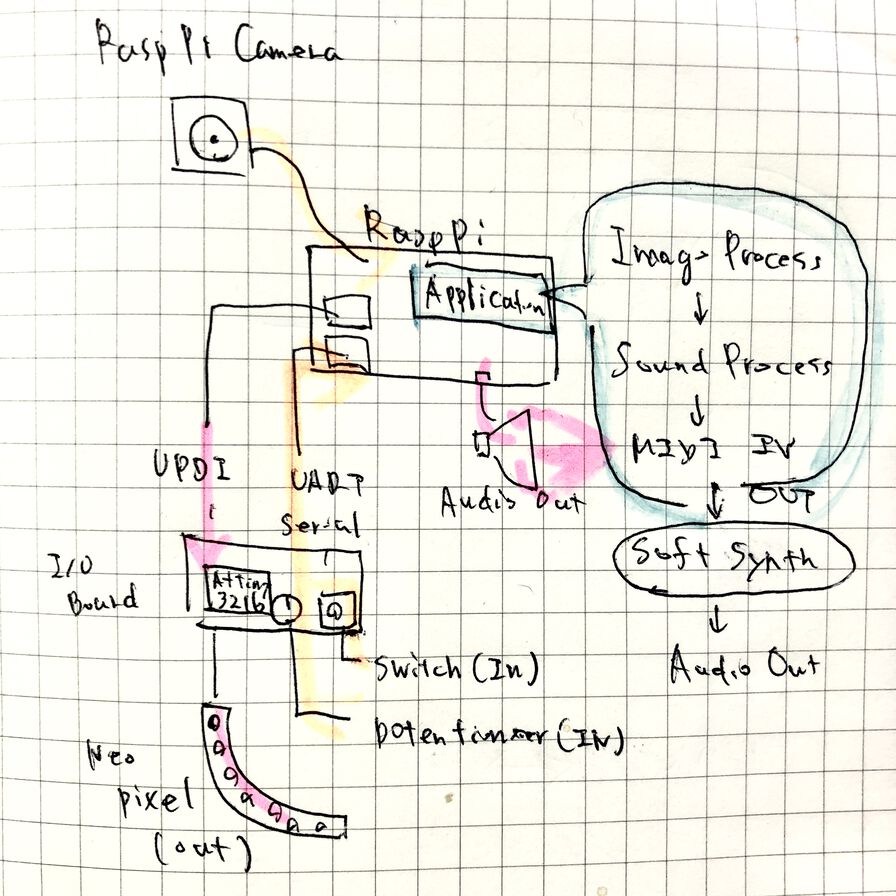

As I'm doing image processing and sound generation in my final project, I want to convert image information into a numeric array and then convert that into MIDI notes. This week, I tried a minimal implementation of image processing on a Raspberry Pi.

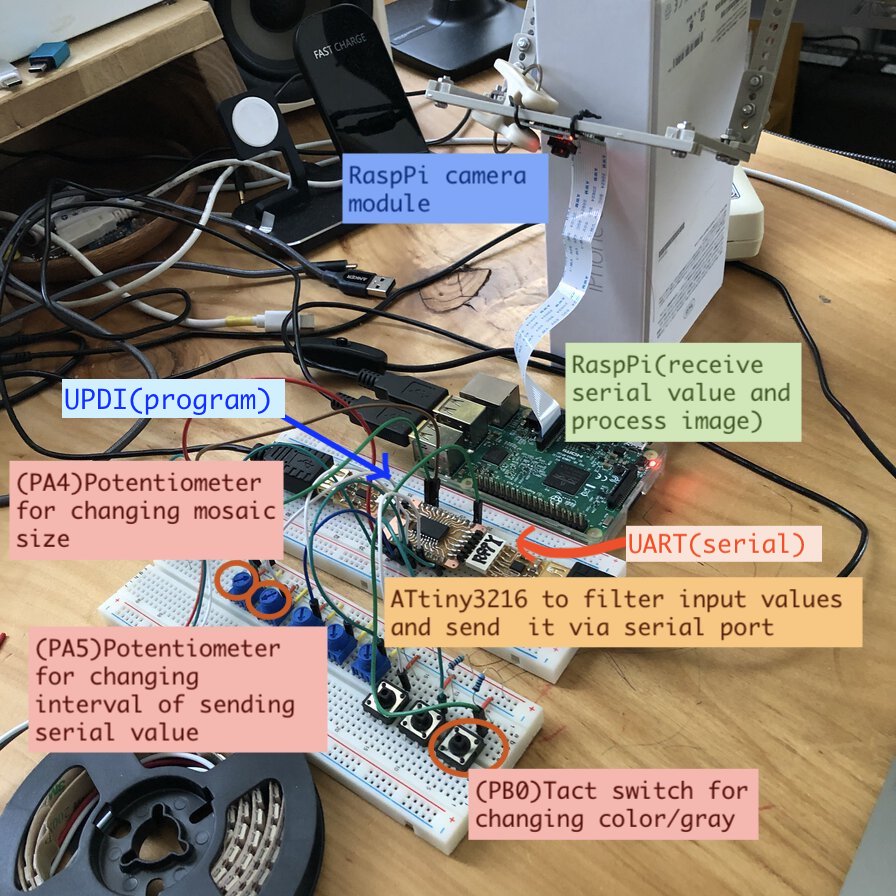

My idea is to validate Python code for three things: capturing images, receiving JSON-formatted values from the ATtiny3216 over serial communication, and using those values to process the image. Through this work, I want to walk through the basics of image processing and serial communication. I also want to cover other necessary parts such as exception handling and finalization.

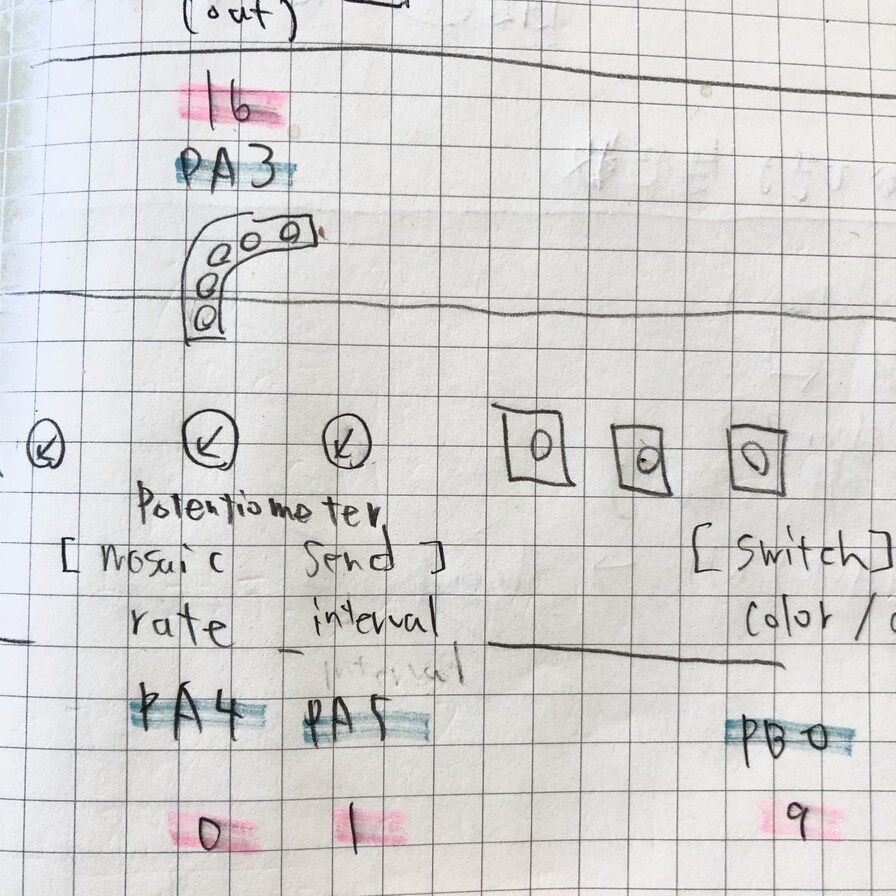

Using the input/output breakout board that I made in week 11, I assigned the potentiometer and switch values to OpenCV parameters.

Experiment preparation

Setup Raspberry Pi

I set up the Raspberry Pi: OS, network, Python and the camera module. My configuration notes for the RaspPi are on the linked RaspPi Tips pages.

Install OpenCV

In conclusion, I was able to run OpenCV on Python 3.5.3 on my Raspberry Pi. I could not run OpenCV on Python 3.8.2, 3.7.7, 3.7.5 or 3.7.2 in a virtual environment.

I set up pyenv so that I could run OpenCV and its related libraries in a virtual environment. I tried pyenv with Python 3.8.2, 3.7.7, 3.7.5 and 3.7.2. Some of the installations failed, and the others raised errors when I ran OpenCV. I took notes on that procedure in Tips of RaspPi - Install Open CV and install OpenCV on pyenv.

Connection

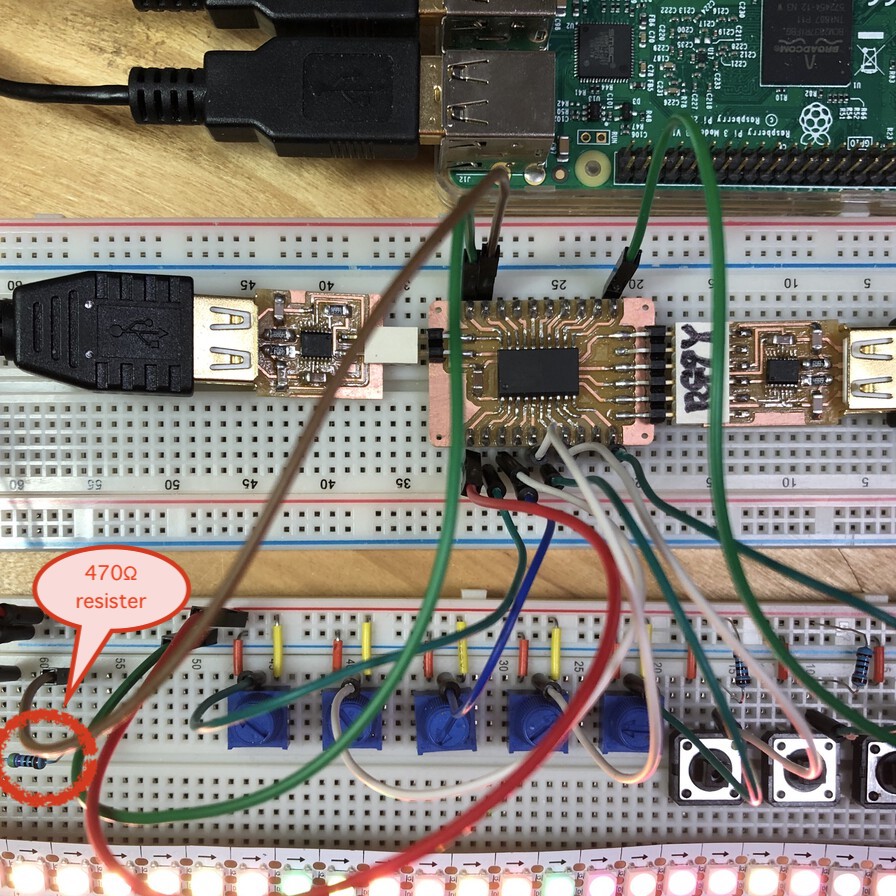

I set up the RaspPi (with the camera module) and the input/output board (ATtiny3216 breakout board + potentiometers, tact switches and a NeoPixel on a breadboard).

For writing the program, I also used the Raspberry Pi.

To operate the Raspberry Pi (keyboard and mouse input, and display), I used VNC Viewer from a Mac client.

Point of coding idea

I implemented an image processing application in Python 3 on the Raspberry Pi. It interfaces with the ATtiny3216 input/output board that I made in week 11 (Output device). I have a little experience with Python, but I'm new to image processing, serial communication and thread programming. Learning many things from the internet, I built a codebase that is at least good enough for experimental use. The following are the key coding ideas I tried.

- Incremental development

I developed the source code step by step:

- Step 1 : Stream gray-color image from camera

- Step 2 : Make mosaic pattern from image file

- Step 3 : Make gray mosaic pattern from image file

- Step 4 : Stream gray mosaic pattern from camera

- Step 5 : Stream gray mosaic pattern image from camera while receiving serial data from another board

- Step 6 : Stream image from camera, change mosaic rate and switch color between gray/RGB by receiving serial data from another board

- Generate JSON data in Arduino (ATtiny3216) and parse it in Python (RaspPi)

As the data format for transmitting input values between boards, I selected JSON.

Merits of using JSON:

- JSON can store nested arrays and objects, so even complex data structures can be represented

- Simple (easy to learn) and lightweight

- Can hold nulls, Boolean values and objects as values, not just numbers and strings

- Can be used in any environment

Arduino - ArduinoJson library (this needs to be installed with the Library Manager in the Arduino IDE) (extract of InOutSerial_v1.ino):

```cpp
#include <ArduinoJson.h>
// ~
StaticJsonDocument<200> doc;   // fixed-capacity document (200 bytes)
doc["m_rate"] = m_rate;
doc["interval"] = interval;
doc["color"] = color;
```

At first, I used a String-typed value for the "color" key. However, it was difficult to read the value from the StaticJsonDocument reliably. When the document was continuously updated, the String or char* value became null after 39 updates over serial communication.

Checking the ArduinoJson documentation, I found that String values are stored by copy. This means the JsonDocument needs a much bigger memory allocation when Strings are used.
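Here is one possible explanation for the value turning null after a fixed number of updates. It assumes the same global `doc` is reused without being cleared. As far as I understand ArduinoJson 6, the memory pool inside a JsonDocument is monotonic: when a copied String is replaced, the old copy's bytes are not released.

The toy Python model below shows how a fixed 200-byte pool runs out after a bounded number of re-assignments. It is a hypothetical simplification, not ArduinoJson's real allocator, and it ignores the slots that the members themselves occupy. If this is the cause, two changes should avoid the growth:

- calling `doc.clear()` before refilling the document, or
- storing a `const char*` that points to a string literal, which is stored by pointer.

```python
class MonotonicPool:
    """Toy fixed-capacity pool that never reclaims space (hypothetical model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.used = 0

    def copy_string(self, text):
        # Each copy needs the characters plus a terminating NUL byte.
        size = len(text.encode("utf8")) + 1
        if self.used + size > self.capacity:
            return None  # allocation fails, so the stored value reads back as null
        self.used += size  # the previous copy is NOT freed
        return text


def updates_until_null(capacity, value):
    """Count how many times `value` can be re-assigned (and copied) before the pool is full."""
    pool = MonotonicPool(capacity)
    count = 0
    while pool.copy_string(value) is not None:
        count += 1
    return count


print(updates_until_null(200, "gray"))  # 40 in this toy model (200 // 5 bytes)
```

The real library also spends pool space on each key/value slot, so the actual count would be lower. The point is only that, under this assumption, the count is finite.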

String or character - ArduinoJson reference memo: https://arduinojson.org/v6/example/string/

"Use String objects sparingly, because ArduinoJson duplicates them in the JsonObject. Prefer plain old char[], as they are more efficient in term of code size, speed, and memory usage."

https://arduinojson.org/v6/example/generator/

```cpp
// You can set a String to a JsonObject or JsonArray:
// WARNING: the content of the String will be duplicated in the JsonDocument.
obj["sensor"] = sensor;
```

Serialization tutorial, p.127 4.6 Duplication of strings

https://arduinojson.org/v6/doc/serialization/

"4.6 Duplication of strings: Depending on the type, ArduinoJson stores strings either by pointer or by copy. If the string is a const char*, it stores a pointer; otherwise, it makes a copy. This feature reduces memory consumption when you use string literals."

"As usual, the copy lives in the JsonDocument, so you may need to increase its capacity depending on the type of string you use."

"Avoid Flash strings with ArduinoJson: Storing strings in Flash is a great way to reduce RAM usage, but remember that ArduinoJson copies them in the JsonDocument. If you wrap all your strings with F(), you’ll need a much bigger JsonDocument. Moreover, the program will waste much time copying the string; it will be much slower than with conventional strings. I plan to avoid this duplication in a future revision of the library, but it’s not on the roadmap yet."

Python - json package (part of the standard library, so no additional installation is needed) (extract of cv2_6_video_serial_json.py):

```python
import json
import traceback
# ~
try:
    json_item = json.loads(item)  # item: one decoded line received over serial
    if json_item is not None:
        ratio = 1 / (json_item['m_rate'] / 10)  # mosaic ratio; m_rate == 0 raises ZeroDivisionError
        color = json_item['color']
        interval = json_item['interval']
except json.decoder.JSONDecodeError:
    print("video_stream(): JSONDecodeError:%s" % item)
    print(traceback.format_exc())
except EOFError:
    print("video_stream(): EOFError:%s" % item)
    print(traceback.format_exc())
except Exception:
    print("error")
    print(traceback.format_exc())
finally:
    pass  # ~
```

- Serialize data in Arduino (ATtiny3216) and deserialize it in Python (RaspPi)

To transmit data by serial communication over UART (USB), the data needs to be serialized (converted to bytes).
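To make the byte-level round trip concrete, here is a minimal Python sketch that plays both roles:

- `serialize_line()` imitates what the Arduino side is assumed to send: compact JSON from `serializeJson()`, followed by the `\r\n` that `Serial.println()` appends.
- `parse_line()` is a hypothetical receiver helper. It turns one `readline()` result back into a Python value, and returns `None` for a garbled or partial line instead of raising.

The parameter values are made up.

```python
import json


def serialize_line(params):
    """Sender side: dict -> one line of compact JSON bytes, CR LF terminated."""
    text = json.dumps(params, separators=(",", ":"))  # no spaces, like serializeJson()
    return (text + "\r\n").encode("utf8")


def parse_line(raw):
    """Receiver side: bytes from readline() -> parsed JSON value, or None if unusable."""
    try:
        return json.loads(raw.decode("utf8").strip())
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None


# Hypothetical values using the keys from InOutSerial_v1.ino
line = serialize_line({"m_rate": 5, "interval": 100, "color": "gray"})
print(line)                            # b'{"m_rate":5,"interval":100,"color":"gray"}\r\n'
print(parse_line(line))                # {'m_rate': 5, 'interval': 100, 'color': 'gray'}
print(parse_line(b'{"m_rate":5,"in'))  # None: a line cut off mid-transfer
```

Returning `None` keeps the decision of what to do with a bad line in the caller, which can simply skip that frame's update.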

Arduino - ArduinoJson library (extract of InOutSerial_v1.ino):

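```cpp
#include <ArduinoJson.h>

void setup() {
  Serial.begin(115200);  // must match the baud rate used on the Raspberry Pi side
}

// ~
serializeJson(doc, Serial);  // write doc to the UART as compact JSON text
Serial.println();            // end each message with "\r\n" so readline() can split messages
```

Python - PySerial package (extract of cv2_5_mosaic_gray_video_serial.py):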

```python
import sys
import traceback
from subprocess import getstatusoutput

import serial  # PySerial


def init_serial():
    # list the USB serial devices and open the first one that works
    status, outputs = getstatusoutput("ls -1 /dev/ttyUSB*")
    for port in outputs.split('\n'):
        try:
            baudrate = 115200
            s = serial.Serial(port=port, baudrate=baudrate)
            print("Serial is opened over %s in %i bps\n" % (port, baudrate))
            return s
        except serial.SerialException:
            print(traceback.format_exc())
    print("No device found\n")
    return None


# ~
quit_recv = False
s = init_serial()
if s is None:
    print("Serial port cannot be opened\n")
    sys.exit(1)
try:
    while not quit_recv:
        byte_item = s.readline()         # bytes up to and including b"\n"
        item = byte_item.decode('utf8')  # bytes -> str
        # use deserialized item
        # ~
except Exception:
    print(traceback.format_exc())
finally:
    s.close()  # close() must be called (with parentheses) to release the port
    sys.exit(0)
```

- Concurrent worker thread and internal queue handling

Receiving data from another board requires attention to performance. In particular, I need to process image and sound independently, even when the transmitting interval over the serial port is low. In my application, "interval" in the sender (the ATtiny3216 input board) is coordinated with the refresh rate of image streaming. However, the image (and sound in the future) should not be delayed when the serial receiving interval is low.

My idea is to use a concurrent worker thread that receives values from the serial port and puts the bytes into an internal queue. When the main image processing thread reads from the queue, it takes every queued item and uses only the latest one. This lets it catch up with the sender's "interval".

I used concurrent.futures and queue to realize this. In Python (extract of cv2_6_video_serial_json.py):

```python
import concurrent.futures
import queue
import sys
import time
import traceback

import serial  # PySerial, used by init_serial() from the previous extract

serial_q = queue.Queue()
quit_recv = False


# this function is executed from the main thread
def video_stream():
    try:
        while True:
            # take every queued item; byte_item ends up holding the latest one
            while not serial_q.empty():
                byte_item = serial_q.get()
            # deserialize, json parse and image process application
            # ~
    except Exception:
        print("error")
        print(traceback.format_exc())
    finally:
        pass  # ~


# this function is executed from the concurrent thread
def recv_serial(s):
    while not quit_recv:
        data = s.readline()
        serial_q.put(data)  # hand the received bytes to the main thread
    serial_q.put("Stop")    # marker after the receive loop ends


def main():
    global quit_recv
    s = init_serial()
    if s is None:
        print("Serial port cannot be opened\n")
        sys.exit(1)
    receiver = None
    try:
        # start recv_serial as concurrent executor
        quit_recv = False
        executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
        receiver = executor.submit(recv_serial, s)
        # start video stream
        video_stream()
    except concurrent.futures.CancelledError:
        print(traceback.format_exc())
        print("executor is cancelled\n")
    except Exception:
        print(traceback.format_exc())
    finally:
        print("main is waiting")
        quit_recv = True
        # wait until executor (receiver) finishes
        while receiver is not None and not receiver.done():
            time.sleep(1)
        s.close()
        print("main finished")
        sys.exit(0)
```
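A design note on shutdown: in the extract above, `recv_serial()` checks `quit_recv` only after `s.readline()` returns. So if the board stops sending, the `while not receiver.done()` loop can wait forever.

Below is a minimal sketch of one alternative. It assumes the real port is opened with a read timeout (for example pyserial's `serial.Serial(port, 115200, timeout=1)`), so that `readline()` returns `b""` periodically. It makes two changes:

- A `threading.Event` replaces the global flag.
- `drain_latest()` keeps only the newest line, as described above.

`FakeSerial` is a hypothetical stand-in so the sketch runs without hardware.

```python
import concurrent.futures
import queue
import threading
import time


class FakeSerial:
    """Hypothetical stand-in for serial.Serial: canned lines, then b'' like a read timeout."""

    def __init__(self, lines):
        self._lines = list(lines)

    def readline(self):
        if self._lines:
            return self._lines.pop(0)
        time.sleep(0.01)  # emulate waiting for the read timeout
        return b""


def recv_serial(port, q, stop):
    """Worker thread: queue every non-empty line until the stop event is set."""
    while not stop.is_set():
        data = port.readline()
        if data:  # b"" means the read timed out with nothing received
            q.put(data)


def drain_latest(q):
    """Main thread: empty the queue and return the newest item (None if it was empty)."""
    latest = None
    while True:
        try:
            latest = q.get_nowait()
        except queue.Empty:
            return latest


q = queue.Queue()
stop = threading.Event()
port = FakeSerial([b'{"m_rate":5}\n', b'{"m_rate":7}\n', b'{"m_rate":9}\n'])

with concurrent.futures.ThreadPoolExecutor(max_workers=1) as executor:
    receiver = executor.submit(recv_serial, port, q, stop)
    for _ in range(100):  # wait up to about 1 s for the three lines to be queued
        if q.qsize() >= 3:
            break
        time.sleep(0.01)
    latest = drain_latest(q)
    stop.set()          # ask the worker to finish; it notices within one read timeout
    receiver.result()   # re-raises any exception raised inside the worker

print(latest)  # b'{"m_rate":9}\n'
```

Using `get_nowait()` until `queue.Empty` avoids relying on `empty()` followed by `get()`. That gap only matters if more than one thread reads the queue, but it costs nothing to avoid.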

Experiment outcomes

Step 1 : Stream gray-color image from camera

Step 2 : Make mosaic pattern from image file

Step 3 : Make gray mosaic pattern from image file
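The mosaic steps have no code listing on this page. The following is a minimal sketch of one way to build a gray mosaic and the numeric array behind it; it is not the code in my files. It uses plain NumPy, on the assumption that OpenCV frames are NumPy arrays in BGR order:

- `to_gray()` applies the 0.299/0.587/0.114 weights that the OpenCV documentation gives for `cv2.cvtColor(..., cv2.COLOR_BGR2GRAY)`. OpenCV's own rounding may differ slightly.
- `mosaic_cells()` averages each `block` x `block` tile.
- `mosaic_image()` enlarges the cells again for display.

In OpenCV, a similar look is often obtained by shrinking the frame with `cv2.resize` and enlarging it again with `interpolation=cv2.INTER_NEAREST`.

```python
import numpy as np


def to_gray(bgr):
    """BGR uint8 image of shape (H, W, 3) -> gray uint8 image of shape (H, W)."""
    b, g, r = bgr[..., 0], bgr[..., 1], bgr[..., 2]
    gray = 0.114 * b + 0.587 * g + 0.299 * r  # float64 result, BT.601 weights
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)


def mosaic_cells(gray, block):
    """Average each block x block tile; the result is the small numeric array."""
    h, w = gray.shape
    h, w = h - h % block, w - w % block  # crop edges that do not fill a whole tile
    tiles = gray[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.mean(axis=(1, 3))  # shape (h // block, w // block)


def mosaic_image(cells, block):
    """Blow each cell back up to block x block pixels for display."""
    big = np.repeat(np.repeat(cells, block, axis=0), block, axis=1)
    return np.round(big).astype(np.uint8)


gray = np.arange(16, dtype=np.uint8).reshape(4, 4)
cells = mosaic_cells(gray, 2)
print(cells.tolist())  # [[2.5, 4.5], [10.5, 12.5]]
```

For a camera frame, this would be `mosaic_image(mosaic_cells(to_gray(frame), 16), 16)`. The intermediate `cells` array is the kind of numeric array I want to map to MIDI notes later.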

Step 4 : Stream gray mosaic pattern from camera

Step 5 : Stream gray mosaic pattern from camera while receiving values from another board over serial

This application processes the image while receiving values over the serial port. It does not use the values in the application yet; it only receives them. This version takes only the first (oldest) item remaining in the queue, so when the image processing is swamped, the video stream is delayed.

cv2_5_mosaic_gray_video_serial.py

InOutSerial_v1.ino
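A toy model shows why Step 5 lags and Step 6 does not. The numbers are hypothetical and there is no real timing:

- Each loop iteration is one processed frame.
- During that frame, the receiver adds `lines_per_frame` new lines to the queue.
- The consumer either takes only the oldest item (Step 5 style) or drains the queue and keeps the newest (Step 6 style).

`staleness` counts how many newer lines already exist than the one actually used.

```python
import queue


def simulate(frames, lines_per_frame, take_latest):
    """Per frame, how many newer lines exist than the one used (assumes lines_per_frame >= 1)."""
    q = queue.Queue()
    sent = 0
    staleness = []
    for _ in range(frames):
        for _ in range(lines_per_frame):
            sent += 1
            q.put(sent)  # sequence number of each received line
        if take_latest:
            value = None
            while not q.empty():  # Step 6 style: drain and keep the newest
                value = q.get()
        else:
            value = q.get()  # Step 5 style: only the oldest queued item
        staleness.append(sent - value)
    return staleness


print(simulate(5, 2, take_latest=False))  # [1, 2, 3, 4, 5]
print(simulate(5, 2, take_latest=True))   # [0, 0, 0, 0, 0]
```

In this model, whenever more than one line arrives per processed frame, the backlog (and therefore the delay) grows without bound if only the oldest item is taken.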

Step 6 : Stream image from camera, change mosaic rate and switch color between gray/RGB by receiving serial data from another board

This worked: the video is not delayed whether the interval is low or high.

A queue (first-in, first-out) may be unnecessary in this design, because it only uses the latest data at that point. However, it is good to have an architecture with a concurrent thread, since I also need to implement sound processing. I think it's better to make the receiver, sender, image processing and sound processing separate concurrent worker threads, to minimize unexpected communication and processing bottlenecks.

cv2_6_video_serial_json.py

InOutSerial_v1.ino
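The note above says a FIFO queue may be unnecessary when only the latest data is used. For the planned receiver / image / sound workers, one possible alternative is a lock-protected "latest value" slot. This is a hypothetical helper, not something in my current files.

`queue.Queue.get()` removes an item, so an image worker and a sound worker would each see only part of the data. `LatestValue.get()` instead lets every consumer read the same newest value. Its version counter tells a consumer whether anything changed since its last read.

```python
import threading


class LatestValue:
    """Thread-safe single slot holding only the newest value (hypothetical helper)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._value = None
        self._version = 0  # incremented on every set()

    def set(self, value):
        with self._lock:
            self._value = value
            self._version += 1

    def get(self):
        """Return (version, value) without removing anything."""
        with self._lock:
            return self._version, self._value


params = LatestValue()


def receiver():
    # Stand-in for the serial receiver thread: publish 100 parameter updates.
    for m_rate in range(1, 101):
        params.set({"m_rate": m_rate})


t = threading.Thread(target=receiver)
t.start()
t.join()

image_view = params.get()  # what an image worker would read
sound_view = params.get()  # a sound worker reads the same value; nothing is consumed
print(image_view)                # (100, {'m_rate': 100})
print(image_view == sound_view)  # True
```

A worker can remember the last version it processed and skip work when `get()` returns the same version again.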

Files

Lessons Learned

- Using OpenCV, we can easily retrieve a numeric array from an image.

- For serial communication alongside heavy application processing, concurrent threads are powerful.

- I do not think my application code is clean. I need to add a logger, delete or revise unnecessary procedures and investigation comments, and clean up the exception handling. I also need to tune the values assigned to OpenCV parameters. The potentiometer values are easily affected by the other potentiometers or resistors, so I will keep working on that.

- If possible, I want to port my application to a smaller board or microcontroller chip such as a Raspberry Pi Zero or an ESP32 kit. My priority is to finish my first cycle (including making sound). After that, I will consider moving to a smaller board or microcontroller.

References

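- How to install OpenCV on Linux

- Image processing with matrices: basics and contents, ~The reinventor of Python image processing~ (行列による画像処理 基礎編&目次 ~Python画像処理の再発明家~)

- For python, install hdf5/netcdf4

- OpenCV3 API reference

- Convert bytes to a string

- [Python introduction] How to parse JSON (【Python入門】JSONをパースする方法)

- ArduinoJson - StaticJsonDocument

- ArduinoJson - examples

- Exception handling in Python (try, except, else, finally) (Pythonの例外処理(try, except, else, finally))

- Implementing an interactive serial console with PySerial in Python (PythonのPyserialでインタラクティブなシリアルコンソールを実装する)

- Sample code collection for parallel and concurrent processing in Python (Pythonの並列・並行処理サンプルコードまとめ)

- concurrent.futures -- Launching parallel tasks (concurrent.futures -- 並列タスク実行)

- How to force-quit a program that includes parallel processing (並列処理を含むプログラムを強制終了させたい)

- Using queues with threads (スレッドでキューを使用する)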