Seeing the world!¶

Note

Find today’s code in the gitlab repo

Warning

Many of the examples from today, and many many more can be found at the official docs

Open CV¶

OpenCV (Open Source Computer Vision Library: http://opencv.org) is an open-source BSD-licensed library that includes several hundreds of computer vision algorithms.

Installation¶

You need to compile OpenCV from source form the master branch on github in order to get the python bindings.

Building from source

- Official: https://docs.opencv.org/4.3.0/df/d65/tutorial_table_of_content_introduction.html

- Guide for MAC: https://www.learnopencv.com/install-opencv-4-on-macos/

- Guide for Ubuntu: https://www.pyimagesearch.com/2017/09/25/configuring-ubuntu-for-deep-learning-with-python/

- General for Linuxes (including Pi): https://github.com/jayrambhia/Install-OpenCV

OpenCV-Python¶

OpenCV-Python is a library of Python bindings designed to solve computer vision problems.

Compared to languages like C/C++, Python is slower. That said, Python can be easily extended with C/C++, which allows us to write computationally intensive code in C/C++ and create Python wrappers that can be used as Python modules. This gives us two advantages: first, the code is as fast as the original C/C++ code (since it is the actual C++ code working in background) and second, it easier to code in Python than C/C++. OpenCV-Python is a Python wrapper for the original OpenCV C++ implementation.

OpenCV-Python makes use of Numpy, which is a highly optimized library for numerical operations with a MATLAB-style syntax. All the OpenCV array structures are converted to and from Numpy arrays. This also makes it easier to integrate with other libraries that use Numpy such as SciPy and Matplotlib.

Overwhelmed?

Choose how you want to use openCV. There are various frameworks (not only with python):

- There is an unofficial Python package using:

pip install opencv-python

But this is not maintained officially by OpenCV.org. See this post if you are running into problems.

You can also use:

- Processing (Java): https://github.com/atduskgreg/opencv-processing or Processing.py although it is not yet fully supported

- openFrameworks (C++): https://openframeworks.cc/documentation/ofxOpenCv/

Basics of image processing¶

- Image alignment: align images in pictures

- Object detection: detect object in images (where something is, knowing what we are looking for) - solvable with DL, but ML works too

- Identification: identify if one person matches another

Descriptors¶

When dealing with image processing problems, a common approach is to divide the image in subimages or patches. Each of the patches is a small portion of the original image which can then be used and compared to other patches of other images and extract conclusions. Are the objects the same? Are the objects of the same type? Ideally, we would need of a method to determine if a patch is the same to another one, and that this method is independent of image changes: rotation, lighting, noise… Welcome descriptors.

A descriptor is some function that is applied on the patch to describe it in a way that is invariant to all the image changes that are suitable to our application (e.g. rotation, illumination, noise etc.). A descriptor is “built-in” with a distance function to determine the similarity, or distance, of two computed descriptors. So to compare two image patches, we’ll compute their descriptors and measure their similarity by measuring the descriptor similarity, which in turn is done by computing their descriptor distance.

Source: GilCV Blog

More in wikipedia

https://en.wikipedia.org/wiki/Visual_descriptor

Some types

- HOG: Histogram of Oriented Gradients. Nice wrap-up here

- SIFT (non free)

- SURF (non free)

-

Binary descriptors: Are a way of representing a patch as a binary string, using only comparison of intensity (in separated channels if necessary).

- BRIEF

- ORB

- BRISK

- FREAK

- …

They are based on the following workflow. 1. Sampling pattern definition: where to sample points in the region around the descriptor. 2. Orientation compensation: some mechanism to measure the orientation of the keypoint and rotate it to compensate for rotation changes. 3. Sampling pairs: the pairs to compare when building the final descriptor.

Tutorial

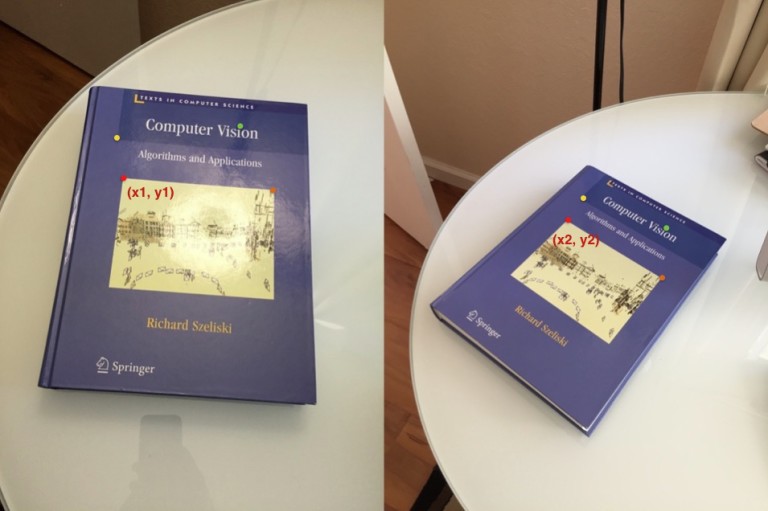

Homography¶

Homography is a transformation (a matrix) that maps the points in one image to the corresponding points in the other image.

Read about it in much nicer way

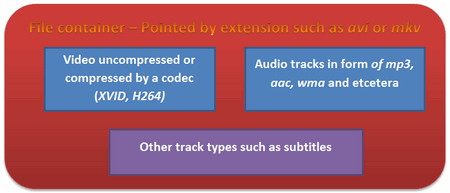

Video¶

For start, you should have an idea of just how a video file looks. Every video file in itself is a container. The type of the container is expressed in the files extension (for example avi, mov or mkv). This contains multiple elements like: video feeds, audio feeds or other tracks (like for example subtitles). How these feeds are stored is determined by the codec used for each one of them. In case of the audio tracks commonly used codecs are mp3 or aac. For the video files the list is somehow longer and includes names such as XVID, DIVX, H264 or LAGS (Lagarith Lossless Codec). The full list of codecs you may use on a system depends on just what one you have installed.

Tracking movement¶

Ultimately, we can try to detect things in a video, and identify them as different classes. We can find objects in a video by tracking color, pixel changes, or trying to build more complex classifiers, all the way up to deep learning algorithms.

Color tracking¶

We pick a color and track it’s centroid.

Example

Optical Flow¶

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene.

Tutorial

Identifying things¶

Haar Cascade Classifiers¶

OpenCV Face Detection: Visualized from Adam Harvey on Vimeo.

Source

Welcome to YOLO¶

YOLO performs object detection by creating a rectangular grid throughout the entire image. Then creates bounding boxes based on (x,y) coordinates. Class probability gets mapped by using random color assignment. To filter out weak detections, a 50% confidence is being used (this can change) which helps eliminate unnecessary boxes.

Note

The paper: https://arxiv.org/abs/1804.02767 The source: https://pjreddie.com/darknet/yolo/

Installing

Options: A) Install Darknet: https://pjreddie.com/darknet/install/ A.1) For weird image formats, use it with OPENCV (optional). For this, modify Makefile.

B) If using opencv and opencv-python, it comes already with darknet accessible:

Weights and cfg

Get the weights from here:

wget https://pjreddie.com/media/files/yolov3.weightsGet the yolov3.cfg from here:

wget https://github.com/pjreddie/darknet/blob/master/cfg/yolov3.cfgCheck in the Darknet github for more cfgs.

COCO dataset

COCO dataset is trained on 200,000 images of 80 different categories (person, suitcase, umbrella, horse etc…). It contains images, bounding boxes and 80 labels. COCO can be used for both object detection, and segmentation, we are using it for detecting people.

Note

We will be using the COCO dataset. You will find coco.names in the repository

References¶

- COCO - Common Objects in Context

- Learning Computer Vision Basics in Excel

- Corner Detector

- Real time person removal

- Introduction to descriptors and Tutorial on descriptors

- GilCV Code Examples

- Viola-Jones face detection and tracking explained

- Using non-free detectors

Model Zoos¶

PIL/Pillow¶

These are libraries for managing images in python (you can still use opencv) and achieve similar functions.

Note

Installation instructions here

Working on a Pi¶

- Rpi camera do’s and dont’s

- Optimizing opencv for the pi

- Face Recognition

- Object Detection with DL

- Facial landmarks