Contents

1. Objective

2. Tkinter

3. Framework

4. Tkinter and OpenCV

5. Reference

6. Download

1. Objective

So far, everything we have done has been text based. However, the games and programs we use on a home computer are rarely based on text alone. This chapter shows you how to create a proper graphical user interface (GUI).

2. Tkinter

Tkinter is Python's standard GUI toolkit and the most commonly used tool for creating a GUI in Python.

2.1 Hello World

To start with a graphical user interface, let's begin in the traditional way and show how it works by displaying the message "Hello World".

Code:

from tkinter import *

root = Tk()  # create a blank top-level window

# Create a label: Label(parent widget, text to show).
# pack() places the widget inside the "root" window.
Label(root, text="Hello World").pack()

# mainloop() runs the event loop, which keeps the window displayed
root.mainloop()

3. Framework

A class is normally used with Tkinter to represent each application window. Our first step is to create a frame for our application. Think of a frame as a container for other widgets.

Code:

from tkinter import *

class App:
    def __init__(self, master):
        # The frame is the container that holds all other widgets
        frame = Frame(master)
        frame.pack()

        Label(frame, text="Camera").grid(row=0, column=0)
        Label(frame, text="Properties").grid(row=0, column=1)
        Label(frame, text="USB port :").grid(row=1, column=1)

        buttonUpdate = Button(frame, text="Update")
        buttonUpdate.grid(row=2, column=1, columnspan=2)
        buttonUpdate.bind("<Button-1>", printName)  # <Button-1> means a left click

        Label(frame, text="X :").grid(row=3, column=1)
        Label(frame, text="Y :").grid(row=4, column=1)

    def convert(self):
        print("Not implemented")

# Event handler for the Update button; it receives the click event
def printName(event):
    print("Hello")

root = Tk()
root.wm_title("Tracker")
app = App(root)

# mainloop() runs the event loop, which keeps the window displayed
root.mainloop()

Window:

3.1 Layout

A particular problem you will encounter is controlling the GUI when the window is resized, for example keeping some widgets in the same place and at the same size while allowing other widgets to expand.

Code:

frame.pack(fill=BOTH, expand=1)

This ensures that the frame fills the enclosing root window, so the frame changes size together with the root window.
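The resizing behaviour described above can be sketched as follows. This is only an illustration: the helper name `build_layout` and the widgets inside it are made up for the example, and it uses `import tkinter as tk` rather than the star import so the names are explicit. The title label keeps its height, while the text area takes up any extra space.

```python
import tkinter as tk


def build_layout(master):
    """Pack a fixed-height title above a text area that absorbs resizing."""
    # The frame fills the whole root window and grows with it.
    frame = tk.Frame(master)
    frame.pack(fill=tk.BOTH, expand=1)

    # No expand: the label keeps its natural height, it only stretches sideways.
    title = tk.Label(frame, text="Tracker")
    title.pack(side=tk.TOP, fill=tk.X)

    # expand=1: the text area receives all extra space when the window grows.
    body = tk.Text(frame, width=40, height=10)
    body.pack(side=tk.TOP, fill=tk.BOTH, expand=1)
    return frame, title, body


# Usage (needs a display):
#   root = tk.Tk()
#   build_layout(root)
#   root.mainloop()
```

Drag a corner of the window to see the difference between the two widgets.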

3.2 Anchor

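The anchor option sets where the text sits inside the space given to a widget, using compass points such as N, SW or CENTER. See https://www.tutorialspoint.com/python/tk_anchors.htm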

Code:

Label(root, text= "text", anchor=SW)
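To make the effect of an anchor visible, the label needs more room than its text. A small sketch, assuming a hypothetical helper `make_anchored_label` and arbitrary sizes chosen only for the demonstration:

```python
import tkinter as tk


def make_anchored_label(master, anchor=tk.SW):
    """Create a label larger than its text so the anchor position is visible."""
    label = tk.Label(
        master,
        text="text",
        anchor=anchor,     # SW puts the text in the bottom-left corner
        width=20,          # width in characters (arbitrary demo value)
        height=5,          # height in text lines (arbitrary demo value)
        relief=tk.GROOVE,  # draw a border so the label area can be seen
    )
    label.pack(fill=tk.BOTH, expand=1)
    return label


# Usage (needs a display):
#   root = tk.Tk()
#   make_anchored_label(root, tk.SW)
#   root.mainloop()
```

Try other anchors such as `tk.NE` or `tk.CENTER` to see the text move inside the border.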

4. Tkinter and OpenCV

A Tkinter GUI application can display images loaded via OpenCV.
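One detail to watch is that OpenCV stores colour images in BGR channel order, while image formats used for display expect RGB. The sketch below shows that conversion with NumPy only. The function name `bgr_to_ppm` is made up for this example, and the binary PPM output is one possible choice, not necessarily what the GUI in this chapter does.

```python
import numpy as np


def bgr_to_ppm(frame):
    """Encode a BGR uint8 image (height x width x 3) as binary PPM bytes."""
    height, width = frame.shape[:2]
    # Reverse the last axis to turn BGR into RGB, then make the data contiguous.
    rgb = np.ascontiguousarray(frame[:, :, ::-1])
    header = b"P6 %d %d 255\n" % (width, height)
    return header + rgb.tobytes()


# A 2 x 3 test image with one pure blue pixel (blue comes first in BGR).
demo = np.zeros((2, 3, 3), dtype=np.uint8)
demo[0, 0] = (255, 0, 0)
ppm = bgr_to_ppm(demo)
print(ppm[:11])           # the PPM header
print(list(ppm[11:14]))   # first pixel, now in RGB order
```

As far as I know, Tk 8.6 can read such data through `PhotoImage(data=ppm, format="PPM")`. The article listed in the Reference section instead converts the frame with Pillow (`Image.fromarray` and `ImageTk.PhotoImage`).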

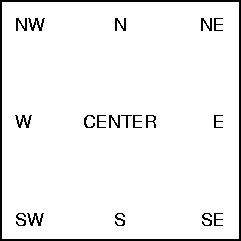

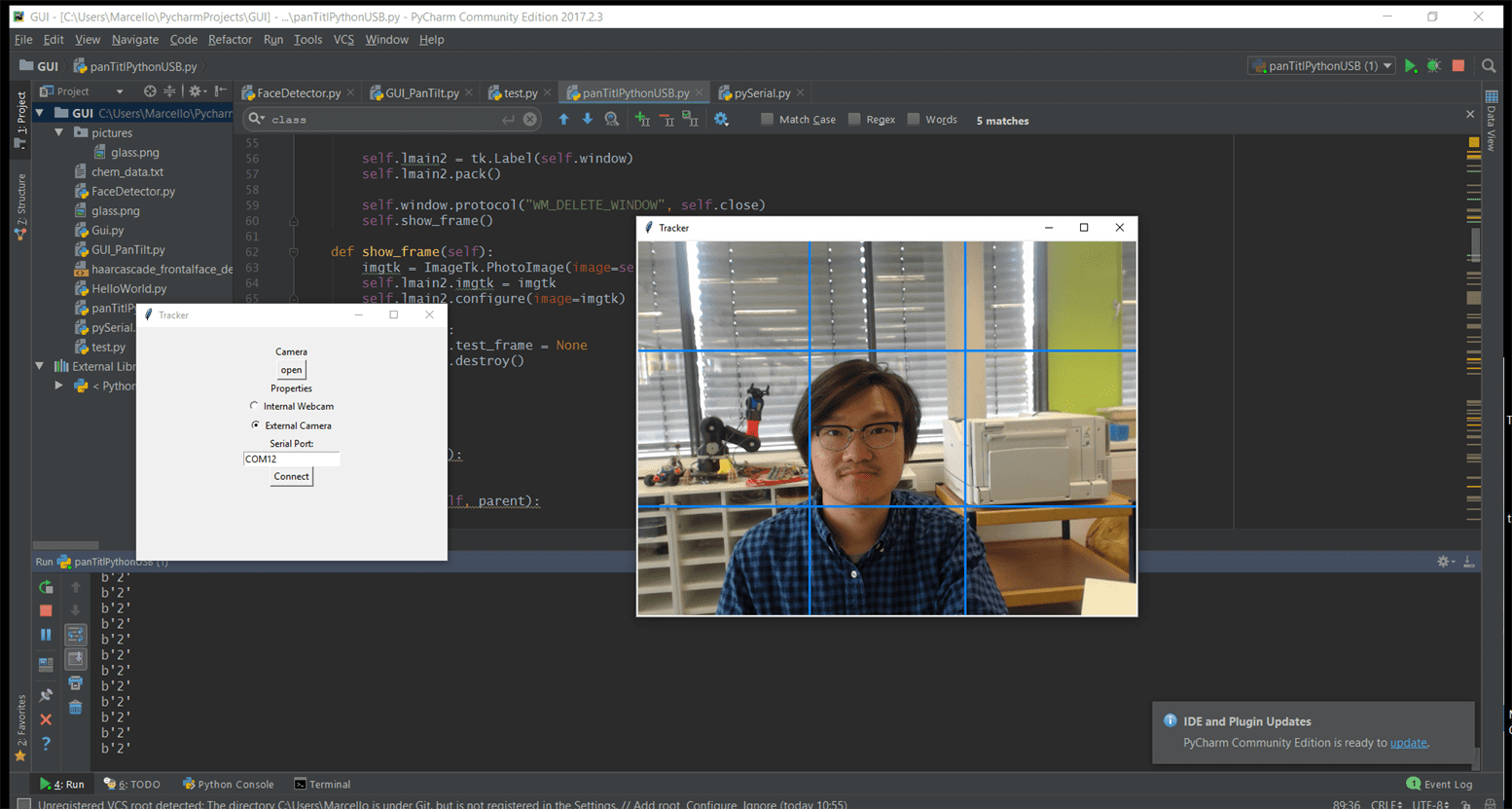

GUI

Architecture

5.3 Python

This code locates your face in the camera image and works out the command that is sent to the microcontroller to run a motor.

import cv2

import numpy as np

from IPython.display import clear_output

import IPython

# Set camera resolution.

cameraWidth = 640

cameraHeight = 480

face_classifier = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

# calculate the middle screen

midScreenX = int(cameraWidth / 2)

midScreenY = int(cameraHeight / 2)

midScreenWindow = 100

def face_detector(img):
    # Convert image to grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Classify faces in the gray image
    faces = face_classifier.detectMultiScale(gray, 1.3, 5)

    # No face found: return the image unchanged and an empty location
    if len(faces) == 0:
        return img, []

    for (x, y, w, h) in faces:
        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 255), 2)

        # find the midpoint of the face in the frame
        xCentre = int(x + (w / 2))
        yCentre = int(y + (h / 2))
        cv2.circle(img, (xCentre, yCentre), 5, (0, 255, 255), -1)
        localization = [xCentre, yCentre]
        # print(localization)

    # if several faces were found, the location of the last one is returned
    return img, localization

# Open Webcam

cap = cv2.VideoCapture(0) # 0 is camera device number, 0 is for internal webcam and 1 will access the first connected usb webcam

# Set camera resolution. The max resolution is webcam dependent

# so change it to a resolution that is both supported by your camera

# and compatible with your monitor

cap.set(cv2.CAP_PROP_FRAME_WIDTH, cameraWidth)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, cameraHeight)

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()
    if not ret:
        break  # stop if no frame could be read from the camera

    # mirror the frame
    frame = cv2.flip(frame, 1)
    image, face = face_detector(frame)

    # cv2.line(image, starting coordinates, ending coordinates, color, thickness)
    # left vertical line
    cv2.line(image, ((midScreenX - midScreenWindow), 0), ((midScreenX - midScreenWindow), cameraHeight), (255, 127, 0), 2)
    # right vertical line
    cv2.line(image, ((midScreenX + midScreenWindow), 0), ((midScreenX + midScreenWindow), cameraHeight), (255, 127, 0), 2)
    # upper horizontal line
    cv2.line(image, (0, (midScreenY - midScreenWindow)), (cameraWidth, (midScreenY - midScreenWindow)), (255, 127, 0), 2)
    # lower horizontal line
    cv2.line(image, (0, (midScreenY + midScreenWindow)), (cameraWidth, (midScreenY + midScreenWindow)), (255, 127, 0), 2)

    # only if a face is recognized
    if len(face) == 2:
        midFaceX = face[0]
        midFaceY = face[1]

        # Find out if the X component of the face is to the left of the middle of the screen.
        if (midFaceX < (midScreenX - midScreenWindow)):
            cv2.putText(image, "Move Left", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2)
            # if(servoPanPosition >= 5):
            #     servoPanPosition -= stepSize; #Update the pan position variable to move the servo to the left.

        if (midFaceX > (midScreenX + midScreenWindow)):
            cv2.putText(image, "Move Right", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2)

        if (midFaceY < (midScreenY - midScreenWindow)):
            cv2.putText(image, "Move Up", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2)

        if (midFaceY > (midScreenY + midScreenWindow)):
            cv2.putText(image, "Move Down", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2)

    # print(x)
    # print(y)

    # Display the resulting frame
    cv2.imshow('Video', frame)

    # Press Q on keyboard to exit
    if cv2.waitKey(25) & 0xFF == ord('q'):
        break

# When everything is done, release the capture
cap.release()

# The next three lines only apply when the code runs inside a Jupyter notebook
app = IPython.Application.instance()
app.kernel.do_shutdown(True)  # shut down and restart the kernel
clear_output()  # clear output

cv2.destroyAllWindows()
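The decision logic in the loop above can be separated from the camera code, which makes it easy to try out without a webcam. The sketch below does that. The function name `face_commands` and its default arguments are my own, chosen to match the 640 x 480 resolution and the 100 pixel window used above.

```python
def face_commands(face_x, face_y, mid_x=320, mid_y=240, window=100):
    """Return the move commands for a face centred at (face_x, face_y).

    No command is produced while the face stays inside the central window
    of +/- `window` pixels around the middle of the screen.
    """
    commands = []
    if face_x < mid_x - window:
        commands.append("Move Left")
    if face_x > mid_x + window:
        commands.append("Move Right")
    # Image rows grow downwards, so a small y value means the face is high up.
    if face_y < mid_y - window:
        commands.append("Move Up")
    if face_y > mid_y + window:
        commands.append("Move Down")
    return commands


print(face_commands(320, 240))  # [] (face is inside the central window)
print(face_commands(100, 240))  # ['Move Left']
print(face_commands(600, 50))   # ['Move Right', 'Move Up']
```

A face in a corner of the image yields two commands, which corresponds to the two texts being drawn on top of each other in the loop above.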

Arduino IDE

This code listens to the computer over the serial port. Depending on where your face is, the computer sends a command, and the microcontroller runs the motor accordingly.

const int stepPin1 = 12;
const int dirPin1 = 10;
int state_camera = 0;
int serialInput;

// the setup function runs once when you press reset or power the board
void setup() {
  // initialize digital pin LED_BUILTIN as an output.
  pinMode(LED_BUILTIN, OUTPUT);

  // initialize serial communication
  Serial.begin(115200); // use the same baud rate as the Python side
  while (!Serial);
  Serial.println("Serial is ready!");

  pinMode(stepPin1, OUTPUT);
  pinMode(dirPin1, OUTPUT);
}

// the loop function runs over and over again forever
void loop() {
  if (Serial.available()) {
    //Serial.println(state_camera);
    serialInput = Serial.read();

    switch (serialInput) {
      case 50: // ASCII code of the character '2'
        moveUp();
        break;
      case 56: // ASCII code of the character '8'
        moveDown();
        break;
    }
  }
}

void moveUp() {
  digitalWrite(LED_BUILTIN, HIGH); // turn the LED on (HIGH is the voltage level)
  digitalWrite(dirPin1, HIGH);     // set the direction in which the motor moves
  //Serial.println("moveUp");

  // move one step
  digitalWrite(stepPin1, HIGH);
  delayMicroseconds(700);
  digitalWrite(stepPin1, LOW);
  delayMicroseconds(700);
}

void moveDown() {
  digitalWrite(LED_BUILTIN, LOW); // turn the LED off by making the voltage LOW
  digitalWrite(dirPin1, LOW);     // set the opposite direction
  //Serial.println("moveDown");

  // move one step
  digitalWrite(stepPin1, HIGH);
  delayMicroseconds(700);
  digitalWrite(stepPin1, LOW);
  delayMicroseconds(700);
}

The GUI is used to select the COM port that the microcontroller is connected to, and then to open and choose a camera.

The camera vision sends commands to the microcontroller telling it where to move the motor. This week I tested it with one motor moving clockwise and counterclockwise.
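The sending side could look roughly like the sketch below. The Arduino code reacts to the values 50 and 56, which are the ASCII codes of the characters '2' and '8', so these are the bytes to send. Everything else here is an assumption made for illustration: the names `COMMAND_BYTES` and `send_command`, and the mapping from the "Move Up" and "Move Down" texts to those bytes. The `port` argument can be any object with a `write()` method, such as a pySerial `Serial` object.

```python
# Bytes understood by the Arduino sketch: '2' is ASCII 50, '8' is ASCII 56.
COMMAND_BYTES = {"Move Up": b"2", "Move Down": b"8"}


def send_command(port, command):
    """Write the byte for `command` to `port`; return True if a byte was sent."""
    payload = COMMAND_BYTES.get(command)
    if payload is None:
        return False  # unknown command: send nothing
    port.write(payload)
    return True


# Usage with pySerial (third-party package; "COM3" is only a placeholder):
#   import serial
#   with serial.Serial("COM3", 115200, timeout=1) as port:
#       send_command(port, "Move Up")
```

Each byte received makes the motor advance one step, so the command has to be sent repeatedly for as long as the face stays outside the central window.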

This is the hardware that we use for our experiment: an A4988 stepper motor driver, a stepper motor, a Satshakit microcontroller board, a laptop and a webcam.

The video shows you how to set up the system with the GUI that I made with the help of my instructor, Daniela Ingrassia.

Finally, I could move my stepper motor according to the location of my face relative to the camera image.

5. Reference

https://www.pyimagesearch.com/2016/05/30/displaying-a-video-feed-with-opencv-and-tkinter/

6. Download

Python

Arduino IDE