Interface and Application Programming

This week's assignment was to write an application that interfaces with an input or output device. For this task I used the Processing programming language and a Fabduino.

Fabduino:

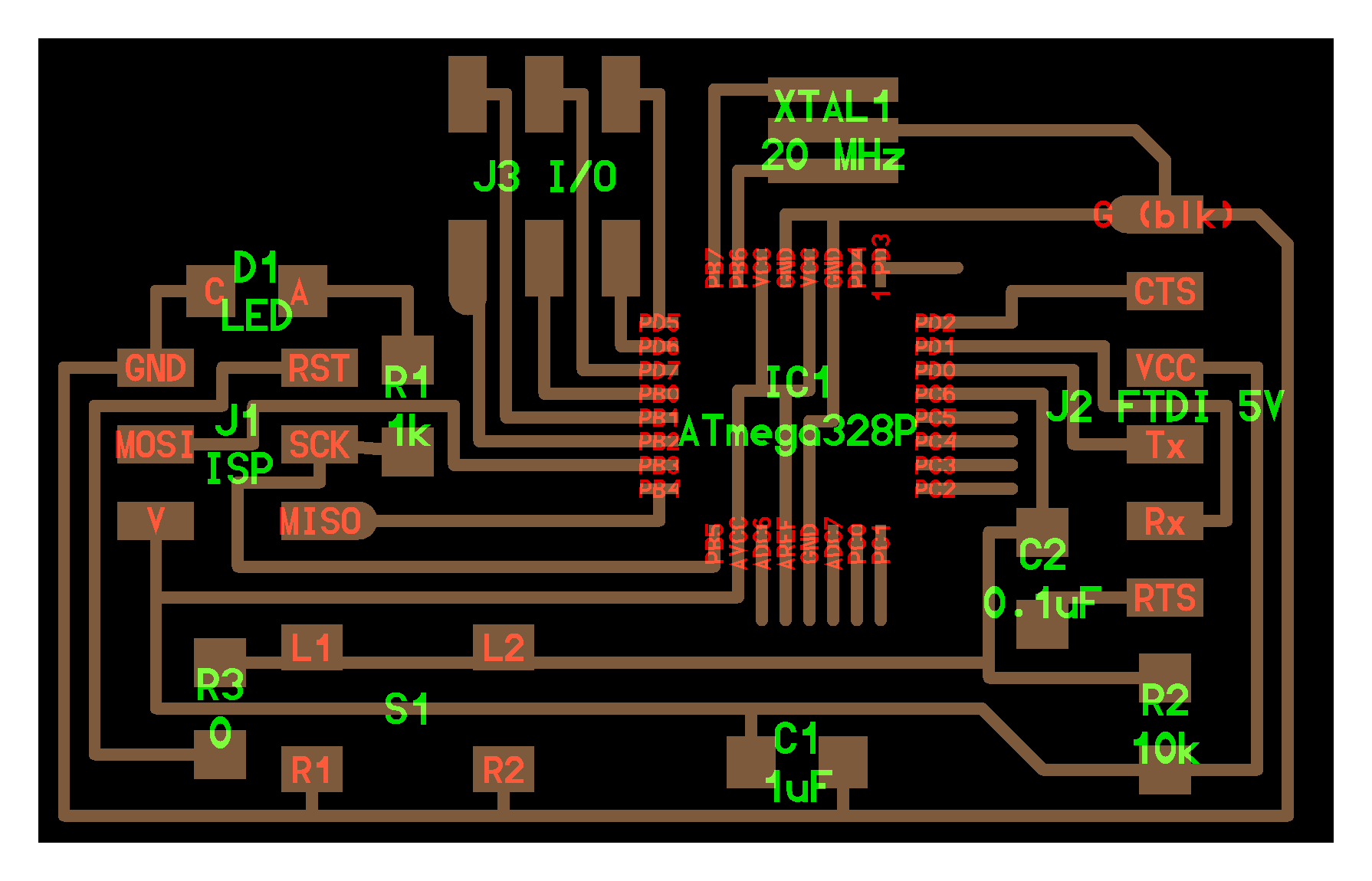

Making the Fabduino was somewhat problematic. I first tried an ATmega328 running at 20 MHz, but I had several problems soldering the microcontroller. The other problem came when burning the bootloader: the fuse settings I found on the internet did not work, so I built the Fabduino design from the Fab Academy page instead. The board was milled on the Roland Modela MDX-20.

Materials:

- ATmega328P
- 10 kΩ resistor
- 1 kΩ resistor
- 0 Ω resistor
- 0.1 µF capacitor
- 1 µF capacitor
- SMD LED
- Button
- Headers

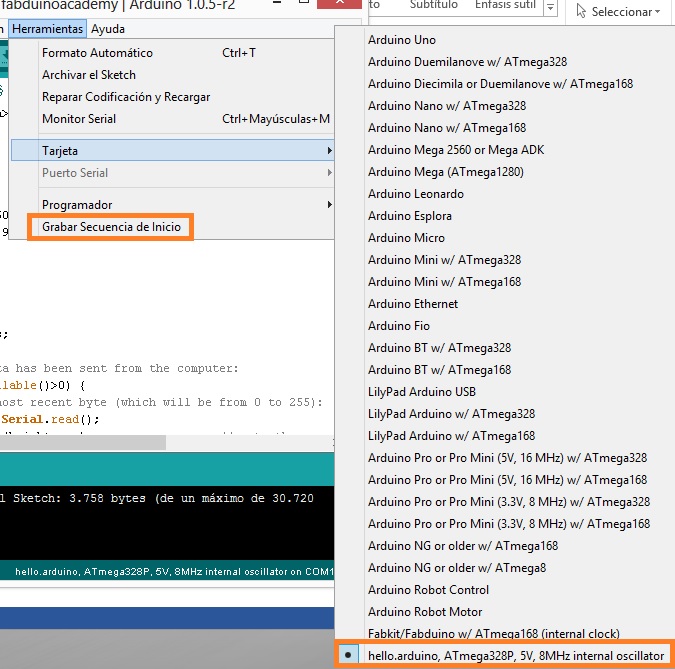

Programming: To burn the bootloader onto the microcontroller, we need the FabISP and an FTDI cable. First, copy the text from the following link into the file boards.txt, located in the folder "Arduino/hardware/arduino": boards.txt. Then open the Arduino IDE, select the board "hello.arduino, ATmega328P, 5V, 8MHz internal oscillator", and click Tools > Burn Bootloader.

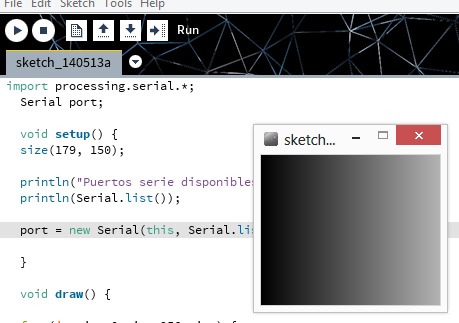

Processing: Processing is a Java-based programming language. It is often used to build interfaces and generative art, but it is versatile enough for desktop, mobile, and web applications. It can be downloaded from https://processing.org/download/

Development of the interface: First, I wrote a program that lets Processing communicate with my Fabduino over serial to move a servo motor. Moving the cursor horizontally across the display window changes the servo's angle: the Processing sketch sends the cursor's x position as a single byte, and the Fabduino writes that value to the servo.

Arduino code:

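```cpp
#include <Servo.h>

Servo myservo;

void setup() {
  Serial.begin(9600);   // must match the baud rate used in Processing
  myservo.attach(9);    // servo signal wire on pin 9
}

void loop() {
  if (Serial.available() > 0) {
    byte angle = Serial.read();  // one byte = one angle sent by Processing
    myservo.write(angle);        // move the servo to that angle (degrees)
    delay(15);                   // give the servo time to reach the position
  }
}
```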

Processing code:

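```java
import processing.serial.*;

Serial port;

void setup() {
  // 179 px wide, so mouseX stays within 0-178 (a valid servo angle)
  size(179, 150);
  println("Available serial ports:");
  println(Serial.list());
  // open the first port in the list; change the index if the Fabduino is on another port
  port = new Serial(this, Serial.list()[0], 9600);
}

void draw() {
  // draw a grey gradient as the background
  for (int i = 0; i < 256; i++) {
    stroke(i);
    line(i, 0, i, 150);
  }
  // send the cursor's x position as a single byte
  port.write(mouseX);
}
```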
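The two programs above exchange one raw byte per value. The following plain-Java sketch illustrates that encoding; the class and method names are hypothetical and not part of Processing or Arduino. It assumes the sender keeps only the low 8 bits of an int (our understanding of a single-byte serial write) and that the receiver reads the byte back as an unsigned 0-255 value, as Arduino's `Serial.read()` returns. It shows why the 179-pixel window matters: every `mouseX` from 0 to 178 survives the round trip, while values of 256 or more would wrap around.

```java
// Hypothetical helper illustrating a one-byte-per-value serial protocol.
class OneByteProtocol {
    // Sender side: keep only the low 8 bits, as a single-byte serial write does.
    static byte encode(int value) {
        return (byte) (value & 0xFF);
    }

    // Receiver side: interpret the byte as unsigned (0-255).
    static int decode(byte b) {
        return b & 0xFF;
    }

    // True if a value makes the round trip unchanged.
    static boolean survives(int value) {
        return decode(encode(value)) == value;
    }

    public static void main(String[] args) {
        // mouseX in a 179-pixel-wide window ranges from 0 to 178
        for (int x = 0; x <= 178; x++) {
            if (!survives(x)) {
                throw new IllegalStateException("lost value " + x);
            }
        }
        System.out.println("all values 0-178 survive the round trip");
        // a window wider than 256 pixels would wrap around
        System.out.println("300 is received as " + decode(encode(300)));
    }
}
```

Keeping every value below 256 is what lets this protocol work without any framing or delimiters: each byte on the wire is one complete angle.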

Next, I developed a program that detects the movement of my right hand, using a Kinect and skeleton tracking from the SimpleOpenNI library. By moving my right hand from side to side, I control the angle of the servo motor: the hand's horizontal position is mapped to an angle between 0 and 179 and sent to the Fabduino over serial.

Arduino code: the same as above.

Processing code:

```java
import SimpleOpenNI.*;
import processing.serial.*;

Serial port;

/*---------------------------------------------------------------
 Variables
 ----------------------------------------------------------------*/
// create kinect object
SimpleOpenNI kinect;
// image storage from kinect
PImage kinectDepth;
// int of each user being tracked
int[] userID;
// user colors
color[] userColor = new color[]{ color(255,0,0), color(0,255,0), color(0,0,255),
  color(255,255,0), color(255,0,255), color(0,255,255)};
// position of head to draw circle
PVector headPosition = new PVector();
// turn headPosition into scalar form
float distanceScalar;
// diameter of head drawn in pixels
float headSize = 200;
// threshold of level of confidence
float confidenceLevel = 0.5;
// the current confidence level that the kinect is tracking
float confidence;
// vector of tracked head for confidence checking
PVector confidenceVector = new PVector();
// circle sizes for the right and left hand
float ellipseSize, ellipseSize1;
PVector rightHand = new PVector();
PVector leftHand = new PVector();

/*---------------------------------------------------------------
 Starts new kinect object and enables skeleton tracking.
 Draws window
 ----------------------------------------------------------------*/
void setup() {
  println("Ports");
  println(Serial.list());
  // start a new kinect object
  kinect = new SimpleOpenNI(this);
  // enable depth sensor
  kinect.enableDepth();
  // enable skeleton generation for all joints
  kinect.enableUser();
  // create a window the size of the depth information
  // (called before the drawing settings below, as Processing expects size() first)
  size(kinect.depthWidth(), kinect.depthHeight());
  // draw thickness of drawer
  strokeWeight(3);
  // smooth out drawing
  smooth();
  port = new Serial(this, Serial.list()[0], 9600);
}

/*---------------------------------------------------------------
 Updates Kinect. Gets users tracking and draws skeleton and
 head if confidence of tracking is above threshold
 ----------------------------------------------------------------*/
void draw() {
  // update the camera
  kinect.update();
  // get Kinect data and draw the depth image at (0,0)
  kinectDepth = kinect.depthImage();
  image(kinectDepth, 0, 0);
  // get all user IDs of tracked users
  userID = kinect.getUsers();
  // loop through each user to see if tracking
  for (int i = 0; i < userID.length; i++) {
    // if Kinect is tracking certain user then get joint vectors
    if (kinect.isTrackingSkeleton(userID[i])) {
      // get confidence level that Kinect is tracking head
      confidence = kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_HEAD, confidenceVector);
      // if confidence of tracking is beyond threshold, then track user
      if (confidence > confidenceLevel) {
        // change draw color based on user index (wraps if there are more than 6 users)
        stroke(userColor[i % userColor.length]);
        fill(userColor[i % userColor.length]);
        // draw the hands and send the servo angle
        drawSkeleton(userID[i]);
      }
    }
  }
  //saveFrame("frames/####.tif");
}

/*---------------------------------------------------------------
 Draw the hands of a tracked user and send the servo angle.
 Input is userID
 ----------------------------------------------------------------*/
void drawSkeleton(int userId) {
  // get 3D position of both hands
  kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_RIGHT_HAND, rightHand);
  kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_LEFT_HAND, leftHand);
  // convert to screen (projective) coordinates
  kinect.convertRealWorldToProjective(rightHand, rightHand);
  kinect.convertRealWorldToProjective(leftHand, leftHand);
  // closer hands (smaller z) get bigger circles
  ellipseSize = map(rightHand.z, 700, 2500, 50, 1);
  ellipseSize1 = map(leftHand.z, 700, 2500, 50, 1);
  ellipse(rightHand.x, rightHand.y, ellipseSize, ellipseSize);   // draw circle in right hand
  ellipse(leftHand.x, leftHand.y, ellipseSize1, ellipseSize1);   // draw circle in left hand
  // map the right hand's x position to a servo angle; constrain() keeps it
  // within 0-179 when the hand is outside the 100-700 range
  int angle = (int) constrain(map(rightHand.x, 100, 700, 0, 179), 0, 179);
  port.write(angle);  // sending data
}

/*---------------------------------------------------------------
 When a new user is found, print new user detected along with
 userID and start skeleton tracking. Input is userID
 ----------------------------------------------------------------*/
void onNewUser(SimpleOpenNI curContext, int userId) {
  println("New User Detected - userId: " + userId);
  // start tracking of user id
  curContext.startTrackingSkeleton(userId);
}

/*---------------------------------------------------------------
 Print when user is lost. Input is int userId of user lost
 ----------------------------------------------------------------*/
void onLostUser(SimpleOpenNI curContext, int userId) {
  println("User Lost - userId: " + userId);
}

/*---------------------------------------------------------------
 Called when a user is tracked.
 ----------------------------------------------------------------*/
void onVisibleUser(SimpleOpenNI curContext, int userId) {
}
```
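The servo angle comes from Processing's `map()`, which rescales the hand's x position from the 100-700 range used in the sketch to 0-179. `map()` is a plain linear rescale and does not clamp, so a hand outside that range yields an angle below 0 or above 179. The plain-Java sketch below re-implements the documented formulas of Processing's `map()` and `constrain()` (it is not their source code; the class name `RangeMapping` and the method `servoAngle` are hypothetical) to show what clamping changes.

```java
// Plain-Java versions of Processing's map() and constrain(), for illustration.
class RangeMapping {
    // Linear rescale from [start1, stop1] to [start2, stop2]; like map(), no clamping.
    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // Clamp amt into [low, high], like constrain().
    static float constrain(float amt, float low, float high) {
        return Math.max(low, Math.min(high, amt));
    }

    // Servo angle from the right hand's x position, using the ranges from the sketch.
    static int servoAngle(float handX) {
        return (int) constrain(map(handX, 100, 700, 0, 179), 0, 179);
    }

    public static void main(String[] args) {
        float[] xs = {50, 100, 400, 700, 800};
        for (float x : xs) {
            System.out.println("x=" + x + "  raw map=" + map(x, 100, 700, 0, 179)
                    + "  angle sent=" + servoAngle(x));
        }
    }
}
```

For x = 50 the raw value is negative and for x = 800 it exceeds 179; after clamping, the servo simply stays at its end position instead of receiving an out-of-range value.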

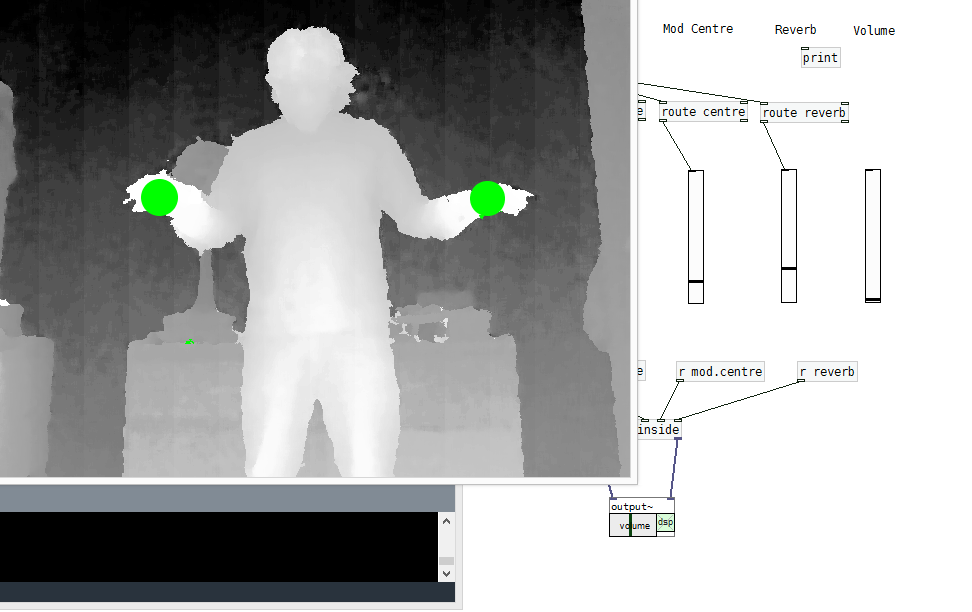

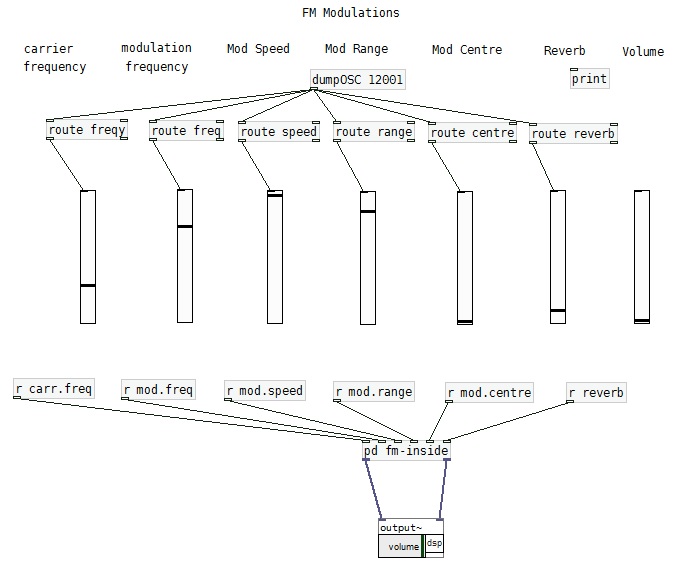

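Finally, using Pure Data and sound synthesis techniques, I created a virtual instrument that generates sound from the position of both hands in space. The x, y and z coordinates of the two hands give six parameters for the sound generation. The Processing sketch sends them to Pure Data as OSC messages (using the oscP5 and netP5 libraries) to 127.0.0.1, port 12001.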

Processing code:

```java
import SimpleOpenNI.*;
import oscP5.*;
import netP5.*;

/*---------------------------------------------------------------
 Variables
 ----------------------------------------------------------------*/
// create kinect object
SimpleOpenNI kinect;
// OSC object and the address of Pure Data (this computer, port 12001)
OscP5 oscP5;
NetAddress myRemoteLocation;
// image storage from kinect
PImage kinectDepth;
// int of each user being tracked
int[] userID;
// user colors
color[] userColor = new color[]{ color(255,0,0), color(0,255,0), color(0,0,255),
  color(255,255,0), color(255,0,255), color(0,255,255)};
// position of head to draw circle
PVector headPosition = new PVector();
// turn headPosition into scalar form
float distanceScalar;
// diameter of head drawn in pixels
float headSize = 200;
// threshold of level of confidence
float confidenceLevel = 0.5;
// the current confidence level that the kinect is tracking
float confidence;
// vector of tracked head for confidence checking
PVector confidenceVector = new PVector();
// circle sizes for the right and left hand
float ellipseSize, ellipseSize1;
PVector rightHand = new PVector();
PVector leftHand = new PVector();

/*---------------------------------------------------------------
 Starts new kinect object and enables skeleton tracking.
 Draws window
 ----------------------------------------------------------------*/
void setup() {
  // OSC on local port 12000; messages go to Pure Data at 127.0.0.1:12001
  oscP5 = new OscP5(this, 12000);
  myRemoteLocation = new NetAddress("127.0.0.1", 12001);
  // start a new kinect object
  kinect = new SimpleOpenNI(this);
  // enable depth sensor
  kinect.enableDepth();
  // enable skeleton generation for all joints
  kinect.enableUser();
  // create a window the size of the depth information
  // (called before the drawing settings below, as Processing expects size() first)
  size(kinect.depthWidth(), kinect.depthHeight());
  // draw thickness of drawer
  strokeWeight(3);
  // smooth out drawing
  smooth();
}

/*---------------------------------------------------------------
 Updates Kinect. Gets users tracking and draws skeleton and
 head if confidence of tracking is above threshold
 ----------------------------------------------------------------*/
void draw() {
  // update the camera
  kinect.update();
  // get Kinect data and draw the depth image at (0,0)
  kinectDepth = kinect.depthImage();
  image(kinectDepth, 0, 0);
  // get all user IDs of tracked users
  userID = kinect.getUsers();
  // loop through each user to see if tracking
  for (int i = 0; i < userID.length; i++) {
    // if Kinect is tracking certain user then get joint vectors
    if (kinect.isTrackingSkeleton(userID[i])) {
      // get confidence level that Kinect is tracking head
      confidence = kinect.getJointPositionSkeleton(userID[i], SimpleOpenNI.SKEL_HEAD, confidenceVector);
      // if confidence of tracking is beyond threshold, then track user
      if (confidence > confidenceLevel) {
        // change draw color based on user index (wraps if there are more than 6 users)
        stroke(userColor[i % userColor.length]);
        fill(userColor[i % userColor.length]);
        // draw the hands and send the OSC messages
        drawSkeleton(userID[i]);
      }
    }
  }
  //saveFrame("frames/####.tif");
}

/*---------------------------------------------------------------
 Draw the hands of a tracked user and send six OSC parameters.
 Input is userID
 ----------------------------------------------------------------*/
void drawSkeleton(int userId) {
  // get 3D position of hands
  kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_RIGHT_HAND, rightHand);
  kinect.getJointPositionSkeleton(userId, SimpleOpenNI.SKEL_LEFT_HAND, leftHand);
  // convert to screen (projective) coordinates
  kinect.convertRealWorldToProjective(rightHand, rightHand);
  kinect.convertRealWorldToProjective(leftHand, leftHand);
  // closer hands (smaller z) get bigger circles
  ellipseSize = map(rightHand.z, 700, 2500, 50, 1);
  ellipseSize1 = map(leftHand.z, 700, 2500, 50, 1);
  ellipse(rightHand.x, rightHand.y, ellipseSize, ellipseSize);
  ellipse(leftHand.x, leftHand.y, ellipseSize1, ellipseSize1);

  // right hand: x, y, z -> "freqy", "speed", "centre"
  OscMessage m = new OscMessage("freqy");
  m.add(map(rightHand.x, 100, 700, 50, 10000));
  OscMessage m1 = new OscMessage("speed");
  m1.add(map(rightHand.y, 0, 470, 10, 10000));
  OscMessage m2 = new OscMessage("centre");
  m2.add(map(rightHand.z, 1070, 2800, 0, 10000));
  oscP5.send(m, myRemoteLocation);
  oscP5.send(m1, myRemoteLocation);
  oscP5.send(m2, myRemoteLocation);

  // left hand: x, y, z -> "freq", "range", "reverb"
  OscMessage n = new OscMessage("freq");
  n.add(map(leftHand.x, 0, 380, 10, 10000));
  OscMessage n1 = new OscMessage("range");
  n1.add(map(leftHand.y, 0, 480, 100, 10000));
  OscMessage n2 = new OscMessage("reverb");
  n2.add(map(leftHand.z, 800, 2800, 0, 1));
  oscP5.send(n, myRemoteLocation);
  oscP5.send(n1, myRemoteLocation);
  oscP5.send(n2, myRemoteLocation);
}

/*---------------------------------------------------------------
 When a new user is found, print new user detected along with
 userID and start skeleton tracking. Input is userID
 ----------------------------------------------------------------*/
void onNewUser(SimpleOpenNI curContext, int userId) {
  println("New User Detected - userId: " + userId);
  // start tracking of user id
  curContext.startTrackingSkeleton(userId);
}

/*---------------------------------------------------------------
 Print when user is lost. Input is int userId of user lost
 ----------------------------------------------------------------*/
void onLostUser(SimpleOpenNI curContext, int userId) {
  println("User Lost - userId: " + userId);
}

/*---------------------------------------------------------------
 Called when a user is tracked.
 ----------------------------------------------------------------*/
void onVisibleUser(SimpleOpenNI curContext, int userId) {
}
```
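Each OSC message the sketch sends travels to Pure Data as a UDP packet in the OSC 1.0 binary format. As a rough illustration of that format (a hand-rolled encoder, not how oscP5 is implemented; the class name `OscSketch` and the address "/freq" are hypothetical), a message with one float argument consists of the address string, the type tag string ",f", and the value as a 32-bit big-endian float, with each string null-terminated and padded to a multiple of 4 bytes. OSC address patterns normally begin with "/".

```java
import java.io.ByteArrayOutputStream;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hand-rolled OSC 1.0 encoder for a message with one float argument (illustration only).
class OscSketch {
    // OSC string: ASCII bytes, then 1 to 4 nulls so the total length is a multiple of 4.
    static void writePaddedString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int nulls = 4 - (bytes.length % 4);
        for (int i = 0; i < nulls; i++) {
            out.write(0);
        }
    }

    // Address pattern, type tag string ",f", then the float argument.
    static byte[] encodeFloatMessage(String address, float value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writePaddedString(out, address);
        writePaddedString(out, ",f");
        // ByteBuffer is big-endian by default, as OSC requires
        byte[] f = ByteBuffer.allocate(4).putFloat(value).array();
        out.write(f, 0, f.length);
        return out.toByteArray();
    }

    public static void main(String[] args) throws Exception {
        byte[] packet = encodeFloatMessage("/freq", 440.0f);
        System.out.println("packet length: " + packet.length);
        // send one UDP datagram to the host and port the Processing sketch uses for Pure Data
        try (DatagramSocket socket = new DatagramSocket()) {
            InetAddress host = InetAddress.getByName("127.0.0.1");
            socket.send(new DatagramPacket(packet, packet.length, host, 12001));
        }
    }
}
```

Because OSC runs over UDP, each message is fire-and-forget: the sketch keeps sending six messages per frame whether or not Pure Data is listening.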