
This week's Fab Academy topic is application programming: we must write an application that interfaces with an input and/or output device.

I chose to write software that transforms a movie into a physical object via 3D printing:

the input is a movie and the output is sent to a RepRap, so it is G-code.

The movie is split into frames, each of which is individually:

- vectorised

- transformed to STL

Afterwards, a global G-code file is generated.

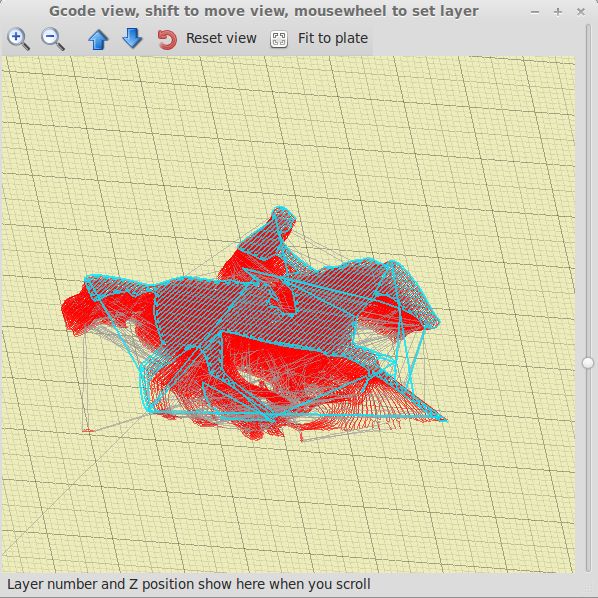

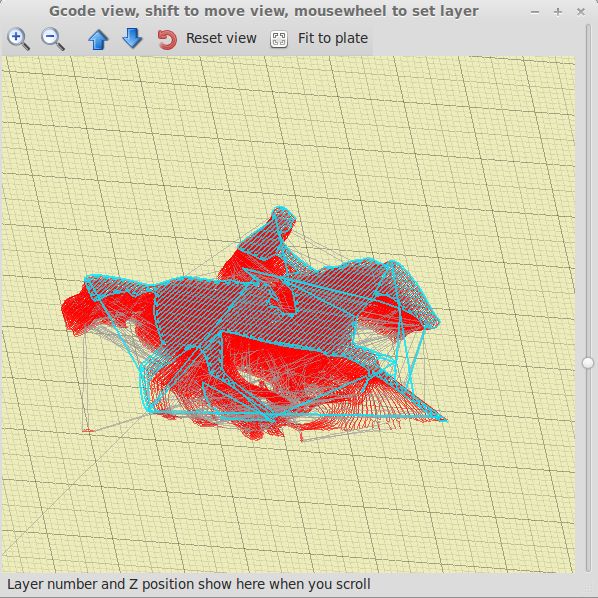

The result is a G-code file with one layer for each frame.

Optionally, the G-code can be sent directly to the RepRap.

The biggest difficulty is the conversion from an image sequence to a 3D mesh.

I tried the fab modules' gif_stl tool, but it has a small bug: if the images have different numbers of filled pixels, holes appear in the mesh.

This drawback isn't important for a small number of images, because the mesh can be fixed by hand,

but with a very large number of images (up to 1000) manual corrections are impossible.

So I examined the possibility of using OpenSCAD to build a stack of the extruded images.

But I quickly found that OpenSCAD uses a lot of resources (particularly memory) when extruding a large number of complex DXF files.

I failed to generate a large stack within a reasonable time and memory budget.

That is finally why I decided to generate one STL for each frame, then one G-code file per STL, and to stack the G-code files.

In the end, the result is conceptually interesting because I am able to build a physical object without ever having a mesh of it:

I only produce the G-code for printing.

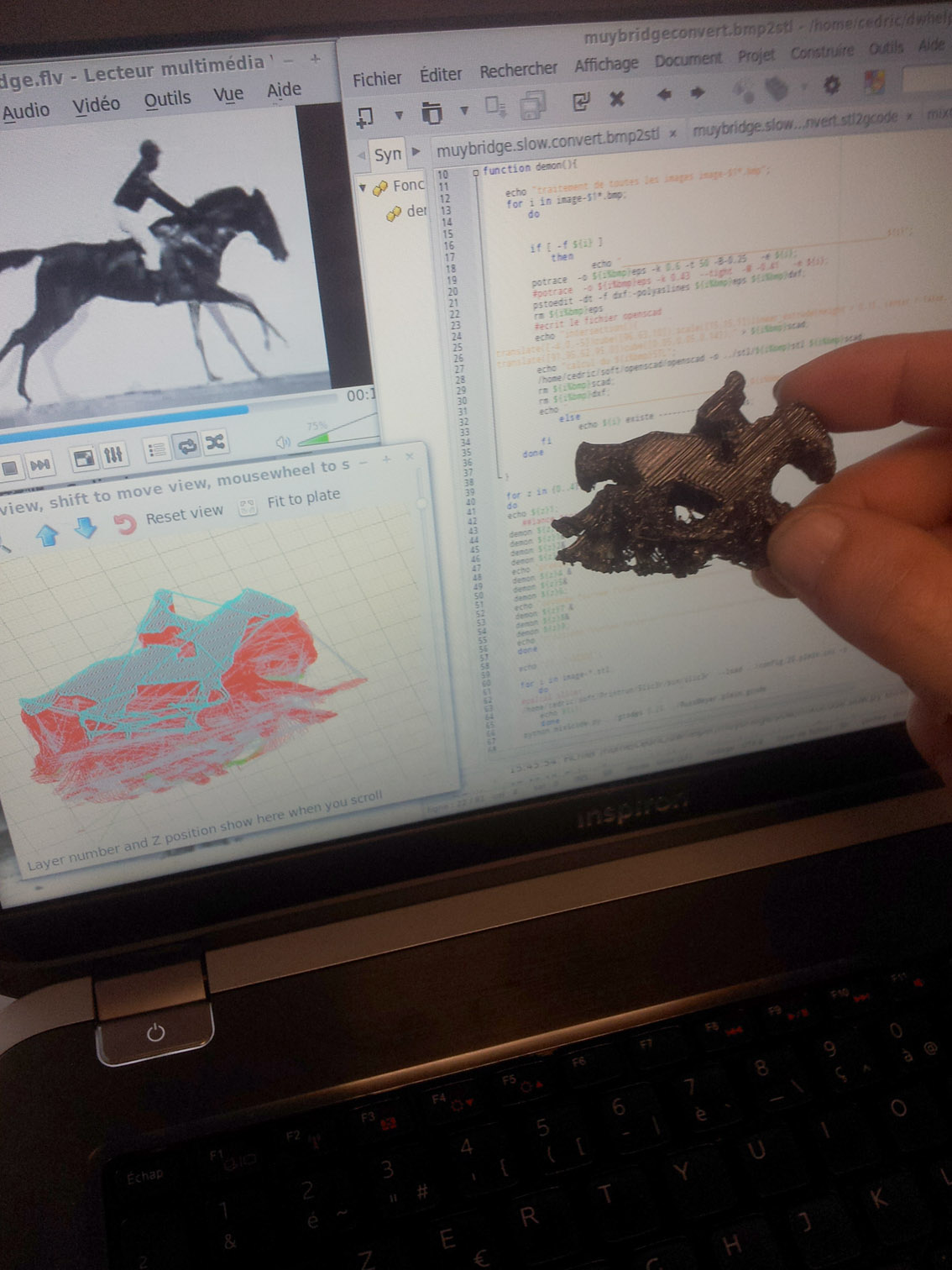

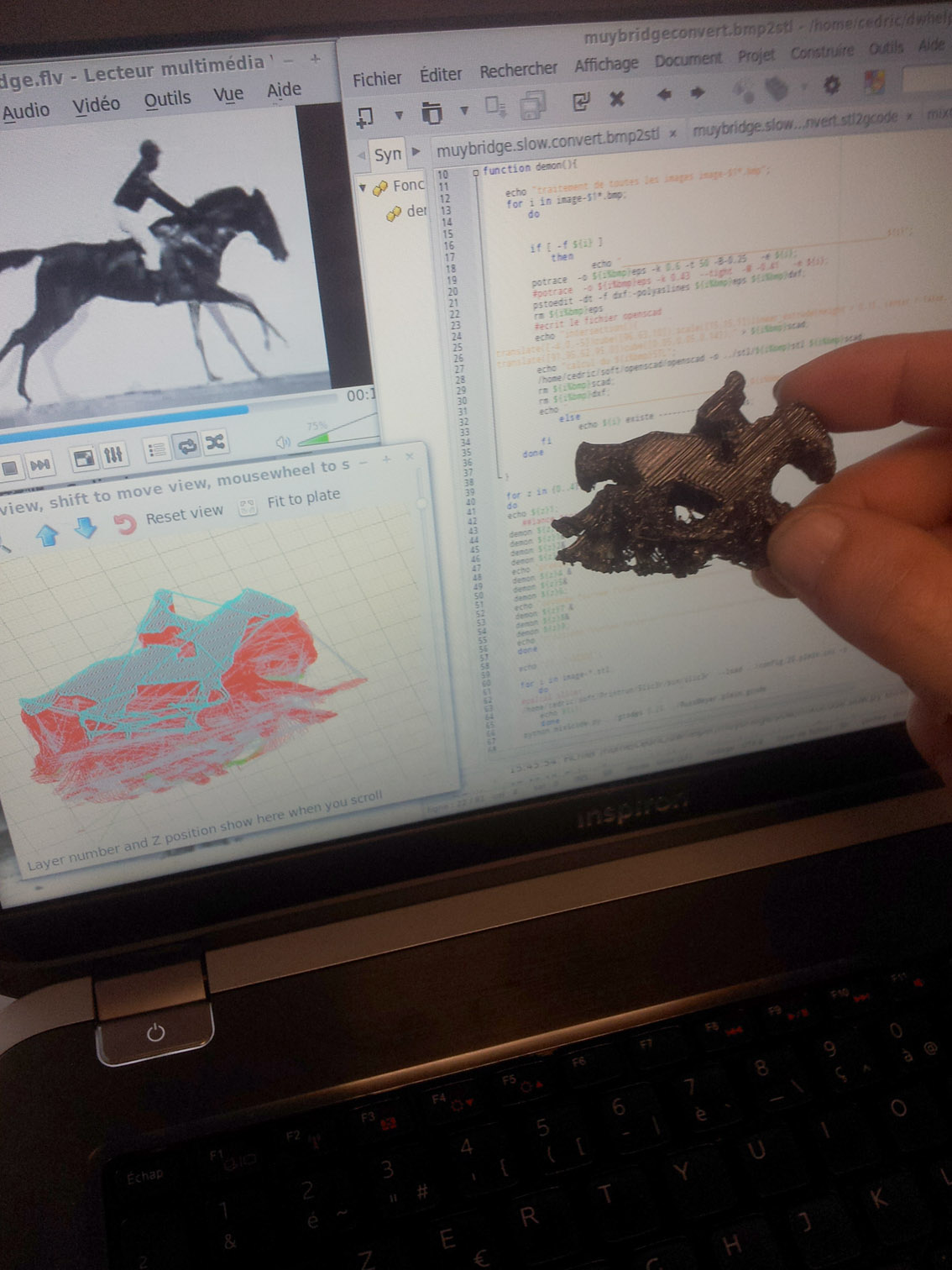

I began by writing a draft program to validate the toolchain.

I chose bash because I need to chain several different programs.

First, each frame of the movie has to be extracted.

For this I use ffmpeg:

ffmpeg -i RaceHorseMuybridge.flv images/image-%03d.bmp
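The same extraction step can also be driven from Python, the language the program is later rewritten in. This is only a minimal sketch: the helper names `ffmpeg_extract_command` and `extract_frames` and their parameters are hypothetical, and it assumes zero-padded frame numbers so that the files sort and glob in order.

```python
import subprocess
from pathlib import Path


def ffmpeg_extract_command(movie, out_dir="images", digits=3):
    """Build the ffmpeg argument list that writes one BMP per frame.

    Hypothetical helper: the name and defaults are illustrative assumptions.
    """
    pattern = Path(out_dir) / f"image-%0{digits}d.bmp"
    return ["ffmpeg", "-i", str(movie), str(pattern)]


def extract_frames(movie, out_dir="images"):
    """Run ffmpeg; this needs ffmpeg installed and available on the PATH."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(ffmpeg_extract_command(movie, out_dir), check=True)
```

Calling `extract_frames("RaceHorseMuybridge.flv")` would then fill the `images` folder; passing the command as a list avoids any shell quoting issue with file names.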

Then I build a loop to process each image individually.

function demon(){

echo "processing all images image-$1*.bmp";

for i in image-$1*.bmp;

do

if [ -f ${i} ]

then

echo "_________________________________________________________${i}";

potrace -o ${i%bmp}eps -k 0.6 -t 50 -B-0.25 -e ${i};

#potrace -o ${i%bmp}eps -k 0.43 --tight -M -0.41 -e ${i};

pstoedit -dt -f dxf:-polyaslines ${i%bmp}eps ${i%bmp}dxf;

rm ${i%bmp}eps

#write the OpenSCAD file

echo "intersection(){

translate([-4,0,-5])cube([96,63,10]);scale([15,15,1])linear_extrude(height = 0.15, center = false, convexity = 10) import (file = \"${i%bmp}dxf\");}translate([-4,0,0])cube(0.05);

translate([91.95,62.95,0])cube([0.05,0.05,0.14]);" > ${i%bmp}scad;

echo "computing ${i%bmp}STL";

/home/cedric/soft/openscad/openscad -o ../stl/${i%bmp}stl ${i%bmp}scad;

rm ${i%bmp}scad;

rm ${i%bmp}dxf;

echo "_______________________________________${i%bmp}STL ok";

else

echo "${i} does not exist";

fi

done

}

To define the filled and empty zones from the colours (or black and white) of a picture, I vectorise it with potrace:

potrace -o ${i%bmp}eps -k 0.6 -t 50 -B-0.25 -e ${i};

Potrace can output DXF directly, but that variant isn't readable by OpenSCAD,

which is why I use pstoedit to convert the EPS to DXF (this variant is well formatted for OpenSCAD).

pstoedit -dt -f dxf:-polyaslines ${i%bmp}eps ${i%bmp}dxf;
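The two conversion commands above can be expressed as a small Python helper. This is a sketch only: `vectorise_commands` is a hypothetical name, and the option values are simply copied from the draft script rather than tuned.

```python
from pathlib import Path


def vectorise_commands(bmp_path):
    """Return the potrace and pstoedit argument lists for one frame.

    Hypothetical helper; option values are copied from the draft script.
    """
    bmp = Path(bmp_path)
    eps = bmp.with_suffix(".eps")  # intermediate file written by potrace
    dxf = bmp.with_suffix(".dxf")  # final file read by OpenSCAD
    potrace = ["potrace", "-o", str(eps), "-k", "0.6", "-t", "50",
               "-B-0.25", "-e", str(bmp)]
    pstoedit = ["pstoedit", "-dt", "-f", "dxf:-polyaslines",
                str(eps), str(dxf)]
    return potrace, pstoedit
```

Each returned list can be passed to `subprocess.run(cmd, check=True)`, in that order, provided potrace and pstoedit are installed.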

For each image, I build an OpenSCAD file containing:

intersection(){

translate([-4,0,-5])cube([96,63,10]);

scale([15,15,1])

linear_extrude(height = 0.15, center = false, convexity = 10)

import (file = "${i%bmp}dxf");

}translate([-4,0,0])cube(0.05);

translate([91.95,62.95,0])

cube([0.05,0.05,0.14]);
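The OpenSCAD file shown above can be produced by a short Python function instead of an `echo`. This is a sketch under assumptions: `scad_for_frame` and its parameter names are hypothetical, and the geometry values are the ones from the draft script. The two tiny cubes at opposite corners presumably give every STL the same bounding box so that the slicer places each frame identically; that reading is an assumption, not something the draft states.

```python
def scad_for_frame(dxf_name, scale_xy=15, layer_height=0.15):
    """Return OpenSCAD source for one extruded frame.

    Hypothetical helper; geometry values are copied from the draft script.
    """
    return (
        "intersection(){\n"
        # Clipping box that limits the extruded outline to the print area.
        "  translate([-4,0,-5]) cube([96,63,10]);\n"
        f"  scale([{scale_xy},{scale_xy},1])\n"
        f"  linear_extrude(height = {layer_height}, center = false, convexity = 10)\n"
        f'  import(file = "{dxf_name}");\n'
        "}\n"
        # Two small corner markers, kept exactly as in the draft.
        "translate([-4,0,0]) cube(0.05);\n"
        "translate([91.95,62.95,0]) cube([0.05,0.05,0.14]);\n"
    )
```

Exposing the scale and layer height as parameters is one possible way to make the real size of the object adjustable without editing the template.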

Then I produce the STL file by running OpenSCAD:

openscad -o ../stl/${i%bmp}stl ${i%bmp}scad;

OpenSCAD isn't optimised for multiprocessor use, and it takes a long time to process each image:

for the Muybridge horse example, about 20 s per image.

That is why I decided to force parallel processing, to use the full computing power of my computer (which is a quad core) and reduce the processing time.

For the draft program, I simply use the shell's "&" operator to run jobs in the background:

for z in {0..4}

do

echo ${z}1;

## launch several demons in parallel on different parts of the image set

demon ${z}0&

demon ${z}1&

demon ${z}2&

demon ${z}3;

echo "first batch finished====================================================="

demon ${z}4 &

demon ${z}5&

demon ${z}6;

echo "second batch finished====================================================="

demon ${z}7 &

demon ${z}8&

demon ${z}9;

echo "third batch finished====================================================="

done

This strategy isn't very clean because the end of the background processes isn't correctly detected, but it's enough for a draft.
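One way to detect the end of processing reliably is a fixed-size worker pool. The sketch below uses Python's standard `concurrent.futures`; it is not the draft's method, and `process_all` and `process_frame` are hypothetical names. Threads are sufficient here on the assumption that the heavy work happens in external programs (potrace, pstoedit, OpenSCAD) started by each worker.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def process_all(frames, process_frame, workers=4):
    """Run process_frame on every frame with a fixed pool of workers.

    Returns {frame: result}. Leaving the `with` block waits for every
    worker, so the end of processing is detected without guessing.
    """
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(process_frame, f): f for f in frames}
        for future in as_completed(futures):
            # result() re-raises any exception raised inside the worker.
            results[futures[future]] = future.result()
    return results


if __name__ == "__main__":
    # Stand-in for the real per-frame work, for demonstration only.
    demo = process_all(["image-001.bmp", "image-002.bmp"],
                       lambda name: name.replace(".bmp", ".stl"))
    print(demo)
```

With `workers=4` the pool keeps four frames in flight at all times on a quad core, instead of waiting for a whole batch to finish before starting the next one.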

The G-code files are generated with Slic3r.

Because this program is already multithreaded, I run it separately from the STL loop:

for i in image-*.stl;

do

#run the slicer

slic3r --load ../config.25.plein.ini -o ../gcodes/${i%stl}gcode ${i}

echo ${i};

done

python mixGcode.py

The last command, "mixGcode.py", is a Python script that concatenates the G-code files, adding a Z offset for each layer.
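The idea of stacking with a Z offset can be illustrated as follows. This is not the author's script, only a minimal sketch: `offset_z` and `mix_gcodes` are hypothetical names, the 0.15 mm default is taken from the extrusion height used in the OpenSCAD file, and start/end G-code and extruder position resets, which a real script would have to handle, are ignored.

```python
import re

# Matches a Z word such as "Z0.15" inside a G-code move.
Z_WORD = re.compile(r"Z(-?\d+(?:\.\d+)?)")


def offset_z(gcode_text, z_offset):
    """Add z_offset to the Z word of every G0/G1 line; comments are untouched."""
    out = []
    for line in gcode_text.splitlines():
        code, sep, comment = line.partition(";")
        if re.match(r"\s*G[01]\b", code):
            code = Z_WORD.sub(
                lambda m: f"Z{float(m.group(1)) + z_offset:.3f}", code)
        out.append(code + sep + comment)
    return "\n".join(out)


def mix_gcodes(layers, layer_height=0.15):
    """Concatenate per-frame G-code strings, lifting frame n by n * layer_height."""
    return "\n".join(offset_z(text, n * layer_height)
                     for n, text in enumerate(layers))
```

Here `layers` would be the contents of the per-frame G-code files read in frame order; the first frame keeps its original height and each following frame is lifted by one more layer.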

The draft program is here: [file:Movie2gode.draft.zip]

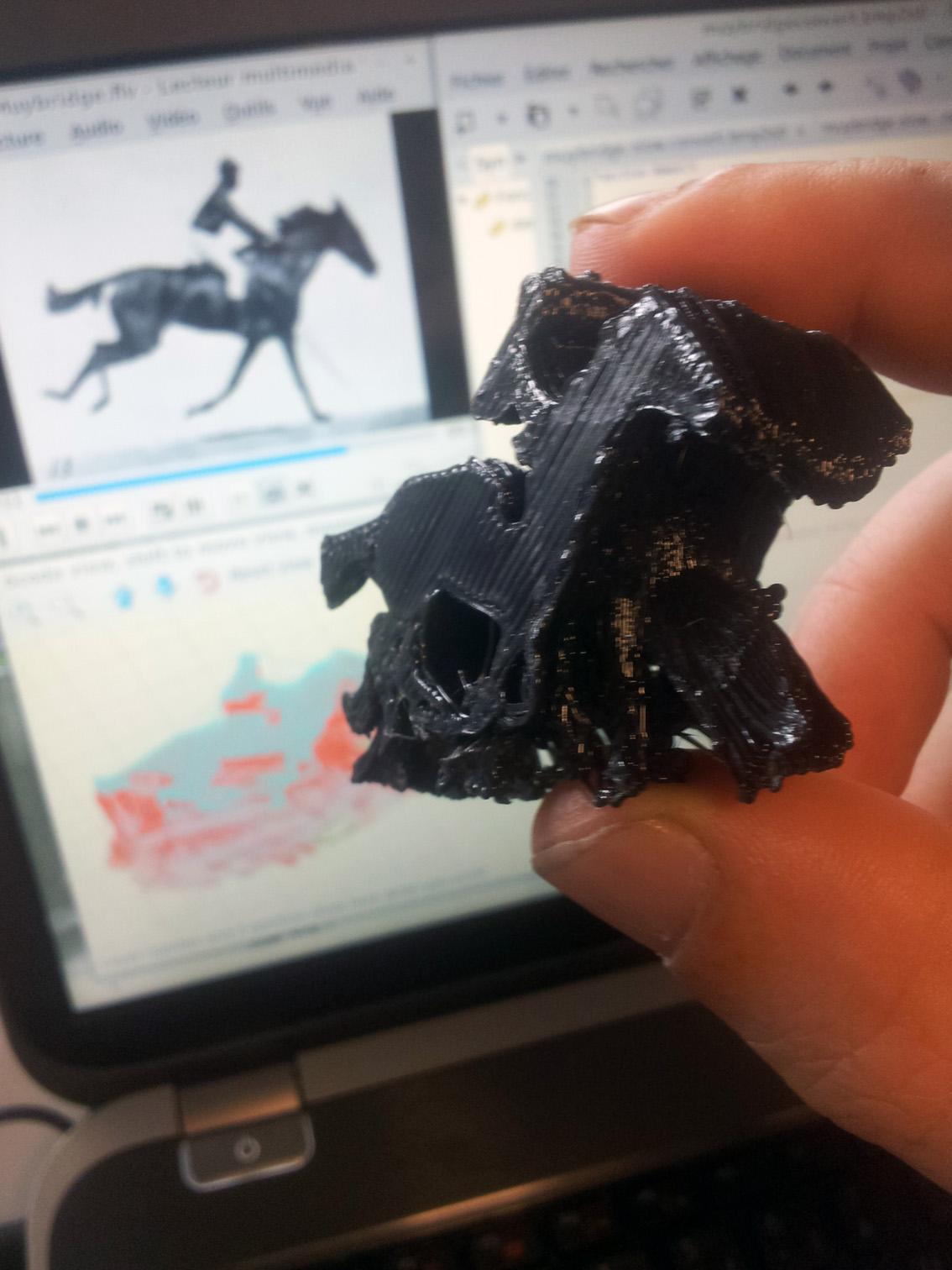

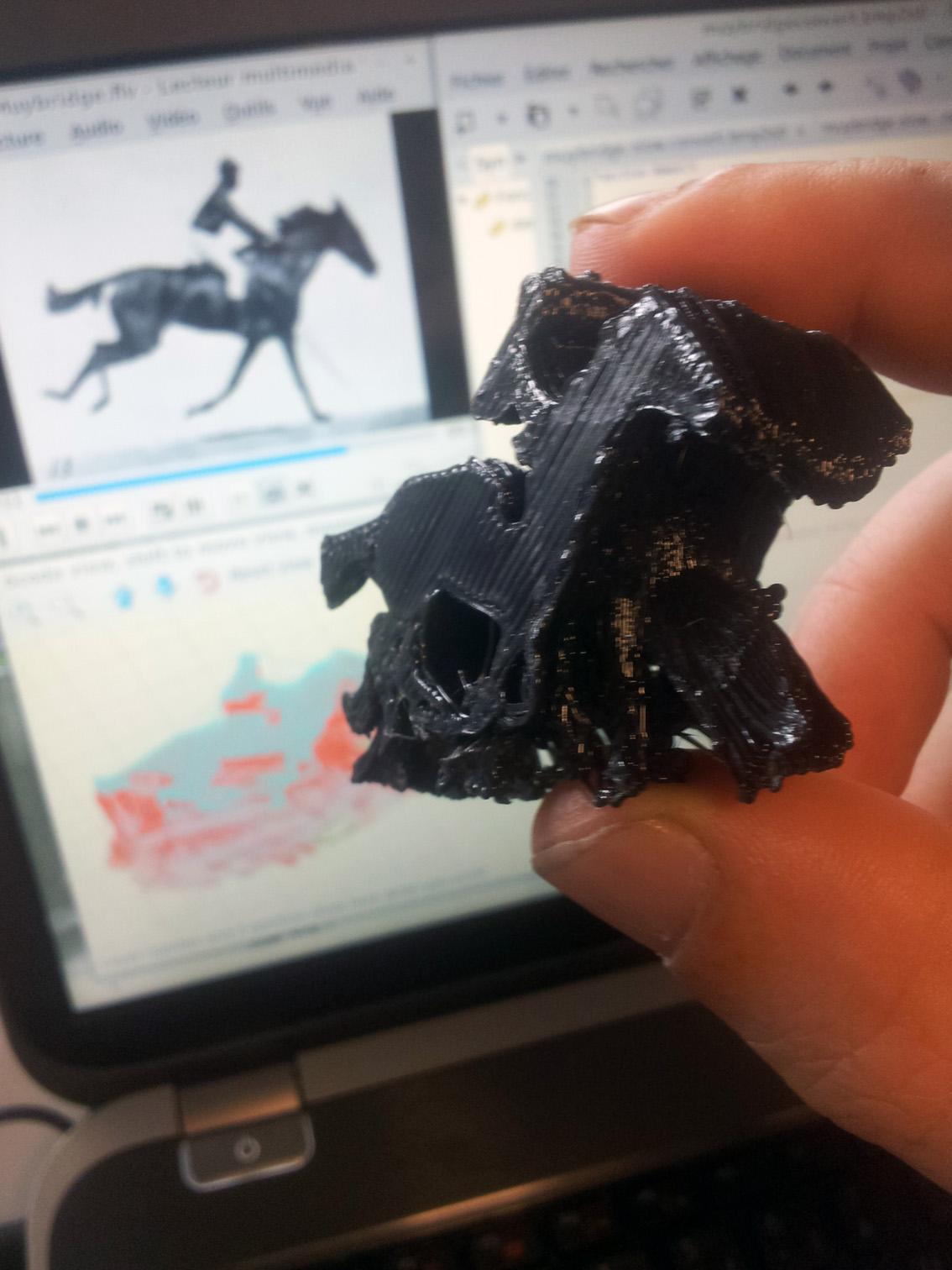

As my program is a modest part of the history of cinema, I tried it with the Muybridge horse, which is one of the first movies:

With this test, I validated that it is possible to get a physical object from a movie, with time mapped to the Z axis.

The result is quite good, but some details can be improved:

Because each frame is sliced separately (and because of some particular images), Slic3r is unable to generate support material.

So it is necessary to anticipate this by using images with only small differences from one to the next (in other words, slow motion):

in this case, the previous image's layer can support the next one.

For this particular example, I used the slowmoVideo software to generate extra in-between images.
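Whether two consecutive frames are close enough for one layer to support the next could be estimated before printing. The function below is a crude per-pixel measure that is not part of the original program: `unsupported_fraction` is a hypothetical name, frames are assumed to be already binarised, and an acceptable threshold would have to be found by experiment.

```python
def unsupported_fraction(prev_frame, next_frame):
    """Fraction of filled pixels in next_frame with no filled pixel below.

    Frames are equal-sized lists of rows of 0/1 values (1 = filled).
    Returns 0.0 when next_frame has no filled pixel at all.
    """
    filled = 0
    unsupported = 0
    for prev_row, next_row in zip(prev_frame, next_frame):
        for below, above in zip(prev_row, next_row):
            if above:
                filled += 1
                if not below:
                    unsupported += 1
    return unsupported / filled if filled else 0.0
```

A high value between two frames would suggest that extra in-between images are needed at that point of the movie.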

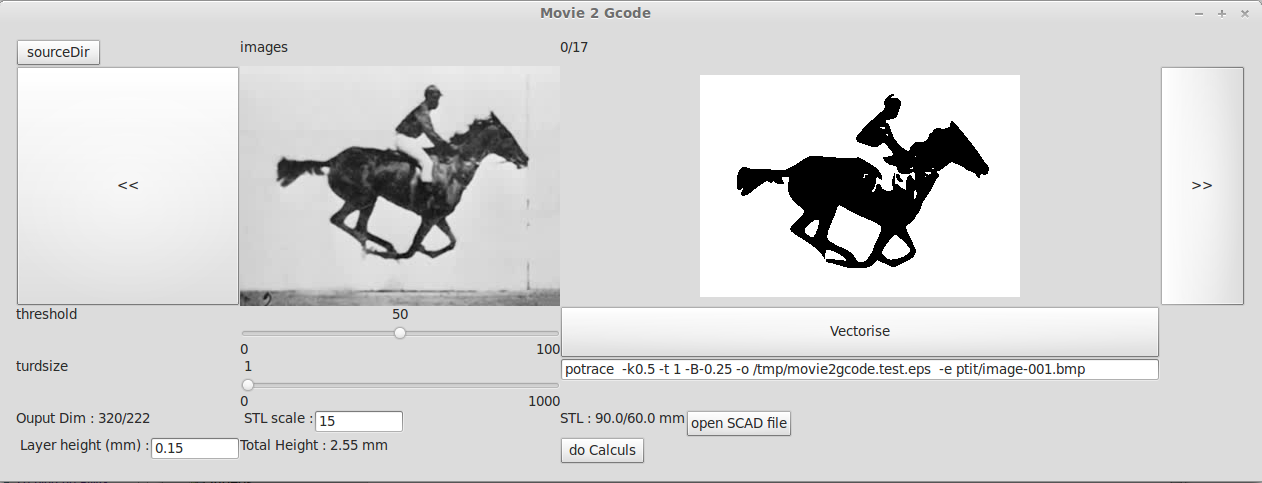

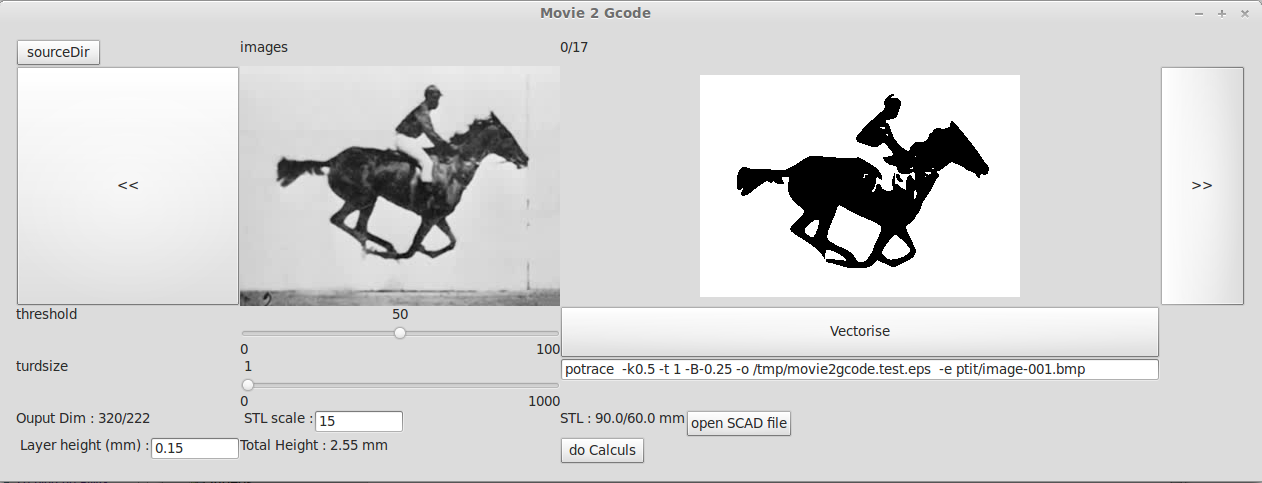

At this stage, fine tuning of the vectorisation, size and slicing isn't possible without going into the code: I need to program a graphical interface to make the program usable with different movies.

I started programming the graphical interface to be able to:

- choose the source images (in a folder)

- adjust the vectorisation

- adjust the real size of the final object

- tune the slicing options

- launch the processing of the whole movie

Optionally, it would be good to have direct communication with the RepRap, via a serial interface, to stream the G-code directly.

In fact, I have to rewrite the program entirely, to make it more coherent and extensible.

Because I already use some Python scripts in it, I decided to use this language for the main program.

The interface will use the wxPython library.

At this time, the work isn't finished, but I can say that I have learned:

- using wxPython to create a graphical interface

- calling external programs and displaying their results

- implementing multithreaded processing (for the moment only for an empty loop)
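As an illustration of the second point, calling an external program and collecting its output needs only the standard library. This is a generic sketch, not code from the unfinished program: `run_and_capture` is a hypothetical name, and how the text is then shown in the wxPython interface is left open.

```python
import subprocess
import sys


def run_and_capture(command):
    """Run an external command and return (exit_code, combined_output).

    stderr is merged into stdout so that error messages are shown too.
    """
    done = subprocess.run(command, stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, text=True)
    return done.returncode, done.stdout


if __name__ == "__main__":
    # The Python interpreter itself stands in for potrace, openscad or slic3r.
    code, output = run_and_capture([sys.executable, "-c", "print('ok')"])
    print(code, output)
```

In a graphical program this call would normally run in a worker thread, so that the window stays responsive while the external tool is working.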

I plan to finish this program and publish it soon.

[File:movie2gcode.zip]